Related Research Articles

Anisotropy is the structural property of non-uniformity in different directions, as opposed to isotropy. An anisotropic object or pattern has properties that differ according to direction of measurement. For example, many materials exhibit very different physical or mechanical properties when measured along different axes.

Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

Vector quantization (VQ) is a classical quantization technique from signal processing that allows the modeling of probability density functions by the distribution of prototype vectors. It was originally used for data compression. It works by dividing a large set of points (vectors) into groups having approximately the same number of points closest to them. Each group is represented by its centroid point, as in k-means and some other clustering algorithms. In simpler terms, vector quantization chooses a set of points to represent a larger set of points.
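
The idea of representing many points by a few prototypes can be sketched with Lloyd's algorithm, the k-means style iteration mentioned above. This is only an illustrative sketch, not a production quantizer: the function names `nearest` and `lloyd`, the sample points, and the choice of initial codebook are all assumptions made for the example.

```python
from math import dist

def nearest(codebook, p):
    """Return the index of the codebook vector closest to point p (Euclidean distance)."""
    return min(range(len(codebook)), key=lambda i: dist(codebook[i], p))

def lloyd(points, codebook, iterations=10):
    """Refine an initial codebook with Lloyd (k-means style) iterations."""
    codebook = [tuple(c) for c in codebook]
    for _ in range(iterations):
        # Assignment step: group every point with its nearest prototype.
        groups = [[] for _ in codebook]
        for p in points:
            groups[nearest(codebook, p)].append(p)
        # Update step: move each prototype to the centroid of its group.
        # A prototype whose group is empty is left where it is.
        codebook = [
            tuple(sum(coord) / len(g) for coord in zip(*g)) if g else c
            for g, c in zip(groups, codebook)
        ]
    return codebook

# Hypothetical data: two well-separated clusters of 2D points.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
codebook = lloyd(points, [points[0], points[3]])

# Quantization: each point is replaced by the index of its prototype.
indices = [nearest(codebook, p) for p in points]
```

After quantization, only `indices` and the small `codebook` need to be stored, which is where the compression comes from.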

In computer graphics, photon mapping is a two-pass global illumination rendering algorithm developed by Henrik Wann Jensen between 1995 and 2001 that approximately solves the rendering equation for integrating light radiance at a given point in space. Rays from the light source and rays from the camera are traced independently until some termination criterion is met, then they are connected in a second step to produce a radiance value. The algorithm is used to realistically simulate the interaction of light with different types of objects. Specifically, it is capable of simulating the refraction of light through a transparent substance such as glass or water, diffuse interreflection between illuminated objects, the subsurface scattering of light in translucent materials, and some of the effects caused by particulate matter such as smoke or water vapor. Photon mapping can also be extended to more accurate simulations of light, such as spectral rendering. Progressive photon mapping (PPM) starts with ray tracing and then adds more and more photon mapping passes to provide a progressively more accurate render.

In image processing and photography, a color histogram is a representation of the distribution of colors in an image. For digital images, a color histogram represents the number of pixels that have colors in each of a fixed list of color ranges that span the image's color space, the set of all possible colors.
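
As a minimal sketch of this definition, the following counts pixels per color range for 8-bit RGB data. The function name `color_histogram`, the uniform binning scheme, and the sample pixels are assumptions chosen for illustration.

```python
from collections import Counter

def color_histogram(pixels, bins_per_channel=4):
    """Count pixels per color bin.

    pixels: iterable of (r, g, b) tuples with 8-bit channels (0..255).
    Each channel is split into bins_per_channel equal ranges, so the
    RGB cube is covered by bins_per_channel ** 3 bins.
    """
    width = 256 // bins_per_channel
    return Counter(
        # Clamp to the last bin in case 256 is not a multiple of the bin count.
        tuple(min(c // width, bins_per_channel - 1) for c in px)
        for px in pixels
    )

# Hypothetical four-pixel image: two reddish pixels and two bluish ones.
pixels = [(255, 0, 0), (250, 10, 5), (0, 0, 255), (0, 128, 255)]
hist = color_histogram(pixels, bins_per_channel=4)
```

Here the two reddish pixels fall into the same bin, so the histogram has three occupied bins for four pixels.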

Computational photography refers to digital image capture and processing techniques that use digital computation instead of optical processes. Computational photography can improve the capabilities of a camera, or introduce features that were not possible at all with film-based photography, or reduce the cost or size of camera elements. Examples of computational photography include in-camera computation of digital panoramas, high-dynamic-range images, and light field cameras. Light field cameras use novel optical elements to capture three-dimensional scene information which can then be used to produce 3D images, enhanced depth-of-field, and selective de-focusing. Enhanced depth-of-field reduces the need for mechanical focusing systems. All of these features use computational imaging techniques.

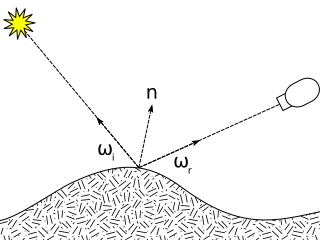

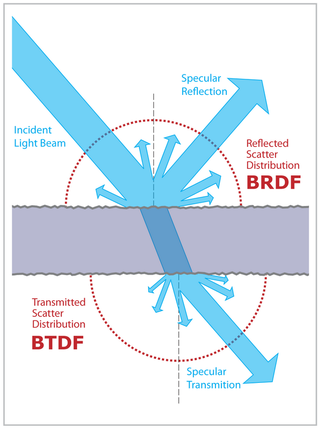

The bidirectional reflectance distribution function (BRDF), symbol $f_{\text{r}}(\omega_{\text{i}}, \omega_{\text{r}})$, is a function of four real variables that defines how light is reflected at an opaque surface. It is employed in the optics of real-world light, in computer graphics algorithms, and in computer vision algorithms. The function takes an incoming light direction, $\omega_{\text{i}}$, and outgoing direction, $\omega_{\text{r}}$, and returns the ratio of reflected radiance exiting along $\omega_{\text{r}}$ to the irradiance incident on the surface from direction $\omega_{\text{i}}$. Each direction is itself parameterized by azimuth angle $\phi$ and zenith angle $\theta$, therefore the BRDF as a whole is a function of 4 variables. The BRDF has units $\text{sr}^{-1}$, with steradians (sr) being a unit of solid angle.
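
To make the four-variable signature concrete, the sketch below writes a BRDF as a Python function of two zenith angles and two azimuth angles and integrates it, weighted by the cosine of the outgoing zenith angle, over the outgoing hemisphere. For a physically plausible BRDF this integral (the directional-hemispherical reflectance) is at most 1. The Lambertian BRDF, the albedo value, and the function names are assumptions used only for illustration.

```python
import math

def lambertian_brdf(theta_i, phi_i, theta_r, phi_r, albedo=0.8):
    """Ideal diffuse BRDF: constant in all four angles, in units of 1/sr."""
    return albedo / math.pi

def directional_hemispherical_reflectance(brdf, theta_i, phi_i, n_theta=64, n_phi=64):
    """Midpoint-rule integral of brdf * cos(theta_r) over the outgoing hemisphere.

    The solid-angle element is sin(theta_r) * d_theta * d_phi.
    """
    d_theta = (math.pi / 2) / n_theta
    d_phi = (2 * math.pi) / n_phi
    total = 0.0
    for a in range(n_theta):
        theta_r = (a + 0.5) * d_theta
        weight = math.cos(theta_r) * math.sin(theta_r) * d_theta * d_phi
        for b in range(n_phi):
            phi_r = (b + 0.5) * d_phi
            total += brdf(theta_i, phi_i, theta_r, phi_r) * weight
    return total

# For the Lambertian case the result should be close to the albedo (0.8).
rho = directional_hemispherical_reflectance(lambertian_brdf, theta_i=0.3, phi_i=0.0)
```

Any other BRDF with the same four-angle signature can be passed in place of `lambertian_brdf`.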

Path tracing is a computer graphics Monte Carlo method of rendering images of three-dimensional scenes such that the global illumination is faithful to reality. Fundamentally, the algorithm is integrating over all the illuminance arriving at a single point on the surface of an object. This illuminance is then reduced by a surface reflectance function (BRDF) to determine how much of it will go towards the viewpoint camera. This integration procedure is repeated for every pixel in the output image. When combined with physically accurate models of surfaces, accurate models of real light sources, and optically correct cameras, path tracing can produce still images that are indistinguishable from photographs.
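
The integration step can be illustrated with a one-bounce Monte Carlo estimator at a single surface point. This is not a full path tracer: there is no scene, no recursion, and no camera. The diffuse (Lambertian) surface, the uniform hemisphere sampling, the function name `estimate_outgoing_radiance`, and the constant "sky" are all assumptions made for the sketch.

```python
import math
import random

def estimate_outgoing_radiance(albedo, incoming_radiance, samples=20000, seed=0):
    """Monte Carlo estimate of the reflected radiance at one diffuse surface point.

    incoming_radiance: function of cos(theta) giving the radiance arriving
    from a direction at zenith angle theta. The azimuth is not sampled here
    because this toy light field does not depend on it.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)   # uniform density over the hemisphere, per steradian
    brdf = albedo / math.pi       # Lambertian BRDF, constant in all directions
    total = 0.0
    for _ in range(samples):
        # For directions uniform over the hemisphere, cos(theta) is uniform on [0, 1).
        cos_theta = rng.random()
        total += brdf * incoming_radiance(cos_theta) * cos_theta / pdf
    return total / samples

# Under a uniform sky of radiance 1, the exact answer equals the albedo.
estimate = estimate_outgoing_radiance(0.8, lambda cos_theta: 1.0)
```

A path tracer repeats an estimate of this kind for every pixel, with the incoming radiance itself obtained by tracing further bounces rather than from a closed-form function.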

A gonioreflectometer is a device for measuring a bidirectional reflectance distribution function (BRDF).

The definition of the bidirectional scattering distribution function (BSDF) is not well standardized. The term was probably introduced in 1980 by Bartell, Dereniak, and Wolfe. Most often it is used to name the general mathematical function which describes the way in which light is scattered by a surface. In practice, however, this phenomenon is usually split into reflected and transmitted components, which are then treated separately as the BRDF and the BTDF (bidirectional transmittance distribution function).

The Oren–Nayar reflectance model, developed by Michael Oren and Shree K. Nayar, is a reflectivity model for diffuse reflection from rough surfaces. It has been shown to accurately predict the appearance of a wide range of natural surfaces, such as concrete, plaster, and sand.
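
A sketch of the commonly cited simplified ("qualitative") form of the model follows. The summary above does not give the formula, so the constants 0.33, 0.09, and 0.45 and the parameter names are taken from that widely used approximation rather than from this text, and the function name is hypothetical. With roughness `sigma = 0` the expression reduces to the Lambertian case.

```python
import math

def oren_nayar_radiance(theta_i, phi_i, theta_r, phi_r, sigma, albedo=1.0, e0=1.0):
    """Reflected radiance under the simplified Oren-Nayar approximation.

    theta_i, phi_i: zenith and azimuth of the incoming light (radians)
    theta_r, phi_r: zenith and azimuth of the viewing direction (radians)
    sigma: surface roughness, standard deviation of facet slope angle (radians)
    e0: irradiance when the surface is lit head-on
    """
    s2 = sigma * sigma
    a = 1.0 - 0.5 * s2 / (s2 + 0.33)
    b = 0.45 * s2 / (s2 + 0.09)
    alpha = max(theta_i, theta_r)
    beta = min(theta_i, theta_r)
    azimuth_term = max(0.0, math.cos(phi_i - phi_r))
    return (albedo / math.pi) * e0 * math.cos(theta_i) * (
        a + b * azimuth_term * math.sin(alpha) * math.tan(beta)
    )

# Same geometry, smooth versus rough surface.
smooth = oren_nayar_radiance(0.5, 0.0, 0.3, 0.0, sigma=0.0, albedo=0.8)
rough = oren_nayar_radiance(0.5, 0.0, 0.3, 0.0, sigma=0.5, albedo=0.8)
```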

Object recognition is a technology in the field of computer vision for finding and identifying objects in an image or video sequence. Humans recognize a multitude of objects in images with little effort, even though the image of an object may vary with viewpoint, size, and scale, or when the object is translated or rotated. Objects can even be recognized when they are partially obstructed from view. This task is still a challenge for computer vision systems. Many approaches to the task have been implemented over multiple decades.

A structured-light 3D scanner is a 3D scanning device for measuring the three-dimensional shape of an object using projected light patterns and a camera system.

Computer graphics is a sub-field of computer science which studies methods for digitally synthesizing and manipulating visual content. Although the term often refers to the study of three-dimensional computer graphics, it also encompasses two-dimensional graphics and image processing.

Photometric stereo is a technique in computer vision for estimating the surface normals of an object by observing that object under different lighting conditions (photometry). It is based on the fact that the amount of light reflected by a surface depends on the orientation of the surface in relation to the light source and the observer. By measuring the amount of light reflected into a camera, the space of possible surface orientations is limited. Given enough light sources from different angles, the surface orientation may be constrained to a single orientation or even overconstrained.
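
For a single pixel, the constraint can be sketched as a small linear system. The sketch assumes a Lambertian surface, exactly three known distant lights, and no shadows, so that each measured intensity equals albedo times the dot product of the light direction and the normal. These assumptions, the function names, and the synthetic data are illustrative and not part of the summary above.

```python
import math

def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def solve3(m, rhs):
    """Solve the 3x3 system m @ x = rhs with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("light directions must be linearly independent")
    solution = []
    for col in range(3):
        replaced = [[rhs[r] if c == col else m[r][c] for c in range(3)]
                    for r in range(3)]
        solution.append(det3(replaced) / d)
    return solution

def photometric_stereo(lights, intensities):
    """Recover (albedo, unit normal) for one pixel from three measurements."""
    g = solve3(lights, intensities)              # g = albedo * normal
    albedo = math.sqrt(sum(x * x for x in g))
    return albedo, [x / albedo for x in g]

# Synthetic test: render one pixel under three lights, then invert.
s = 1 / math.sqrt(2)
lights = [[0.0, 0.0, 1.0], [s, 0.0, s], [0.0, s, s]]
true_normal = [0.6, 0.0, 0.8]
true_albedo = 0.5
intensities = [true_albedo * sum(l * n for l, n in zip(light, true_normal))
               for light in lights]
albedo, normal = photometric_stereo(lights, intensities)
```

With more than three lights the system becomes overconstrained, and a least-squares solve would take the place of the exact 3x3 solve used here.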

Spectralon is a fluoropolymer that has the highest diffuse reflectance of any known material or coating over the ultraviolet, visible, and near-infrared regions of the spectrum. It exhibits highly Lambertian behavior, and can be machined into a wide variety of shapes for the construction of optical components such as calibration targets, integrating spheres, and optical pump cavities for lasers.

A digital outcrop model (DOM), also called a virtual outcrop model, is a digital 3D representation of the outcrop surface, mostly in the form of a textured polygon mesh.

This is a glossary of terms relating to computer graphics.

Physically based rendering (PBR) is a computer graphics approach that seeks to render images by modeling lights and surfaces according to real-world optics. It is often referred to as "Physically Based Lighting" or "Physically Based Shading". Many PBR pipelines aim to achieve photorealism. Feasible and quick approximations of the bidirectional reflectance distribution function and rendering equation are of mathematical importance in this field. Photogrammetry may be used to help discover and encode accurate optical properties of materials. PBR principles may be implemented in real-time applications using shaders or offline applications using ray tracing or path tracing.

References

- Kristin J. Dana; Bram van Ginneken; Shree K. Nayar; Jan J. Koenderink (1999). "Reflectance and texture of real world surfaces". ACM Transactions on Graphics. 18 (1): 1–34. doi:10.1145/300776.300778. S2CID 622815.

- Kristin J. Dana; Bram van Ginneken; Shree K. Nayar; Jan J. Koenderink (1996). "Reflectance and texture of real world surfaces". Columbia University Technical Report CUCS-048-96.

- Jiří Filip; Michal Haindl (2009). "Bidirectional Texture Function Modeling: A State of the Art Survey". IEEE Transactions on Pattern Analysis and Machine Intelligence. 31 (11): 1921–1940. doi:10.1109/TPAMI.2008.246. PMID 19762922. S2CID 9283615.

- Jensen, H.W.; Marschner, S.R.; Levoy, M.; Hanrahan, P. (2001). "A practical model for subsurface light transport". ACM SIGGRAPH. pp. 511–518.

- Vlastimil Havran; Jiří Filip; Karol Myszkowski (2009). "Bidirectional Texture Function Compression based on Multi-Level Vector Quantization". Computer Graphics Forum. 29 (1): 175–190. Archived from the original on 2010-08-04.

- Michal Haindl; Jiří Filip (2013). Visual Texture: Accurate Material Appearance Measurement, Representation and Modeling. Advances in Computer Vision and Pattern Recognition. Springer-Verlag London. p. 285. ISBN 978-1-4471-4901-9.

- Oana G. Cula; Kristin J. Dana; Frank P. Murphy; Babar K. Rao (2005). "Skin Texture Modeling". International Journal of Computer Vision: 97–119.