In algorithmic information theory, the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963 and is a generalization of classical information theory.

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering.

Statistical inference is the process of using data analysis to infer properties of an underlying probability distribution. Inferential statistical analysis infers properties of a population, for example by testing hypotheses and deriving estimates. It is assumed that the observed data set is sampled from a larger population.

In philosophy, Occam's razor is the problem-solving principle that recommends searching for explanations constructed with the smallest possible set of elements. It is also known as the principle of parsimony or the law of parsimony. Attributed to William of Ockham, a 14th-century English philosopher and theologian, it is frequently cited as Entia non sunt multiplicanda praeter necessitatem, which translates as "Entities must not be multiplied beyond necessity", although Occam never used these exact words. Popularly, the principle is sometimes paraphrased as "of two competing theories, the simpler explanation of an entity is to be preferred."

Abductive reasoning is a form of logical inference that seeks the simplest and most likely conclusion from a set of observations. It was formulated and advanced by American philosopher and logician Charles Sanders Peirce beginning in the latter half of the 19th century.

Hypercomputation or super-Turing computation is a set of hypothetical models of computation that can provide outputs that are not Turing-computable. For example, a machine that could solve the halting problem would be a hypercomputer; so too would one that could correctly evaluate every statement in Peano arithmetic.

Minimum message length (MML) is a Bayesian information-theoretic method for statistical model comparison and selection. It provides a formal information theory restatement of Occam's Razor: even when models are equal in their measure of fit-accuracy to the observed data, the one generating the most concise explanation of data is more likely to be correct. MML was invented by Chris Wallace, first appearing in the seminal paper "An information measure for classification". MML is intended not just as a theoretical construct, but as a technique that may be deployed in practice. It differs from the related concept of Kolmogorov complexity in that it does not require use of a Turing-complete language to model data.

Manuel Blum is a Venezuelan born American computer scientist who received the Turing Award in 1995 "In recognition of his contributions to the foundations of computational complexity theory and its application to cryptography and program checking".

Minimum Description Length (MDL) is a model selection principle where the shortest description of the data is the best model. MDL methods learn through a data compression perspective and are sometimes described as mathematical applications of Occam's razor. The MDL principle can be extended to other forms of inductive inference and learning, for example to estimation and sequential prediction, without explicitly identifying a single model of the data.

In computer science, computational learning theory is a subfield of artificial intelligence devoted to studying the design and analysis of machine learning algorithms.

Inductive reasoning is any of various methods of reasoning in which broad generalizations or principles are derived from a body of observations. Inductive reasoning is in contrast to deductive reasoning, where the conclusion of a deductive argument is certain, given the premises are correct; in contrast, the truth of the conclusion of an inductive argument is at best probable, based upon the evidence given.

In algorithmic information theory, algorithmic probability, also known as Solomonoff probability, is a mathematical method of assigning a prior probability to a given observation. It was invented by Ray Solomonoff in the 1960s. It is used in inductive inference theory and analyses of algorithms. In his general theory of inductive inference, Solomonoff uses the method together with Bayes' rule to obtain probabilities of prediction for an algorithm's future outputs.

Solomonoff's theory of inductive inference proves that, under its common sense assumptions (axioms), the best possible scientific model is the shortest algorithm that generates the empirical data under consideration. In addition to the choice of data, other assumptions are that, to avoid the post-hoc fallacy, the programming language must be chosen prior to the data and that the environment being observed is generated by an unknown algorithm. This is also called a theory of induction. Due to its basis in the dynamical character of Algorithmic Information Theory, it encompasses statistical as well as dynamical information criteria for model selection. It was introduced by Ray Solomonoff, based on probability theory and theoretical computer science. In essence, Solomonoff's induction derives the posterior probability of any computable theory, given a sequence of observed data. This posterior probability is derived from Bayes' rule and some universal prior, that is, a prior that assigns a positive probability to any computable theory.

Christopher S. Strachey was a British computer scientist. He was one of the founders of denotational semantics, and a pioneer in programming language design and computer time-sharing. He has also been credited as possibly being the first developer of a video game and for coining terms such as polymorphism and referential transparency that are still widely used by developers today. He was a member of the Strachey family, prominent in government, arts, administration, and academia.

Algorithmic information theory (AIT) is a branch of theoretical computer science that concerns itself with the relationship between computation and information of computably generated objects, such as strings or any other data structure. In other words, it is shown within algorithmic information theory that computational incompressibility "mimics" the relations or inequalities found in information theory. According to Gregory Chaitin, it is "the result of putting Shannon's information theory and Turing's computability theory into a cocktail shaker and shaking vigorously."

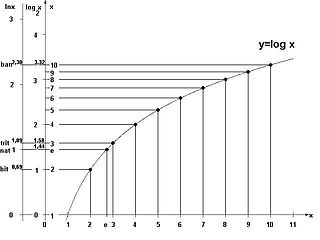

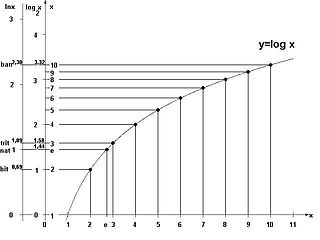

The natural unit of information, sometimes also nit or nepit, is a unit of information or information entropy, based on natural logarithms and powers of e, rather than the powers of 2 and base 2 logarithms, which define the shannon. This unit is also known by its unit symbol, the nat. One nat is the information content of an event when the probability of that event occurring is 1/e.

Inductive programming (IP) is a special area of automatic programming, covering research from artificial intelligence and programming, which addresses learning of typically declarative and often recursive programs from incomplete specifications, such as input/output examples or constraints.

Inductive probability attempts to give the probability of future events based on past events. It is the basis for inductive reasoning, and gives the mathematical basis for learning and the perception of patterns. It is a source of knowledge about the world.

Universality probability is an abstruse probability measure in computational complexity theory that concerns universal Turing machines.