Interoperability is the ability of a product or system to work with other products or systems. While the term was initially defined for information technology or systems engineering services to allow for information exchange, a broader definition takes into account social, political, and organizational factors that impact system-to-system performance.

A system of systems is a collection of task-oriented or dedicated systems that pool their resources and capabilities to create a new, more complex system which offers more functionality and performance than simply the sum of the constituent systems. Currently, systems of systems is a critical research discipline for which frames of reference, thought processes, quantitative analysis, tools, and design methods are incomplete. The methodology for defining, abstracting, modeling, and analyzing system of systems problems is typically referred to as system of systems engineering.

An agent-based model (ABM) is a computational model for simulating the actions and interactions of autonomous agents in order to understand the behavior of a system and what governs its outcomes. It combines elements of game theory, complex systems, emergence, computational sociology, multi-agent systems, and evolutionary programming. Monte Carlo methods are used to understand the stochasticity of these models. Particularly within ecology, ABMs are also called individual-based models (IBMs). A review of recent literature on individual-based models, agent-based models, and multiagent systems shows that ABMs are used in many scientific domains including biology, ecology and social science. Agent-based modeling is related to, but distinct from, the concept of multi-agent systems or multi-agent simulation in that the goal of ABM is to search for explanatory insight into the collective behavior of agents obeying simple rules, typically in natural systems, rather than in designing agents or solving specific practical or engineering problems.
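As a minimal sketch of these ideas, the following example simulates agents that obey one simple rule and uses repeated randomized runs (a Monte Carlo approach) to sample the spread of outcomes. The model is a voter-style model chosen purely for illustration; the ring topology, the function names `step`, `run`, and `monte_carlo`, and all parameter values are assumptions, not something the text prescribes.

```python
import random

def step(opinions, rng):
    """One asynchronous update: a random agent copies a random ring neighbour."""
    n = len(opinions)
    i = rng.randrange(n)
    j = (i + rng.choice((-1, 1))) % n  # left or right neighbour on the ring
    opinions[i] = opinions[j]

def run(n_agents, n_steps, seed):
    """Run one replicate and return the final share of agents holding opinion 1."""
    rng = random.Random(seed)  # a private generator keeps each run reproducible
    opinions = [rng.randint(0, 1) for _ in range(n_agents)]
    for _ in range(n_steps):
        step(opinions, rng)
    return sum(opinions) / n_agents

def monte_carlo(n_agents, n_steps, n_runs):
    """Repeat the simulation with different seeds to sample the outcome distribution."""
    return [run(n_agents, n_steps, seed) for seed in range(n_runs)]

if __name__ == "__main__":
    shares = monte_carlo(n_agents=50, n_steps=5000, n_runs=20)
    print("mean share of opinion 1:", sum(shares) / len(shares))
    print("min/max share:", min(shares), max(shares))
```

No single run is informative on its own here; the collective behavior only becomes visible in the distribution of results across replicates, which is why the runs are repeated with different seeds.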

Fluid–structure interaction (FSI) is the interaction of some movable or deformable structure with an internal or surrounding fluid flow. Fluid–structure interactions can be stable or oscillatory. In oscillatory interactions, the strain induced in the solid structure causes it to move such that the source of strain is reduced, and the structure returns to its former state only for the process to repeat.

Semantic interoperability is the ability of computer systems to exchange data with unambiguous, shared meaning. Semantic interoperability is a requirement to enable machine computable logic, inferencing, knowledge discovery, and data federation between information systems.

Statistical energy analysis (SEA) is a method for predicting the transmission of sound and vibration through complex structural acoustic systems. The method is particularly well suited for quick system-level response predictions at the early design stage of a product, and for predicting responses at higher frequencies. In SEA a system is represented in terms of a number of coupled subsystems, and a set of linear equations is derived that describes the input, storage, transmission and dissipation of energy within each subsystem. The parameters in the SEA equations are typically obtained by making certain statistical assumptions about the local dynamic properties of each subsystem (similar to assumptions made in room acoustics and statistical mechanics). These assumptions significantly simplify the analysis and make it possible to analyze the response of systems that are often too complex to analyze using other methods (such as finite element and boundary element methods).
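To make the "set of linear equations" concrete, the sketch below solves the commonly used steady-state power balance for two coupled subsystems, P1 = ω(η1·E1 + η12·E1 − η21·E2) and P2 = ω(η2·E2 + η21·E2 − η12·E1), for the subsystem energies E1 and E2. The function name `sea_two_subsystems`, the loss-factor symbols, and the numerical values are illustrative assumptions; a real model would obtain the loss factors from the statistical assumptions described above.

```python
import math

def sea_two_subsystems(freq_hz, p1, p2, eta1, eta2, eta12, eta21):
    """Solve the two-subsystem SEA power balance for the energies (E1, E2).

    p1, p2       : input powers in watts
    eta1, eta2   : damping (dissipation) loss factors
    eta12, eta21 : coupling loss factors from 1 to 2 and from 2 to 1
    """
    omega = 2.0 * math.pi * freq_hz
    # Coefficient matrix of the linear system [a] {E} = {P}
    a11 = omega * (eta1 + eta12)
    a12 = -omega * eta21
    a21 = -omega * eta12
    a22 = omega * (eta2 + eta21)
    det = a11 * a22 - a12 * a21
    # Cramer's rule is enough for a 2x2 system
    e1 = (p1 * a22 - a12 * p2) / det
    e2 = (a11 * p2 - a21 * p1) / det
    return e1, e2

if __name__ == "__main__":
    # Hypothetical values: 1 W injected into subsystem 1 at 1 kHz
    e1, e2 = sea_two_subsystems(1000.0, 1.0, 0.0, 0.01, 0.02, 0.003, 0.001)
    print("E1 [J]:", e1)
    print("E2 [J]:", e2)
```

A quick sanity check on any such solution is that the total injected power equals the total dissipated power, ω(η1·E1 + η2·E2), since the coupling terms only move energy between subsystems.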

Contact dynamics deals with the motion of multibody systems subjected to unilateral contacts and friction. Such systems are omnipresent in many multibody dynamics applications.

Business semantics management (BSM) encompasses the technology, methodology, organization, and culture that bring business stakeholders together to collaboratively reconcile their heterogeneous metadata, and subsequently to apply the derived business semantics patterns to establish semantic alignment between the underlying data structures.

Live, Virtual, & Constructive (LVC) Simulation is a broadly used taxonomy for classifying Modeling and Simulation (M&S). However, categorizing a simulation as a live, virtual, or constructive environment is problematic since there is no clear division among these categories. The degree of human participation in a simulation is infinitely variable, as is the degree of equipment realism. The categorization of simulations also lacks a category for simulated people working real equipment.

This article documents the effort of the Health Level Seven (HL7) community, and specifically the former HL7 Architecture Board (ArB), to develop an interoperability framework that would support services, messages, and Clinical Document Architecture (CDA) ISO 10871.

The JAUS Tool Set (JTS) is a software engineering tool for the design of software services used in a distributed computing environment. JTS provides a Graphical User Interface (GUI) and supporting tools for the rapid design, documentation, and implementation of service interfaces that adhere to the Society of Automotive Engineers' standard AS5684A, the JAUS Service Interface Design Language (JSIDL). JTS is designed to support the modeling, analysis, implementation, and testing of the protocol for an entire distributed system.

Model Driven Interoperability (MDI) is a methodological framework that provides conceptual and technical support for making enterprises interoperable using ontologies and semantic annotations, following model driven development (MDD) principles.

Medical device connectivity is the establishment and maintenance of a connection through which data is transferred between a medical device, such as a patient monitor, and an information system. The term is used interchangeably with biomedical device connectivity or biomedical device integration. By eliminating the need for manual data entry, potential benefits include faster and more frequent data updates, diminished human error, and improved workflow efficiency.

Unified interoperability is the property of a system that allows for the integration of real-time and non-real time communications, activities, data, and information services and the display and coordination of those services across systems and devices. Unified interoperability provides the capability to communicate and exchange processing across different applications, data, and infrastructure.

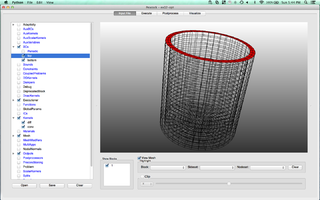

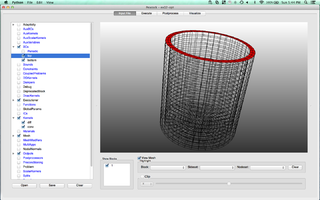

MOOSE is an object-oriented C++ finite element framework, developed at Idaho National Laboratory, for building tightly coupled multiphysics solvers. MOOSE relies on the PETSc nonlinear solver package and on libMesh for the finite element discretization.

System-level simulation (SLS) is a collection of practical methods used in the field of systems engineering, in order to simulate, with a computer, the global behavior of large cyber-physical systems.

The CAPE-OPEN Interface Standard consists of a series of specifications to expand the range of application of process simulation technologies. The CAPE-OPEN specifications define a set of software interfaces that allow plug-and-play interoperability between a given Process Modelling Environment and a third-party Process Modelling Component.

In numerical analysis, multi-time-step integration, also referred to as multiple-step or asynchronous time integration, is a numerical time-integration method that uses different time-steps or time-integrators for different parts of the problem. There are different approaches to multi-time-step integration. They are based on domain decomposition and can be classified into strong (monolithic) or weak (staggered) schemes. Using different time-steps or time-integrators in the context of a weak algorithm is rather straightforward, because the numerical solvers operate independently. However, this is not the case in a strong algorithm. In the past few years a number of research articles have addressed the development of strong multi-time-step algorithms. In either case, strong or weak, the numerical accuracy and stability need to be carefully studied. Other approaches to multi-time-step integration in the context of operator splitting methods have also been developed, e.g., the multi-rate GARK method and multi-step methods for molecular dynamics simulations.
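The weak (staggered) idea can be sketched on a toy problem with one fast and one slow variable. In the example below, the fast subsystem is subcycled with a small step while the slow variable is held frozen, and the slow subsystem then takes one large step. The test equations, the forward Euler integrator, the function name `multirate_euler`, and all coefficients are assumptions made for illustration only; this is not one of the published schemes mentioned above.

```python
def multirate_euler(x0, y0, a, b, k, big_step, substeps, n_macro):
    """Staggered forward-Euler integration of the coupled system
        dx/dt = -a*x + k*y   (fast subsystem, step big_step / substeps)
        dy/dt = -b*y + k*x   (slow subsystem, step big_step)
    Returns the pair (x, y) after n_macro large steps.
    """
    x, y = x0, y0
    small_step = big_step / substeps
    for _ in range(n_macro):
        # Fast subsystem: subcycle with the slow variable frozen at its old value.
        for _ in range(substeps):
            x += small_step * (-a * x + k * y)
        # Slow subsystem: one large step using the freshly updated fast variable.
        y += big_step * (-b * y + k * x)
    return x, y

if __name__ == "__main__":
    a, b, k = 50.0, 1.0, 1.0  # illustrative coefficients, not from the text
    # Integrate to t = 1 with 20 large steps of 0.05 and 10 fast substeps each.
    print("multi-rate:", multirate_euler(1.0, 1.0, a, b, k, 0.05, 10, 20))
    # Reference: the same scheme with a much smaller, uniform step.
    print("reference: ", multirate_euler(1.0, 1.0, a, b, k, 0.0005, 1, 2000))
```

With `substeps=1` and the same large step, the fast equation exceeds the forward Euler stability limit (`big_step * a = 2.5 > 2`) and the run diverges, which illustrates why a smaller step on the fast part matters. The frozen coupling term also introduces a splitting error, so the multi-rate result only approximates the reference; this is the kind of accuracy question the paragraph above says must be studied.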