In information theory, the Hamming distance between two strings or vectors of equal length is the number of positions at which the corresponding symbols are different. In other words, it measures the minimum number of substitutions required to change one string into the other, or equivalently, the minimum number of errors that could have transformed one string into the other. In a more general context, the Hamming distance is one of several string metrics for measuring the edit distance between two sequences. It is named after the American mathematician Richard Hamming.

In coding theory, block codes are a large and important family of error-correcting codes that encode data in blocks. There is a vast number of examples for block codes, many of which have a wide range of practical applications. The abstract definition of block codes is conceptually useful because it allows coding theorists, mathematicians, and computer scientists to study the limitations of all block codes in a unified way. Such limitations often take the form of bounds that relate different parameters of the block code to each other, such as its rate and its ability to detect and correct errors.

In coding theory, the Kraft–McMillan inequality gives a necessary and sufficient condition for the existence of a prefix code or a uniquely decodable code for a given set of codeword lengths. Its applications to prefix codes and trees often find use in computer science and information theory. The prefix code can contain either finitely many or infinitely many codewords.

In coding theory, a linear code is an error-correcting code for which any linear combination of codewords is also a codeword. Linear codes are traditionally partitioned into block codes and convolutional codes, although turbo codes can be seen as a hybrid of these two types. Linear codes allow for more efficient encoding and decoding algorithms than other codes.

In coding theory, the Singleton bound, named after Richard Collom Singleton, is a relatively crude upper bound on the size of an arbitrary block code with block length , size and minimum distance . It is also known as the Joshibound. proved by Joshi (1958) and even earlier by Komamiya (1953).

In mathematics and computer science, in the field of coding theory, the Hamming bound is a limit on the parameters of an arbitrary block code: it is also known as the sphere-packing bound or the volume bound from an interpretation in terms of packing balls in the Hamming metric into the space of all possible words. It gives an important limitation on the efficiency with which any error-correcting code can utilize the space in which its code words are embedded. A code that attains the Hamming bound is said to be a perfect code.

In coding theory, the Gilbert–Varshamov bound is a bound on the size of a code. It is occasionally known as the Gilbert–Shannon–Varshamov bound, but the name "Gilbert–Varshamov bound" is by far the most popular. Varshamov proved this bound by using the probabilistic method for linear codes. For more about that proof, see Gilbert–Varshamov bound for linear codes.

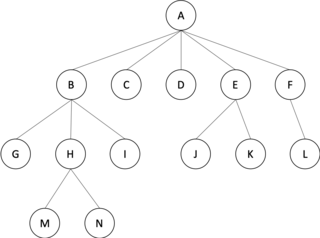

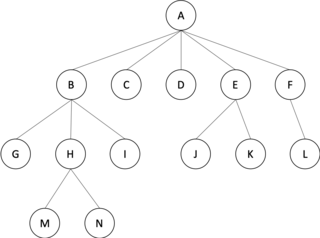

In graph theory, an m-ary tree is an arborescence in which each node has no more than m children. A binary tree is an important case where m = 2; similarly, a ternary tree is one where m = 3.

In statistics and coding theory, a Hamming space is usually the set of all binary strings of length N. It is used in the theory of coding signals and transmission.

In coding theory, a cyclic code is a block code, where the circular shifts of each codeword gives another word that belongs to the code. They are error-correcting codes that have algebraic properties that are convenient for efficient error detection and correction.

In coding theory, a constant-weight code, also called an m-of-n code, is an error detection and correction code where all codewords share the same Hamming weight. The one-hot code and the balanced code are two widely used kinds of constant-weight code.

In coding theory, list decoding is an alternative to unique decoding of error-correcting codes for large error rates. The notion was proposed by Elias in the 1950s. The main idea behind list decoding is that the decoding algorithm instead of outputting a single possible message outputs a list of possibilities one of which is correct. This allows for handling a greater number of errors than that allowed by unique decoding.

A locally testable code is a type of error-correcting code for which it can be determined if a string is a word in that code by looking at a small number of bits of the string. In some situations, it is useful to know if the data is corrupted without decoding all of it so that appropriate action can be taken in response. For example, in communication, if the receiver encounters a corrupted code, it can request the data be re-sent, which could increase the accuracy of said data. Similarly, in data storage, these codes can allow for damaged data to be recovered and rewritten properly.

DNA code construction refers to the application of coding theory to the design of nucleic acid systems for the field of DNA–based computation.

The Elias Bassalygo bound is a mathematical limit used in coding theory for error correction during data transmission or communications.

In coding theory, the Wozencraft ensemble is a set of linear codes in which most of codes satisfy the Gilbert-Varshamov bound. It is named after John Wozencraft, who proved its existence. The ensemble is described by Massey (1963), who attributes it to Wozencraft. Justesen (1972) used the Wozencraft ensemble as the inner codes in his construction of strongly explicit asymptotically good code.

The Gilbert–Varshamov bound for linear codes is related to the general Gilbert–Varshamov bound, which gives a lower bound on the maximal number of elements in an error-correcting code of a given block length and minimum Hamming weight over a field . This may be translated into a statement about the maximum rate of a code with given length and minimum distance. The Gilbert–Varshamov bound for linear codes asserts the existence of q-ary linear codes for any relative minimum distance less than the given bound that simultaneously have high rate. The existence proof uses the probabilistic method, and thus is not constructive. The Gilbert–Varshamov bound is the best known in terms of relative distance for codes over alphabets of size less than 49. For larger alphabets, algebraic geometry codes sometimes achieve an asymptotically better rate vs. distance tradeoff than is given by the Gilbert–Varshamov bound.

In coding theory, the Zyablov bound is a lower bound on the rate and relative distance that are achievable by concatenated codes.

In coding theory, folded Reed–Solomon codes are like Reed–Solomon codes, which are obtained by mapping Reed–Solomon codewords over a larger alphabet by careful bundling of codeword symbols.

Permutation codes are a family of error correction codes that were introduced first by Slepian in 1965. and have been widely studied both in Combinatorics and Information theory due to their applications related to Flash memory and Power-line communication.