Related Research Articles

Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, e.g., in the form of decisions. Understanding in this context means the transformation of visual images into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

A point cloud is a discrete set of data points in space. The points may represent a 3D shape or object. Each point position has its set of Cartesian coordinates. Point clouds are generally produced by 3D scanners or by photogrammetry software, which measure many points on the external surfaces of objects around them. As the output of 3D scanning processes, point clouds are used for many purposes, including to create 3D computer-aided design (CAD) models for manufactured parts, for metrology and quality inspection, and for a multitude of visualizing, animating, rendering, and mass customization applications.
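As a minimal sketch of the data structure described above, a point cloud can be held as a plain list of Cartesian `(x, y, z)` tuples. The helper names `centroid` and `bounding_box` and the four sample points are hypothetical, chosen only for illustration; real scanner output would contain many more points and usually extra attributes.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def centroid(cloud: List[Point]) -> Point:
    """Return the mean position of all points in the cloud."""
    n = len(cloud)
    return (
        sum(p[0] for p in cloud) / n,
        sum(p[1] for p in cloud) / n,
        sum(p[2] for p in cloud) / n,
    )


def bounding_box(cloud: List[Point]) -> Tuple[Point, Point]:
    """Return the (min corner, max corner) of the axis-aligned bounding box."""
    xs, ys, zs = zip(*cloud)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


# Hypothetical sample: four points measured on an object's surface.
cloud: List[Point] = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (1.0, 2.0, 0.0),
    (0.0, 2.0, 3.0),
]

print(centroid(cloud))
print(bounding_box(cloud))
```

The axis-aligned bounding box is one of the simplest summaries of a cloud's extent; it is not a fitted surface or CAD model.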

Solid modeling is a consistent set of principles for mathematical and computer modeling of three-dimensional shapes (solids). Solid modeling is distinguished within the broader related areas of geometric modeling and computer graphics, such as 3D modeling, by its emphasis on physical fidelity. Together, the principles of geometric and solid modeling form the foundation of 3D-computer-aided design and in general support the creation, exchange, visualization, animation, interrogation, and annotation of digital models of physical objects.

In digital image processing and computer vision, image segmentation is the process of partitioning a digital image into multiple image segments, also known as image regions or image objects. The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics.
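The idea of assigning a label to every pixel can be sketched with global thresholding, which is only one of the simplest segmentation techniques and is swapped in here purely for illustration. The function name `threshold_segment`, the threshold value, and the tiny grayscale image are assumptions, not part of the text above.

```python
from typing import List


def threshold_segment(image: List[List[int]], threshold: int) -> List[List[int]]:
    """Label each pixel 1 (bright region) or 0 (dark region).

    Pixels with the same label share one characteristic: which side of
    the intensity threshold they fall on.
    """
    return [[1 if px >= threshold else 0 for px in row] for row in image]


# Hypothetical 3x4 grayscale image with a bright region on the right.
image = [
    [10, 12, 200, 210],
    [11, 13, 205, 220],
    [9, 14, 15, 215],
]

labels = threshold_segment(image, threshold=128)
for row in labels:
    print(row)
```

The label map has the same shape as the image, so the boundary between regions can be read off wherever neighboring labels differ.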

Face detection is a computer technology, used in a variety of applications, that identifies human faces in digital images. Face detection also refers to the psychological process by which humans locate and attend to faces in a visual scene.

The scale-invariant feature transform (SIFT) is a computer vision algorithm to detect, describe, and match local features in images, invented by David Lowe in 1999. Applications include object recognition, robotic mapping and navigation, image stitching, 3D modeling, gesture recognition, video tracking, individual identification of wildlife and match moving.

Scale-space theory is a framework for multi-scale signal representation developed by the computer vision, image processing and signal processing communities with complementary motivations from physics and biological vision. It is a formal theory for handling image structures at different scales, by representing an image as a one-parameter family of smoothed images, the scale-space representation, parametrized by the size of the smoothing kernel used for suppressing fine-scale structures. The parameter t in this family is referred to as the scale parameter, with the interpretation that image structures of spatial size smaller than about √t have largely been smoothed away in the scale-space level at scale t.
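A one-parameter family of smoothed signals can be sketched in one dimension as follows. This is a rough illustration under stated assumptions: the scale parameter `t` is taken to be the variance of a sampled Gaussian kernel, the kernel is truncated at about four standard deviations, and borders are handled by clamping. The names `gaussian_kernel`, `smooth`, `scale_space` and `ripple`, and the test signal, are hypothetical.

```python
import math
from typing import Dict, List


def gaussian_kernel(t: float) -> List[float]:
    """Sampled Gaussian with variance t, truncated and normalised to sum to 1."""
    radius = max(1, int(math.ceil(4.0 * math.sqrt(t))))
    weights = [math.exp(-(x * x) / (2.0 * t)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(signal: List[float], t: float) -> List[float]:
    """Return the scale-space level of `signal` at scale t (t = 0 is the input)."""
    if t <= 0:
        return list(signal)
    kernel = gaussian_kernel(t)
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp indices at the borders
            acc += w * signal[j]
        out.append(acc)
    return out


def scale_space(signal: List[float], scales: List[float]) -> Dict[float, List[float]]:
    """Build the family of smoothed signals, one per scale value."""
    return {t: smooth(signal, t) for t in scales}


def ripple(values: List[float], lo: int, hi: int) -> float:
    """Peak-to-peak variation inside values[lo:hi], a crude fine-detail measure."""
    window = values[lo:hi]
    return max(window) - min(window)


# Coarse structure: a unit step at index 64. Fine structure: a +/-0.2 ripple.
signal = [(1.0 if i >= 64 else 0.0) + (0.2 if i % 2 else -0.2) for i in range(128)]
levels = scale_space(signal, [0.0, 1.0, 4.0, 16.0])

for t, level in levels.items():
    print(t, ripple(level, 20, 44))
```

In this sketch the ripple, whose period is two samples, shrinks rapidly as `t` grows, while the unit step, a much larger structure, is still present at the coarsest level.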

In computer graphics and computer vision, image-based modeling and rendering (IBMR) methods rely on a set of two-dimensional images of a scene to generate a three-dimensional model and then render some novel views of this scene.

Activity recognition aims to recognize the actions and goals of one or more agents from a series of observations on the agents' actions and the environmental conditions. Since the 1980s, this research field has captured the attention of several computer science communities due to its strength in providing personalized support for many different applications and its connection to many different fields of study such as medicine, human-computer interaction, or sociology.

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class in digital images and videos. Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance.

In computer vision and computer graphics, 3D reconstruction is the process of capturing the shape and appearance of real objects. This process can be accomplished either by active or passive methods. If the model is allowed to change its shape in time, this is referred to as non-rigid or spatio-temporal reconstruction.

A structured-light 3D scanner is a 3D scanning device for measuring the three-dimensional shape of an object using projected light patterns and a camera system.

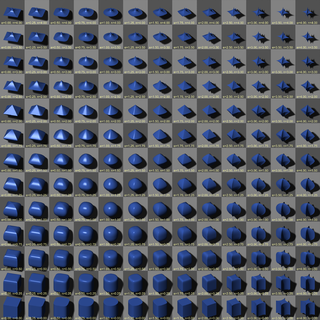

In mathematics, a superellipsoid is a solid whose horizontal sections are superellipses with the same squareness parameter ε₂, and whose vertical sections through the center are superellipses with the squareness parameter ε₁. It is a generalization of an ellipsoid, which is the special case ε₁ = ε₂ = 1.

A convolutional neural network (CNN) is a regularized type of feed-forward neural network that learns features by itself via filter (kernel) optimization. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are mitigated by using regularized weights over fewer connections. For example, each neuron in a fully connected layer would require 10,000 weights to process an image of 100 × 100 pixels. With cascaded convolution kernels, however, only 25 weights per kernel are required to process 5 × 5 tiles, because the same kernel is reused across the whole image. Higher-layer features are extracted from wider context windows than lower-layer features.
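The arithmetic behind the 10,000 versus 25 comparison can be checked with a short sketch. It assumes a single-channel image, no bias terms, and a "valid" sliding window with stride 1; the helper names and the all-ones image and kernel are hypothetical and serve only to make the counts concrete.

```python
from typing import List

Matrix = List[List[float]]


def dense_weights_per_neuron(height: int, width: int) -> int:
    """A fully connected neuron has one weight per input pixel."""
    return height * width


def conv_weights_per_kernel(kernel_h: int, kernel_w: int) -> int:
    """A convolution kernel has one weight per tile position, shared everywhere."""
    return kernel_h * kernel_w


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (cross-correlation, stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[r + a][c + b] for a in range(kh) for b in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


image = [[1.0] * 100 for _ in range(100)]   # 100 x 100 input, all ones
kernel = [[1.0] * 5 for _ in range(5)]      # one 5 x 5 kernel, all ones

feature_map = conv2d_valid(image, kernel)

print(dense_weights_per_neuron(100, 100))   # weights for one dense neuron
print(conv_weights_per_kernel(5, 5))        # weights for one shared kernel
print(len(feature_map), len(feature_map[0]))
```

The same 25 kernel weights produce every value of the feature map, which is where the saving over a fully connected neuron comes from.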

In computer vision, rigid motion segmentation is the process of separating regions, features, or trajectories from a video sequence into coherent subsets of space and time. These subsets correspond to independent rigidly moving objects in the scene. The goal of this segmentation is to differentiate and extract the meaningful rigid motion from the background and analyze it. Image segmentation techniques label pixels as belonging to a group that shares certain characteristics at a particular time. Here, by contrast, pixels are segmented according to their relative movement over a period of time, i.e., the duration of the video sequence.

In computer vision and computer graphics, 4D reconstruction is the process of capturing the shape and appearance of real objects along a temporal dimension. This process can be accomplished by methods such as depth camera imaging, photometric stereo, or structure from motion, and is also referred to as spatio-temporal reconstruction.

Egocentric vision or first-person vision is a sub-field of computer vision that entails analyzing images and videos captured by a wearable camera, which is typically worn on the head or on the chest and naturally approximates the visual field of the camera wearer. Consequently, visual data capture the part of the scene on which the user focuses to carry out the task at hand and offer a valuable perspective to understand the user's activities and their context in a naturalistic setting.

In computer vision, object co-segmentation is a special case of image segmentation, which is defined as jointly segmenting semantically similar objects in multiple images or video frames.

A dynamic texture is a texture with motion, as found in videos of sea waves, fire, smoke, swaying trees, etc. It has a spatially repetitive pattern whose visual appearance varies over time. Modeling and analyzing dynamic textures is a topic in image processing and pattern recognition within computer vision.

Video super-resolution (VSR) is the process of generating high-resolution video frames from given low-resolution video frames. Unlike single-image super-resolution (SISR), the main goal is not only to restore fine details while preserving coarse ones, but also to preserve motion consistency.
