Rendering is the process of generating a photorealistic or non-photorealistic image from input data such as 3D models. The word "rendering" originally meant the task performed by an artist when depicting a real or imaginary thing. Today, to "render" commonly means to generate an image or video from a precise description using a computer program.

The Moving Picture Experts Group (MPEG) is an alliance of working groups established jointly by ISO and IEC that sets standards for media coding, including compression coding of audio, video, graphics, and genomic data; and transmission and file formats for various applications. Together with JPEG, MPEG is organized under ISO/IEC JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information.

MPEG-4 is a group of international standards for the compression of digital audio and visual data, multimedia systems, and file storage formats. It was originally introduced in late 1998 as a group of audio and video coding formats and related technology agreed upon by the ISO/IEC Moving Picture Experts Group (MPEG) under the formal standard ISO/IEC 14496 – Coding of audio-visual objects. Uses of MPEG-4 include compression of audiovisual data for Internet video and CD distribution, voice applications, and broadcast television. The MPEG-4 standard was developed by a group led by Touradj Ebrahimi and Fernando Pereira.

Advanced Audio Coding (AAC) is an audio coding standard for lossy digital audio compression. It was designed to be the successor of the MP3 format and generally achieves higher sound quality than MP3 at the same bit rate.

X3D is a set of royalty-free ISO/IEC standards for declaratively representing 3D computer graphics. X3D includes multiple graphics file formats, programming-language API definitions, and run-time specifications for both delivery and integration of interactive network-capable 3D data. X3D version 4.0 has been approved by the Web3D Consortium and is under final review by ISO/IEC as a revised International Standard (IS).

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set, typically a 3D scalar field.
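As an illustration, maximum intensity projection (MIP) is one of the simplest volume rendering techniques: each output pixel takes the largest sample found along a ray cast through the volume. The sketch below assumes axis-aligned parallel rays and a volume stored as nested lists indexed `volume[z][y][x]`; the function name and data layout are illustrative choices, not part of any standard API.

```python
def max_intensity_projection(volume, axis=0):
    """Project a 3D scalar field onto a 2D image by keeping the maximum
    sample along each axis-aligned ray.

    volume is indexed as volume[z][y][x]; axis selects the ray direction
    (0 = z, 1 = y, 2 = x).
    """
    depth = len(volume)
    height = len(volume[0])
    width = len(volume[0][0])

    if axis == 0:  # rays travel along z, image is indexed [y][x]
        return [[max(volume[z][y][x] for z in range(depth))
                 for x in range(width)]
                for y in range(height)]
    if axis == 1:  # rays travel along y, image is indexed [z][x]
        return [[max(volume[z][y][x] for y in range(height))
                 for x in range(width)]
                for z in range(depth)]
    if axis == 2:  # rays travel along x, image is indexed [z][y]
        return [[max(volume[z][y][x] for x in range(width))
                 for y in range(height)]
                for z in range(depth)]
    raise ValueError("axis must be 0, 1 or 2")
```

Other volume rendering techniques, such as alpha compositing along the ray, replace the `max` reduction with a different accumulation rule.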

H.262 or MPEG-2 Part 2 is a video coding format standardised and jointly maintained by ITU-T Study Group 16 Video Coding Experts Group (VCEG) and ISO/IEC Moving Picture Experts Group (MPEG), and developed with the involvement of many companies. It is the second part of the ISO/IEC MPEG-2 standard. The ITU-T Recommendation H.262 and ISO/IEC 13818-2 documents are identical.

In computer vision and image processing, motion estimation is the process of determining motion vectors that describe the transformation from one 2D image to another, usually between adjacent frames in a video sequence. It is an ill-posed problem because the motion happens in three dimensions (3D) but the images are a projection of the 3D scene onto a 2D plane. The motion vectors may relate to the whole image or to specific parts, such as rectangular blocks, arbitrarily shaped patches, or even individual pixels. The motion vectors may be represented by a translational model or by many other models that can approximate the motion of a real video camera, such as rotation and translation in all three dimensions and zoom.
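For the rectangular-block, purely translational case, a common approach is exhaustive block matching. The sketch below assumes grayscale frames stored as nested lists indexed `[row][column]`, uses the sum of absolute differences (SAD) as the matching cost, and returns the displacement from the current block to its best match in the reference frame. The function names and the search-radius parameter are illustrative assumptions.

```python
def sad(ref, cur, ref_top, ref_left, cur_top, cur_left, size):
    """Sum of absolute differences between two size x size blocks."""
    return sum(
        abs(cur[cur_top + j][cur_left + i] - ref[ref_top + j][ref_left + i])
        for j in range(size)
        for i in range(size)
    )


def block_match(ref, cur, top, left, size, radius):
    """Exhaustively search the reference frame for the block that best
    matches the current block whose top-left corner is (top, left).

    Returns (dx, dy, cost): the translational motion vector pointing from
    the current block to its best match in ref, plus the SAD of that match.
    """
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            ref_top, ref_left = top + dy, left + dx
            # Skip candidate blocks that fall outside the reference frame.
            if (ref_top < 0 or ref_left < 0
                    or ref_top + size > height or ref_left + size > width):
                continue
            cost = sad(ref, cur, ref_top, ref_left, top, left, size)
            if best is None or cost < best[2]:
                best = (dx, dy, cost)
    return best
```

Practical video encoders usually replace the exhaustive search with faster search patterns and refine the result to sub-pixel accuracy; this sketch only shows the basic idea.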

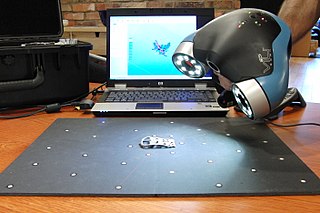

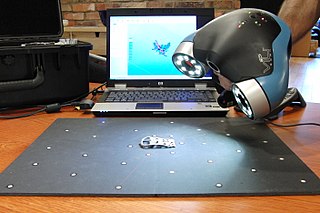

Iterative closest point (ICP) is a point cloud registration algorithm employed to minimize the difference between two clouds of points. ICP is often used to reconstruct 2D or 3D surfaces from different scans, to localize robots and achieve optimal path planning, to co-register bone models, etc.
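A minimal 2D sketch of the idea follows, under simplifying assumptions: rigid motion only (rotation plus translation), brute-force nearest-neighbour matching, and a closed-form least-squares alignment at each step. All function names are illustrative. Like ICP in general, the sketch is only expected to converge when the initial misalignment is small enough for the nearest-neighbour matches to be mostly correct.

```python
import math


def apply_rigid(points, theta, tx, ty):
    """Rotate points by theta (radians) about the origin, then translate."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def nearest(p, cloud):
    """Brute-force nearest neighbour of p in cloud."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def best_rigid_2d(src, dst):
    """Least-squares rigid transform mapping paired points src[i] -> dst[i]."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy  # centred source point
        bx, by = qx - dx, qy - dy  # centred target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(source, target, iterations=20, tol=1e-12):
    """Align source to target; returns (aligned points, mean squared error)."""
    current = list(source)
    prev_err = err = float("inf")
    for _ in range(iterations):
        matches = [nearest(p, target) for p in current]
        theta, tx, ty = best_rigid_2d(current, matches)
        current = apply_rigid(current, theta, tx, ty)
        err = sum((x - qx) ** 2 + (y - qy) ** 2
                  for (x, y), (qx, qy) in zip(current, matches)) / len(current)
        if abs(prev_err - err) < tol:  # error stopped changing
            break
        prev_err = err
    return current, err
```

Implementations meant for real scans typically use a spatial index such as a k-d tree for the nearest-neighbour step and reject outlier matches.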

Geometry processing is an area of research that uses concepts from applied mathematics, computer science and engineering to design efficient algorithms for the acquisition, reconstruction, analysis, manipulation, simulation and transmission of complex 3D models. As the name implies, many of the concepts, data structures, and algorithms are directly analogous to signal processing and image processing. For example, where image smoothing might convolve an intensity signal with a blur kernel formed using the Laplace operator, geometric smoothing might be achieved by convolving a surface geometry with a blur kernel formed using the Laplace-Beltrami operator.
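The smoothing analogy can be sketched in a reduced setting. The example below applies a uniform discrete Laplacian to a closed 2D polyline, a much simpler stand-in for the Laplace-Beltrami operator on a surface mesh: each vertex moves a fraction `lam` of the way toward the midpoint of its two neighbours. The function names and the `lam` parameter are illustrative assumptions.

```python
import math


def laplacian_smooth(points, lam=0.5, iterations=1):
    """Smooth a closed polyline with the uniform discrete Laplacian.

    For each vertex, the Laplacian is (midpoint of its neighbours) - (vertex);
    the vertex is moved by lam times that vector on every iteration.
    """
    pts = [tuple(p) for p in points]
    n = len(pts)
    for _ in range(iterations):
        smoothed = []
        for i in range(n):
            px, py = pts[i - 1]          # previous vertex (wraps around)
            x, y = pts[i]
            nx, ny = pts[(i + 1) % n]    # next vertex (wraps around)
            lap_x = (px + nx) / 2 - x
            lap_y = (py + ny) / 2 - y
            smoothed.append((x + lam * lap_x, y + lam * lap_y))
        pts = smoothed
    return pts


def perimeter(points):
    """Total edge length of a closed polyline."""
    return sum(
        math.hypot(points[i][0] - points[i - 1][0],
                   points[i][1] - points[i - 1][1])
        for i in range(len(points))
    )
```

Repeated application shrinks the curve as well as smoothing it, which is the same behaviour that motivates volume-preserving variants in mesh smoothing.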

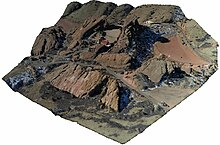

3D scanning is the process of analyzing a real-world object or environment to collect three-dimensional data on its shape and possibly its appearance. The collected data can then be used to construct digital 3D models.

The Video Coding Experts Group or Visual Coding Experts Group is a working group of the ITU Telecommunication Standardization Sector (ITU-T) concerned with standards for compression coding of video, images, audio signals, biomedical waveforms, and other signals. It is responsible for standardization of the "H.26x" line of video coding standards, the "T.8xx" line of image coding standards, and related technologies.

Image-based meshing is the automated process of creating computer models for computational fluid dynamics (CFD) and finite element analysis (FEA) from 3D image data. Although a wide range of mesh generation techniques are currently available, these were usually developed to generate models from computer-aided design (CAD), and therefore have difficulties meshing from 3D imaging data.

In 3D computer graphics, 3D modeling is the process of developing a mathematical coordinate-based representation of a surface of an object in three dimensions via specialized software by manipulating edges, vertices, and polygons in a simulated 3D space.

The Point Cloud Library (PCL) is an open-source library of algorithms for point cloud processing tasks and 3D geometry processing, such as occur in three-dimensional computer vision. The library contains algorithms for filtering, feature estimation, surface reconstruction, 3D registration, model fitting, object recognition, and segmentation. Each module is implemented as a smaller library that can be compiled separately. PCL has its own data format for storing point clouds, PCD, but also allows datasets to be loaded and saved in many other formats. It is written in C++ and released under the BSD license.
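As a rough illustration of the PCD format, the sketch below serializes a list of XYZ points into an ASCII PCD string without using PCL itself. The header layout follows the PCD v0.7 file format as the author of this sketch understands it (unorganized cloud, 32-bit float fields, default viewpoint); the function name is hypothetical, and the output should be checked against the PCL documentation before being relied on.

```python
def to_pcd_ascii(points):
    """Serialize (x, y, z) tuples as an ASCII PCD v0.7 document.

    Assumes an unorganized cloud (HEIGHT 1) with float x, y, z fields only.
    """
    n = len(points)
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",               # bytes per field
        "TYPE F F F",               # F = floating point
        "COUNT 1 1 1",              # one element per field
        f"WIDTH {n}",
        "HEIGHT 1",                 # 1 marks an unorganized cloud
        "VIEWPOINT 0 0 0 1 0 0 0",  # translation + identity quaternion
        f"POINTS {n}",
        "DATA ascii",
    ]
    body = [f"{x} {y} {z}" for x, y, z in points]
    return "\n".join(header + body) + "\n"
```

Writing the returned string to a file with a `.pcd` extension should produce a cloud that PCL-based tools can load, assuming the header assumptions above hold.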

3D reconstruction from multiple images is the creation of three-dimensional models from a set of images. It is the reverse process of obtaining 2D images from 3D scenes.

A digital outcrop model (DOM), also called a virtual outcrop model, is a digital 3D representation of an outcrop surface, mostly in the form of a textured polygon mesh.

CloudCompare is 3D point cloud processing software. It can also handle triangular meshes and calibrated images.

Volumetric capture or volumetric video is a technique that captures a three-dimensional space, such as a location or performance. This type of volumography acquires data that can be viewed on flat screens as well as using 3D displays and VR goggles. Consumer-facing formats are numerous and the required motion capture techniques lean on computer graphics, photogrammetry, and other computation-based methods. The viewer generally experiences the result in a real-time engine and has direct input in exploring the generated volume.

JPEG XS is an interoperable, visually lossless, low-latency and lightweight image and video coding system used in professional applications. Target applications of the standard include streaming high-quality content for professional video over IP in broadcast and other applications, virtual reality, drones, autonomous vehicles using cameras, and gaming. Although the acronym has no official definition, XS was chosen to highlight the extra small and extra speed characteristics of the codec.