Related Research Articles

Artificial intelligence (AI) is intelligence demonstrated by machines, as opposed to natural intelligence displayed by animals including humans. Leading AI textbooks define the field as the study of "intelligent agents": any system that perceives its environment and takes actions that maximize its chance of achieving its goals. Some popular accounts use the term "artificial intelligence" to describe machines that mimic "cognitive" functions that humans associate with the human mind, such as "learning" and "problem solving"; however, this definition is rejected by major AI researchers.

In artificial intelligence, an expert system is a computer system emulating the decision-making ability of a human expert. Expert systems are designed to solve complex problems by reasoning through bodies of knowledge, represented mainly as if–then rules rather than through conventional procedural code. The first expert systems were created in the 1970s and then proliferated in the 1980s. Expert systems were among the first truly successful forms of artificial intelligence (AI) software. An expert system is divided into two subsystems: the inference engine and the knowledge base. The knowledge base represents facts and rules. The inference engine applies the rules to the known facts to deduce new facts. Inference engines can also include explanation and debugging abilities.
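The split between knowledge base and inference engine can be illustrated with a minimal forward-chaining sketch. This is only one simple way to apply if–then rules to known facts, not the design of any particular expert system; the rule format, the `forward_chain` function, and the example facts are all hypothetical names chosen for illustration.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): if every premise is a known fact,
# the conclusion is added to the known facts.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Toy inference engine: apply rules repeatedly until nothing new is deduced."""
    known = set(facts)  # copy so the caller's set is not modified
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire the rule only if all premises hold and it adds a new fact.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base: rules plus initial facts (illustrative only).
RULES: List[Rule] = [
    (frozenset({"has_fever", "has_cough"}), "suspect_flu"),
    (frozenset({"suspect_flu"}), "recommend_rest"),
]
FACTS: Set[str] = {"has_fever", "has_cough"}

if __name__ == "__main__":
    print(sorted(forward_chain(FACTS, RULES)))
    # prints ['has_cough', 'has_fever', 'recommend_rest', 'suspect_flu']
```

Keeping the rules as plain data, separate from the loop that applies them, mirrors the two-subsystem division described above: the knowledge base can grow without changing the engine.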

The history of the graphical user interface, understood as the use of graphic icons and a pointing device to control a computer, covers a five-decade span of incremental refinements, built on some constant core principles. Several vendors have created their own windowing systems based on independent code, but with basic elements in common that define the WIMP ("window, icon, menu, pointing device") paradigm.

Lisp machines are general-purpose computers designed to efficiently run Lisp as their main software and programming language, usually via hardware support. They are an example of a high-level language computer architecture, and in a sense, they were the first commercial single-user workstations. Despite being modest in number, Lisp machines commercially pioneered many now-commonplace technologies, including effective garbage collection, laser printing, windowing systems, computer mice, high-resolution bit-mapped raster graphics, computer graphic rendering, and networking innovations such as Chaosnet. Several firms built and sold Lisp machines in the 1980s: Symbolics, Lisp Machines Incorporated, Texas Instruments, and Xerox. The operating systems were written in Lisp Machine Lisp, Interlisp (Xerox), and later partly in Common Lisp.

Mesa is a programming language developed in the late 1970s at the Xerox Palo Alto Research Center in Palo Alto, California, United States. The language name was a pun based upon the programming language catchphrases of the time, because Mesa is a "high level" programming language.

The Xerox Alto is the first computer designed from its inception to support an operating system based on a graphical user interface (GUI), later using the desktop metaphor. The first machines were introduced on 1 March 1973, a decade before mass-market GUI machines became available.

Handwriting recognition (HWR), also known as Handwritten Text Recognition (HTR), is the ability of a computer to receive and interpret intelligible handwritten input from sources such as paper documents, photographs, touch-screens and other devices. The image of the written text may be sensed "off line" from a piece of paper by optical scanning or intelligent word recognition. Alternatively, the movements of the pen tip may be sensed "on line", for example by a pen-based computer screen surface, a generally easier task as there are more clues available. A handwriting recognition system handles formatting, performs correct segmentation into characters, and finds the most plausible words.

Interlisp is a programming environment built around a version of the programming language Lisp. Interlisp development began in 1966 at Bolt, Beranek and Newman in Cambridge, Massachusetts with Lisp implemented for the Digital Equipment Corporation (DEC) PDP-1 computer by Danny Bobrow and D. L. Murphy. In 1970, Alice K. Hartley implemented BBN LISP, which ran on PDP-10 machines running the operating system TENEX. In 1973, when Danny Bobrow, Warren Teitelman and Ronald Kaplan moved from BBN to the Xerox Palo Alto Research Center (PARC), it was renamed Interlisp. Interlisp became a popular Lisp development tool for artificial intelligence (AI) researchers at Stanford University and elsewhere in the community of the Defense Advanced Research Projects Agency (DARPA). Interlisp was notable for integrating interactive development tools into an integrated development environment (IDE), such as a debugger, an automatic correction tool for simple errors (via do what I mean software design), and analysis tools.

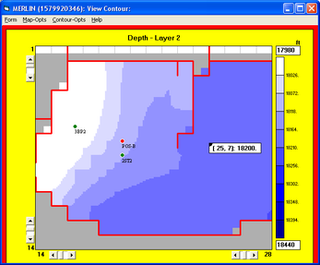

Well logging, also known as borehole logging, is the practice of making a detailed record of the geologic formations penetrated by a borehole. The log may be based either on visual inspection of samples brought to the surface or on physical measurements made by instruments lowered into the hole. Some types of geophysical well logs can be done during any phase of a well's history: drilling, completing, producing, or abandoning. Well logging is performed in boreholes drilled for oil and gas, groundwater, mineral, and geothermal exploration, as well as for environmental and geotechnical studies.

In video games, artificial intelligence (AI) is used to generate responsive, adaptive or intelligent behaviors, primarily in non-player characters (NPCs), that resemble human intelligence. Artificial intelligence has been an integral part of video games since their inception in the 1950s. AI in video games is a distinct subfield and differs from academic AI. It serves to improve the game-player experience rather than to advance machine learning or decision making. During the golden age of arcade video games, the idea of AI opponents was largely popularized in the form of graduated difficulty levels, distinct movement patterns, and in-game events dependent on the player's input. Modern games often implement existing techniques such as pathfinding and decision trees to guide the actions of NPCs. AI is often used in mechanisms which are not immediately visible to the user, such as data mining and procedural-content generation.

Legal informatics is an area within information science.

In the history of artificial intelligence, an AI winter is a period of reduced funding and interest in artificial intelligence research. The term was coined by analogy to the idea of a nuclear winter. The field has experienced several hype cycles, followed by disappointment and criticism, followed by funding cuts, followed by renewed interest years or decades later.

Reservoir simulation is an area of reservoir engineering in which computer models are used to predict the flow of fluids through porous media.

Metaphor Computer Systems (1982–1994) was a Xerox PARC spin-off that created an advanced workstation, database gateway, a unique graphical office interface, and software applications that "seamlessly integrate" data from both internal and external sources. The Metaphor machine, which pre-dated Apple's Macintosh, was one of the first commercial workstations to offer a complete hardware/software package and a GUI, including "a wireless mouse and a wireless five-function key pad." Although the company achieved some commercial success, it never achieved the fame of either the Apple Macintosh or Microsoft Windows.

Frames are an artificial intelligence data structure used to divide knowledge into substructures by representing "stereotyped situations". They were proposed by Marvin Minsky in his 1974 article "A Framework for Representing Knowledge". Frames are the primary data structure used in artificial intelligence frame languages; they are stored as ontologies of sets.

Artificial intelligence, the intelligence exhibited by machines, has been used to develop thousands of applications to solve specific problems throughout industry and academia. It is an essential part of the most lucrative products in e-commerce. AI, like electricity or the steam engine, is a general purpose technology — there is no consensus on which tasks AI will excel at, now or in the future.

The fields of marketing and artificial intelligence converge in systems which assist in areas such as market forecasting, and automation of processes and decision making, along with increased efficiency of tasks which would usually be performed by humans. The science behind these systems can be explained through neural networks and expert systems, computer programs that process input and provide valuable output for marketers.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.
