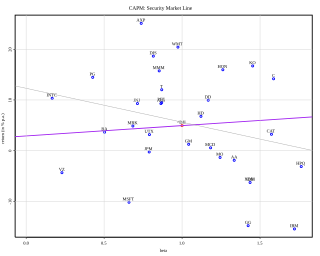

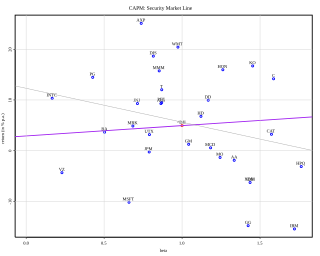

In finance, the capital asset pricing model (CAPM) is a model used to determine a theoretically appropriate required rate of return of an asset, to make decisions about adding assets to a well-diversified portfolio.
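As a minimal sketch, the standard CAPM relation sets the required return equal to the risk-free rate plus the asset's beta times the market risk premium. The function name and the numeric inputs below are illustrative assumptions, not values from the text.

```python
def capm_required_return(risk_free: float, beta: float, market_return: float) -> float:
    """Required return under CAPM: r_f + beta * (E[r_m] - r_f)."""
    market_premium = market_return - risk_free
    return risk_free + beta * market_premium


# Hypothetical inputs: 3% risk-free rate, beta of 1.2, 8% expected market return.
required = capm_required_return(risk_free=0.03, beta=1.2, market_return=0.08)
print(f"Required return: {required:.2%}")
```

An asset with a beta of 1 would simply be assigned the expected market return, and a beta of 0 the risk-free rate.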

In economics and finance, risk aversion is the tendency of people to prefer outcomes with low uncertainty to those outcomes with high uncertainty, even if the average outcome of the latter is equal to or higher in monetary value than the more certain outcome.

In economics, time preference is the current relative valuation placed on receiving a good or some cash at an earlier date compared with receiving it at a later date.

In economics and econometrics, the Cobb–Douglas production function is a particular functional form of the production function, widely used to represent the technological relationship between the amounts of two or more inputs and the amount of output that can be produced by those inputs. The Cobb–Douglas form was developed and tested against statistical evidence by Charles Cobb and Paul Douglas between 1927 and 1947; according to Douglas, the functional form itself was developed earlier by Philip Wicksteed.
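A small sketch of the two-input case, assuming the common textbook form Y = A · K^α · L^β. The parameter names and default values are assumptions chosen for illustration.

```python
def cobb_douglas(labor: float, capital: float, tfp: float = 1.0,
                 alpha: float = 0.3, beta: float = 0.7) -> float:
    """Output Y = A * K**alpha * L**beta (two-input Cobb-Douglas form)."""
    return tfp * capital**alpha * labor**beta


base_output = cobb_douglas(labor=10.0, capital=20.0)
doubled_output = cobb_douglas(labor=20.0, capital=40.0)

# With alpha + beta = 1, doubling both inputs doubles output
# (constant returns to scale).
print(doubled_output / base_output)
```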

The expected utility hypothesis is a foundational assumption in mathematical economics concerning decision making under uncertainty. It postulates that rational agents maximize utility, meaning the subjective desirability of their actions. Rational choice theory, a cornerstone of microeconomics, builds on this postulate to model aggregate social behaviour.
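To make the idea concrete, the sketch below computes the expected utility of a simple lottery and its certainty equivalent. The square-root utility function and the 50/50 lottery are assumptions chosen for illustration; any concave utility function would show the same pattern of risk aversion.

```python
import math


def expected_utility(outcomes, probabilities, utility):
    """Probability-weighted average of the utility of each outcome."""
    return sum(p * utility(x) for x, p in zip(outcomes, probabilities))


# Hypothetical lottery: 50% chance of 0, 50% chance of 100.
outcomes = [0.0, 100.0]
probabilities = [0.5, 0.5]

expected_value = sum(p * x for x, p in zip(outcomes, probabilities))
lottery_utility = expected_utility(outcomes, probabilities, math.sqrt)

# The certainty equivalent inverts the utility function (here, squaring).
certainty_equivalent = lottery_utility**2

print(expected_value, lottery_utility, certainty_equivalent)
```

Because the certainty equivalent is below the expected value, an agent with this utility function would accept a sure payment smaller than the lottery's average payoff.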

In economics, hyperbolic discounting is a time-inconsistent model of delay discounting. It is one of the cornerstones of behavioral economics and its brain-basis is actively being studied by neuroeconomics researchers.
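The time inconsistency can be illustrated with a short sketch. It assumes the common one-parameter hyperbolic form 1 / (1 + k·t) and compares it with an exponential discount function δ^t; the parameter values and reward sizes are hypothetical.

```python
def hyperbolic(delay: float, k: float = 0.5) -> float:
    """Hyperbolic discount factor 1 / (1 + k * delay)."""
    return 1.0 / (1.0 + k * delay)


def exponential(delay: float, delta: float = 0.9) -> float:
    """Exponential discount factor delta ** delay."""
    return delta**delay


def prefers_later(discount, delay, small=100.0, large=110.0) -> bool:
    """True if the larger reward one period later beats the smaller one at `delay`."""
    return large * discount(delay + 1) > small * discount(delay)


# Same pair of rewards, evaluated when they are near and when they are far away.
for name, discount in [("hyperbolic", hyperbolic), ("exponential", exponential)]:
    print(name, prefers_later(discount, 0), prefers_later(discount, 10))
```

Under these assumed parameters the hyperbolic discounter picks the smaller, sooner reward when it is immediate but the larger, later reward when both are distant, while the exponential discounter makes the same choice at every horizon.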

Utility maximization was first developed by utilitarian philosophers Jeremy Bentham and John Stuart Mill. In microeconomics, the utility maximization problem is the problem consumers face: "How should I spend my money in order to maximize my utility?" It is a type of optimal decision problem. It consists of choosing how much of each available good or service to consume, taking into account a constraint on total spending (income), the prices of the goods and their preferences.

In microeconomics, a consumer's Marshallian demand function is the quantity they demand of a particular good as a function of its price, their income, and the prices of other goods, a more technical exposition of the standard demand function. It is a solution to the utility maximization problem of how the consumer can maximize their utility for given income and prices. A synonymous term is uncompensated demand function, because when the price rises the consumer is not compensated with higher nominal income for the fall in their real income, unlike in the Hicksian demand function. Thus the change in quantity demanded is a combination of a substitution effect and a wealth effect. Although Marshallian demand is in the context of partial equilibrium theory, it is sometimes called Walrasian demand as used in general equilibrium theory.
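As a worked illustration, the sketch below assumes a two-good Cobb–Douglas utility function u(x, y) = x^α · y^(1−α), for which the Marshallian demands have the closed form x = α·m / p_x and y = (1−α)·m / p_y. The utility specification, names, and numbers are assumptions for illustration only.

```python
def marshallian_demand(income: float, price_x: float, price_y: float,
                       alpha: float = 0.4):
    """Demands that maximize x**alpha * y**(1 - alpha) subject to the budget."""
    x = alpha * income / price_x
    y = (1.0 - alpha) * income / price_y
    return x, y


def utility(x: float, y: float, alpha: float = 0.4) -> float:
    return x**alpha * y**(1.0 - alpha)


income, price_x, price_y = 100.0, 2.0, 5.0
x_star, y_star = marshallian_demand(income, price_x, price_y)

# The optimal bundle exhausts the budget.
spending = price_x * x_star + price_y * y_star
print(x_star, y_star, spending)
```

With this utility function the consumer spends a fixed share α of income on the first good, so demand for each good depends only on income and that good's own price.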

The equity premium puzzle refers to the inability of an important class of economic models to explain the average equity risk premium (ERP) provided by a diversified portfolio of equities over that of government bonds, which has been observed for more than 100 years. There is a significant disparity between the returns produced by stocks and the returns produced by government treasury bills. The equity premium puzzle addresses the difficulty in understanding and explaining this disparity. The disparity is measured by the equity risk premium, that is, the return on equities minus the return on risk-free government securities.

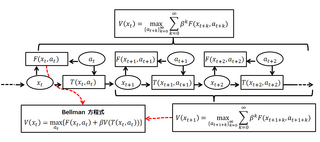

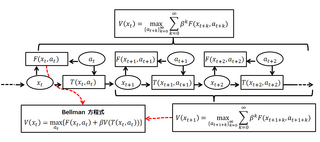

A Bellman equation, named after Richard E. Bellman, is a necessary condition for optimality associated with the mathematical optimization method known as dynamic programming. It writes the "value" of a decision problem at a certain point in time in terms of the payoff from some initial choices and the "value" of the remaining decision problem that results from those initial choices. This breaks a dynamic optimization problem into a sequence of simpler subproblems, as Bellman's "principle of optimality" prescribes. The equation applies to algebraic structures with a total ordering; for algebraic structures with a partial ordering, the generic Bellman's equation can be used.
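The recursive structure can be sketched with value iteration on a toy "cake-eating" problem, where the value of a stock of cake equals the best achievable sum of today's utility and the discounted value of what remains. The problem setup, the square-root utility, and the discount factor are assumptions chosen for illustration.

```python
import math


def value_iteration(max_cake: int = 5, beta: float = 0.9,
                    tol: float = 1e-10, max_iter: int = 1000):
    """Iterate V(s) = max over eat in 0..s of sqrt(eat) + beta * V(s - eat)."""
    values = [0.0] * (max_cake + 1)
    for _ in range(max_iter):
        new_values = [
            max(math.sqrt(eat) + beta * values[stock - eat]
                for eat in range(stock + 1))
            for stock in range(max_cake + 1)
        ]
        # Stop once successive value functions are numerically identical.
        if max(abs(a - b) for a, b in zip(new_values, values)) < tol:
            return new_values
        values = new_values
    return values


cake_values = value_iteration()
print(cake_values)
```

Each pass applies the Bellman equation once to every state, so the full multi-period problem is never solved directly; only the one-step trade-off between eating now and saving for later is evaluated.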

In economics, dynamic inconsistency or time inconsistency is a situation in which a decision-maker's preferences change over time in such a way that a preference can become inconsistent at another point in time. This can be thought of as there being many different "selves" within decision makers, with each "self" representing the decision-maker at a different point in time; the inconsistency occurs when not all preferences are aligned.

In game theory, folk theorems are a class of theorems describing an abundance of Nash equilibrium payoff profiles in repeated games. The original Folk Theorem concerned the payoffs of all the Nash equilibria of an infinitely repeated game. This result was called the Folk Theorem because it was widely known among game theorists in the 1950s, even though no one had published it. Friedman's (1971) Theorem concerns the payoffs of certain subgame-perfect Nash equilibria (SPE) of an infinitely repeated game, and so strengthens the original Folk Theorem by using a stronger equilibrium concept: subgame-perfect Nash equilibria rather than Nash equilibria.

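In economics, exponential discounting is a specific form of the discount function, used in the analysis of choice over time. Formally, exponential discounting occurs when total utility is given by

$$U\bigl(\{c_t\}_{t=t_1}^{t_2}\bigr) = \sum_{t=t_1}^{t_2} \delta^{\,t-t_1}\, u(c_t),$$

where $c_t$ is consumption at time $t$, $\delta$ is the exponential discount factor, and $u$ is the instantaneous utility function.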

In economics, discrete choice models, or qualitative choice models, describe, explain, and predict choices between two or more discrete alternatives, such as entering or not entering the labor market, or choosing between modes of transport. Such choices contrast with standard consumption models in which the quantity of each good consumed is assumed to be a continuous variable. In the continuous case, calculus methods can be used to determine the optimum amount chosen, and demand can be modeled empirically using regression analysis. On the other hand, discrete choice analysis examines situations in which the potential outcomes are discrete, such that the optimum is not characterized by standard first-order conditions. Thus, instead of examining "how much" as in problems with continuous choice variables, discrete choice analysis examines "which one". However, discrete choice analysis can also be used to examine the chosen quantity when only a few distinct quantities must be chosen from, such as the number of vehicles a household chooses to own and the number of minutes of telecommunications service a customer decides to purchase. Techniques such as logistic regression and probit regression can be used for empirical analysis of discrete choice.
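As a rough sketch of the "which one" question, the multinomial logit model assigns each alternative a choice probability proportional to the exponential of its representative utility. The utility values and transport-mode labels below are hypothetical.

```python
import math


def logit_probabilities(utilities):
    """Multinomial logit choice probabilities: exp(V_j) / sum_k exp(V_k)."""
    # Subtracting the maximum avoids overflow without changing the result.
    shift = max(utilities)
    weights = [math.exp(v - shift) for v in utilities]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical representative utilities for car, bus, and bicycle.
mode_utilities = [1.0, 0.5, -0.2]
mode_probabilities = logit_probabilities(mode_utilities)
print(mode_probabilities)
```

In empirical work the utilities would be functions of observed attributes with estimated coefficients, which is where logistic regression enters.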

In economics, Epstein–Zin preferences refers to a specification of recursive utility.

Consumption smoothing is an economic concept for the practice of optimizing a person's standard of living through an appropriate balance between savings and consumption over time. An optimal consumption rate should be relatively similar at each stage of a person's life rather than fluctuate wildly. Luxurious consumption at an old age does not compensate for an impoverished existence at other stages in one's life.

Mixed logit is a fully general statistical model for examining discrete choices. It overcomes three important limitations of the standard logit model by allowing for random taste variation across choosers, unrestricted substitution patterns across choices, and correlation in unobserved factors over time. Mixed logit can accommodate any distribution for the random coefficients, unlike probit, which is limited to the normal distribution. It is called "mixed logit" because the choice probability is a mixture of logits, with the distribution of the random coefficients serving as the mixing distribution. It has been shown that a mixed logit model can approximate any true random utility model of discrete choice to any degree of accuracy, given an appropriate specification of the variables and the coefficient distribution.

In economics, elasticity of intertemporal substitution is a measure of responsiveness of the growth rate of consumption to the real interest rate. If the real interest rate rises, current consumption may decrease due to increased return on savings; but current consumption may also increase as the household decides to consume more immediately, as it is feeling richer. The net effect on current consumption is the elasticity of intertemporal substitution.

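In economics and finance, an intertemporal budget constraint is a constraint faced by a decision maker who is making choices for both the present and the future. The term intertemporal is used to describe any relationship between past, present and future events or conditions. In its general form, the intertemporal budget constraint says that the present value of current and future cash outflows cannot exceed the present value of currently available funds and future cash inflows. Typically this is expressed as

$$\sum_{t=0}^{T} \frac{x_t}{(1+r)^t} \le \sum_{t=0}^{T} \frac{w_t}{(1+r)^t},$$

where $x_t$ is the cash outflow at time $t$, $w_t$ is the cash that becomes available at time $t$, $r$ is the interest rate, period $0$ is the present, and $T$ is the most distant relevant period.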
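A minimal numerical check of this present-value comparison is sketched below, assuming a single constant interest rate and flows indexed from the current period. The function names and cash flows are hypothetical.

```python
def present_value(flows, rate: float) -> float:
    """Discount a list of per-period flows back to period 0."""
    return sum(flow / (1.0 + rate) ** t for t, flow in enumerate(flows))


def satisfies_budget(outflows, inflows, rate: float, tol: float = 1e-9) -> bool:
    """True if the present value of outflows does not exceed that of inflows."""
    return present_value(outflows, rate) <= present_value(inflows, rate) + tol


# Hypothetical two-period plan: 100 available today, nothing later, 5% interest.
inflows = [100.0, 0.0]
affordable_plan = [50.0, 52.5]    # save 50 today, spend 52.5 next period
unaffordable_plan = [50.0, 60.0]

print(satisfies_budget(affordable_plan, inflows, 0.05))
print(satisfies_budget(unaffordable_plan, inflows, 0.05))
```

The small tolerance only guards against floating-point rounding when a plan exactly exhausts the budget.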

In statistics and econometrics, the maximum score estimator is a nonparametric estimator for discrete choice models developed by Charles Manski in 1975. Unlike the multinomial probit and multinomial logit estimators, it makes no assumptions about the distribution of the unobservable part of utility. However, its statistical properties are more complicated than those of the multinomial probit and logit estimators, which makes statistical inference difficult. To address these issues, Joel Horowitz proposed a variant, called the smoothed maximum score estimator.