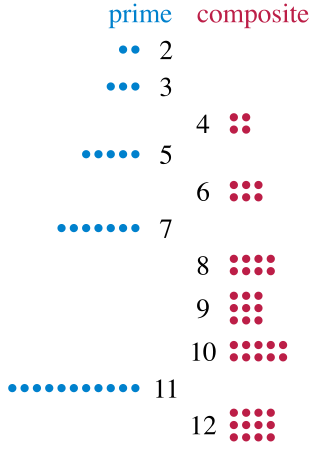

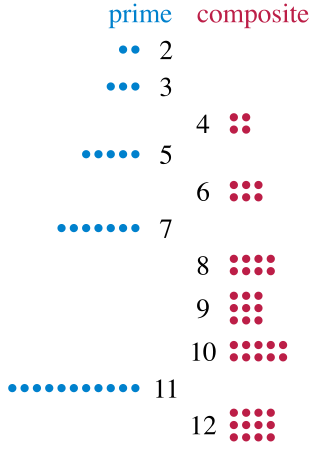

In number theory, integer factorization is the decomposition of a positive integer into a product of integers. Every positive integer greater than 1 is either the product of two or more integer factors, in which case it is called a composite number, or it is not, in which case it is called a prime number. For example, 15 is a composite number because 15 = 3 · 5, but 7 is a prime number because it cannot be decomposed in this way. If one of the factors is composite, it can in turn be written as a product of smaller factors, for example 60 = 3 · 20 = 3 · (5 · 4). Continuing this process until every factor is prime is called prime factorization; the result is always unique up to the order of the factors by the prime factorization theorem.

A prime number is a natural number greater than 1 that is not a product of two smaller natural numbers. A natural number greater than 1 that is not prime is called a composite number. For example, 5 is prime because the only ways of writing it as a product, 1 × 5 or 5 × 1, involve 5 itself. However, 4 is composite because it is a product (2 × 2) in which both numbers are smaller than 4. Primes are central in number theory because of the fundamental theorem of arithmetic: every natural number greater than 1 is either a prime itself or can be factorized as a product of primes that is unique up to their order.

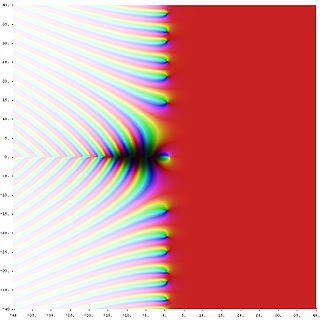

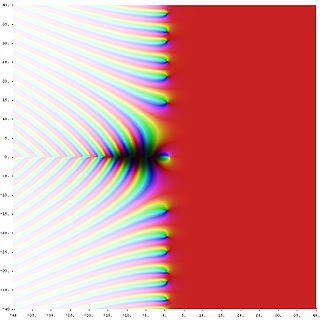

The Riemann hypothesis is one of the most important conjectures in mathematics. It is a statement about the zeros of the Riemann zeta function. Various geometrical and arithmetical objects can be described by so-called global L-functions, which are formally similar to the Riemann zeta-function. One can then ask the same question about the zeros of these L-functions, yielding various generalizations of the Riemann hypothesis. Many mathematicians believe these generalizations of the Riemann hypothesis to be true. The only cases of these conjectures which have been proven occur in the algebraic function field case.

A primality test is an algorithm for determining whether an input number is prime. Among other fields of mathematics, it is used for cryptography. Unlike integer factorization, primality tests do not generally give prime factors, only stating whether the input number is prime or not. Factorization is thought to be a computationally difficult problem, whereas primality testing is comparatively easy. Some primality tests prove that a number is prime, while others like Miller–Rabin prove that a number is composite. Therefore, the latter might more accurately be called compositeness tests instead of primality tests.

The Miller–Rabin primality test or Rabin–Miller primality test is a probabilistic primality test: an algorithm which determines whether a given number is likely to be prime, similar to the Fermat primality test and the Solovay–Strassen primality test.

In machine learning, early stopping is a form of regularization used to avoid overfitting when training a learner with an iterative method, such as gradient descent. Such methods update the learner so as to make it better fit the training data with each iteration. Up to a point, this improves the learner's performance on data outside of the training set. Past that point, however, improving the learner's fit to the training data comes at the expense of increased generalization error. Early stopping rules provide guidance as to how many iterations can be run before the learner begins to over-fit. Early stopping rules have been employed in many different machine learning methods, with varying amounts of theoretical foundation.

In mathematics, analytic number theory is a branch of number theory that uses methods from mathematical analysis to solve problems about the integers. It is often said to have begun with Peter Gustav Lejeune Dirichlet's 1837 introduction of Dirichlet L-functions to give the first proof of Dirichlet's theorem on arithmetic progressions. It is well known for its results on prime numbers and additive number theory.

Michael Oser Rabin is an Israeli mathematician, computer scientist, and recipient of the Turing Award.

In mathematics, the prime-counting function is the function counting the number of prime numbers less than or equal to some real number x. It is denoted by π(x) (unrelated to the number π).

The AKS primality test is a deterministic primality-proving algorithm created and published by Manindra Agrawal, Neeraj Kayal, and Nitin Saxena, computer scientists at the Indian Institute of Technology Kanpur, on August 6, 2002, in an article titled "PRIMES is in P". The algorithm was the first one which is able to determine in polynomial time, whether a given number is prime or composite and this without relying on mathematical conjectures such as the generalized Riemann hypothesis. The proof is also notable for not relying on the field of analysis. In 2006 the authors received both the Gödel Prize and Fulkerson Prize for their work.

In number theory, the Mertens function is defined for all positive integers n as

In mathematics and computer science, computational number theory, also known as algorithmic number theory, is the study of computational methods for investigating and solving problems in number theory and arithmetic geometry, including algorithms for primality testing and integer factorization, finding solutions to diophantine equations, and explicit methods in arithmetic geometry. Computational number theory has applications to cryptography, including RSA, elliptic curve cryptography and post-quantum cryptography, and is used to investigate conjectures and open problems in number theory, including the Riemann hypothesis, the Birch and Swinnerton-Dyer conjecture, the ABC conjecture, the modularity conjecture, the Sato-Tate conjecture, and explicit aspects of the Langlands program.

For supervised learning applications in machine learning and statistical learning theory, generalization error is a measure of how accurately an algorithm is able to predict outcome values for previously unseen data. Because learning algorithms are evaluated on finite samples, the evaluation of a learning algorithm may be sensitive to sampling error. As a result, measurements of prediction error on the current data may not provide much information about predictive ability on new data. Generalization error can be minimized by avoiding overfitting in the learning algorithm. The performance of a machine learning algorithm is visualized by plots that show values of estimates of the generalization error through the learning process, which are called learning curves.

In mathematics and computer science, a primality certificate or primality proof is a succinct, formal proof that a number is prime. Primality certificates allow the primality of a number to be rapidly checked without having to run an expensive or unreliable primality test. "Succinct" usually means that the proof should be at most polynomially larger than the number of digits in the number itself.

In theoretical computer science, circuit complexity is a branch of computational complexity theory in which Boolean functions are classified according to the size or depth of the Boolean circuits that compute them. A related notion is the circuit complexity of a recursive language that is decided by a uniform family of circuits .

In applied mathematics, topological data analysis (TDA) is an approach to the analysis of datasets using techniques from topology. Extraction of information from datasets that are high-dimensional, incomplete and noisy is generally challenging. TDA provides a general framework to analyze such data in a manner that is insensitive to the particular metric chosen and provides dimensionality reduction and robustness to noise. Beyond this, it inherits functoriality, a fundamental concept of modern mathematics, from its topological nature, which allows it to adapt to new mathematical tools.

Gary Lee Miller is a professor of Computer Science at Carnegie Mellon University, Pittsburgh, United States. In 2003 he won the ACM Paris Kanellakis Award for the Miller–Rabin primality test. He was made an ACM Fellow in 2002 and won the Knuth Prize in 2013.

In mathematics, the Riemann hypothesis is the conjecture that the Riemann zeta function has its zeros only at the negative even integers and complex numbers with real part 1/2. Many consider it to be the most important unsolved problem in pure mathematics. It is of great interest in number theory because it implies results about the distribution of prime numbers. It was proposed by Bernhard Riemann (1859), after whom it is named.

The Hurwitz quaternion order is a specific order in a quaternion algebra over a suitable number field. The order is of particular importance in Riemann surface theory, in connection with surfaces with maximal symmetry, namely the Hurwitz surfaces. The Hurwitz quaternion order was studied in 1967 by Goro Shimura, but first explicitly described by Noam Elkies in 1998. For an alternative use of the term, see Hurwitz quaternion.