Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, textures, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

Global illumination (GI), or indirect illumination, is a group of algorithms used in 3D computer graphics that are meant to add more realistic lighting to 3D scenes. Such algorithms take into account not only the light that comes directly from a light source, but also subsequent cases in which light rays from the same source are reflected by other surfaces in the scene, whether reflective or not.

In digital signal processing, spatial anti-aliasing is a technique for minimizing the distortion artifacts (aliasing) when representing a high-resolution image at a lower resolution. Anti-aliasing is used in digital photography, computer graphics, digital audio, and many other applications.

A distance transform, also known as distance map or distance field, is a derived representation of a digital image. The choice of the term depends on the point of view on the object in question: whether the initial image is transformed into another representation, or it is simply endowed with an additional map or field.

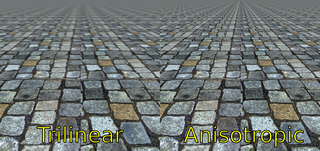

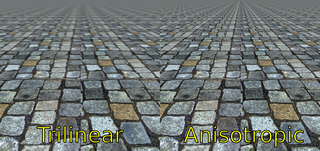

In 3D computer graphics, anisotropic filtering is a method of enhancing the image quality of textures on surfaces of computer graphics that are at oblique viewing angles with respect to the camera where the projection of the texture appears to be non-orthogonal.

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set, typically a 3D scalar field.

In computer graphics, texture filtering or texture smoothing is the method used to determine the texture color for a texture mapped pixel, using the colors of nearby texels.

Computational photography refers to digital image capture and processing techniques that use digital computation instead of optical processes. Computational photography can improve the capabilities of a camera, or introduce features that were not possible at all with film-based photography, or reduce the cost or size of camera elements. Examples of computational photography include in-camera computation of digital panoramas, high-dynamic-range images, and light field cameras. Light field cameras use novel optical elements to capture three dimensional scene information which can then be used to produce 3D images, enhanced depth-of-field, and selective de-focusing. Enhanced depth-of-field reduces the need for mechanical focusing systems. All of these features use computational imaging techniques.

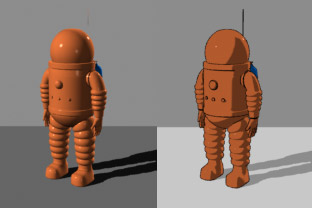

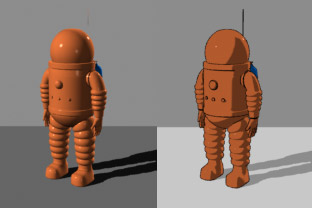

Non-photorealistic rendering (NPR) is an area of computer graphics that focuses on enabling a wide variety of expressive styles for digital art, in contrast to traditional computer graphics, which focuses on photorealism. NPR is inspired by other artistic modes such as painting, drawing, technical illustration, and animated cartoons. NPR has appeared in movies and video games in the form of cel-shaded animation as well as in scientific visualization, architectural illustration and experimental animation.

Tone mapping is a technique used in image processing and computer graphics to map one set of colors to another to approximate the appearance of high-dynamic-range (HDR) images in a medium that has a more limited dynamic range. Print-outs, CRT or LCD monitors, and projectors all have a limited dynamic range that is inadequate to reproduce the full range of light intensities present in natural scenes. Tone mapping addresses the problem of strong contrast reduction from the scene radiance to the displayable range while preserving the image details and color appearance important to appreciate the original scene content.

Supersampling or supersampling anti-aliasing (SSAA) is a spatial anti-aliasing method, i.e. a method used to remove aliasing from images rendered in computer games or other computer programs that generate imagery. Aliasing occurs because unlike real-world objects, which have continuous smooth curves and lines, a computer screen shows the viewer a large number of small squares. These pixels all have the same size, and each one has a single color. A line can only be shown as a collection of pixels, and therefore appears jagged unless it is perfectly horizontal or vertical. The aim of supersampling is to reduce this effect. Color samples are taken at several instances inside the pixel, and an average color value is calculated. This is achieved by rendering the image at a much higher resolution than the one being displayed, then shrinking it to the desired size, using the extra pixels for calculation. The result is a downsampled image with smoother transitions from one line of pixels to another along the edges of objects. The number of samples determines the quality of the output.

Texture compression is a specialized form of image compression designed for storing texture maps in 3D computer graphics rendering systems. Unlike conventional image compression algorithms, texture compression algorithms are optimized for random access.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

Fluid animation refers to computer graphics techniques for generating realistic animations of fluids such as water and smoke. Fluid animations are typically focused on emulating the qualitative visual behavior of a fluid, with less emphasis placed on rigorously correct physical results, although they often still rely on approximate solutions to the Euler equations or Navier–Stokes equations that govern real fluid physics. Fluid animation can be performed with different levels of complexity, ranging from time-consuming, high-quality animations for films, or visual effects, to simple and fast animations for real-time animations like computer games.

Seam carving is an algorithm for content-aware image resizing, developed by Shai Avidan, of Mitsubishi Electric Research Laboratories (MERL), and Ariel Shamir, of the Interdisciplinary Center and MERL. It functions by establishing a number of seams in an image and automatically removes seams to reduce image size or inserts seams to extend it. Seam carving also allows manually defining areas in which pixels may not be modified, and features the ability to remove whole objects from photographs.

A bilateral filter is a non-linear, edge-preserving, and noise-reducing smoothing filter for images. It replaces the intensity of each pixel with a weighted average of intensity values from nearby pixels. This weight can be based on a Gaussian distribution. Crucially, the weights depend not only on Euclidean distance of pixels, but also on the radiometric differences. This preserves sharp edges.

In scientific visualization, line integral convolution (LIC) is a method to visualize a vector field, such as fluid motion.

Femto-photography is a technique for recording the propagation of ultrashort pulses of light through a scene at a very high speed (up to 1013 frames per second). A femto-photograph is equivalent to an optical impulse response of a scene and has also been denoted by terms such as a light-in-flight recording or transient image. Femto-photography of macroscopic objects was first demonstrated using a holographic process in the 1970s by Nils Abramsson at the Royal Institute of Technology (Sweden). A research team at the MIT Media Lab led by Ramesh Raskar, together with contributors from the Graphics and Imaging Lab at the Universidad de Zaragoza, Spain, more recently achieved a significant increase in image quality using a streak camera synchronized to a pulsed laser and modified to obtain 2D images instead of just a single scanline.

Michael F. Cohen is an American computer scientist and researcher in computer graphics. He is currently a Senior Fellow at Meta in their Generative AI Group. He was a senior research scientist at Microsoft Research for 21 years until he joined Facebook in 2015. In 1998, he received the ACM SIGGRAPH CG Achievement Award for his work in developing radiosity methods for realistic image synthesis. He was elected a Fellow of the Association for Computing Machinery in 2007 for his "contributions to computer graphics and computer vision." In 2019, he received the ACM SIGGRAPH Steven A. Coons Award for Outstanding Creative Contributions to Computer Graphics for “his groundbreaking work in numerous areas of research—radiosity, motion simulation & editing, light field rendering, matting & compositing, and computational photography”.

Video matting is a technique for separating the video into two or more layers, usually foreground and background, and generating alpha mattes which determine blending of the layers. The technique is very popular in video editing because it allows to substitute the background, or process the layers individually.