Entropy is a scientific concept, as well as a measurable physical property, that is most commonly associated with a state of disorder, randomness, or uncertainty. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. It has found far-ranging applications in chemistry and physics, in biological systems and their relation to life, in cosmology, economics, sociology, weather science, climate change, and information systems including the transmission of information in telecommunication.

Frequentist probability or frequentism is an interpretation of probability; it defines an event's probability as the limit of its relative frequency in many trials. Probabilities can be found by a repeatable objective process. The continued use of frequentist methods in scientific inference, however, has been called into question.
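
The "limit of relative frequency" idea can be illustrated with a small simulation. This is only a sketch: the function name `relative_frequency`, the event probability `p_event`, and the fixed `seed` are assumptions made for illustration, and a finite simulation can only suggest the limiting behavior, not establish it.

```python
import random


def relative_frequency(num_trials, p_event=0.5, seed=0):
    """Return the fraction of simulated trials in which the event occurs.

    Each trial is an independent Bernoulli draw with probability `p_event`
    (an assumed, hypothetical parameter). The seed makes runs repeatable.
    """
    rng = random.Random(seed)
    hits = sum(1 for _ in range(num_trials) if rng.random() < p_event)
    return hits / num_trials


# The relative frequency tends to settle near p_event as trials increase.
for n in (10, 1_000, 100_000):
    print(n, relative_frequency(n))
```

With more trials the printed fraction typically moves closer to `p_event`, which is the quantity the frequentist interpretation identifies with the event's probability.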

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was originally established by the works of Harry Nyquist and Ralph Hartley, in the 1920s, and Claude Shannon in the 1940s. The field, in applied mathematics, is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering.

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X} \to [0, 1]$, the entropy is $H(X) = -\sum_{x \in \mathcal{X}} p(x) \log p(x)$.
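
As a rough illustration, the sketch below computes entropy for a discrete distribution, assuming the standard Shannon form with a base-2 logarithm (so the result is in bits): the negative sum of `p * log2(p)` over all outcomes. The function name `shannon_entropy` is illustrative, and outcomes with zero probability are taken to contribute nothing.

```python
import math


def shannon_entropy(probabilities):
    """Entropy in bits of a discrete distribution given as probabilities.

    Assumes the values are non-negative and sum to 1. Terms with p == 0
    are skipped, following the convention that 0 * log(0) counts as 0.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


print(shannon_entropy([0.5, 0.5]))    # fair coin
print(shannon_entropy([0.9, 0.1]))    # biased coin, less uncertain
print(shannon_entropy([0.25] * 4))    # four equally likely outcomes
```

A fair coin gives one bit, a biased coin gives less, and a certain outcome gives zero, which matches the reading of entropy as average surprise.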

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. It does not assume or postulate any natural laws, but explains the macroscopic behavior of nature from the behavior of such ensembles.

The second law of thermodynamics is a physical law based on universal experience concerning heat and energy interconversions. One simple statement of the law is that heat always moves from hotter objects to colder objects, unless energy in some form is supplied to reverse the direction of heat flow. Another definition is: "Not all heat energy can be converted into work in a cyclic process."

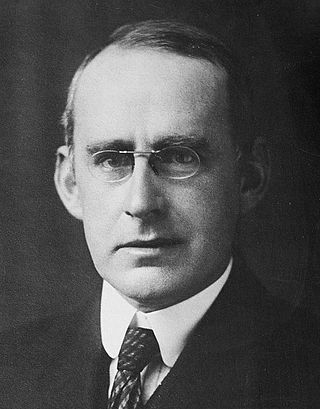

Edwin Thompson Jaynes was the Wayman Crow Distinguished Professor of Physics at Washington University in St. Louis. He wrote extensively on statistical mechanics and on foundations of probability and statistical inference, initiating in 1957 the maximum entropy interpretation of thermodynamics as being a particular application of more general Bayesian/information theory techniques. Jaynes strongly promoted the interpretation of probability theory as an extension of logic.

The arrow of time, also called time's arrow, is the concept positing the "one-way direction" or "asymmetry" of time. It was developed in 1927 by the British astrophysicist Arthur Eddington, and is an unsolved general physics question. This direction, according to Eddington, could be determined by studying the organization of atoms, molecules, and bodies, and might be drawn upon a four-dimensional relativistic map of the world.

In the history of science, Laplace's demon was a notable published articulation of causal determinism on a scientific basis by Pierre-Simon Laplace in 1814. According to determinism, if someone knows the precise location and momentum of every atom in the universe, their past and future values for any given time are entailed; they can be calculated from the laws of classical mechanics.

The laws of thermodynamics are a set of scientific laws which define a group of physical quantities, such as temperature, energy, and entropy, that characterize thermodynamic systems in thermodynamic equilibrium. The laws also use various parameters for thermodynamic processes, such as thermodynamic work and heat, and establish relationships between them. They state empirical facts that form a basis of precluding the possibility of certain phenomena, such as perpetual motion. In addition to their use in thermodynamics, they are important fundamental laws of physics in general and are applicable in other natural sciences.

The Fabric of the Cosmos: Space, Time, and the Texture of Reality (2004) is the second book on theoretical physics, cosmology, and string theory written by Brian Greene, professor and co-director of Columbia's Institute for Strings, Cosmology, and Astroparticle Physics (ISCAP).

The history of thermodynamics is a fundamental strand in the history of physics, the history of chemistry, and the history of science in general. Owing to the relevance of thermodynamics in much of science and technology, its history is finely woven with the developments of classical mechanics, quantum mechanics, magnetism, and chemical kinetics, to more distant applied fields such as meteorology, information theory, and biology (physiology), and to technological developments such as the steam engine, internal combustion engine, cryogenics and electricity generation. The development of thermodynamics both drove and was driven by atomic theory. It also, albeit in a subtle manner, motivated new directions in probability and statistics; see, for example, the timeline of thermodynamics.

In physics, maximum entropy thermodynamics views equilibrium thermodynamics and statistical mechanics as inference processes. More specifically, MaxEnt applies inference techniques rooted in Shannon information theory, Bayesian probability, and the principle of maximum entropy. These techniques are relevant to any situation requiring prediction from incomplete or insufficient data. MaxEnt thermodynamics began with two papers by Edwin T. Jaynes published in the 1957 Physical Review.
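
To make "prediction from incomplete data" concrete, here is a minimal sketch in the spirit of the well-known dice illustration: only the average of a six-sided die is known, and the least-committal distribution consistent with that average is sought. Under a mean constraint the maximum-entropy solution has the exponential form p_i ∝ exp(-λ x_i); the code finds λ by bisection. The function name, the bracketing interval for λ, and the iteration count are assumptions chosen for illustration.

```python
import math


def maxent_distribution(values, target_mean, lam_lo=-50.0, lam_hi=50.0,
                        iterations=200):
    """Maximum-entropy probabilities over `values` with a prescribed mean.

    Uses the exponential-family form p_i proportional to exp(-lam * x_i)
    and bisects on lam. The bracket [lam_lo, lam_hi] is an assumed range
    that must contain the solution.
    """
    values = list(values)

    def gibbs_weights(lam):
        exponents = [-lam * x for x in values]
        shift = max(exponents)  # subtract the max to avoid overflow
        weights = [math.exp(e - shift) for e in exponents]
        total = sum(weights)
        return [w / total for w in weights]

    def mean_for(lam):
        return sum(p * x for p, x in zip(gibbs_weights(lam), values))

    # The mean decreases as lam increases, so bisection applies.
    for _ in range(iterations):
        lam_mid = (lam_lo + lam_hi) / 2
        if mean_for(lam_mid) > target_mean:
            lam_lo = lam_mid
        else:
            lam_hi = lam_mid
    return gibbs_weights((lam_lo + lam_hi) / 2)


# A die whose observed average is 4.5 instead of the fair value 3.5.
probs = maxent_distribution(range(1, 7), target_mean=4.5)
print([round(p, 4) for p in probs])
```

When the target mean is 3.5, the same routine returns the uniform distribution, since no information beyond normalization has been supplied.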

The mathematical expressions for thermodynamic entropy in the statistical thermodynamics formulation established by Ludwig Boltzmann and J. Willard Gibbs in the 1870s are similar to those for the information entropy of Claude Shannon and Ralph Hartley, developed in the 1940s.
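
The similarity can be seen by writing the two expressions side by side. The block below uses the standard textbook forms; the symbols $p_i$ (probability of microstate or outcome $i$), $k_B$ (Boltzmann constant), and $W$ (number of equally likely microstates) are notation assumed here and do not come from the text above.

```latex
% Gibbs entropy of a system with microstate probabilities p_i
S = -k_B \sum_i p_i \ln p_i

% Shannon entropy (in bits) of a source with outcome probabilities p_i
H = -\sum_i p_i \log_2 p_i

% For the same probabilities, the two differ only by a constant factor
S = (k_B \ln 2)\, H

% Special case of W equally likely microstates (Boltzmann's form)
S = k_B \ln W
```

Read this way, the two quantities share the same functional form and differ only in the choice of logarithm base and the physical constant that sets the units.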

The concept of entropy developed in response to the observation that a certain amount of functional energy released from combustion reactions is always lost to dissipation or friction and is thus not transformed into useful work. Early heat-powered engines such as Thomas Savery's (1698), the Newcomen engine (1712) and the Cugnot steam tricycle (1769) were inefficient, converting less than two percent of the input energy into useful work output; a great deal of useful energy was dissipated or lost. Over the next two centuries, physicists investigated this puzzle of lost energy; the result was the concept of entropy.

In thermodynamics, entropy is a numerical quantity that shows that many physical processes can go in only one direction in time. For example, cream and coffee can be mixed together, but cannot be "unmixed"; a piece of wood can be burned, but cannot be "unburned". The word 'entropy' has entered popular usage to refer to a lack of order or predictability, or to a gradual decline into disorder. A more physical interpretation of thermodynamic entropy refers to the spread of energy or matter, or to the extent and diversity of microscopic motion.

Research concerning the relationship between the thermodynamic quantity entropy and both the origin and evolution of life began around the turn of the 20th century. In 1910, American historian Henry Adams printed and distributed to university libraries and history professors the small volume A Letter to American Teachers of History proposing a theory of history based on the second law of thermodynamics and on the principle of entropy.

The Human Use of Human Beings is a book by Norbert Wiener, the founding thinker of cybernetics theory and an influential advocate of automation; it was first published in 1950 and revised in 1954. The text argues for the benefits of automation to society; it analyzes the meaning of productive communication and discusses ways for humans and machines to cooperate, with the potential to amplify human power and release people from the repetitive drudgery of manual labor, in favor of more creative pursuits in knowledge work and the arts. The risk that such changes might harm society is explored, and suggestions are offered on how to avoid such risk.

Decoding Reality: The Universe as Quantum Information is a popular science book by Vlatko Vedral published by Oxford University Press in 2010. Vedral examines information theory and proposes information as the most fundamental building block of reality. He argues that this is a useful framework for viewing all natural and physical phenomena. In building out this framework, the book touches upon the origin of information, the idea of entropy, the roots of this thinking in thermodynamics, the replication of DNA, the development of social networks, quantum behaviour at the micro and macro level, and the role of indeterminism in the universe. The book finishes by considering the answer to the ultimate question: where did all of the information in the Universe come from? The ideas address concepts related to the nature of particles, time, determinism, and reality itself.

Cybernetics: Or Control and Communication in the Animal and the Machine is a book written by Norbert Wiener and published in 1948. It is the first public usage of the term "cybernetics" to refer to self-regulating mechanisms. The book laid the theoretical foundation for servomechanisms, automatic navigation, analog computing, artificial intelligence, neuroscience, and reliable communications.