In mathematics, a self-adjoint operator on a complex vector space V with inner product is a linear map A that is its own adjoint. If V is finite-dimensional with a given orthonormal basis, this is equivalent to the condition that the matrix of A is a Hermitian matrix, i.e., equal to its conjugate transpose A∗. By the finite-dimensional spectral theorem, V has an orthonormal basis such that the matrix of A relative to this basis is a diagonal matrix with entries in the real numbers. This article deals with applying generalizations of this concept to operators on Hilbert spaces of arbitrary dimension.
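As a small illustration of the finite-dimensional statement, the following Python sketch checks whether a matrix equals its conjugate transpose and, for the 2×2 case only, computes its eigenvalues in closed form. The helper names `conjugate_transpose`, `is_hermitian` and `eigenvalues_2x2_hermitian`, as well as the sample matrix, are assumptions made for this example and do not come from the text above.

```python
import math

def conjugate_transpose(a):
    """Return the conjugate transpose of a square matrix given as nested lists."""
    n = len(a)
    return [[complex(a[j][i]).conjugate() for j in range(n)] for i in range(n)]

def is_hermitian(a, tol=1e-12):
    """True if the matrix equals its conjugate transpose up to tol."""
    b = conjugate_transpose(a)
    n = len(a)
    return all(abs(a[i][j] - b[i][j]) <= tol for i in range(n) for j in range(n))

def eigenvalues_2x2_hermitian(a):
    """Eigenvalues of a 2x2 Hermitian matrix [[p, b], [conj(b), q]].

    Uses the closed form (p + q)/2 +/- sqrt(((p - q)/2)^2 + |b|^2),
    which is always real because the quantity under the root is non-negative.
    """
    p = complex(a[0][0]).real
    q = complex(a[1][1]).real
    b = complex(a[0][1])
    mean = (p + q) / 2
    radius = math.sqrt(((p - q) / 2) ** 2 + b.real ** 2 + b.imag ** 2)
    return (mean - radius, mean + radius)

# Hypothetical sample matrix, chosen only for illustration.
A = [[2, 1 - 1j], [1 + 1j, 3]]
print(is_hermitian(A))
print(eigenvalues_2x2_hermitian(A))
```

For this sample matrix the trace is 5 and the determinant is 4, so the eigenvalues are 1 and 4, both real, as the spectral theorem predicts for a Hermitian matrix.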

In mathematics, specifically functional analysis, a trace-class operator is a linear operator for which a trace may be defined, such that the trace is a finite number independent of the choice of basis used to compute the trace. This trace of trace-class operators generalizes the trace of matrices studied in linear algebra. All trace-class operators are compact operators.
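The basis independence mentioned above can be seen in the simplest finite-dimensional setting, where every operator is trace class. The sketch below sums ⟨Ae, e⟩ over two different orthonormal bases of the plane and obtains the same value. The function names, the sample matrix and the rotation angle are assumptions chosen for illustration.

```python
import math

def mat_vec(a, v):
    """Matrix-vector product for a square matrix given as nested lists."""
    return [sum(a[i][j] * v[j] for j in range(len(v))) for i in range(len(a))]

def inner(u, v):
    """Standard real inner product."""
    return sum(x * y for x, y in zip(u, v))

def trace_in_basis(a, basis):
    """Sum of <A e, e> over the vectors e of an orthonormal basis."""
    return sum(inner(mat_vec(a, e), e) for e in basis)

A = [[1.0, 2.0], [3.0, 4.0]]           # hypothetical sample operator
standard = [[1.0, 0.0], [0.0, 1.0]]    # standard orthonormal basis
theta = 0.7                            # arbitrary rotation angle
rotated = [
    [math.cos(theta), math.sin(theta)],
    [-math.sin(theta), math.cos(theta)],
]

print(trace_in_basis(A, standard))
print(trace_in_basis(A, rotated))
```

Both sums agree with the sum of the diagonal entries, 5, up to floating-point rounding. In infinite dimensions the same sum is an infinite series, and being trace class is what guarantees that it converges to a basis-independent value.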

In mathematics, specifically functional analysis, Mercer's theorem is a representation of a symmetric positive-definite function on a square as a sum of a convergent sequence of product functions. This theorem is one of the most notable results of the work of James Mercer (1883–1932). It is an important theoretical tool in the theory of integral equations; it is used in the Hilbert space theory of stochastic processes, for example the Karhunen–Loève theorem; and it is also used in the reproducing kernel Hilbert space theory where it characterizes a symmetric positive-definite kernel as a reproducing kernel.

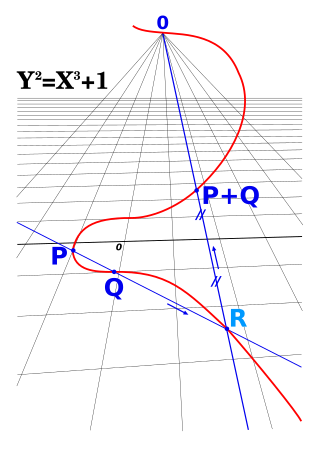

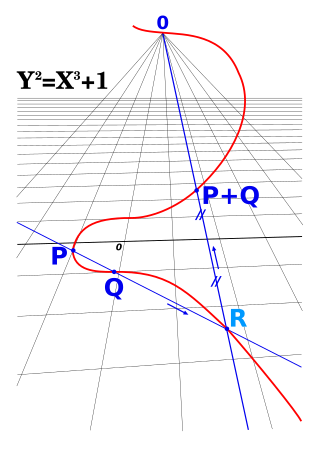

In algebraic geometry, a projective variety is an algebraic variety that is a closed subvariety of a projective space. That is, it is the zero-locus in that projective space of some finite family of homogeneous polynomials that generate a prime ideal, the defining ideal of the variety.

In mathematics, the Hessian matrix, Hessian or Hesse matrix is a square matrix of second-order partial derivatives of a scalar-valued function, or scalar field. It describes the local curvature of a function of many variables. The Hessian matrix was developed in the 19th century by the German mathematician Ludwig Otto Hesse and later named after him. Hesse originally used the term "functional determinants". The Hessian is sometimes denoted by H or, ambiguously, by ∇².
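To make the definition concrete, here is a minimal sketch that approximates the matrix of second-order partial derivatives with central finite differences. The function name `hessian`, the step size `h` and the test function are assumptions for this example. Finite differences are only one of several ways to obtain a Hessian, and the text above does not prescribe this one.

```python
def hessian(f, x, h=1e-3):
    """Approximate the Hessian of f at the point x (a list of floats).

    Entry (i, j) uses the four-point central difference
    [f(x+he_i+he_j) - f(x+he_i-he_j) - f(x-he_i+he_j) + f(x-he_i-he_j)] / (4h^2).
    For i == j this reduces to a second difference with step 2h.
    """
    n = len(x)

    def shifted(i, si, j, sj):
        # Evaluate f at x moved by si*h along axis i and sj*h along axis j.
        y = list(x)
        y[i] += si * h
        y[j] += sj * h
        return f(y)

    result = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            result[i][j] = (
                shifted(i, 1, j, 1)
                - shifted(i, 1, j, -1)
                - shifted(i, -1, j, 1)
                + shifted(i, -1, j, -1)
            ) / (4 * h * h)
    return result

# Hypothetical test function f(x, y) = x^2 * y + y^3.
def f(p):
    return p[0] ** 2 * p[1] + p[1] ** 3

H = hessian(f, [1.0, 2.0])
for row in H:
    print(row)
```

For this test function the exact Hessian is [[2y, 2x], [2x, 6y]], which at the point (1, 2) is [[4, 2], [2, 12]]. The approximation should match it closely, and the result is symmetric, as expected for a function with continuous second derivatives.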

In mathematics, the Borel–Weil–Bott theorem is a basic result in the representation theory of Lie groups, showing how a family of representations can be obtained from holomorphic sections of certain complex vector bundles, and, more generally, from higher sheaf cohomology groups associated to such bundles. It is built on the earlier Borel–Weil theorem of Armand Borel and André Weil, dealing just with the space of sections, the extension to higher cohomology groups being provided by Raoul Bott. One can equivalently, through Serre's GAGA, view this as a result in complex algebraic geometry in the Zariski topology.

In mathematics, a Cartan subalgebra, often abbreviated as CSA, is a nilpotent subalgebra of a Lie algebra that is self-normalising. Cartan subalgebras were introduced by Élie Cartan in his doctoral thesis. A Cartan subalgebra controls the representation theory of a semi-simple Lie algebra over a field of characteristic 0.

In mathematics, geometric invariant theory is a method for constructing quotients by group actions in algebraic geometry, used to construct moduli spaces. It was developed by David Mumford in 1965, using ideas from a paper in classical invariant theory.

In linear algebra, an eigenvector or characteristic vector is a vector that has its direction unchanged by a given linear transformation. More precisely, an eigenvector v of a linear transformation T is scaled by a constant factor λ when the linear transformation is applied to it: Tv = λv. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor λ.
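The defining relation can be checked directly: apply the transformation to a nonzero vector and compare the result with a scalar multiple of that vector. The sketch below does this for a small matrix. The names `mat_vec` and `is_eigenvector` and the sample matrix are assumptions for illustration.

```python
def mat_vec(t, v):
    """Apply the matrix t (nested lists) to the vector v."""
    return [sum(t[i][j] * v[j] for j in range(len(v))) for i in range(len(t))]

def is_eigenvector(t, v, lam, tol=1e-12):
    """True if v is nonzero and t applied to v equals lam times v, up to tol."""
    if all(abs(x) <= tol for x in v):
        return False  # the zero vector is excluded by convention
    tv = mat_vec(t, v)
    return all(abs(tv[i] - lam * v[i]) <= tol for i in range(len(v)))

# Hypothetical sample transformation of the plane.
T = [[2, 1], [1, 2]]

print(is_eigenvector(T, [1, 1], 3))
print(is_eigenvector(T, [1, -1], 1))
print(is_eigenvector(T, [1, 0], 2))
```

Here (1, 1) is mapped to (3, 3) and (1, −1) is mapped to itself, so both are eigenvectors, with eigenvalues 3 and 1. The vector (1, 0) is mapped to (2, 1), which is not a multiple of (1, 0), so the last check fails.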

In mathematics, Schur polynomials, named after Issai Schur, are certain symmetric polynomials in n variables, indexed by partitions, that generalize the elementary symmetric polynomials and the complete homogeneous symmetric polynomials. In representation theory they are the characters of polynomial irreducible representations of the general linear groups. The Schur polynomials form a linear basis for the space of all symmetric polynomials. Any product of Schur polynomials can be written as a linear combination of Schur polynomials with non-negative integral coefficients; the values of these coefficients are given combinatorially by the Littlewood–Richardson rule. More generally, skew Schur polynomials are associated with pairs of partitions and have similar properties to Schur polynomials.

In mathematics, the theta representation is a particular representation of the Heisenberg group of quantum mechanics. It gains its name from the fact that the Jacobi theta function is invariant under the action of a discrete subgroup of the Heisenberg group. The representation was popularized by David Mumford.

In mathematics, Macdonald polynomials Pλ(x; t, q) are a family of orthogonal symmetric polynomials in several variables, introduced by Macdonald in 1987. He later introduced a non-symmetric generalization in 1995. Macdonald originally associated his polynomials with weights λ of finite root systems and used just one variable t, but later realized that it is more natural to associate them with affine root systems rather than finite root systems, in which case the variable t can be replaced by several different variables t = (t₁, ..., tₖ), one for each of the k orbits of roots in the affine root system. The Macdonald polynomials are polynomials in n variables x = (x₁, ..., xₙ), where n is the rank of the affine root system. They generalize many other families of orthogonal polynomials, such as Jack polynomials, Hall–Littlewood polynomials and Askey–Wilson polynomials, which in turn include most of the named 1-variable orthogonal polynomials as special cases. Koornwinder polynomials are Macdonald polynomials of certain non-reduced root systems. Macdonald polynomials have deep relationships with affine Hecke algebras and Hilbert schemes, which were used to prove several conjectures made by Macdonald about them.

In operator theory, a bounded operator T: X → Y between normed vector spaces X and Y is said to be a contraction if its operator norm satisfies ‖T‖ ≤ 1. This notion is a special case of the concept of a contraction mapping, but every bounded operator becomes a contraction after suitable scaling. The analysis of contractions provides insight into the structure of operators, or a family of operators. The theory of contractions on Hilbert space is largely due to Béla Szőkefalvi-Nagy and Ciprian Foias.

In mathematics, the spectral theory of ordinary differential equations is the part of spectral theory concerned with the determination of the spectrum and eigenfunction expansion associated with a linear ordinary differential equation. In his dissertation, Hermann Weyl generalized the classical Sturm–Liouville theory on a finite closed interval to second order differential operators with singularities at the endpoints of the interval, possibly semi-infinite or infinite. Unlike the classical case, the spectrum may no longer consist of just a countable set of eigenvalues, but may also contain a continuous part. In this case the eigenfunction expansion involves an integral over the continuous part with respect to a spectral measure, given by the Titchmarsh–Kodaira formula. The theory was put in its final simplified form for singular differential equations of even degree by Kodaira and others, using von Neumann's spectral theorem. It has had important applications in quantum mechanics, operator theory and harmonic analysis on semisimple Lie groups.

In algebraic geometry, a stable curve is an algebraic curve that is asymptotically stable in the sense of geometric invariant theory.

In mathematics, Kostka polynomials, named after the mathematician Carl Kostka, are families of polynomials that generalize the Kostka numbers. They are studied primarily in algebraic combinatorics and representation theory.

In algebraic geometry, the Quot scheme is a scheme parametrizing sheaves on a projective scheme. More specifically, if X is a projective scheme over a Noetherian scheme S and if F is a coherent sheaf on X, then there is a scheme whose set of T-points is the set of isomorphism classes of the quotients of the pullback of F to X ×_S T that are flat over T. The notion was introduced by Alexander Grothendieck.

In mathematics, a linear recurrence with constant coefficients sets equal to 0 a polynomial that is linear in the various iterates of a variable—that is, in the values of the elements of a sequence. The polynomial's linearity means that each of its terms has degree 0 or 1. A linear recurrence denotes the evolution of some variable over time, with the current time period or discrete moment in time denoted as t, one period earlier denoted as t − 1, one period later as t + 1, etc.
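Solving the defining equation for the current iterate gives a rule of the form x_t = a₁x_{t−1} + ... + aₖx_{t−k} + b, which can be iterated directly. The following sketch does so. The function name `iterate_recurrence`, its parameters and the Fibonacci example are assumptions for illustration.

```python
def iterate_recurrence(coeffs, initial, steps, constant=0):
    """Iterate x_t = coeffs[0]*x_{t-1} + ... + coeffs[k-1]*x_{t-k} + constant.

    coeffs   -- the constant coefficients a_1, ..., a_k
    initial  -- the first k values of the sequence, oldest first
    steps    -- how many further terms to generate
    constant -- the degree-0 term b (0 gives a homogeneous recurrence)
    """
    k = len(coeffs)
    if len(initial) != k:
        raise ValueError("need exactly one initial value per coefficient")
    seq = list(initial)
    for _ in range(steps):
        # seq[-1 - i] is the value i + 1 periods before the new term.
        nxt = constant + sum(c * seq[-1 - i] for i, c in enumerate(coeffs))
        seq.append(nxt)
    return seq

# Fibonacci numbers: x_t = x_{t-1} + x_{t-2}, starting from 0, 1.
print(iterate_recurrence([1, 1], [0, 1], 8))

# A first-order example with a constant term: x_t = 2*x_{t-1} + 1.
print(iterate_recurrence([2], [0], 5, constant=1))
```

The first call produces the familiar sequence 0, 1, 1, 2, 3, 5, 8, 13, 21, 34. The second produces 0, 1, 3, 7, 15, 31, showing how a nonzero constant term makes the recurrence non-homogeneous.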

In mathematics, and especially differential and algebraic geometry, K-stability is an algebro-geometric stability condition, for complex manifolds and complex algebraic varieties. The notion of K-stability was first introduced by Gang Tian and reformulated more algebraically later by Simon Donaldson. The definition was inspired by a comparison to geometric invariant theory (GIT) stability. In the special case of Fano varieties, K-stability precisely characterises the existence of Kähler–Einstein metrics. More generally, on any compact complex manifold, K-stability is conjectured to be equivalent to the existence of constant scalar curvature Kähler metrics.

Tau functions are an important ingredient in the modern mathematical theory of integrable systems, and have numerous applications in a variety of other domains. They were originally introduced by Ryogo Hirota in his direct method approach to soliton equations, based on expressing them in an equivalent bilinear form.