Measurement is the quantification of attributes of an object or event so that they can be compared with those of other objects or events. In other words, measurement is the process of determining how large or small a physical quantity is compared with a basic reference quantity of the same kind. The scope and application of measurement depend on the context and discipline. In the natural sciences and engineering, measurements do not apply to nominal properties of objects or events, which is consistent with the guidelines of the International Vocabulary of Metrology published by the International Bureau of Weights and Measures. However, in other fields such as statistics and the social and behavioural sciences, measurements can have multiple levels, including nominal, ordinal, interval and ratio scales.

Observational error is the difference between a measured value of a quantity and its unknown true value. Such errors are inherent in the measurement process; for example, lengths measured with a ruler calibrated in whole centimeters will have a measurement error of several millimeters. The error or uncertainty of a measurement can be estimated and is reported together with the measured value, for example as 32.3 ± 0.5 cm.
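A result such as 32.3 ± 0.5 cm is usually written so that the value carries no more decimal places than its uncertainty. The sketch below assumes one common convention (round the uncertainty to one significant figure, then round the value to the same decimal place); the function name `format_measurement` is hypothetical, and other conventions (for example, two significant figures in the uncertainty) are also in use.

```python
import math


def format_measurement(value: float, uncertainty: float, unit: str) -> str:
    """Format 'value ± uncertainty unit', rounding the uncertainty to one significant figure."""
    if uncertainty <= 0:
        raise ValueError("uncertainty must be positive")
    # Decimal position of the uncertainty's leading digit (e.g. 0.52 -> -1).
    exponent = math.floor(math.log10(uncertainty))
    # Rounding can carry into the next decade (0.96 -> 1.0), so recompute the position.
    exponent = math.floor(math.log10(round(uncertainty, -exponent)))
    ndigits = -exponent
    decimals = max(0, ndigits)
    rounded_u = round(uncertainty, ndigits)
    rounded_v = round(value, ndigits)
    return f"{rounded_v:.{decimals}f} ± {rounded_u:.{decimals}f} {unit}"


print(format_measurement(32.314, 0.52, "cm"))  # 32.3 ± 0.5 cm
```

The second `log10` call handles the edge case where rounding bumps the uncertainty up a decade, so 12.34 ± 0.96 cm is reported as 12 ± 1 cm rather than 12.3 ± 1.0 cm.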

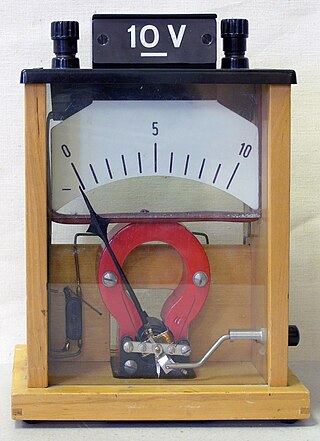

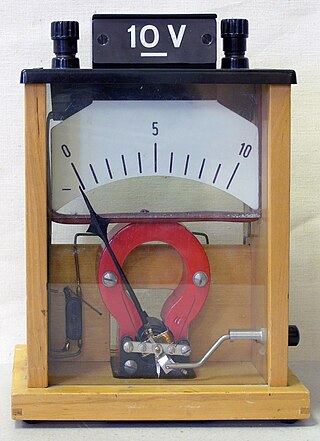

A voltmeter is an instrument used for measuring the electric potential difference between two points in an electric circuit. It is connected in parallel with the points being measured, and it usually has a high internal resistance so that it draws negligible current from the circuit.
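Why the high resistance matters can be shown with a worked example: a meter placed in parallel with part of a circuit changes that part's resistance and therefore the voltage it is trying to measure. The sketch below assumes a hypothetical two-resistor divider driven by an ideal source and models the voltmeter as a plain resistor `r_meter`; the function names are illustrative.

```python
def parallel(r_a: float, r_b: float) -> float:
    """Equivalent resistance of two resistors connected in parallel."""
    return r_a * r_b / (r_a + r_b)


def loaded_divider_reading(v_source: float, r_top: float, r_bottom: float, r_meter: float) -> float:
    """Voltage shown by a meter of resistance r_meter connected across r_bottom of a divider."""
    # The voltmeter sits in parallel with r_bottom, lowering that branch's resistance.
    r_eff = parallel(r_bottom, r_meter)
    return v_source * r_eff / (r_top + r_eff)


# Unloaded, this divider outputs 10 V * 100 kΩ / 200 kΩ = 5 V.
for r_meter in (100e3, 1e6, 10e6):
    reading = loaded_divider_reading(10.0, 100e3, 100e3, r_meter)
    print(f"{r_meter:>10.0f} ohm meter reads {reading:.3f} V")
```

A meter whose resistance equals the resistor it is across pulls the reading down to about 3.33 V, while a 10 MΩ meter reads within about 0.5 % of the true 5 V.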

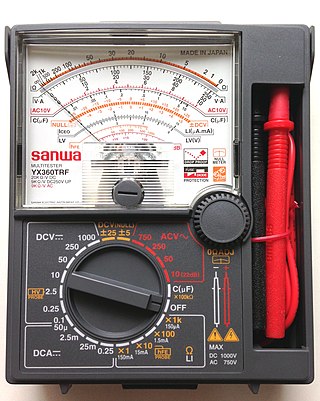

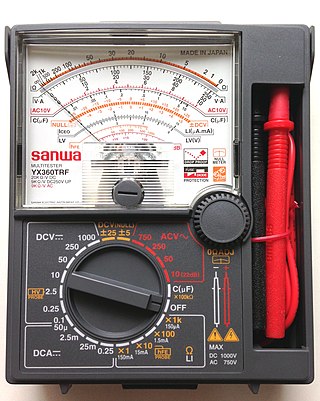

A multimeter is a measuring instrument that can measure multiple electrical properties. A typical multimeter can measure voltage, resistance, and current, in which case it can be used as a voltmeter, ohmmeter, and ammeter. Some models also measure additional properties such as temperature and capacitance.

Accuracy and precision are two measures of observational error. Accuracy is how close a set of measurements is to the true value. Precision is how close the measurements are to each other.
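The two ideas can be quantified separately when repeated readings of a known reference are available. This sketch assumes accuracy is summarized by the bias (mean reading minus true value) and precision by the sample standard deviation; these are common choices, not the only ones, and the readings below are hypothetical.

```python
import statistics


def accuracy_and_precision(measurements: list[float], true_value: float) -> tuple[float, float]:
    """Return (bias, spread) for repeated measurements of a known reference value."""
    mean = statistics.mean(measurements)
    bias = mean - true_value                 # accuracy: how far the set sits from the true value
    spread = statistics.stdev(measurements)  # precision: how much the readings scatter
    return bias, spread


# Hypothetical readings of a 10.0 mm reference: tightly grouped but offset.
bias, spread = accuracy_and_precision([12.0, 12.1, 11.9, 12.0], 10.0)
print(f"bias = {bias:.2f} mm, spread = {spread:.3f} mm")
```

These readings are precise (small spread) but inaccurate (a 2 mm bias), which is the classic signature of a systematic error such as a miscalibrated instrument.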

A micrometer, sometimes known as a micrometer screw gauge (MSG), is a device incorporating a calibrated screw. It is widely used for accurate measurement of components in mechanical engineering, machining, and most mechanical trades, along with other metrological instruments such as dial, vernier, and digital calipers. Micrometers are usually, but not always, in the form of calipers (opposing ends joined by a frame). The spindle is a very accurately machined screw, and the object to be measured is placed between the spindle and the anvil. The spindle is moved by turning the ratchet knob or thimble until the object is lightly touched by both the spindle and the anvil.
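Because the spindle is a calibrated screw, each turn of the thimble advances it by a fixed pitch, and the thimble's graduations subdivide that turn. The sketch below assumes a typical metric micrometer with a 0.5 mm pitch and 50 thimble divisions (a least count of 0.01 mm); other instruments use different values, so both are parameters of the hypothetical `micrometer_reading` function.

```python
def micrometer_reading(sleeve_mm: float, thimble_division: int,
                       pitch_mm: float = 0.5, thimble_divisions: int = 50) -> float:
    """Combine sleeve and thimble scales into a length in millimetres.

    sleeve_mm: last graduation uncovered on the sleeve, including any half-millimetre mark.
    thimble_division: thimble graduation aligned with the sleeve's reference line.
    """
    if not 0 <= thimble_division < thimble_divisions:
        raise ValueError("thimble division out of range")
    # One full turn advances the spindle by one pitch, so each thimble graduation
    # corresponds to pitch / divisions (0.01 mm with the defaults).
    least_count = pitch_mm / thimble_divisions
    return sleeve_mm + thimble_division * least_count


print(f"{micrometer_reading(7.5, 22):.2f} mm")  # 7.72 mm
```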

In measurement technology and metrology, calibration is the comparison of measurement values delivered by a device under test with those of a calibration standard of known accuracy. Such a standard could be another measurement device of known accuracy, a device generating the quantity to be measured (such as a voltage or a sound tone), or a physical artifact such as a meter ruler.
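The comparison step amounts to pairing each reading of the device under test with the standard's value at the same point. The sketch below computes those deviations and then, as an assumed follow-up that many calibration procedures use but the definition above does not require, fits a least-squares gain and offset that map readings back onto the standard. All data and function names are hypothetical.

```python
def calibration_errors(dut_readings: list[float], reference_values: list[float]) -> list[float]:
    """Deviation of each device-under-test reading from the standard's value."""
    if len(dut_readings) != len(reference_values):
        raise ValueError("readings and reference values must be paired")
    return [d - r for d, r in zip(dut_readings, reference_values)]


def fit_linear_correction(dut_readings: list[float], reference_values: list[float]) -> tuple[float, float]:
    """Least-squares gain and offset such that reference ≈ gain * reading + offset."""
    n = len(dut_readings)
    if n != len(reference_values) or n < 2:
        raise ValueError("need at least two paired points")
    mean_x = sum(dut_readings) / n
    mean_y = sum(reference_values) / n
    sxx = sum((x - mean_x) ** 2 for x in dut_readings)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dut_readings, reference_values))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset


# Hypothetical run: a voltage standard set to four points, device-under-test readings alongside.
reference = [0.0, 1.0, 2.0, 5.0]
readings = [0.01, 1.03, 2.05, 5.11]
errors = calibration_errors(readings, reference)
gain, offset = fit_linear_correction(readings, reference)
corrected = [gain * r + offset for r in readings]
print("errors:", errors)
print(f"gain = {gain:.5f}, offset = {offset:.5f}")
```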

Uncertainty or incertitude refers to situations involving imperfect or unknown information. It applies to predictions of future events, to physical measurements that are already made, or to the unknown. Uncertainty arises in partially observable or stochastic environments, as well as due to ignorance, indolence, or both. It arises in any number of fields, including insurance, philosophy, physics, statistics, economics, finance, medicine, psychology, sociology, engineering, metrology, meteorology, ecology and information science.

A hygrometer is an instrument that measures the humidity of air or some other gas, that is, how much of it is water vapor. Humidity measurement instruments usually rely on measurements of other quantities such as temperature, pressure, mass, and mechanical or electrical changes in a substance as moisture is absorbed. By calibration and calculation, these measured quantities can be used to indicate the humidity. Modern electronic devices use the temperature of condensation (the dew point), or they sense changes in electrical capacitance or resistance.
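As an example of the "calculation" step, a dew-point (condensation-temperature) hygrometer can convert its reading to relative humidity: the actual vapour pressure equals the saturation vapour pressure at the dew point. The sketch assumes a Magnus-type approximation over liquid water with one commonly quoted set of coefficients (6.112 hPa, 17.62, 243.12 °C); other parameterizations give slightly different results.

```python
import math

# Magnus-type coefficients over liquid water (one common parameterization; an assumption here).
MAGNUS_E0_HPA = 6.112
MAGNUS_A = 17.62
MAGNUS_B_C = 243.12  # degrees Celsius


def saturation_vapour_pressure_hpa(temp_c: float) -> float:
    """Approximate saturation vapour pressure over water, in hPa."""
    return MAGNUS_E0_HPA * math.exp(MAGNUS_A * temp_c / (MAGNUS_B_C + temp_c))


def relative_humidity_from_dew_point(air_temp_c: float, dew_point_c: float) -> float:
    """Relative humidity (%) from air temperature and measured condensation temperature."""
    # The air's actual vapour pressure is the saturation pressure at its dew point.
    actual = saturation_vapour_pressure_hpa(dew_point_c)
    saturated = saturation_vapour_pressure_hpa(air_temp_c)
    return 100.0 * actual / saturated


print(f"{relative_humidity_from_dew_point(25.0, 10.0):.1f} %")
```

When the dew point equals the air temperature, the function returns 100 %, as expected for saturated air.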

A torque wrench is a tool used to apply a specific torque to a fastener such as a nut, bolt, or lag screw. It usually takes the form of a socket wrench with either an indicating scale or an internal mechanism that indicates when a specified (adjustable) torque value has been reached during application.
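The torque a wrench applies is the force at the grip multiplied by its distance from the fastener's axis. The sketch below assumes the force is applied perpendicular to the handle at a fixed grip point, and models an indicating mechanism as a simple threshold check; the 0.4 m lever arm and 40 N·m setting are hypothetical.

```python
def applied_torque_nm(force_n: float, lever_arm_m: float) -> float:
    """Torque from a force applied perpendicular to the handle at distance lever_arm_m."""
    return force_n * lever_arm_m


def has_reached_setting(force_n: float, lever_arm_m: float, setting_nm: float) -> bool:
    """Mimic an indicating mechanism: True once the applied torque meets the setting."""
    return applied_torque_nm(force_n, lever_arm_m) >= setting_nm


# Hypothetical wrench: 0.4 m from the drive to the grip, set to 40 N·m.
for force in (50.0, 90.0, 110.0):
    status = "reached" if has_reached_setting(force, 0.4, 40.0) else "not reached"
    print(f"{force:.0f} N -> {applied_torque_nm(force, 0.4):.0f} N·m, setting {status}")
```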

A scale or balance is a device used to measure weight or mass. These are also known as mass scales, weight scales, mass balances, massometers, and weight balances.

A pyranometer is a type of actinometer used for measuring solar irradiance on a planar surface. It is designed to measure the solar radiation flux density (W/m²) from the hemisphere above, within a wavelength range of 0.3 μm to 3 μm.
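Many pyranometers use a thermopile whose output voltage is proportional to the irradiance on the sensor plane, so converting a reading is a single division by the sensitivity printed on the calibration certificate. This sketch assumes such a thermopile instrument with a sensitivity given in µV per W/m²; the 8.5 µV/(W/m²) value is hypothetical.

```python
def irradiance_w_per_m2(output_uv: float, sensitivity_uv_per_w_m2: float) -> float:
    """Convert a thermopile pyranometer's output voltage (µV) to irradiance on its plane (W/m²)."""
    if sensitivity_uv_per_w_m2 <= 0:
        raise ValueError("sensitivity must be positive")
    return output_uv / sensitivity_uv_per_w_m2


# Hypothetical sensor with a certificate sensitivity of 8.5 µV per W/m².
print(f"{irradiance_w_per_m2(8500.0, 8.5):.0f} W/m²")
```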

A spectroradiometer is a light measurement tool that can measure both the wavelength and amplitude of the light emitted from a light source. Spectrometers discriminate wavelength by the position at which the light strikes the detector array, allowing the full spectrum to be obtained with a single acquisition. Most spectrometers have a base measurement of counts, which is the uncalibrated reading and is therefore affected by the sensitivity of the detector at each wavelength. By applying a calibration, the spectrometer can then provide measurements of spectral irradiance, spectral radiance and/or spectral flux. These data are then used with built-in or PC software and numerous algorithms to provide readings of irradiance (W/cm²), illuminance, radiance (W/(sr·m²)), luminance (cd/m²), flux, chromaticity, color temperature, and peak and dominant wavelength. Some more complex spectrometer software packages also allow calculation of PAR (μmol/m²/s), metamerism, and candela values based on distance, and include features such as 2- and 10-degree standard observers, baseline overlay comparisons, transmission and reflectance.
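The path from raw counts to a calibrated quantity can be sketched in a few lines: multiply each wavelength bin by its calibration factor to get spectral irradiance, then integrate over wavelength for total irradiance. The sketch assumes one multiplicative calibration factor per bin (in W/m²/nm per count) and uses the trapezoidal rule; real instrument software typically also handles dark-signal subtraction and other corrections not shown here. All data are hypothetical.

```python
def apply_calibration(counts: list[float], cal_factors: list[float]) -> list[float]:
    """Convert raw counts to spectral irradiance using one factor per wavelength bin."""
    if len(counts) != len(cal_factors):
        raise ValueError("counts and calibration factors must align")
    return [c * k for c, k in zip(counts, cal_factors)]


def integrate_trapezoid(x: list[float], y: list[float]) -> float:
    """Trapezoidal integral of y over x (x assumed sorted ascending)."""
    return sum((x[i + 1] - x[i]) * (y[i] + y[i + 1]) / 2 for i in range(len(x) - 1))


def peak_wavelength(wavelengths: list[float], spectrum: list[float]) -> float:
    """Wavelength of the bin with the largest value."""
    return wavelengths[max(range(len(spectrum)), key=spectrum.__getitem__)]


wavelengths_nm = [400.0, 500.0, 600.0, 700.0]
counts = [2000, 3000, 2500, 500]                # raw detector counts (hypothetical)
cal = [2.0e-4, 1.0e-4, 1.0e-4, 3.0e-4]          # W/m²/nm per count (hypothetical)
spectral = apply_calibration(counts, cal)        # W/m²/nm
print("raw peak:", peak_wavelength(wavelengths_nm, counts), "nm")
print("calibrated peak:", peak_wavelength(wavelengths_nm, spectral), "nm")
print(f"irradiance: {integrate_trapezoid(wavelengths_nm, spectral):.1f} W/m²")
```

With these numbers the raw counts peak at 500 nm, but the calibrated spectrum peaks at 400 nm, illustrating why uncalibrated counts reflect the detector's wavelength-dependent sensitivity as much as the source.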

The wattmeter is an instrument for measuring the active electric power, in watts, of a circuit. Electromagnetic wattmeters are used to measure power at utility and audio frequencies; other types are required for radio-frequency measurements.
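Active power is the average of the instantaneous power v(t)·i(t) over whole cycles; for sinusoidal voltage and current this reduces to V_rms · I_rms · cos φ. The sketch below assumes ideal, uniformly sampled sinusoids over exactly one period (a digital wattmeter would work on measured samples instead) and checks the averaged product against that formula.

```python
import math


def active_power(v_samples: list[float], i_samples: list[float]) -> float:
    """Active power: mean of the instantaneous power v*i over whole periods."""
    if len(v_samples) != len(i_samples) or not v_samples:
        raise ValueError("need equal-length, non-empty sample lists")
    return sum(v * i for v, i in zip(v_samples, i_samples)) / len(v_samples)


def sampled_sinusoids(v_rms: float, i_rms: float, phase_rad: float, n: int = 1000):
    """One full period of a sinusoidal voltage and a current lagging it by phase_rad."""
    v = [math.sqrt(2) * v_rms * math.sin(2 * math.pi * k / n) for k in range(n)]
    i = [math.sqrt(2) * i_rms * math.sin(2 * math.pi * k / n - phase_rad) for k in range(n)]
    return v, i


v, i = sampled_sinusoids(230.0, 5.0, math.radians(60))
print(f"{active_power(v, i):.1f} W")  # should match 230 * 5 * cos(60°) = 575 W
```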

The limit of detection is the lowest signal, or the lowest corresponding quantity to be determined from the signal, that can be observed with a sufficient degree of confidence or statistical significance. However, the exact threshold used to decide when a signal emerges significantly above the continuously fluctuating background noise remains arbitrary; it is a matter of policy and often of debate among scientists, statisticians and regulators, depending on the stakes in different fields.
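One widely used convention sets the threshold at the mean of repeated blank measurements plus k times their standard deviation, with k = 3 being a common choice. Because the choice of k is exactly the policy decision described above, the sketch keeps it as a parameter; the blank readings are hypothetical.

```python
import statistics


def limit_of_detection(blank_signals: list[float], k: float = 3.0) -> float:
    """Detection threshold: mean blank signal plus k standard deviations of the blank."""
    if len(blank_signals) < 2:
        raise ValueError("need at least two blank measurements")
    return statistics.mean(blank_signals) + k * statistics.stdev(blank_signals)


def is_detected(signal: float, blank_signals: list[float], k: float = 3.0) -> bool:
    """True if the signal exceeds the detection threshold for the chosen k."""
    return signal > limit_of_detection(blank_signals, k)


blanks = [1.0, 1.2, 0.8, 1.1, 0.9]  # hypothetical repeated blank readings
print(f"k = 3: threshold {limit_of_detection(blanks):.3f}")
print(f"k = 2: threshold {limit_of_detection(blanks, k=2.0):.3f}")
```

A signal of 1.4 in these units counts as detected under k = 2 but not under k = 3, which shows how the policy choice alone can change the outcome.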

In metrology, measurement uncertainty is the expression of the statistical dispersion of the values attributed to a quantity measured on an interval or ratio scale.
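A common way to express that dispersion numerically follows the GUM approach: a Type A standard uncertainty from repeated readings (the sample standard deviation divided by √n), combined in quadrature with other independent components. The sketch assumes all components have unit sensitivity coefficients and are uncorrelated; the readings and the 0.05 mm instrument component are hypothetical.

```python
import math
import statistics


def type_a_standard_uncertainty(readings: list[float]) -> float:
    """Standard uncertainty of the mean from n repeated readings: s / sqrt(n)."""
    if len(readings) < 2:
        raise ValueError("need at least two readings")
    return statistics.stdev(readings) / math.sqrt(len(readings))


def combined_standard_uncertainty(*components: float) -> float:
    """Root-sum-of-squares of independent components with unit sensitivity coefficients."""
    return math.sqrt(sum(u * u for u in components))


readings = [10.1, 10.3, 9.9, 10.2, 10.0]          # hypothetical repeated readings, in mm
u_a = type_a_standard_uncertainty(readings)
u_c = combined_standard_uncertainty(u_a, 0.05)      # 0.05 mm: assumed instrument component
print(f"mean = {statistics.mean(readings):.2f} mm, u_A = {u_a:.4f} mm, u_c = {u_c:.4f} mm")
```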

A network analyzer is an instrument that measures the network parameters of electrical networks. Today, network analyzers commonly measure S-parameters because reflection and transmission of electrical networks are easy to measure at high frequencies, but there are other network parameter sets such as Y-parameters, Z-parameters, and h-parameters. Network analyzers are often used to characterize two-port networks such as amplifiers and filters, but they can be used on networks with an arbitrary number of ports.
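Measured S-parameters are complex ratios, and several familiar figures of merit follow directly from them. This sketch assumes a 50 Ω reference impedance and that S11 was measured with the other port terminated in that impedance (so S11 equals the input reflection coefficient); the function names are illustrative, not an instrument API.

```python
import math


def return_loss_db(s11: complex) -> float:
    """Return loss in dB from the reflection coefficient S11."""
    return -20.0 * math.log10(abs(s11))


def insertion_loss_db(s21: complex) -> float:
    """Insertion loss in dB from the transmission coefficient S21."""
    return -20.0 * math.log10(abs(s21))


def vswr(s11: complex) -> float:
    """Voltage standing wave ratio at port 1 (requires |S11| < 1)."""
    gamma = abs(s11)
    return (1 + gamma) / (1 - gamma)


def input_impedance(s11: complex, z0: float = 50.0) -> complex:
    """Port-1 input impedance implied by S11, assuming a matched load on port 2."""
    return z0 * (1 + s11) / (1 - s11)


s11 = 0.2 + 0.1j   # hypothetical measurement at one frequency
s21 = 0.5 - 0.3j
print(f"RL = {return_loss_db(s11):.2f} dB, IL = {insertion_loss_db(s21):.2f} dB")
print(f"VSWR = {vswr(s11):.3f}, Zin = {input_impedance(s11):.2f} ohm")
```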

A heat flux sensor is a transducer that generates an electrical signal proportional to the total heat rate applied to the surface of the sensor. The measured heat rate is divided by the surface area of the sensor to determine the heat flux.
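The two steps in the paragraph above (invert the proportional signal to get the heat rate, then divide by the sensor area) can be written directly. The sketch assumes a sensitivity expressed in volts per watt of total heat rate; the numbers are hypothetical, and commercial sensors often quote sensitivity per unit heat flux instead, which folds the area into the calibration.

```python
def heat_flux_w_per_m2(signal_v: float, sensitivity_v_per_w: float, area_m2: float) -> float:
    """Heat flux from a sensor whose output is proportional to the total heat rate."""
    if sensitivity_v_per_w <= 0 or area_m2 <= 0:
        raise ValueError("sensitivity and area must be positive")
    heat_rate_w = signal_v / sensitivity_v_per_w  # invert the proportional calibration
    return heat_rate_w / area_m2                  # spread the heat rate over the sensor surface


# Hypothetical: 2 mV output, 1 mV/W sensitivity, 20 mm x 20 mm sensing area.
print(f"{heat_flux_w_per_m2(0.002, 0.001, 0.0004):.0f} W/m²")
```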

Industrial process data validation and reconciliation, or more briefly, process data reconciliation (PDR), is a technology that uses process information and mathematical methods to automatically validate and reconcile data by correcting measurements in industrial processes. PDR allows accurate and reliable information about the state of industrial processes to be extracted from raw measurement data, producing a single consistent set of data that represents the most likely process operation.
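A classic formulation of reconciliation adjusts measurements as little as possible, weighted by each meter's variance, so that they satisfy a balance equation. The sketch below handles the simplest case of one linear balance, minimizing Σ(xᵢ − yᵢ)²/σᵢ² subject to Σ aᵢxᵢ = 0, which has a closed-form solution. It assumes independent, normally distributed measurement errors and a hypothetical flow splitter; industrial PDR packages handle many constraints, nonlinear balances and gross-error detection, none of which is shown here.

```python
def reconcile_single_balance(measured: list[float], variances: list[float],
                             coefficients: list[float]) -> list[float]:
    """Weighted least-squares adjustment so that sum(a_i * x_i) == 0.

    Minimizes sum((x_i - y_i)**2 / var_i) subject to a single linear balance.
    """
    if not (len(measured) == len(variances) == len(coefficients)):
        raise ValueError("inputs must have equal length")
    imbalance = sum(a * y for a, y in zip(coefficients, measured))
    weight = sum(a * a * v for a, v in zip(coefficients, variances))
    # Each measurement moves in proportion to its variance and its balance coefficient.
    return [y - v * a * imbalance / weight
            for y, v, a in zip(measured, variances, coefficients)]


# Hypothetical splitter: feed F1 should equal outlets F2 + F3, i.e. F1 - F2 - F3 = 0.
flows = [100.0, 61.0, 41.0]      # raw flowmeter readings, t/h
variances = [4.0, 1.0, 1.0]      # the feed meter is assumed to be the least precise
reconciled = reconcile_single_balance(flows, variances, [1.0, -1.0, -1.0])
print([round(x, 3) for x in reconciled])
```

The raw readings are out of balance by 2 t/h; after reconciliation the balance closes, and the least precise meter (the feed) absorbs most of the correction.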