OpenMP is an application programming interface (API) that supports multi-platform shared memory multiprocessing programming in C, C++, and Fortran, on most platforms, instruction set architectures and operating systems, including Solaris, AIX, HP-UX, Linux, macOS, and Windows. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior.

POSIX Threads, usually referred to as pthreads, is an execution model that exists independently from a language, as well as a parallel execution model. It allows a program to control multiple different flows of work that overlap in time. Each flow of work is referred to as a thread, and creation and control over these flows is achieved by making calls to the POSIX Threads API. POSIX Threads is an API defined by the standard POSIX.1c, Threads extensions .

In computer science, a deterministic algorithm is an algorithm which, given a particular input, will always produce the same output, with the underlying machine always passing through the same sequence of states. Deterministic algorithms are by far the most studied and familiar kind of algorithm, as well as one of the most practical, since they can be run on real machines efficiently.

Cilk, Cilk++ and Cilk Plus are general-purpose programming languages designed for multithreaded parallel computing. They are based on the C and C++ programming languages, which they extend with constructs to express parallel loops and the fork–join idiom.

Intel C++ Compiler, also known as icc or icl, is a group of C and C++ compilers from Intel available for Windows, Mac, Linux, FreeBSD and Intel-based Android devices.

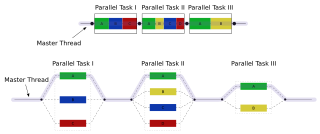

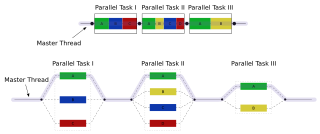

Task parallelism is a form of parallelization of computer code across multiple processors in parallel computing environments. Task parallelism focuses on distributing tasks—concurrently performed by processes or threads—across different processors. In contrast to data parallelism which involves running the same task on different components of data, task parallelism is distinguished by running many different tasks at the same time on the same data. A common type of task parallelism is pipelining which consists of moving a single set of data through a series of separate tasks where each task can execute independently of the others.

RapidMind Inc. was a privately held company founded and headquartered in Waterloo, Ontario, Canada, acquired by Intel in 2009. It provided a software product that aims to make it simpler for software developers to target multi-core processors and accelerators such as graphics processing units (GPUs).

The Sieve C++ Parallel Programming System is a C++ compiler and parallel runtime designed and released by Codeplay that aims to simplify the parallelization of code so that it may run efficiently on multi-processor or multi-core systems. It is an alternative to other well-known parallelisation methods such as OpenMP, the RapidMind Development Platform and Threading Building Blocks (TBB).

Intel Ct is a programming model developed by Intel to ease the exploitation of its future multicore chips, as demonstrated by the Tera-Scale research program.

Intel Fortran Compiler, also known as IFORT, is a group of Fortran compilers from Intel for Windows, OS X, and Linux.

Parallel Extensions was the development name for a managed concurrency library developed by a collaboration between Microsoft Research and the CLR team at Microsoft. The library was released in version 4.0 of the .NET Framework. It is composed of two parts: Parallel LINQ (PLINQ) and Task Parallel Library (TPL). It also consists of a set of coordination data structures (CDS) – sets of data structures used to synchronize and co-ordinate the execution of concurrent tasks.

Concurrent Collections is a programming model for software frameworks to expose parallelism in applications. The Concurrent Collections conception originated from tagged stream processing development with HP TStreams.

Intel Inspector is a memory and thread checking and debugging tool to increase the reliability, security, and accuracy of C/C++ and Fortran applications.

The following outline is provided as an overview of and topical guide to C++:

Intel Advisor is a SIMD vectorization optimization and shared memory threading assistance tool for C, C++, C# and Fortran software developers and architects. This product is available on Windows and Linux operating systems in form of Standalone GUI tool, Microsoft Visual Studio plug-in or command line interface.

In parallel computing, the fork–join model is a way of setting up and executing parallel programs, such that execution branches off in parallel at designated points in the program, to "join" (merge) at a subsequent point and resume sequential execution. Parallel sections may fork recursively until a certain task granularity is reached. Fork–join can be considered a parallel design pattern. It was formulated as early as 1963.