The Fourier transform (FT) decomposes a function of time into its constituent frequencies. This is similar to the way a musical chord can be expressed in terms of the volumes and frequencies of its constituent notes. The term Fourier transform refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation with a function of time. The Fourier transform of a function of time is itself a complex-valued function of frequency, whose magnitude represents the amount of that frequency present in the original function, and whose complex argument is the phase offset of the basic sinusoid at that frequency. The Fourier transform is not limited to functions of time, but the domain of the original function is commonly referred to as the time domain. There is also an inverse Fourier transform that mathematically synthesizes the original function from its frequency domain representation.
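The magnitude and phase interpretation can be illustrated with a discrete Fourier transform of a sampled signal. The following is a minimal sketch using NumPy's `np.fft.rfft`; the function name `dominant_component`, the sample rate, and the test signal are assumptions chosen for illustration, and the signal is built so that each sinusoid falls exactly on a frequency bin.

```python
import numpy as np

def dominant_component(signal, sample_rate):
    """Return (frequency_hz, amplitude, phase_rad) of the strongest non-DC bin."""
    n = len(signal)
    spectrum = np.fft.rfft(signal)                    # complex-valued function of frequency
    freqs = np.fft.rfftfreq(n, d=1.0 / sample_rate)   # frequency of each bin in Hz
    k = 1 + int(np.argmax(np.abs(spectrum[1:])))      # skip the DC bin
    # Scaling by 2/n recovers the sinusoid amplitude for bins strictly
    # between DC and Nyquist.
    amplitude = 2.0 * np.abs(spectrum[k]) / n
    phase = np.angle(spectrum[k])                     # complex argument = phase offset
    return freqs[k], amplitude, phase

# Hypothetical test signal: one second sampled at 64 Hz, two cosines.
sample_rate = 64
t = np.arange(sample_rate) / sample_rate
signal = 1.5 * np.cos(2 * np.pi * 5 * t + 0.3) + 0.5 * np.cos(2 * np.pi * 12 * t)

freq, amp, phase = dominant_component(signal, sample_rate)
print(freq, amp, phase)
```

For this signal the strongest component is the 5 Hz cosine, and the recovered amplitude and phase match the values used to build it. For frequencies that do not fall on a bin, spectral leakage would make the estimates approximate.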

In geometry, a solid angle is a measure of the amount of the field of view from some particular point that a given object covers. That is, it is a measure of how large the object appears to an observer looking from that point. The point from which the object is viewed is called the apex of the solid angle, and the object is said to subtend its solid angle from that point.
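As a small worked case, the solid angle subtended by a sphere seen from outside can be computed from the cone of tangent lines that encloses it. The sketch below assumes the standard formula for a right circular cone of half-angle θ, Ω = 2π(1 − cos θ), measured in steradians; the function names are illustrative.

```python
import math

def cone_solid_angle(half_angle_rad):
    """Solid angle (steradians) of a right circular cone with the given half-angle."""
    return 2.0 * math.pi * (1.0 - math.cos(half_angle_rad))

def sphere_solid_angle(radius, distance):
    """Solid angle subtended by a sphere of `radius` whose center is `distance` from the apex."""
    if distance <= radius:
        raise ValueError("the apex must lie outside the sphere")
    # The tangent cone from the apex has half-angle asin(radius / distance).
    return cone_solid_angle(math.asin(radius / distance))

print(cone_solid_angle(math.pi))        # the full sphere of directions, 4*pi
print(sphere_solid_angle(1.0, 1000.0))  # a distant sphere, close to pi * (R/d)**2
```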

The moment of inertia, otherwise known as the angular mass or rotational inertia, of a rigid body is a quantity that determines the torque needed for a desired angular acceleration about a rotational axis, similar to how mass determines the force needed for a desired acceleration. It depends on the body's mass distribution and the axis chosen, with larger moments requiring more torque to change the body's rotation rate. It is an extensive (additive) property: for a point mass the moment of inertia is just the mass times the square of the perpendicular distance to the rotation axis. The moment of inertia of a rigid composite system is the sum of the moments of inertia of its component subsystems. Its simplest definition is the second moment of mass with respect to distance from an axis. For bodies constrained to rotate in a plane, only their moment of inertia about an axis perpendicular to the plane, a scalar value, matters. For bodies free to rotate in three dimensions, their moments can be described by a symmetric 3 × 3 matrix, with a set of mutually perpendicular principal axes for which this matrix is diagonal and torques around the axes act independently of each other.
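Both the scalar and the matrix descriptions can be sketched for a system of point masses. The code below is illustrative only: the function names and the two-mass example are assumptions, and the tensor is taken about the origin using the standard form I = Σ m (|r|² 𝟙 − r rᵀ).

```python
import numpy as np

def moment_about_axis(masses, positions, axis_point, axis_dir):
    """Sum of m * d**2, where d is each mass's perpendicular distance to the axis."""
    u = axis_dir / np.linalg.norm(axis_dir)
    r = positions - axis_point
    parallel = r @ u                                   # component along the axis
    perp_sq = np.sum(r * r, axis=1) - parallel ** 2    # squared perpendicular distance
    return float(np.sum(masses * perp_sq))

def inertia_tensor(masses, positions):
    """Symmetric 3x3 inertia matrix of point masses about the origin."""
    r_sq = np.sum(positions ** 2, axis=1)
    return (np.sum(masses * r_sq) * np.eye(3)
            - np.einsum("i,ij,ik->jk", masses, positions, positions))

# Hypothetical example: two 2 kg masses at x = +1 m and x = -1 m.
masses = np.array([2.0, 2.0])
positions = np.array([[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]])

i_z = moment_about_axis(masses, positions, np.zeros(3), np.array([0.0, 0.0, 1.0]))
tensor = inertia_tensor(masses, positions)
principal_moments = np.linalg.eigvalsh(tensor)         # eigenvalues, ascending
print(i_z, principal_moments)
```

The scalar moment about the z axis equals the corresponding diagonal entry of the matrix, and the eigenvectors of the matrix (available from `np.linalg.eigh`) give the principal axes.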

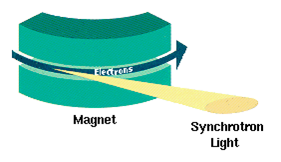

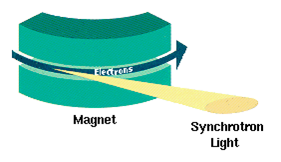

Synchrotron radiation is the electromagnetic radiation emitted when charged particles are accelerated radially, i.e., when they are subject to an acceleration perpendicular to their velocity. It is produced, for example, in synchrotrons using bending magnets, undulators and/or wigglers. If the particles are non-relativistic, the emission is called cyclotron emission. If, on the other hand, the particles are relativistic, sometimes referred to as ultrarelativistic, the emission is called synchrotron emission. Synchrotron radiation may be achieved artificially in synchrotrons or storage rings, or naturally by fast electrons moving through magnetic fields. The radiation produced in this way has a characteristic polarization, and the frequencies generated can range over the entire electromagnetic spectrum; such radiation is also called continuum radiation.

The power spectrum of a time series describes the distribution of power into the frequency components composing that signal. According to Fourier analysis, any physical signal can be decomposed into a number of discrete frequencies, or a spectrum of frequencies over a continuous range. The statistical average of a certain signal or sort of signal, as analyzed in terms of its frequency content, is called its spectrum.

In probability theory, the total variation distance is a distance measure for probability distributions. It is an example of a statistical distance metric, and is sometimes called the statistical distance or variational distance.
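For distributions on a finite set, the total variation distance is half the sum of the absolute differences between the two probability mass functions. A minimal sketch, assuming each distribution is given as a dictionary from outcome to probability (the function name and the example values are illustrative):

```python
def total_variation(p, q):
    """Total variation distance between two discrete distributions given as dicts."""
    outcomes = set(p) | set(q)   # outcomes missing from one dict have probability 0
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in outcomes)

p = {"a": 0.5, "b": 0.5}
q = {"a": 0.25, "b": 0.25, "c": 0.5}
tv = total_variation(p, q)
print(tv)
```

The value lies between 0 (identical distributions) and 1 (distributions with disjoint support).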

In oceanography, a sea state is the general condition of the free surface on a large body of water—with respect to wind waves and swell—at a certain location and moment. A sea state is characterized by statistics, including the wave height, period, and power spectrum. The sea state varies with time, as the wind conditions or swell conditions change. The sea state can either be assessed by an experienced observer, like a trained mariner, or through instruments like weather buoys, wave radar or remote sensing satellites.

In mathematics, the stationary phase approximation is a basic principle of asymptotic analysis, applying to the limit as $k \to \infty$.

In probability theory and statistics, the Jensen–Shannon divergence is a method of measuring the similarity between two probability distributions. It is also known as information radius (IRad) or total divergence to the average. It is based on the Kullback–Leibler divergence, with some notable differences, including that it is symmetric and it always has a finite value. The square root of the Jensen–Shannon divergence is a metric often referred to as the Jensen–Shannon distance.
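The relationship to the Kullback–Leibler divergence can be made concrete for discrete distributions: the Jensen–Shannon divergence averages the Kullback–Leibler divergences of each distribution from their midpoint mixture. The sketch below assumes distributions given as equal-length lists of probabilities and uses base-2 logarithms, so the result is in bits; the function names are illustrative.

```python
import math

def kl_divergence(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: mean KL divergence to the midpoint mixture."""
    m = [0.5 * (pi + qi) for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

def js_distance(p, q):
    """Square root of the divergence (clamped at 0 against rounding error)."""
    return math.sqrt(max(js_divergence(p, q), 0.0))

print(js_divergence([1.0, 0.0], [0.0, 1.0]))   # disjoint supports: the maximum, 1 bit
print(js_divergence([0.5, 0.5], [0.9, 0.1]))
```

Because the mixture is nonzero wherever either input is nonzero, the value stays finite even when the supports differ, unlike the Kullback–Leibler divergence itself.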

The autocorrelation technique is a method for estimating the dominating frequency in a complex signal, as well as its variance. Specifically, it calculates the first two moments of the power spectrum, namely the mean and variance. It is also known as the pulse-pair algorithm in radar theory.
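A minimal sketch of the idea, assuming a complex-valued (I/Q) sampled signal: the phase of the lag-one autocorrelation gives the mean frequency, and the drop in its magnitude relative to the lag-zero value gives a variance estimate. The variance expression used here is a commonly quoted small-width approximation and is an assumption, as are the function name and the test tone.

```python
import numpy as np

def pulse_pair(z, sample_rate):
    """Estimate (mean_frequency_hz, frequency_variance_hz2) from lag-0 and lag-1 autocorrelation."""
    r0 = np.mean(np.abs(z) ** 2)                 # lag-0 autocorrelation (power)
    r1 = np.mean(z[1:] * np.conj(z[:-1]))        # lag-1 autocorrelation
    mean_freq = np.angle(r1) * sample_rate / (2.0 * np.pi)
    # Assumed approximation, valid for narrow spectra.
    variance = 2.0 * (1.0 - np.abs(r1) / r0) * (sample_rate / (2.0 * np.pi)) ** 2
    return mean_freq, variance

# Hypothetical input: a pure complex tone at 120 Hz sampled at 1 kHz.
sample_rate = 1000.0
n = np.arange(2048)
tone = np.exp(2j * np.pi * 120.0 * n / sample_rate)

mean_freq, variance = pulse_pair(tone, sample_rate)
print(mean_freq, variance)
```

The estimate is unambiguous only for mean frequencies between minus and plus half the sample rate, since the phase of the lag-one autocorrelation wraps at ±π.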

Zero sound is the name given by Lev Landau to the unique quantum vibrations in quantum Fermi liquids.

In probability theory and statistics, Fisher's noncentral hypergeometric distribution is a generalization of the hypergeometric distribution where sampling probabilities are modified by weight factors. It can also be defined as the conditional distribution of two or more binomially distributed variables dependent upon their fixed sum.
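For the univariate case, the probability mass function can be sketched directly: each outcome's hypergeometric weight is multiplied by a power of the odds ratio and the result is normalized over the support. The parameter names below (`m1` and `m2` items of each colour, `n` items drawn, odds ratio `odds`) are assumptions made for this illustration.

```python
from math import comb

def fisher_nchg_pmf(x, m1, m2, n, odds):
    """P(X = x) for Fisher's noncentral hypergeometric distribution (univariate)."""
    lo, hi = max(0, n - m2), min(n, m1)          # support of X
    if not lo <= x <= hi:
        return 0.0

    def weight(k):
        # Hypergeometric weight scaled by the odds ratio raised to k.
        return comb(m1, k) * comb(m2, n - k) * odds ** k

    return weight(x) / sum(weight(k) for k in range(lo, hi + 1))

# With odds = 1 the distribution reduces to the ordinary hypergeometric distribution.
print(fisher_nchg_pmf(1, 5, 5, 3, 1.0))
print([fisher_nchg_pmf(x, 5, 5, 3, 4.0) for x in range(4)])
```

Direct summation is adequate for small populations; for large ones the weights grow quickly and a log-space computation would be safer.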

In statistical signal processing, the goal of spectral density estimation (SDE) is to estimate the spectral density of a random signal from a sequence of time samples of the signal. Intuitively speaking, the spectral density characterizes the frequency content of the signal. One purpose of estimating the spectral density is to detect any periodicities in the data, by observing peaks at the frequencies corresponding to these periodicities.
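One simple nonparametric estimator averages periodograms over non-overlapping segments (often called Bartlett's method). The sketch below uses it to locate a periodicity buried in noise; the sample rate, segment length, noise level, and the 48 Hz test tone are assumptions, and the one-sided factor of two is omitted because only the peak location is of interest.

```python
import numpy as np

def bartlett_psd(x, sample_rate, segment_len):
    """Average the periodograms of non-overlapping segments of x."""
    n_seg = len(x) // segment_len
    segments = x[: n_seg * segment_len].reshape(n_seg, segment_len)
    periodograms = np.abs(np.fft.rfft(segments, axis=1)) ** 2 / (sample_rate * segment_len)
    freqs = np.fft.rfftfreq(segment_len, d=1.0 / sample_rate)
    return freqs, periodograms.mean(axis=0)

# Hypothetical data: a 48 Hz sinusoid in unit-variance white noise.
rng = np.random.default_rng(0)
sample_rate = 1024.0
t = np.arange(4096) / sample_rate
x = np.sin(2 * np.pi * 48.0 * t) + rng.normal(0.0, 1.0, t.size)

freqs, psd = bartlett_psd(x, sample_rate, segment_len=256)
peak_freq = freqs[np.argmax(psd)]
print(peak_freq)
```

Averaging reduces the variance of the estimate at the cost of frequency resolution, which here is the sample rate divided by the segment length (4 Hz).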

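The log-spectral distance (LSD), also referred to as log-spectral distortion, is a distance measure between two spectra. The log-spectral distance between spectra $P(\omega)$ and $\hat{P}(\omega)$ is defined as:

$$D_{LS} = \sqrt{\frac{1}{2\pi}\int_{-\pi}^{\pi}\left[10\log_{10}\frac{P(\omega)}{\hat{P}(\omega)}\right]^{2} d\omega}$$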
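On sampled spectra, a common discrete form takes the root mean square of the per-bin difference in decibels. The sketch below assumes two strictly positive power spectra evaluated on the same frequency grid; the function name is illustrative.

```python
import numpy as np

def log_spectral_distance(p, p_hat):
    """RMS difference, in dB, between two power spectra on the same frequency grid."""
    p = np.asarray(p, dtype=float)
    p_hat = np.asarray(p_hat, dtype=float)
    diff_db = 10.0 * np.log10(p / p_hat)     # per-bin log ratio in decibels
    return float(np.sqrt(np.mean(diff_db ** 2)))

# A spectrum that is uniformly ten times weaker differs by 10 dB in every bin.
print(log_spectral_distance([1.0, 1.0, 1.0], [10.0, 10.0, 10.0]))
```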

In probability theory and statistics, the skew normal distribution is a continuous probability distribution that generalises the normal distribution to allow for non-zero skewness.
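In its standard form, the density is usually written as 2φ(x)Φ(αx), where φ and Φ are the standard normal density and distribution function and α is a shape parameter. The sketch below assumes that parametrization (location 0, scale 1) and uses only the standard library; the function names are illustrative.

```python
import math

def normal_pdf(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def normal_cdf(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def skew_normal_pdf(x, alpha):
    """Standard skew normal density with shape parameter alpha."""
    return 2.0 * normal_pdf(x) * normal_cdf(alpha * x)

# alpha = 0 recovers the normal density; alpha > 0 shifts mass to the right.
print(skew_normal_pdf(0.7, 0.0), normal_pdf(0.7))
print(skew_normal_pdf(1.0, 3.0), skew_normal_pdf(-1.0, 3.0))
```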

The narrow escape problem is a ubiquitous problem in biology, biophysics and cellular biology.

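In mathematics, especially spectral theory, Weyl's law describes the asymptotic behavior of eigenvalues of the Laplace–Beltrami operator. This description was discovered in 1911 by Hermann Weyl for eigenvalues of the Laplace–Beltrami operator acting on functions that vanish at the boundary of a bounded domain $\Omega \subset \mathbb{R}^d$. In particular, he proved that the number, $N(\lambda)$, of Dirichlet eigenvalues (counting their multiplicities) less than or equal to $\lambda$ satisfies

$$\lim_{\lambda \to \infty} \frac{N(\lambda)}{\lambda^{d/2}} = (2\pi)^{-d}\,\omega_d\,\mathrm{vol}(\Omega),$$

where $\omega_d$ is the volume of the unit ball in $\mathbb{R}^d$.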
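The asymptotic count can be checked numerically on a domain whose spectrum is known in closed form. The sketch below assumes the unit square, where the Dirichlet eigenvalues of the Laplacian are π²(m² + n²) for positive integers m and n, and compares the exact count with the leading two-dimensional Weyl term, area · λ / (4π). The function names are illustrative.

```python
import math

def dirichlet_count_unit_square(lam):
    """Count eigenvalues pi^2 (m^2 + n^2) <= lam with integers m, n >= 1."""
    limit = lam / math.pi ** 2          # condition becomes m^2 + n^2 <= limit
    count = 0
    m = 1
    while m * m + 1 <= limit:
        # For this m, valid n run from 1 to floor(sqrt(limit - m^2)).
        count += math.isqrt(int(limit - m * m))
        m += 1
    return count

def weyl_estimate_unit_square(lam):
    """Leading Weyl term in two dimensions for a domain of area 1."""
    return lam / (4.0 * math.pi)

lam = 1e5
ratio = dirichlet_count_unit_square(lam) / weyl_estimate_unit_square(lam)
print(ratio)
```

The ratio approaches 1 from below as λ grows; the gap at finite λ reflects a lower-order boundary correction that the leading term does not capture.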

In machine learning, the kernel embedding of distributions comprises a class of nonparametric methods in which a probability distribution is represented as an element of a reproducing kernel Hilbert space (RKHS). A generalization of the individual data-point feature mapping done in classical kernel methods, the embedding of distributions into infinite-dimensional feature spaces can preserve all of the statistical features of arbitrary distributions, while allowing one to compare and manipulate distributions using Hilbert space operations such as inner products, distances, projections, linear transformations, and spectral analysis. This learning framework is very general and can be applied to distributions over any space on which a sensible kernel function may be defined. For example, various kernels have been proposed for learning from data which are: vectors in $\mathbb{R}^d$, discrete classes/categories, strings, graphs/networks, images, time series, manifolds, dynamical systems, and other structured objects. The theory behind kernel embeddings of distributions has been primarily developed by Alex Smola, Le Song, Arthur Gretton, and Bernhard Schölkopf. A review of recent works on kernel embedding of distributions can be found in.
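Comparing two distributions through the distance between their embeddings can be sketched with samples alone. The code below computes a biased empirical estimate of the squared RKHS distance between two mean embeddings (commonly called the maximum mean discrepancy), assuming a Gaussian RBF kernel on vectors; the kernel choice, bandwidth, function names, and sample data are all assumptions for illustration.

```python
import numpy as np

def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian RBF kernel matrix between the rows of x and the rows of y."""
    sq_dist = (np.sum(x ** 2, axis=1)[:, None]
               + np.sum(y ** 2, axis=1)[None, :]
               - 2.0 * x @ y.T)
    return np.exp(-np.maximum(sq_dist, 0.0) / (2.0 * bandwidth ** 2))

def mmd_squared(x, y, bandwidth=1.0):
    """Biased estimate of the squared distance between the two empirical mean embeddings."""
    return (rbf_kernel(x, x, bandwidth).mean()
            + rbf_kernel(y, y, bandwidth).mean()
            - 2.0 * rbf_kernel(x, y, bandwidth).mean())

# Hypothetical samples: two 2-D Gaussians with different means.
rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=(200, 2))
y = rng.normal(3.0, 1.0, size=(200, 2))

print(mmd_squared(x, x))   # same sample: zero
print(mmd_squared(x, y))   # well-separated samples: clearly positive
```

Only kernel evaluations between samples are needed, so the same computation applies to any data type for which a kernel function is available.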

Ramsey interferometry, also known as Ramsey–Bordé interferometry or the separated oscillating fields method, is a form of atom interferometry that uses the phenomenon of magnetic resonance to measure transition frequencies of atoms. It was developed in 1949 by Norman Ramsey, building on the ideas of his mentor, Isidor Isaac Rabi, who initially developed a technique for measuring atomic transition frequencies. Ramsey's method is used today in atomic clocks and in the SI definition of the second. Most precision atomic measurements, such as modern atom interferometers and quantum logic gates, have a Ramsey-type configuration. A modern interferometer using a Ramsey configuration was developed by French physicist Christian Bordé and is known as the Ramsey–Bordé interferometer. Bordé's main idea was to use atomic recoil to create a beam splitter of different geometries for an atom-wave. The Ramsey–Bordé interferometer specifically uses two pairs of counter-propagating interaction waves, while another method, named the "photon-echo", uses two co-propagating pairs of interaction waves.