In computing, floating-point arithmetic (FP) is arithmetic using formulaic representation of real numbers as an approximation to support a trade-off between range and precision. For this reason, floating-point computation is often used in systems with very small and very large real numbers that require fast processing times. In general, a floating-point number is represented approximately with a fixed number of significant digits and scaled using an exponent in some fixed base; the base for the scaling is normally two, ten, or sixteen. A number that can be represented exactly is of the following form:

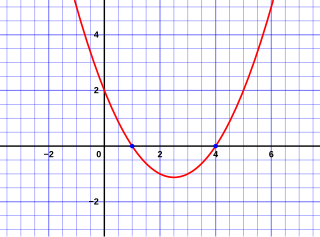
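As a rough illustration of the significand-and-exponent idea, the following Python sketch splits a binary floating-point value into those two parts. It assumes base two and Python's built-in `float` (an IEEE 754 double on common platforms); the helper name `significand_and_exponent` is hypothetical.

```python
import math
from fractions import Fraction


def significand_and_exponent(x: float) -> tuple[float, int]:
    """Split x into (m, e) such that x == m * 2**e.

    math.frexp normalizes m so that 0.5 <= |m| < 1 (or m == 0).
    """
    return math.frexp(x)


# 0.375 = 3/8 is a sum of powers of two, so it is stored exactly.
m, e = significand_and_exponent(0.375)
stored_exactly = Fraction(0.375) == Fraction(3, 8)

# 1/10 has no finite binary expansion, so the stored value is only close to it.
tenth_is_exact = Fraction(0.1) == Fraction(1, 10)
```

Converting a `float` to `Fraction` exposes the exact stored value, which makes the difference between representable and merely approximated numbers visible.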

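In algebra, a quadratic equation is any equation that can be rearranged in standard form as $ax^2 + bx + c = 0$, where $x$ represents an unknown and $a$, $b$, and $c$ are known numbers with $a \neq 0$.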

In mathematics, a square root of a number $x$ is a number $y$ such that $y^2 = x$; in other words, a number $y$ whose square (the result of multiplying the number by itself, or $y \cdot y$) is $x$. For example, 4 and −4 are square roots of 16, because $4^2 = (-4)^2 = 16$. Every nonnegative real number $x$ has a unique nonnegative square root, called the principal square root, which is denoted by $\sqrt{x}$, where the symbol $\sqrt{\phantom{x}}$ is called the radical sign or radix. For example, the principal square root of 9 is 3, which is denoted by $\sqrt{9} = 3$, because $3^2 = 3 \cdot 3 = 9$ and 3 is nonnegative. The term (or number) whose square root is being considered is known as the radicand. The radicand is the number or expression underneath the radical sign, in this case 9.

In elementary algebra, the quadratic formula is a formula that provides the solution(s) to a quadratic equation. There are other ways of solving a quadratic equation instead of using the quadratic formula, such as factoring, completing the square, graphing and others.
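A minimal Python sketch of the quadratic formula follows, assuming the equation is written as $ax^2 + bx + c = 0$ with $a \neq 0$, so that the roots are $x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}$. The function name `solve_quadratic` is chosen here for illustration, and `cmath.sqrt` is used so that a negative discriminant yields complex roots instead of an error.

```python
import cmath


def solve_quadratic(a: float, b: float, c: float) -> tuple[complex, complex]:
    """Return both roots of a*x**2 + b*x + c = 0 using the quadratic formula."""
    if a == 0:
        raise ValueError("not a quadratic equation: a must be nonzero")
    # Square root of the discriminant; complex when b*b - 4*a*c is negative.
    root_of_discriminant = cmath.sqrt(b * b - 4 * a * c)
    plus_root = (-b + root_of_discriminant) / (2 * a)
    minus_root = (-b - root_of_discriminant) / (2 * a)
    return plus_root, minus_root


real_roots = solve_quadratic(1, -3, 2)    # x**2 - 3x + 2 = (x - 1)(x - 2)
complex_roots = solve_quadratic(1, 0, 1)  # x**2 + 1 has roots i and -i
```

This direct transcription is not numerically robust: when $b^2$ is much larger than $4ac$, one of the two roots loses accuracy through subtraction of nearly equal values.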

In mathematics, tables of trigonometric functions are useful in a number of areas. Before the existence of pocket calculators, trigonometric tables were essential for navigation, science and engineering. The calculation of mathematical tables was an important area of study, which led to the development of the first mechanical computing devices.

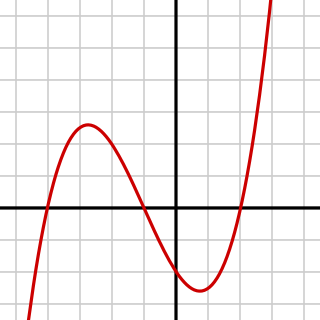

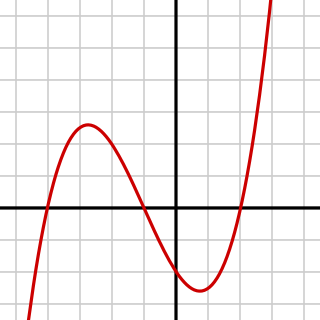

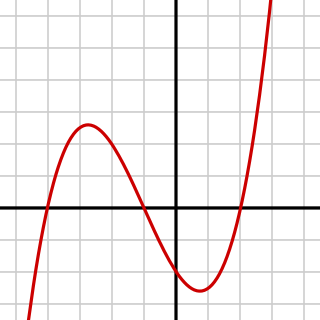

In algebra, a cubic equation in one variable is an equation of the form $ax^3 + bx^2 + cx + d = 0$, in which $a$ is nonzero.

In mathematics, a cubic function is a function of the form $f(x) = ax^3 + bx^2 + cx + d$, where $a$ is nonzero.

In mathematics, a quadratic irrational number is an irrational number that is the solution to some quadratic equation with rational coefficients which is irreducible over the rational numbers. Since fractions in the coefficients of a quadratic equation can be cleared by multiplying both sides by their least common denominator, a quadratic irrational is an irrational root of some quadratic equation with integer coefficients. The quadratic irrational numbers, a subset of the complex numbers, are algebraic numbers of degree 2, and can therefore be expressed as $\frac{a + b\sqrt{c}}{d}$ for integers $a$, $b$, $c$, $d$, with $b$, $c$, and $d$ nonzero and $c$ square-free.

In algebra, a quartic function is a function of the form $f(x) = ax^4 + bx^3 + cx^2 + dx + e$, where $a$ is nonzero.

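In mathematics, an algebraic equation or polynomial equation is an equation of the form $P = 0$, where $P$ is a polynomial with coefficients in some field, often the field of the rational numbers.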

A roundoff error, also called rounding error, is the difference between the result produced by a given algorithm using exact arithmetic and the result produced by the same algorithm using finite-precision, rounded arithmetic. Rounding errors are due to inexactness in the representation of real numbers and the arithmetic operations done with them. This is a form of quantization error. When using approximation equations or algorithms, especially when using finitely many digits to represent real numbers, one of the goals of numerical analysis is to estimate computation errors. Computation errors, also called numerical errors, include both truncation errors and roundoff errors.
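The definition can be made concrete with a short Python sketch that runs the same addition once in exact rational arithmetic and once in binary floating point, then takes the difference. The choice of `0.1 + 0.2` as the test computation is only an illustrative assumption.

```python
from fractions import Fraction

# The same "algorithm" (one addition) in rounded and in exact arithmetic.
computed = 0.1 + 0.2                         # finite-precision result
exact = Fraction(1, 10) + Fraction(2, 10)    # exact result, 3/10

# Fraction(computed) is the exact value of the float, so this difference
# is the roundoff error of the computation, itself computed without rounding.
roundoff_error = Fraction(computed) - exact

print(computed == 0.3)        # False: the rounded sum is not the double nearest 0.3
print(float(roundoff_error))  # a small positive number, well below 1e-16
```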

In relativity, proper time along a timelike world line is defined as the time as measured by a clock following that line. It is thus independent of coordinates, and is a Lorentz scalar. The proper time interval between two events on a world line is the change in proper time. This interval is the quantity of interest, since proper time itself is fixed only up to an arbitrary additive constant, namely the setting of the clock at some event along the world line.

Significance arithmetic is a set of rules for approximating the propagation of uncertainty in scientific or statistical calculations. These rules can be used to find the appropriate number of significant figures to use to represent the result of a calculation. If a calculation is done without analysis of the uncertainty involved, a result that is written with too many significant figures can be taken to imply a higher precision than is known, and a result that is written with too few significant figures results in an avoidable loss of precision. Understanding these rules requires a good understanding of the concept of significant and insignificant figures.

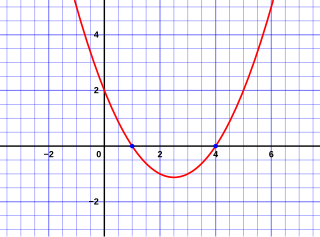

Methods of computing square roots are numerical analysis algorithms for finding the principal, or non-negative, square root of a real number. Arithmetically, this means: given $S$, a procedure for finding a number which, when multiplied by itself, yields $S$. Algebraically, it means a procedure for finding the non-negative root of the equation $x^2 - S = 0$. Geometrically, it means: given the area of a square, a procedure for constructing a side of the square.
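One widely known method of this kind is Heron's (Babylonian) method, which is Newton's iteration applied to $x^2 - S = 0$. The sketch below is one possible Python rendering; the function name, starting guess, tolerance, and iteration cap are assumptions made for illustration.

```python
def heron_sqrt(s: float, tolerance: float = 1e-12, max_iterations: int = 100) -> float:
    """Approximate the principal square root of s with Heron's method."""
    if s < 0:
        raise ValueError("s must be non-negative")
    if s == 0:
        return 0.0
    # Assumed starting guess: any positive value works; this one is never below sqrt(s).
    x = s if s >= 1 else 1.0
    for _ in range(max_iterations):
        # Average the current guess with s / guess; the two always bracket sqrt(s).
        next_x = 0.5 * (x + s / x)
        if abs(next_x - x) <= tolerance * next_x:
            return next_x
        x = next_x
    return x


approx_root_two = heron_sqrt(2.0)
```

Convergence is quadratic once the guess is close, so the number of correct digits roughly doubles with each iteration.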

In algebraic number theory, a fundamental unit is a generator for the unit group of the ring of integers of a number field, when that group has rank 1. Dirichlet's unit theorem shows that the unit group has rank 1 exactly when the number field is a real quadratic field, a complex cubic field, or a totally imaginary quartic field. When the unit group has rank ≥ 1, a basis of it modulo its torsion is called a fundamental system of units. Some authors use the term fundamental unit to mean any element of a fundamental system of units, not restricting to the case of rank 1.

In numerical analysis, Aitken's delta-squared process, or Aitken extrapolation, is a series acceleration method used to speed up the convergence of a sequence. It is named after Alexander Aitken, who introduced this method in 1926. An early form was known to Seki Kōwa, who applied it to the rectification of the circle, i.e. the calculation of π. It is most useful for accelerating the convergence of a sequence that is converging linearly.
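As a sketch, the process maps each three consecutive terms $x_n, x_{n+1}, x_{n+2}$ to $x_n - \frac{(x_{n+1} - x_n)^2}{x_{n+2} - 2x_{n+1} + x_n}$. The Python below applies this to the partial sums of the Leibniz series for π; the function name `aitken` and the fallback for a zero denominator are assumptions made for this example.

```python
import math


def aitken(sequence: list[float]) -> list[float]:
    """Apply Aitken's delta-squared formula to every window of three terms."""
    accelerated = []
    for x0, x1, x2 in zip(sequence, sequence[1:], sequence[2:]):
        denominator = x2 - 2 * x1 + x0
        if denominator == 0:
            # Assumed fallback: the terms stopped changing, so keep the latest one.
            accelerated.append(x2)
        else:
            accelerated.append(x0 - (x1 - x0) ** 2 / denominator)
    return accelerated


# Partial sums of 4 * (1 - 1/3 + 1/5 - ...), which converge slowly to pi.
partial_sums = []
total = 0.0
for k in range(10):
    total += 4 * (-1) ** k / (2 * k + 1)
    partial_sums.append(total)

accelerated_sums = aitken(partial_sums)
error_before = abs(partial_sums[-1] - math.pi)
error_after = abs(accelerated_sums[-1] - math.pi)
```

For a sequence whose error shrinks by an exact constant factor, such as 2, 1.5, 1.25, ..., a single application returns the limit itself.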

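In mathematics, an infinite periodic continued fraction is a continued fraction that can be placed in the form $x = [a_0; a_1, \dots, a_k, \overline{a_{k+1}, \dots, a_{k+m}}]$, where the initial block of $k + 1$ partial denominators is followed by a block of $m$ partial denominators that repeats indefinitely.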
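To illustrate, the Python sketch below evaluates a truncation of a periodic continued fraction: an initial block of partial denominators followed by a fixed number of copies of the repeating block. The function name and parameters are hypothetical, the initial block is assumed non-empty, and all repeated terms are assumed to be positive integers.

```python
from fractions import Fraction


def periodic_cf_convergent(initial: list[int], period: list[int], repeats: int) -> Fraction:
    """Evaluate [initial..., period repeated `repeats` times] as an exact fraction."""
    terms = initial + period * repeats
    # Work from the innermost term outward: value = a + 1 / (rest of the fraction).
    value = Fraction(terms[-1])
    for a in reversed(terms[:-1]):
        value = a + 1 / value
    return value


# sqrt(2) has the periodic expansion [1; 2, 2, 2, ...].
approx_root_two = periodic_cf_convergent([1], [2], repeats=10)
```

Using more copies of the repeating block brings the exact fraction closer to the quadratic irrational that the infinite expansion represents.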

In mathematics, Pythagorean addition is the following binary operation on the real numbers: $a \oplus b = \sqrt{a^2 + b^2}$.
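A minimal Python sketch, assuming the usual definition in which the result is the square root of the sum of the squares of the two operands. The wrapper name `pythagorean_add` is hypothetical; `math.hypot` is the standard-library function that computes this quantity.

```python
import math


def pythagorean_add(a: float, b: float) -> float:
    """Return sqrt(a**2 + b**2), the hypotenuse of a right triangle with legs a and b."""
    # math.hypot avoids the overflow that squaring very large inputs directly would cause.
    return math.hypot(a, b)


hypotenuse = pythagorean_add(3.0, 4.0)
large_result = pythagorean_add(1e200, 1e200)  # finite, although 1e200 * 1e200 overflows
```

The operation is commutative and associative, so three or more values can be combined in any order, up to rounding.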

In numerical analysis, catastrophic cancellation is the phenomenon that subtracting good approximations to two nearby numbers may yield a very bad approximation to the difference of the original numbers.
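The effect is easy to reproduce in Python with IEEE 754 doubles. In this sketch, chosen purely for illustration, the value $1 + 10^{-15}$ is stored with a relative error of roughly $10^{-16}$, yet subtracting the nearby number 1 leaves a result whose relative error is about 11 percent.

```python
x = 1e-15

# Storing 1 + x rounds it to the nearest double: an excellent approximation of 1 + x.
a = 1.0 + x
b = 1.0

# The subtraction itself is exact, but it exposes the rounding already present in a.
difference = a - b

# Relative error of the computed difference against the true difference x.
relative_error = abs(difference - x) / x
```

The subtraction introduces no new error; it only promotes the tiny absolute error in `a` to a large relative error in the much smaller result.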

As with other spreadsheets, Microsoft Excel works only to limited accuracy because it retains only a certain number of figures to describe numbers. With some exceptions regarding erroneous values, infinities, and denormalized numbers, Excel calculates in double-precision floating-point format from the IEEE 754 specification. Although Excel can display 30 decimal places, its precision for any given number is limited to 15 significant figures, and calculations may be even less accurate because of five issues: round-off, truncation, binary storage, accumulation of the deviations of the operands in calculations, and, worst of all, cancellation in subtraction, which becomes "catastrophic cancellation" when the values subtracted have similar magnitude.