The GUI, or graphical user interface, is a form of user interface that allows users to interact with electronic devices through graphical icons and audio indicators such as primary notation, instead of text-based UIs, typed command labels or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs), which require commands to be typed on a computer keyboard.

The history of the graphical user interface, understood as the use of graphic icons and a pointing device to control a computer, covers a five-decade span of incremental refinements, built on some constant core principles. Several vendors have created their own windowing systems based on independent code, but with basic elements in common that define the WIMP "window, icon, menu and pointing device" paradigm.

Adobe Photoshop is a raster graphics editor developed and published by Adobe Inc. for Windows and macOS. It was originally created in 1987 by Thomas and John Knoll. Since then, the software has become the most used tool for professional digital art, especially in raster graphics editing. The software's name is often colloquially used as a verb although Adobe discourages such use.

Ubiquitous computing is a concept in software engineering, hardware engineering and computer science where computing is made to appear anytime and everywhere. In contrast to desktop computing, ubiquitous computing can occur using any device, in any location, and in any format. A user interacts with the computer, which can exist in many different forms, including laptop computers, tablets, smartphones and terminals in everyday objects such as a refrigerator or a pair of glasses. The underlying technologies that support ubiquitous computing include the Internet, advanced middleware, operating systems, mobile code, sensors, microprocessors, new I/O and user interfaces, computer networks, mobile protocols, location and positioning, and new materials.

Genera is a commercial operating system and integrated development environment for Lisp machines created by Symbolics. It is essentially a fork of an earlier operating system originating on the Massachusetts Institute of Technology (MIT) AI Lab's Lisp machines which Symbolics had used in common with Lisp Machines, Inc. (LMI), and Texas Instruments (TI). Genera was also sold by Symbolics as Open Genera, which runs Genera on computers based on a Digital Equipment Corporation (DEC) Alpha processor using Tru64 UNIX. In 2021 a new version was released as Portable Genera which runs on DEC Alpha Tru64 UNIX, x86_64 and Arm64 Linux, x86_64 and Apple M1 macOS. It is released and licensed as proprietary software.

Lego Mindstorms was a hardware and software platform for developing programmable robots based on Lego building blocks. Each version included computer Lego bricks, a set of modular sensors and motors, and parts from the Lego Technic line to create the mechanical systems. The system was controlled by the computer Lego bricks.

The Aspen Movie Map was a hypermedia system developed at MIT by a team working with Andrew Lippman in 1978 with funding from ARPA.

The MIT Media Lab is a research laboratory at the Massachusetts Institute of Technology, growing out of MIT's Architecture Machine Group in the School of Architecture. Its research is not restricted to fixed academic disciplines, but draws from technology, media, science, art, and design. As of 2014, the Media Lab's research groups include neurobiology, biologically inspired fabrication, socially engaging robots, emotive computing, bionics, and hyperinstruments.

In computing, a zooming user interface or zoomable user interface is a graphical environment where users can change the scale of the viewed area in order to see more detail or less, and browse through different documents. A ZUI is a type of graphical user interface (GUI). Information elements appear directly on an infinite virtual desktop, instead of in windows. Users can pan across the virtual surface in two dimensions and zoom into objects of interest. For example, as you zoom into a text object it may be represented as a small dot, then a thumbnail of a page of text, then a full-sized page and finally a magnified view of the page.
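The pan and zoom behavior described above can be illustrated with a minimal sketch. It assumes a single uniform scale factor and a 2D offset; the names `Viewport`, `zoom_at`, and `level_of_detail`, as well as the pixel thresholds, are hypothetical and chosen only for illustration, not taken from any particular ZUI implementation.

```python
from dataclasses import dataclass


@dataclass
class Viewport:
    """Maps points on the virtual surface (world units) to screen pixels."""
    offset_x: float = 0.0  # world x-coordinate shown at the screen's left edge
    offset_y: float = 0.0  # world y-coordinate shown at the screen's top edge
    scale: float = 1.0     # screen pixels per world unit

    def world_to_screen(self, wx, wy):
        return ((wx - self.offset_x) * self.scale,
                (wy - self.offset_y) * self.scale)

    def pan(self, dx_pixels, dy_pixels):
        """Drag the surface by a distance given in screen pixels."""
        self.offset_x -= dx_pixels / self.scale
        self.offset_y -= dy_pixels / self.scale

    def zoom_at(self, sx, sy, factor):
        """Zoom by `factor`, keeping the world point under screen (sx, sy) fixed."""
        wx = self.offset_x + sx / self.scale
        wy = self.offset_y + sy / self.scale
        self.scale *= factor
        self.offset_x = wx - sx / self.scale
        self.offset_y = wy - sy / self.scale


def level_of_detail(page_height_world, viewport):
    """Choose how to draw a text object from its on-screen height.

    The thresholds are illustrative assumptions, not standard values.
    """
    height_px = page_height_world * viewport.scale
    if height_px < 4:
        return "dot"
    if height_px < 200:
        return "thumbnail"
    if height_px <= 1000:
        return "full page"
    return "magnified"


vp = Viewport()
vp.zoom_at(100.0, 50.0, 2.0)            # zoom in 2x around screen point (100, 50)
print(vp.world_to_screen(100.0, 50.0))  # the focused point stays at (100.0, 50.0)
print(level_of_detail(1000.0, vp))      # magnified
```

Anchoring the zoom at the cursor position, rather than at the surface origin, is one common design choice: it keeps the object of interest under the pointer while the scale changes.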

Gesture recognition is a topic in computer science and language technology with the goal of interpreting human gestures via mathematical algorithms. It is a subdiscipline of computer vision. Gestures can originate from any bodily motion or state, but commonly originate from the face or hand. Areas of focus in the field include emotion recognition from the face and hand gesture recognition, since both are forms of expression. Users can make simple gestures to control or interact with devices without physically touching them. Many approaches use cameras and computer vision algorithms to interpret sign language; however, the identification and recognition of posture, gait, proxemics, and human behaviors are also subjects of gesture recognition techniques. Gesture recognition can be seen as a way for computers to begin to understand human body language, thus building a better bridge between machines and humans than older text user interfaces or even GUIs, which still limit the majority of input to keyboard and mouse, and letting users interact naturally without any mechanical devices.

Windows Presentation Foundation (WPF) is a free and open-source graphical subsystem originally developed by Microsoft for rendering user interfaces in Windows-based applications. WPF, previously known as "Avalon", was initially released as part of .NET Framework 3.0 in 2006. WPF uses DirectX and attempts to provide a consistent programming model for building applications. It separates the user interface from business logic, and resembles similar XML-oriented object models, such as those implemented in XUL and SVG.

A tangible user interface (TUI) is a user interface in which a person interacts with digital information through the physical environment. The initial name was Graspable User Interface, which is no longer used. The purpose of TUI development is to empower collaboration, learning, and design by giving physical forms to digital information, thus taking advantage of the human ability to grasp and manipulate physical objects and materials.

In computing, ambient intelligence (AmI) refers to electronic environments that are sensitive and responsive to the presence of people. Ambient intelligence is a projection of the future of consumer electronics, telecommunications and computing, originally developed in the late 1990s by Eli Zelkha and his team at Palo Alto Ventures for the time frame 2010–2020. The concept is intended to enable devices to work in concert with people in carrying out their everyday life activities, tasks, and rituals in an intuitive way, by using information and intelligence that is hidden in the network connecting these devices. It is theorized that as these devices grow smaller, more connected and more integrated into our environment, the technological framework behind them will disappear into our surroundings until only the user interface remains perceivable by users.

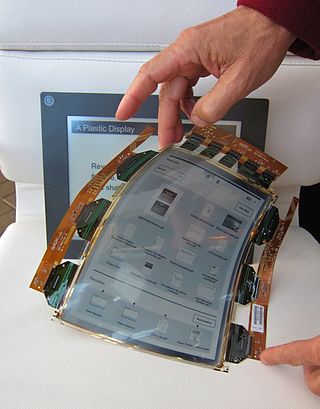

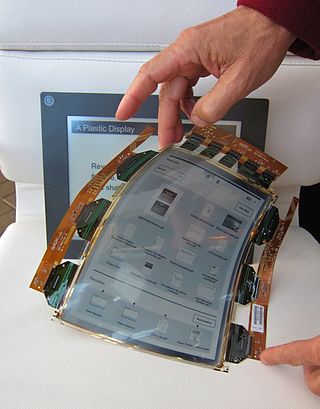

A flexible display or rollable display is an electronic visual display which is flexible in nature, as opposed to the traditional flat screen displays used in most electronic devices. In recent years there has been growing interest among consumer electronics manufacturers in applying this display technology to e-readers, mobile phones and other consumer electronics. Such screens can be rolled up like a scroll without the image or text being distorted. Technologies involved in building a rollable display include electronic ink, Gyricon, Organic LCD, and OLED.

In computing, multi-touch is technology that enables a surface to recognize the presence of more than one point of contact with the surface at the same time. Multi-touch originated at CERN, MIT, University of Toronto, Carnegie Mellon University and Bell Labs in the 1970s. CERN started using multi-touch screens as early as 1976 for the controls of the Super Proton Synchrotron. Capacitive multi-touch displays were popularized by Apple's iPhone in 2007. Plural-point awareness may be used to implement additional functionality, such as pinch to zoom, or to activate certain subroutines attached to predefined gestures.
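As a rough illustration of how two simultaneous contact points can drive pinch to zoom, the sketch below derives a zoom factor from the change in distance between two fingers. The function names `pinch_scale` and `pinch_center` and the tuple-based touch representation are assumptions made for this example; real touch frameworks expose their own event types.

```python
import math


def pinch_scale(start_touches, current_touches):
    """Return the zoom factor implied by two contact points.

    Each argument is a pair of (x, y) points, one per finger. The factor is
    the ratio of the current finger distance to the initial finger distance.
    """
    start_a, start_b = start_touches
    current_a, current_b = current_touches
    start_distance = math.dist(start_a, start_b)
    if start_distance == 0:
        return 1.0  # fingers started on the same point; no meaningful ratio
    return math.dist(current_a, current_b) / start_distance


def pinch_center(touches):
    """Midpoint between the two fingers, usable as the zoom focus."""
    (ax, ay), (bx, by) = touches
    return ((ax + bx) / 2, (ay + by) / 2)


start = ((0, 0), (3, 4))   # fingers 5 units apart
now = ((0, 0), (6, 8))     # fingers 10 units apart
print(pinch_scale(start, now))  # 2.0, the fingers spread to twice the distance
print(pinch_center(now))        # (3.0, 4.0)
```

A factor above 1 corresponds to spreading the fingers (zoom in) and a factor below 1 to pinching them together (zoom out).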

Microsoft PixelSense was an interactive surface computing platform that allowed one or more people to use and touch real-world objects, and share digital content at the same time. The PixelSense platform consisted of software and hardware products that combined vision-based multitouch PC hardware, 360-degree multiuser application design, and Windows software to create a natural user interface (NUI).

A holographic display is a type of 3D display that utilizes light diffraction to display a three-dimensional image to the viewer. Holographic displays are distinguished from other forms of 3D displays in that they do not require the viewer to wear any special glasses or use external equipment to be able to see the image, and do not cause the vergence-accommodation conflict.

CE-HTML is an XHTML-based standard for designing webpages with remote user interfaces for consumer electronic devices on Universal Plug and Play networks. The standard is intended for defining user interfaces that can gracefully scale across a variety of screen sizes and geometries, from those of mobile devices to those of high-definition television sets.

MIT App Inventor is a web application integrated development environment originally provided by Google, and now maintained by the Massachusetts Institute of Technology (MIT). It allows newcomers to computer programming to create application software (apps) for two operating systems (OS): Android and iOS, the latter of which was in final beta testing as of 20 January 2023. It is free and open-source software released under dual licensing: a Creative Commons Attribution ShareAlike 3.0 Unported license and an Apache License 2.0 for the source code.

Dhairya Dand FRSA is an Indian-born, American inventor and artist based in New York City.