Related Research Articles

A concept is an abstract idea, understood to be a fundamental building block underlying principles, thoughts, and beliefs. Concepts play an important role in all aspects of cognition. They are studied in disciplines such as linguistics, psychology, and philosophy, which are interested in the logical and psychological structure of concepts and in how concepts are put together to form thoughts and sentences. The study of concepts has served as an important flagship of an emerging interdisciplinary approach, cognitive science.

Semantics is the study of linguistic meaning. It examines what meaning is, how words get their meaning, and how the meaning of a complex expression depends on its parts. Part of this process involves the distinction between sense and reference. Sense is given by the ideas and concepts associated with an expression while reference is the object to which an expression points. Semantics contrasts with syntax, which studies the rules that dictate how to create grammatically correct sentences, and pragmatics, which investigates how people use language in communication.

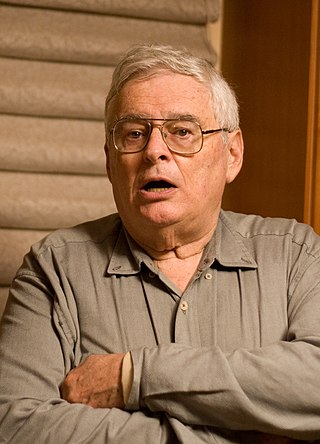

Jerry Alan Fodor was an American philosopher and the author of many crucial works in the fields of philosophy of mind and cognitive science. His writings in these fields laid the groundwork for the modularity of mind and the language of thought hypotheses, and he is recognized as having had "an enormous influence on virtually every portion of the philosophy of mind literature since 1960." At the time of his death in 2017, he held the position of State of New Jersey Professor of Philosophy, Emeritus, at Rutgers University, and had taught previously at the City University of New York Graduate Center and MIT.

The study of how language influences thought, and vice versa, has a long history in a variety of fields. Two bodies of thought have formed around this debate. One stems from linguistics and is known as the Sapir–Whorf hypothesis. It has a strong and a weak version, which argue for more or less influence of language on thought. The strong version, linguistic determinism, argues that without language there is and can be no thought, while the weak version, linguistic relativity, holds that language has some influence on thought. On the opposing side are 'language of thought' theories (LOTH), which hold that public language is inessential to private thought. These theories address the debate over whether thought is possible without language, which is related to the question of whether language evolved for thought. These ideas are difficult to study because it is challenging to separate the effects of culture, thought, and language in all academic fields.

Ray Jackendoff is an American linguist. He is professor of philosophy, Seth Merrin Chair in the Humanities and, with Daniel Dennett, co-director of the Center for Cognitive Studies at Tufts University. He has always straddled the boundary between generative linguistics and cognitive linguistics, committed to both the existence of an innate universal grammar and to giving an account of language that is consistent with the current understanding of the human mind and cognition.

Inferential role semantics is an approach to the theory of meaning that identifies the meaning of an expression with its relationship to other expressions, in contradistinction to denotationalism, according to which denotations are the primary sort of meaning.

Conceptual semantics is a framework for semantic analysis developed mainly by Ray Jackendoff in 1976. Its aim is to provide a characterization of the conceptual elements by which a person understands words and sentences, and thus to provide an explanatory semantic representation. Explanatory in this sense refers to the ability of a given linguistic theory to describe how a component of language is acquired by a child.

Prototype theory is a theory of categorization in cognitive science, particularly in psychology and cognitive linguistics, in which there is a graded degree of belonging to a conceptual category, and some members are more central than others. It emerged in 1971 with the work of psychologist Eleanor Rosch, and it has been described as a "Copernican Revolution" in the theory of categorization for its departure from the traditional Aristotelian categories. It has been criticized by those who still endorse the traditional theory of categories, such as linguist Eugenio Coseriu and other proponents of the structural semantics paradigm.

Construction grammar is a family of theories within the field of cognitive linguistics which posit that constructions, or learned pairings of linguistic patterns with meanings, are the fundamental building blocks of human language. Constructions include words, morphemes, fixed expressions and idioms, and abstract grammatical rules such as the passive voice or the ditransitive. Any linguistic pattern is considered to be a construction as long as some aspect of its form or its meaning cannot be predicted from its component parts, or from other constructions that are recognized to exist. In construction grammar, every utterance is understood to be a combination of multiple different constructions, which together specify its precise meaning and form.

Cognitive semantics is part of the cognitive linguistics movement. Semantics is the study of linguistic meaning. Cognitive semantics holds that language is part of a more general human cognitive ability, and can therefore only describe the world as people conceive of it. Implicit in this view is that different linguistic communities conceive of simple things and processes in the world differently, which does not necessarily imply a difference between a person's conceptual world and the real world.

Frame semantics is a theory of linguistic meaning developed by Charles J. Fillmore that extends his earlier case grammar. It relates linguistic semantics to encyclopedic knowledge. The basic idea is that one cannot understand the meaning of a single word without access to all the essential knowledge that relates to that word. For example, one would not be able to understand the word "sell" without knowing anything about the situation of commercial transfer, which also involves, among other things, a seller, a buyer, goods, money, the relation between the money and the goods, the relations between the seller and the goods and the money, the relation between the buyer and the goods and the money and so on. Thus, a word activates, or evokes, a frame of semantic knowledge relating to the specific concept to which it refers.
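
The idea that a word evokes a frame with participant roles can be sketched as a small data structure. This is only an illustrative sketch: the names `Frame`, `LEXICON`, `evoke`, and `missing_roles` are hypothetical, and the role inventory is a simplified reading of the "sell" example above, not Fillmore's own formalization.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Frame:
    """A frame: a named situation type plus the roles its participants play."""
    name: str
    roles: Tuple[str, ...]


# Hypothetical frame for the commercial-transfer situation described above.
COMMERCIAL_TRANSFER = Frame(
    name="commercial_transfer",
    roles=("seller", "buyer", "goods", "money"),
)

# Assumption: different words can evoke the same frame.
LEXICON: Dict[str, Frame] = {
    "sell": COMMERCIAL_TRANSFER,
    "buy": COMMERCIAL_TRANSFER,
}


def evoke(word: str) -> Optional[Frame]:
    """Return the frame a word evokes, or None if the word is unknown."""
    return LEXICON.get(word)


def missing_roles(word: str, fillers: Dict[str, str]) -> List[str]:
    """List the frame roles that an utterance leaves unexpressed."""
    frame = evoke(word)
    if frame is None:
        raise KeyError(f"no frame recorded for {word!r}")
    return [role for role in frame.roles if role not in fillers]


# "Kim sold a bike": seller and goods are expressed, buyer and money are not,
# yet a hearer still understands that both must exist in the situation.
unexpressed = missing_roles("sell", {"seller": "Kim", "goods": "a bike"})
```

In this sketch, "sell" and "buy" map to the same frame, which reflects the claim that understanding either word requires knowledge of the whole commercial-transfer situation.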

In philosophy—more specifically, in its sub-fields semantics, semiotics, philosophy of language, metaphysics, and metasemantics—meaning "is a relationship between two sorts of things: signs and the kinds of things they intend, express, or signify".

A mental representation, in philosophy of mind, cognitive psychology, neuroscience, and cognitive science, is a hypothetical internal cognitive symbol that represents external reality or its abstractions.

Computational semantics is the study of how to automate the process of constructing and reasoning with meaning representations of natural language expressions. It consequently plays an important role in natural-language processing and computational linguistics.

The linguistics wars were extended disputes among American theoretical linguists that occurred mostly during the 1960s and 1970s, stemming from a disagreement between Noam Chomsky and several of his associates and students. The debates started in 1967 when linguists Paul Postal, John R. Ross, George Lakoff, and James D. McCawley (self-dubbed the "Four Horsemen of the Apocalypse") proposed an alternative approach that viewed the relation between semantics and syntax differently, treating deep structures as meanings rather than syntactic objects. While Chomsky and other generative grammarians argued that meaning is driven by an underlying syntax, generative semanticists posited that syntax is shaped by an underlying meaning. This intellectual divergence led to two competing frameworks: generative semantics and interpretive semantics.

Aspects of the Theory of Syntax is a book on linguistics written by American linguist Noam Chomsky, first published in 1965. In Aspects, Chomsky presented a deeper, more extensive reformulation of transformational generative grammar (TGG), a new kind of syntactic theory that he had introduced in the 1950s with the publication of his first book, Syntactic Structures. Aspects is widely considered to be the foundational document and a proper book-length articulation of the Chomskyan theoretical framework of linguistics. It presented Chomsky's epistemological assumptions with a view to establishing linguistic theory-making as a formal discipline comparable to the physical sciences, i.e. a domain of inquiry well-defined in its nature and scope. From a philosophical perspective, it directed mainstream linguistic research away from behaviorism, constructivism, empiricism and structuralism and towards mentalism, nativism, rationalism and generativism, respectively, taking as its main object of study the abstract, inner workings of the human mind related to language acquisition and production.

Formal semantics is the study of grammatical meaning in natural languages using formal tools from logic, mathematics and theoretical computer science. It is an interdisciplinary field, sometimes regarded as a subfield of both linguistics and philosophy of language. It provides accounts of what linguistic expressions mean and how their meanings are composed from the meanings of their parts. The enterprise of formal semantics can be thought of as that of reverse-engineering the semantic components of natural languages' grammars.
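
The claim that meanings are composed from the meanings of their parts can be illustrated with a toy interpreter. This is a minimal sketch under invented assumptions: the model (`SLEEPERS`), the tiny `LEXICON`, and the tree encoding are hypothetical, and function application is used as the only composition rule.

```python
# Toy model of the world: which individuals sleep (invented for illustration).
SLEEPERS = {"kim"}

# Word meanings: names denote individuals, predicates denote functions
# from individuals to truth values, "not" maps a predicate to its negation.
LEXICON = {
    "Kim": "kim",
    "Lee": "lee",
    "sleeps": lambda x: x in SLEEPERS,
    "not": lambda pred: (lambda x: not pred(x)),
}


def interpret(tree):
    """Compute the meaning of a tree from the meanings of its parts.

    A leaf is a word (str); a branch is a pair (function_part, argument_part),
    whose meaning is the function meaning applied to the argument meaning.
    """
    if isinstance(tree, str):
        return LEXICON[tree]
    function_part, argument_part = tree
    return interpret(function_part)(interpret(argument_part))


kim_sleeps = interpret(("sleeps", "Kim"))
lee_sleeps = interpret(("sleeps", "Lee"))
lee_does_not_sleep = interpret((("not", "sleeps"), "Lee"))
```

The interpreter never looks up the meaning of a whole sentence; it derives it from the lexical entries and the structure of the tree, which is the sense of compositionality described above.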

Ideasthesia is a neuropsychological phenomenon in which activations of concepts (inducers) evoke perception-like sensory experiences (concurrents). The name comes from the Ancient Greek ἰδέα and αἴσθησις, meaning 'sensing concepts' or 'sensing ideas'. The notion was introduced by neuroscientist Danko Nikolić as an alternative explanation for a set of phenomena traditionally covered by synesthesia.

The Integrational theory of language is the general theory of language that has been developed within the general linguistic approach of integrational linguistics.

Semantic folding theory describes a procedure for encoding the semantics of natural language text in a semantically grounded binary representation. This approach provides a framework for modelling how language data is processed by the neocortex.
