A superscalar processor is a CPU that implements a form of parallelism called instruction-level parallelism within a single processor. In contrast to a scalar processor, which can execute at most one instruction per clock cycle, a superscalar processor can execute more than one instruction during a clock cycle by simultaneously dispatching multiple instructions to different execution units on the processor. It therefore allows more throughput than would otherwise be possible at a given clock rate. Each execution unit is not a separate processor, but an execution resource within a single CPU, such as an arithmetic logic unit.
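The effect of issue width on throughput can be illustrated with a toy model. The sketch below is not a model of any real processor: it assumes single-cycle instructions, in-order issue, and a hypothetical dependency table `deps`; the function name `cycles_needed` is likewise made up for illustration.

```python
from typing import Dict, List, Set


def cycles_needed(deps: Dict[str, Set[str]], order: List[str], issue_width: int) -> int:
    """Count cycles needed to run `order` with in-order issue.

    Assumptions (illustrative only): every instruction takes one cycle, and an
    instruction may issue only if all of its producers completed in an earlier
    cycle. `deps[i]` must only name instructions that precede `i` in `order`.
    """
    done: Set[str] = set()
    pending = list(order)
    cycles = 0
    while pending:
        issued: List[str] = []
        for instr in pending:
            if len(issued) == issue_width:
                break
            if deps[instr] <= done:
                issued.append(instr)
            else:
                break  # in-order issue: stall at the first instruction that is not ready
        if not issued:
            raise ValueError("dependency on an instruction that never executes")
        pending = pending[len(issued):]
        done.update(issued)
        cycles += 1
    return cycles


# c needs the results of a and b; d needs the result of c.
program = ["a", "b", "c", "d"]
deps = {"a": set(), "b": set(), "c": {"a", "b"}, "d": {"c"}}

scalar_cycles = cycles_needed(deps, program, issue_width=1)       # 4 cycles
superscalar_cycles = cycles_needed(deps, program, issue_width=2)  # 3 cycles
```

Only `a` and `b` are independent, so widening the issue logic saves a single cycle here; the dependent chain `c` then `d` still runs serially.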

Very long instruction word (VLIW) refers to instruction set architectures designed to exploit instruction-level parallelism (ILP). Whereas conventional central processing units mostly allow programs to specify instructions to execute in sequence only, a VLIW processor allows programs to explicitly specify instructions to execute in parallel. This design is intended to allow higher performance without the complexity inherent in some other designs.
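In a VLIW design, the decision about which operations run together is made ahead of time, typically by the compiler. A minimal sketch of that idea is a greedy packer that groups independent operations into fixed-width bundles and pads unused slots. The operation names, the `pack_bundles` helper, and the two-slot width are assumptions for illustration, and real schedulers also account for latencies and functional-unit types.

```python
from typing import Dict, List, Set


def pack_bundles(deps: Dict[str, Set[str]], order: List[str], slots: int) -> List[List[str]]:
    """Greedily pack operations into bundles of `slots` operations each.

    An operation may enter a bundle only if everything it depends on was
    placed in an earlier bundle. Unused slots are padded with "nop".
    """
    scheduled: Set[str] = set()
    remaining = list(order)
    bundles: List[List[str]] = []
    while remaining:
        ready = [op for op in remaining if deps[op] <= scheduled]
        bundle = ready[:slots]
        if not bundle:
            raise ValueError("cyclic or unsatisfiable dependencies")
        bundles.append(bundle + ["nop"] * (slots - len(bundle)))
        scheduled.update(bundle)
        remaining = [op for op in remaining if op not in scheduled]
    return bundles


# Hypothetical operations computing (a + b) * c.
ops = ["load_a", "load_b", "add", "load_c", "mul"]
op_deps = {
    "load_a": set(),
    "load_b": set(),
    "add": {"load_a", "load_b"},
    "load_c": set(),
    "mul": {"add", "load_c"},
}

schedule = pack_bundles(op_deps, ops, slots=2)
# schedule == [["load_a", "load_b"], ["add", "load_c"], ["mul", "nop"]]
```

Each inner list stands for one long instruction word; the hardware would execute its slots in parallel without checking dependencies itself.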

A digital signal processor (DSP) is a specialized microprocessor, with its architecture optimized for the operational needs of digital signal processing.

Explicitly parallel instruction computing (EPIC) is a term coined in 1997 by the HP–Intel alliance to describe a computing paradigm that researchers had been investigating since the early 1980s. This paradigm is also called Independence architectures. It was the basis for Intel and HP's development of the Intel Itanium architecture, and HP later asserted that "EPIC" was merely an old term for the Itanium architecture. EPIC permits microprocessors to execute software instructions in parallel by using the compiler, rather than complex on-die circuitry, to control parallel instruction execution. This was intended to allow simple performance scaling without resorting to higher clock frequencies.

Multiflow Computer, Inc., founded in April 1984 near New Haven, Connecticut, USA, was a manufacturer and seller of minisupercomputer hardware and software embodying the VLIW design style. Multiflow, incorporated in Delaware, ended operations in March 1990, after selling about 125 VLIW minisupercomputers in the United States, Europe, and Japan.

In computing, hardware acceleration is the use of computer hardware specially made to perform some functions more efficiently than is possible in software running on a general-purpose CPU. Any transformation of data or routine that can be computed can be calculated purely in software running on a generic CPU, purely in custom-made hardware, or in some mix of both. An operation can often be computed faster in application-specific hardware designed or programmed for that operation than in software running on a general-purpose processor. Each approach has advantages and disadvantages. The implementation of computing tasks in hardware to decrease latency and increase throughput is known as hardware acceleration.

In computer architecture, a transport triggered architecture (TTA) is a kind of processor design in which programs directly control the internal transport buses of a processor. Computation happens as a side effect of data transports: writing data into a triggering port of a functional unit triggers the functional unit to start a computation. This is similar to what happens in a systolic array. Due to its modular structure, TTA is well suited as a processor template for application-specific instruction-set processors (ASIPs) with a customized datapath but without the inflexibility and design cost of fixed-function hardware accelerators.
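The idea that computation is a side effect of a data move can be sketched with a small simulator. Everything here is hypothetical and simplified: the `AdderUnit` class, the port names `operand`, `trigger` and `result`, and the `unit.port` addressing scheme are illustrative assumptions, not the interface of any real TTA toolchain.

```python
from typing import Dict, List, Tuple, Union

Source = Union[int, str]  # an immediate value, a register name, or "unit.port"


class AdderUnit:
    """Functional unit with an operand port, a trigger port and a result port."""

    def __init__(self) -> None:
        self.operand = 0
        self.result = 0

    def write(self, port: str, value: int) -> None:
        if port == "operand":
            self.operand = value  # plain storage, no computation
        elif port == "trigger":
            self.result = self.operand + value  # the write itself starts the add
        else:
            raise ValueError(f"unknown input port: {port}")


def run_moves(moves: List[Tuple[Source, str]],
              regs: Dict[str, int],
              units: Dict[str, AdderUnit]) -> Dict[str, int]:
    """Execute a program that consists only of (source, destination) moves."""
    for src, dst in moves:
        if isinstance(src, int):
            value = src
        elif "." in src:
            unit, port = src.split(".")
            if port != "result":
                raise ValueError(f"cannot read port: {src}")
            value = units[unit].result
        else:
            value = regs[src]

        if "." in dst:
            unit, port = dst.split(".")
            units[unit].write(port, value)
        else:
            regs[dst] = value
    return regs


registers = {"r1": 2, "r2": 40, "r3": 0}
program = [
    ("r1", "add.operand"),   # r1 -> operand port
    ("r2", "add.trigger"),   # r2 -> trigger port, which performs the addition
    ("add.result", "r3"),    # result port -> r3
]
run_moves(program, registers, {"add": AdderUnit()})
# registers["r3"] is now 42
```

Note that the program contains no `add` instruction: the only operation is the move, and the addition happens because a value arrived at the trigger port.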

The Fujitsu FR-V is one of the very few processors ever able to process both very long instruction word (VLIW) and vector processor instructions at the same time, increasing throughput through a high degree of parallelism while improving performance per watt and hardware efficiency. The family was presented in 1999. Its design was influenced by the VPP500/5000 models of the Fujitsu VP/2000 vector processor supercomputer line.

A multi-core processor is a single computing component with two or more independent processing units called cores, which read and execute program instructions. The instructions are ordinary CPU instructions but the single processor can run multiple instructions on separate cores at the same time, increasing overall speed for programs amenable to parallel computing. Manufacturers typically integrate the cores onto a single integrated circuit die or onto multiple dies in a single chip package. The microprocessors currently used in almost all personal computers are multi-core.

Scratchpad memory (SPM), also known as scratchpad, scratchpad RAM or local store in computer terminology, is a high-speed internal memory used for temporary storage of calculations, data, and other work in progress. In reference to a microprocessor ("CPU"), scratchpad refers to a special high-speed memory circuit used to hold small items of data for rapid retrieval. It is comparable in use and size to a scratchpad in everyday life: a pad of paper for preliminary notes, sketches, or writings.

No instruction set computing (NISC) is a computing architecture and compiler technology for designing highly efficient custom processors and hardware accelerators by allowing a compiler to have low-level control of hardware resources.

Intel Tera-Scale is a research program by Intel that focuses on the development of Intel processors and platforms that exploit the inherent parallelism of emerging visual-computing applications. Such applications require teraFLOPS of parallel computing performance to process terabytes of data quickly. Parallelism is the concept of performing multiple tasks simultaneously. Exploiting parallelism not only increases the efficiency of central processing units (CPUs), but also increases the amount of data analyzed each second. To apply parallelism effectively, the CPU must be able to handle multiple threads, and to do so it must consist of multiple cores. Consumer-grade computers conventionally have 2–8 cores, while workstation-grade computers can have more. However, even these core counts are not enough to reach teraFLOPS performance, so many more cores must be added. As a result of the program, two prototypes were manufactured to test the feasibility of having many more cores than the conventional number, and both proved successful.

Stream Processors, Inc was a Silicon Valley-based fabless semiconductor company specializing in the design and manufacture of high-performance digital signal processors for applications including video surveillance, multi-function printers and video conferencing. The company ceased operations in 2009.

TriMedia is a family of very long instruction word media processors from NXP Semiconductors. TriMedia is a Harvard architecture CPU that features many DSP and SIMD operations to efficiently process audio and video data streams. On TriMedia processors, optimal performance can be achieved by programming only in C/C++, whereas most other VLIW/DSP processors require assembly language programming to achieve optimal performance. The high-level programmability of TriMedia relies on the large uniform register file and the orthogonal instruction set, in which RISC-like operations can be scheduled independently of each other in the VLIW issue slots. Furthermore, TriMedia processors have advanced caches supporting unaligned accesses without performance penalty, hardware and software data/instruction prefetch, allocate-on-write-miss, and collapsed load operations that combine a traditional load with a two-tap filter function. TriMedia development has been supported by various research studies on hardware cache coherency, multithreading and diverse accelerators to build scalable shared-memory multiprocessor systems.

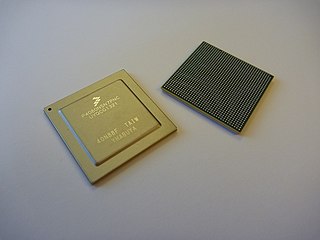

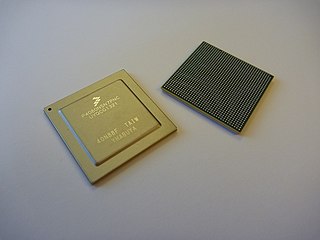

QorIQ is a brand of ARM-based and Power ISA-based communications microprocessors from NXP Semiconductors. It is the evolutionary step from the PowerQUICC platform. Initial products were built around one or more e500mc cores and came in five product platforms, P1, P2, P3, P4 and P5, segmented by performance and functionality. The platform keeps software compatibility with older PowerPC products such as the PowerQUICC platform. In 2012, Freescale announced ARM-based QorIQ offerings beginning in 2013.

The Multicore Association, founded in 2005, is a member-funded, non-profit industry consortium focused on the creation of open standard APIs, specifications, and guidelines that allow system developers and programmers to more readily adopt multicore technology in their applications.

Manycore processors are specialist multi-core processors designed for a high degree of parallel processing, containing a large number of simpler, independent processor cores. Manycore processors are used extensively in embedded computers and high-performance computing. As of November 2018, the world's third-fastest supercomputer, the Chinese Sunway TaihuLight, obtains its performance from 40,960 SW26010 manycore processors, each containing 256 cores.

The Collective Tuning Initiative is a community-driven initiative started by Grigori Fursin to develop free, collaborative, open-source research tools with a unified API for code and architecture characterization, optimization and co-design. This enables the sharing of benchmarks, data sets and optimization cases from the community in the open optimization repository through unified web services to predict better optimizations or architecture designs. Using common research-and-development tools should help to improve the quality and reproducibility of research into code, architecture design and optimization, encouraging innovation in this area. This approach helped establish Artifact Evaluation at several ACM-sponsored conferences to encourage sharing of artifacts and validation of experimental results from accepted papers.

For several years, parallel hardware was available only for distributed computing, but it is now becoming available in low-end computers as well. It has therefore become necessary for software programmers to start writing parallel applications. Programmers naturally tend to think sequentially, so they are less familiar with writing multi-threaded or parallel processing applications. Parallel programming requires handling issues such as synchronization and deadlock avoidance, and writing such applications requires expertise beyond the programmer's knowledge of the application domain. Programmers therefore prefer to write sequential code, which most popular programming languages support, because this allows them to concentrate on the application. Consequently, there is a need to convert such sequential applications to parallel applications with the help of automated tools. The need is also significant because a large amount of legacy code written over the past few decades needs to be reused and parallelized.
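The kind of rewrite such a tool aims for can be shown by hand on the simplest case: a loop whose iterations do not depend on each other. The sketch below is a manual illustration, not the output of any particular parallelizing tool; `work`, `sequential` and `parallel` are hypothetical names, and a thread pool is used only to keep the example self-contained, so it demonstrates the transformation rather than a guaranteed speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List


def work(x: int) -> int:
    """Stand-in for a loop body with no dependence on other iterations."""
    return x * x + 1


def sequential(values: Iterable[int]) -> List[int]:
    """The code as a programmer would naturally write it."""
    results = []
    for v in values:
        results.append(work(v))
    return results


def parallel(values: Iterable[int], workers: int = 4) -> List[int]:
    """The same loop expressed as a parallel map.

    This is valid only because each call to `work` is independent;
    `Executor.map` returns results in input order, so the output matches
    the sequential version.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(work, values))


data = list(range(10))
same = sequential(data) == parallel(data)  # True
```

Proving that iterations are independent is the hard part that an automated tool has to perform; loops that carry values from one iteration to the next cannot be rewritten this directly.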