Transhumanism is a philosophical and intellectual movement that advocates the enhancement of the human condition by developing and making widely available new and future technologies that can greatly enhance longevity, cognition, and well-being.

Max Erik Tegmark is a Swedish-American physicist, machine learning researcher and author. He is best known for his book Life 3.0 about what the world might look like as artificial intelligence continues to improve. Tegmark is a professor at the Massachusetts Institute of Technology and the president of the Future of Life Institute.

David Pearce is a British transhumanist philosopher. He is a co-founder of the World Transhumanist Association, since rebranded and incorporated as Humanity+. Pearce approaches ethical issues from a lexical negative utilitarian perspective.

Nick Bostrom is a philosopher known for his work on existential risk, the anthropic principle, human enhancement ethics, whole brain emulation, superintelligence risks, and the reversal test. He was the founding director of the now-dissolved Future of Humanity Institute at the University of Oxford and is now Principal Researcher at the Macrostrategy Research Initiative.

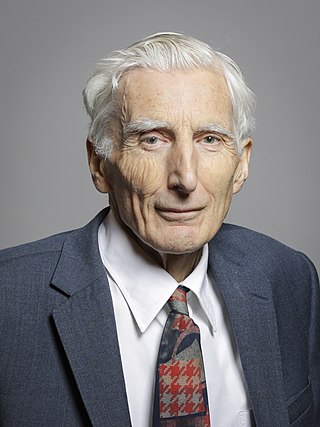

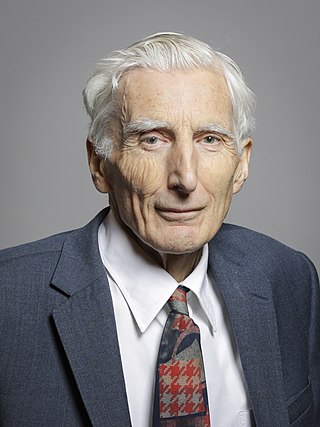

Martin John Rees, Baron Rees of Ludlow, is a British cosmologist and astrophysicist. He is the fifteenth Astronomer Royal, appointed in 1995, and was Master of Trinity College, Cambridge, from 2004 to 2012 and President of the Royal Society between 2005 and 2010. He has received various physics awards including the Wolf Prize in Physics in 2024 for fundamental contributions to high-energy astrophysics, galaxies and structure formation, and cosmology.

An AI takeover is an imagined scenario in which artificial intelligence (AI) emerges as the dominant form of intelligence on Earth and computer programs or robots effectively take control of the planet away from the human species. Possible scenarios include replacement of the entire human workforce due to automation, takeover by a superintelligent AI (ASI), and the notion of a robot uprising. Stories of AI takeovers have been popular throughout science fiction, but recent advancements in AI have made the threat seem more plausible. Some public figures, such as Stephen Hawking and Elon Musk, have advocated research into precautionary measures to ensure future superintelligent machines remain under human control.

Space and survival is the idea that the long-term survival of the human species and technological civilization requires the building of a spacefaring civilization that utilizes the resources of outer space, and that failing to do so might lead to human extinction. A related observation is that the window of opportunity for doing this may be limited, because an ever-growing population will leave fewer surplus resources available over time.

Human extinction is the hypothetical end of the human species, either by population decline due to extraneous natural causes, such as an asteroid impact or large-scale volcanism, or via anthropogenic destruction (self-extinction), for example by sub-replacement fertility.

Anders Sandberg is a Swedish researcher, futurist and transhumanist. He holds a PhD in computational neuroscience from Stockholm University, and is a former senior research fellow at the Future of Humanity Institute at the University of Oxford.

Our Final Hour is a 2003 book by the British Astronomer Royal Sir Martin Rees. The full title of the book is Our Final Hour: A Scientist's Warning: How Terror, Error, and Environmental Disaster Threaten Humankind's Future In This Century—On Earth and Beyond. It was published in the United Kingdom under the title Our Final Century: Will the Human Race Survive the Twenty-first Century?.

The Future of Humanity Institute (FHI) was an interdisciplinary research centre at the University of Oxford investigating big-picture questions about humanity and its prospects. It was founded in 2005 as part of the Faculty of Philosophy and the Oxford Martin School. Its director was philosopher Nick Bostrom, and its research staff included futurist Anders Sandberg and Giving What We Can founder Toby Ord.

A global catastrophic risk or a doomsday scenario is a hypothetical event that could damage human well-being on a global scale, even endangering or destroying modern civilization. An event that could cause human extinction or permanently and drastically curtail humanity's existence or potential is known as an "existential risk".

The Centre for the Study of Existential Risk (CSER) is a research centre at the University of Cambridge, intended to study possible extinction-level threats posed by present or future technology. The co-founders of the centre are Huw Price, Martin Rees and Jaan Tallinn.

Jim Holt is an American journalist, popular-science author, and essayist. He has contributed to The New York Times, The New York Times Magazine, The New York Review of Books, The New Yorker, The American Scholar, and Slate. In 1997 he was editor of The New Leader, a political magazine. His book Why Does the World Exist? was a 2013 New York Times bestseller.

The Future of Life Institute (FLI) is a nonprofit organization which aims to steer transformative technology towards benefiting life and away from large-scale risks, with a focus on existential risk from advanced artificial intelligence (AI). FLI's work includes grantmaking, educational outreach, and advocacy within the United Nations, United States government, and European Union institutions.

Existential risk from AI refers to the idea that substantial progress in artificial general intelligence (AGI) could lead to human extinction or an irreversible global catastrophe.

Brief Answers to the Big Questions is a popular science book written by physicist Stephen Hawking, and published by Hodder & Stoughton (hardcover) and Bantam Books (paperback) on 16 October 2018. The book examines some of the universe's greatest mysteries, and promotes the view that science is very important in helping to solve problems on planet Earth. The publisher describes the book as "a selection of [Hawking's] most profound, accessible, and timely reflections from his personal archive". According to a book reviewer, it is based on "half a million or so words" from his essays, lectures and keynote speeches.

The Precipice: Existential Risk and the Future of Humanity is a 2020 non-fiction book by the Australian philosopher Toby Ord, a senior research fellow at the Future of Humanity Institute in Oxford. It argues that humanity faces unprecedented risks over the next few centuries and examines the moral significance of safeguarding humanity's future.

End Times: A Brief Guide to the End of the World is a 2019 non-fiction book by journalist Bryan Walsh. The book discusses various risks of human extinction, including asteroids, volcanoes, nuclear war, global warming, pathogens, biotech, AI, and extraterrestrial intelligence. It includes interviews with astronomers, anthropologists, biologists, climatologists, geologists, and other scholars, and advocates strongly for greater action against these risks.

Scenarios in which a global catastrophic risk creates harm have been widely discussed. Some sources of catastrophic risk are anthropogenic, such as global warming, environmental degradation, and nuclear war. Others are non-anthropogenic or natural, such as meteor impacts or supervolcanoes. The impact of these scenarios can vary widely, depending on the cause and the severity of the event, ranging from temporary economic disruption to human extinction. Many societal collapses have already happened throughout human history.