Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established by the work of Harry Nyquist and Ralph Hartley in the 1920s and Claude Shannon in the 1940s. A branch of applied mathematics, it lies at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering.

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p\colon \mathcal{X} \to [0, 1]$, the entropy is

$$H(X) = -\sum_{x \in \mathcal{X}} p(x) \log p(x).$$
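The entropy of a small discrete distribution can be computed directly from its definition as the negative sum of p(x) log p(x) over all outcomes. The following is a minimal sketch; the function name `shannon_entropy`, the `base` parameter, and the example distributions are illustrative assumptions rather than part of the definition.

```python
import math


def shannon_entropy(probabilities, base=2.0):
    """Return -sum(p * log(p)) for a discrete probability distribution.

    With base=2.0 the result is in bits (shannons).
    """
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p == 0 are skipped: p * log(p) tends to 0 as p -> 0.
    return -sum(p * math.log(p, base) for p in probabilities if p > 0)


# Hypothetical example distributions.
fair_coin = shannon_entropy([0.5, 0.5])    # maximal uncertainty for two outcomes
biased_coin = shannon_entropy([0.9, 0.1])  # more predictable, so lower entropy
```

A fair coin yields one bit of entropy, while the biased coin yields less than half a bit because its outcome is easier to predict.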

In digital transmission, the number of bit errors is the number of received bits of a data stream over a communication channel that have been altered due to noise, interference, distortion or bit synchronization errors.
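Counting bit errors amounts to comparing the transmitted and received streams position by position. The sketch below assumes both streams are available as equal-length lists of 0s and 1s; the function names and the sample data are hypothetical.

```python
def count_bit_errors(sent, received):
    """Count the positions at which the received bit differs from the sent bit."""
    if len(sent) != len(received):
        raise ValueError("streams must have the same length")
    return sum(1 for s, r in zip(sent, received) if s != r)


def bit_error_rate(sent, received):
    """Return the number of bit errors divided by the number of bits sent."""
    return count_bit_errors(sent, received) / len(sent)


# Hypothetical streams: the bits at index 2 and index 6 were flipped in transit.
sent = [0, 1, 1, 0, 1, 0, 0, 1]
received = [0, 1, 0, 0, 1, 0, 1, 1]

errors = count_bit_errors(sent, received)
ber = bit_error_rate(sent, received)
```

Dividing the error count by the number of transmitted bits gives the bit error rate (BER).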

In information theory, the Shannon–Hartley theorem gives the maximum rate at which information can be transmitted over a communications channel of a specified bandwidth in the presence of noise. It is an application of the noisy-channel coding theorem to the archetypal case of a continuous-time analog communications channel subject to Gaussian noise. The theorem establishes Shannon's channel capacity for such a communication link: a bound on the maximum amount of error-free information per unit time that can be transmitted with a specified bandwidth in the presence of noise, assuming that the signal power is bounded and that the Gaussian noise process is characterized by a known power or power spectral density. The theorem is named after Claude Shannon and Ralph Hartley.
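In its usual form the theorem reads C = B log2(1 + S/N), where C is the capacity in bits per second, B the bandwidth in hertz, and S/N the signal-to-noise power ratio as a linear quantity (not in decibels). The sketch below evaluates that formula; the function names and the 3 kHz, 30 dB example channel are illustrative assumptions.

```python
import math


def db_to_linear(value_db):
    """Convert a power ratio from decibels to a linear ratio."""
    return 10.0 ** (value_db / 10.0)


def channel_capacity(bandwidth_hz, snr_linear):
    """Shannon-Hartley capacity in bits per second: B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


# Hypothetical channel: 3 kHz of bandwidth at a 30 dB signal-to-noise ratio.
capacity = channel_capacity(3000.0, db_to_linear(30.0))
```

For these assumed values the bound comes out just under 30 kbit/s. Doubling the bandwidth doubles the capacity, whereas doubling the signal power adds only about one extra bit per second per hertz at high SNR.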

Rayleigh fading is a statistical model for the effect of a propagation environment on a radio signal, such as that used by wireless devices.

In wireless communications, fading refers to the variation of signal attenuation over variables like time, geographical position, and radio frequency. Fading is often modeled as a random process. In wireless systems, fading may be due to multipath propagation (multipath-induced fading), to weather, or to shadowing from obstacles affecting the wave propagation (sometimes called shadow fading).

A communication channel refers either to a physical transmission medium such as a wire, or to a logical connection over a multiplexed medium such as a radio channel in telecommunications and computer networking. A channel is used for information transfer of, for example, a digital bit stream, from one or several senders to one or several receivers. A channel has a certain capacity for transmitting information, often measured by its bandwidth in Hz or its data rate in bits per second.

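Additive white Gaussian noise (AWGN) is a basic noise model used in information theory to mimic the effect of many random processes that occur in nature. The modifiers denote specific characteristics: the noise is additive because it is added to the signal, white because its power is uniform across the frequency band, and Gaussian because its amplitude follows a normal distribution.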
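A discrete-time sketch of the model: each sample of a clean signal has an independent, zero-mean Gaussian draw added to it. Independence between samples stands in for whiteness here. The function name `add_awgn`, the noise level, and the test signal are assumptions chosen for illustration.

```python
import random


def add_awgn(signal, noise_std, rng):
    """Return the signal with independent zero-mean Gaussian noise added per sample."""
    return [s + rng.gauss(0.0, noise_std) for s in signal]


rng = random.Random(0)        # fixed seed so the run is repeatable
clean = [1.0, -1.0] * 5000    # hypothetical antipodal signal, 10,000 samples
noisy = add_awgn(clean, 0.5, rng)

# Recover the noise and estimate its statistics.
noise = [n - c for n, c in zip(noisy, clean)]
noise_mean = sum(noise) / len(noise)
noise_variance = sum((x - noise_mean) ** 2 for x in noise) / len(noise)
```

With a standard deviation of 0.5, the estimated noise variance should land close to 0.25 and the mean close to zero.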

Channel capacity, in electrical engineering, computer science, and information theory, is the theoretical maximum rate at which information can be reliably transmitted over a communication channel.

Detection theory or signal detection theory is a means to measure the ability to differentiate between information-bearing patterns and random patterns that distract from the information.

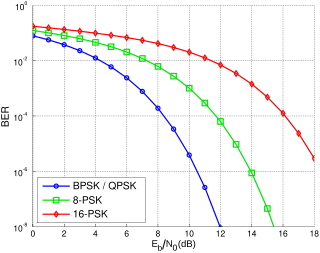

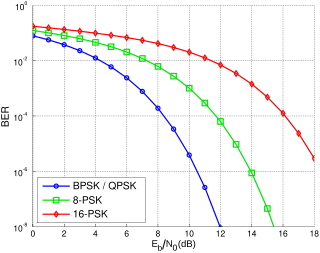

In digital communication or data transmission, $E_b/N_0$ (the ratio of energy per bit to noise power spectral density) is a normalized signal-to-noise ratio (SNR) measure, also known as the "SNR per bit". It is especially useful when comparing the bit error rate (BER) performance of different digital modulation schemes without taking bandwidth into account.
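The energy-per-bit ratio relates to the ordinary SNR through the bit rate and the noise bandwidth: SNR = (Eb/N0) × (Rb/B), where Rb is the bit rate in bits per second and B the bandwidth in hertz. In decibels the product becomes a sum. The sketch below applies that relation; the function name and the example link parameters are hypothetical.

```python
import math


def ebn0_db_to_snr_db(ebn0_db, bit_rate, bandwidth_hz):
    """Convert Eb/N0 in dB to SNR in dB, given bit rate (bit/s) and bandwidth (Hz)."""
    return ebn0_db + 10.0 * math.log10(bit_rate / bandwidth_hz)


# Hypothetical link: Eb/N0 of 10 dB, 2 Mbit/s carried in 1 MHz of bandwidth.
snr_db = ebn0_db_to_snr_db(10.0, 2e6, 1e6)
```

When the bit rate equals the bandwidth, the two measures coincide. Carrying two bits per second per hertz, as in this example, raises the required SNR by about 3 dB for the same energy per bit.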

In information theory, the noisy-channel coding theorem establishes that for any given degree of noise contamination of a communication channel, it is possible to communicate discrete data nearly error-free up to a computable maximum rate through the channel. This result was presented by Claude Shannon in 1948 and was based in part on earlier work and ideas of Harry Nyquist and Ralph Hartley.

In digital communications, shaping codes are a method of encoding that changes the distribution of signals to improve efficiency.

Dynamic Markov compression (DMC) is a lossless data compression algorithm developed by Gordon Cormack and Nigel Horspool. It uses predictive arithmetic coding similar to prediction by partial matching (PPM), except that the input is predicted one bit at a time. DMC has a good compression ratio and moderate speed, similar to PPM, but requires somewhat more memory and is not widely implemented. Implementations include the experimental compression programs hook by Nania Francesco Antonio and ocamyd by Frank Schwellinger, and DMC also appears as a submodel in paq8l by Matt Mahoney. These are based on the 1993 implementation in C by Gordon Cormack.

Zero-forcing precoding is a method of spatial signal processing by which a multiple-antenna transmitter can null the multiuser interference in a multi-user MIMO wireless communication system. When the channel state information is perfectly known at the transmitter, the zero-forcing precoder is given by the pseudo-inverse of the channel matrix. Zero-forcing has been used in LTE mobile networks.

Introduced by John Wozencraft, sequential decoding is a limited-memory technique for decoding tree codes. Sequential decoding is mainly used as an approximate decoding algorithm for long constraint-length convolutional codes. This approach may not be as accurate as the Viterbi algorithm but can save a substantial amount of computer memory. It was used to decode a convolutional code in the 1968 Pioneer 9 mission.

In mathematics and telecommunications, stochastic geometry models of wireless networks refer to mathematical models based on stochastic geometry that are designed to represent aspects of wireless networks. The related research consists of analyzing these models with the aim of better understanding wireless communication networks in order to predict and control various network performance metrics. The models require using techniques from stochastic geometry and related fields including point processes, spatial statistics, geometric probability, percolation theory, as well as methods from more general mathematical disciplines such as geometry, probability theory, stochastic processes, queueing theory, information theory, and Fourier analysis.

In information theory and telecommunication engineering, the signal-to-interference-plus-noise ratio (SINR) is a quantity used to give theoretical upper bounds on channel capacity in wireless communication systems such as wireless networks. Analogous to the signal-to-noise ratio (SNR) often used in wired communications systems, the SINR is defined as the power of a certain signal of interest divided by the sum of the interference power and the power of some background noise. If the noise power is zero, the SINR reduces to the signal-to-interference ratio (SIR). Conversely, zero interference reduces the SINR to the SNR, which is used less often when developing mathematical models of wireless networks such as cellular networks.
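The definition translates directly into a ratio of powers, and the two limiting cases fall out by setting one term of the denominator to zero. A minimal sketch follows; the function name `sinr` and the power values (in watts) are hypothetical.

```python
def sinr(signal_power, interference_powers, noise_power):
    """Signal power divided by (total interference power + noise power).

    All powers must be in the same linear unit, for example watts.
    """
    denominator = sum(interference_powers) + noise_power
    if denominator <= 0:
        raise ValueError("interference plus noise power must be positive")
    return signal_power / denominator


# Hypothetical receiver: one wanted signal, two interferers, background noise.
full_sinr = sinr(1e-9, [2e-10, 1e-10], 1e-10)
sir_only = sinr(1e-9, [2e-10, 1e-10], 0.0)  # zero noise: reduces to the SIR
snr_only = sinr(1e-9, [], 1e-10)            # zero interference: reduces to the SNR
```

Because both terms sit in the same denominator, the SINR can never exceed either the SIR or the SNR of the same link.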

The shannon is a unit of information named after Claude Shannon, the founder of information theory. IEC 80000-13 defines the shannon as the information content associated with an event when the probability of the event occurring is 1/2. It is understood as such within the realm of information theory, and is conceptually distinct from the bit, a term used in data processing and storage to denote a single instance of a binary signal. A sequence of n binary symbols is properly described as consisting of n bits, but the information content of those n symbols may be more or less than n shannons depending on the a priori probability of the actual sequence of symbols.
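The distinction between bits and shannons can be illustrated numerically. An event of probability p carries an information content of -log2(p) shannons, so an event of probability 1/2 carries exactly one. The sketch below assumes a hypothetical memoryless binary source that emits a 1 with probability 0.9; the function names are illustrative.

```python
import math


def information_content_sh(probability):
    """Information content, in shannons, of an event with the given probability."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(probability)


def sequence_information_sh(bits, p_one):
    """Total information of independently drawn binary symbols, in shannons."""
    return sum(
        information_content_sh(p_one if b == 1 else 1.0 - p_one) for b in bits
    )


one_shannon = information_content_sh(0.5)

# Both sequences are 4 bits long, yet they carry different amounts of information.
likely = sequence_information_sh([1, 1, 1, 1], p_one=0.9)
unlikely = sequence_information_sh([0, 0, 0, 0], p_one=0.9)
```

Under this assumed source the likely sequence carries well under 4 shannons and the unlikely one carries well over 4, even though each consists of exactly 4 bits.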

In radio propagation, two-wave with diffuse power (TWDP) fading is a model that explains why a signal strengthens or weakens at certain locations or times. TWDP models fading due to the interference of two strong radio signals and numerous smaller, diffuse signals.