Related Research Articles

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. It does not assume or postulate any natural laws, but explains the macroscopic behavior of nature from the behavior of such ensembles.

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution.
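
As a minimal sketch of the numerical-integration use case, the snippet below estimates a one-dimensional integral by averaging the integrand at uniformly drawn points. The function name `mc_integrate`, the integrand, the sample count, and the seed are illustrative assumptions, not part of any particular library.

```python
import random

def mc_integrate(f, a, b, n_samples=100_000, seed=0):
    """Estimate the integral of f over [a, b] from uniform random samples."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    total = 0.0
    for _ in range(n_samples):
        x = rng.uniform(a, b)  # draw a point uniformly in [a, b]
        total += f(x)
    # The mean of f times the interval length approximates the integral.
    return (b - a) * total / n_samples

# Hypothetical example: the integral of x^2 over [0, 1] is exactly 1/3.
estimate = mc_integrate(lambda x: x * x, 0.0, 1.0)
print(estimate)
```

The statistical error of such an estimate shrinks roughly as the inverse square root of the number of samples.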

In physics, specifically statistical mechanics, an ensemble is an idealization consisting of a large number of virtual copies of a system, considered all at once, each of which represents a possible state that the real system might be in. In other words, a statistical ensemble is a set of systems of particles used in statistical mechanics to describe a single system. The concept of an ensemble was introduced by J. Willard Gibbs in 1902.

Simulated annealing (SA) is a probabilistic technique for approximating the global optimum of a given function. Specifically, it is a metaheuristic to approximate global optimization in a large search space for an optimization problem. When the objective has many local optima, SA can find the global optimum. It is often used when the search space is discrete. For problems where finding an approximate global optimum in a fixed amount of time is more important than finding a precise local optimum, simulated annealing may be preferable to algorithms such as gradient descent or branch and bound.
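
The idea can be sketched on a small discrete search space. The example below is one possible variant under stated assumptions: a geometric cooling schedule, nearest-neighbour moves on the integers, and a made-up objective `rugged` with several local minima. None of these choices come from the text above.

```python
import math
import random

def simulated_annealing(energy, start, lo, hi, n_steps=20_000,
                        t_start=10.0, cooling=0.9995, seed=0):
    """Minimise energy over the integers in [lo, hi]; return the best state seen."""
    rng = random.Random(seed)
    x = start
    e = energy(x)
    best_x, best_e = x, e
    t = t_start
    for _ in range(n_steps):
        # Propose a neighbouring integer, clamped to the search interval.
        cand = min(hi, max(lo, x + rng.choice((-1, 1))))
        e_cand = energy(cand)
        delta = e_cand - e
        # Always accept improvements; accept a worse state with
        # probability exp(-delta / t), which shrinks as t cools.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, e = cand, e_cand
            if e < best_e:
                best_x, best_e = x, e
        t *= cooling  # geometric cooling schedule (an assumption)
    return best_x, best_e

def rugged(x):
    # Hypothetical objective: a parabola with a superimposed oscillation.
    return 0.05 * (x - 20) ** 2 + 3.0 * math.cos(x)

best_x, best_e = simulated_annealing(rugged, start=-40, lo=-50, hi=50)
print(best_x, best_e)
```

Early on, the high temperature lets the walker cross barriers between local minima; as the temperature falls, the search becomes increasingly greedy.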

In statistics, Markov chain Monte Carlo (MCMC) methods comprise a class of algorithms for sampling from a probability distribution. By constructing a Markov chain that has the desired distribution as its equilibrium distribution, one can obtain a sample of the desired distribution by recording states from the chain. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Various algorithms exist for constructing chains, including the Metropolis–Hastings algorithm.
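
A minimal random-walk Metropolis sketch illustrates the construction. It assumes a symmetric uniform proposal and an unnormalised standard normal target; the function name `metropolis` and all parameter values are illustrative choices.

```python
import math
import random

def metropolis(log_density, x0, n_steps, step=2.0, seed=0):
    """Random-walk Metropolis chain targeting exp(log_density)."""
    rng = random.Random(seed)
    x = x0
    log_p = log_density(x)
    chain = []
    for _ in range(n_steps):
        cand = x + rng.uniform(-step, step)  # symmetric proposal
        log_p_cand = log_density(cand)
        # Accept with probability min(1, p(cand) / p(x)).
        if rng.random() < math.exp(min(0.0, log_p_cand - log_p)):
            x, log_p = cand, log_p_cand
        chain.append(x)  # a rejected move repeats the current state
    return chain

# Hypothetical target: standard normal, known only up to a constant.
chain = metropolis(lambda x: -0.5 * x * x, x0=0.0, n_steps=50_000)
mean = sum(chain) / len(chain)
var = sum((x - mean) ** 2 for x in chain) / len(chain)
print(mean, var)
```

Only ratios of the target density enter the acceptance step, so the normalising constant never needs to be known.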

The fluctuation theorem (FT), which originated from statistical mechanics, deals with the relative probability that the entropy of a system which is currently away from thermodynamic equilibrium will increase or decrease over a given amount of time. While the second law of thermodynamics predicts that the entropy of an isolated system should tend to increase until it reaches equilibrium, it became apparent after the discovery of statistical mechanics that the second law is only a statistical one, suggesting that there should always be some nonzero probability that the entropy of an isolated system might spontaneously decrease; the fluctuation theorem precisely quantifies this probability.

Global optimization is a branch of applied mathematics and numerical analysis that attempts to find the global minima or maxima of a function or a set of functions on a given set. It is usually described as a minimization problem because the maximization of a real-valued function $g(x)$ is equivalent to the minimization of the function $f(x) = -g(x)$.

Importance sampling is a Monte Carlo method for evaluating properties of a particular distribution, while only having samples generated from a different distribution than the distribution of interest. Its introduction in statistics is generally attributed to a paper by Teun Kloek and Herman K. van Dijk in 1978, but its precursors can be found in statistical physics as early as 1949. Importance sampling is also related to umbrella sampling in computational physics. Depending on the application, the term may refer to the process of sampling from this alternative distribution, the process of inference, or both.
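
To illustrate why this matters for rare events, the sketch below estimates the tail probability P(X > 4) of a standard normal variable by sampling from a normal distribution shifted to the threshold and reweighting each sample by the density ratio. The shifted proposal and the name `tail_probability_is` are assumptions made for the example.

```python
import math
import random

def tail_probability_is(threshold, n_samples=100_000, seed=0):
    """Estimate P(X > threshold) for X ~ N(0, 1) by importance sampling."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        # Sample from the proposal q = N(threshold, 1), not from the target.
        y = rng.gauss(threshold, 1.0)
        if y > threshold:
            # Weight p(y) / q(y) = exp(-threshold * y + threshold^2 / 2).
            total += math.exp(-threshold * y + 0.5 * threshold ** 2)
    return total / n_samples

estimate = tail_probability_is(4.0)
exact = 0.5 * math.erfc(4.0 / math.sqrt(2.0))  # closed form for comparison
print(estimate, exact)
```

Direct sampling from the standard normal would see only a handful of exceedances in 100,000 draws, whereas about half of the proposal samples land in the region of interest.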

In physics, maximum entropy thermodynamics views equilibrium thermodynamics and statistical mechanics as inference processes. More specifically, MaxEnt applies inference techniques rooted in Shannon information theory, Bayesian probability, and the principle of maximum entropy. These techniques are relevant to any situation requiring prediction from incomplete or insufficient data. MaxEnt thermodynamics began with two papers by Edwin T. Jaynes published in the 1957 Physical Review.

Path integral Monte Carlo (PIMC) is a quantum Monte Carlo method used to solve quantum statistical mechanics problems numerically within the path integral formulation. The application of Monte Carlo methods to path integral simulations of condensed matter systems was first pursued in a key paper by John A. Barker.

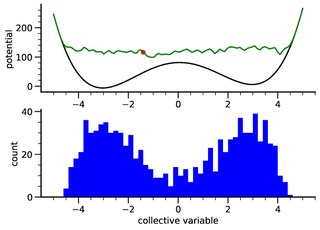

Umbrella sampling is a technique in computational physics and chemistry, used to improve sampling of a system where ergodicity is hindered by the form of the system's energy landscape. It was first suggested by Torrie and Valleau in 1977. It is a particular physical application of the more general importance sampling in statistics.

Thermodynamic integration is a method used to compare the difference in free energy between two given states whose potential energies $U_A$ and $U_B$ have different dependences on the spatial coordinates. Because the free energy of a system is not simply a function of the phase space coordinates of the system, but is instead a function of the Boltzmann-weighted integral over phase space, the free energy difference between two states cannot be calculated directly from the potential energy of just two coordinate sets. In thermodynamic integration, the free energy difference is calculated by defining a thermodynamic path between the states and integrating over ensemble-averaged enthalpy changes along the path. Such paths can either be real chemical processes or alchemical processes. An example of an alchemical process is Kirkwood's coupling parameter method.
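
As a worked form of this idea, assume the two states have potential energies $U_A$ and $U_B$ and are joined by a linear coupling path $U(\lambda) = (1 - \lambda)\,U_A + \lambda\,U_B$ with $\lambda \in [0, 1]$. The linear path is an illustrative assumption; other paths are possible. The free energy difference is then commonly written as

$$
\Delta F = F_B - F_A = \int_0^1 \left\langle \frac{\partial U(\lambda)}{\partial \lambda} \right\rangle_{\lambda} \, d\lambda = \int_0^1 \left\langle U_B - U_A \right\rangle_{\lambda} \, d\lambda ,
$$

where $\langle \cdot \rangle_{\lambda}$ denotes the ensemble average taken with the potential $U(\lambda)$. In practice the integrand is sampled at a set of $\lambda$ values and the integral is evaluated by numerical quadrature.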

Reuven Rubinstein (1938–2012) (Hebrew: ראובן רובינשטיין) was an Israeli scientist known for his contributions to Monte Carlo simulation, applied probability, stochastic modeling and stochastic optimization, having authored more than one hundred papers and six books.

Transition path sampling (TPS) is a rare-event sampling method used in computer simulations of rare events: physical or chemical transitions of a system from one stable state to another that occur too rarely to be observed on a computer timescale. Examples include protein folding, chemical reactions and nucleation. Standard simulation tools such as molecular dynamics can generate the dynamical trajectories of all the atoms in the system. However, because of the gap in accessible time-scales between simulation and reality, even present supercomputers might require years of simulations to show an event that occurs once per millisecond without some kind of acceleration.

For robot control, stochastic roadmap simulation is inspired by probabilistic roadmap methods (PRM) developed for robot motion planning.

Metadynamics (MTD) is a computer simulation method in computational physics, chemistry and biology. It is used to estimate the free energy and other state functions of a system, where ergodicity is hindered by the form of the system's energy landscape. It was first suggested by Alessandro Laio and Michele Parrinello in 2002 and is usually applied within molecular dynamics simulations. MTD closely resembles a number of newer methods such as adaptively biased molecular dynamics, adaptive reaction coordinate forces and local elevation umbrella sampling. More recently, both the original and well-tempered metadynamics were derived in the context of importance sampling and shown to be a special case of the adaptive biasing potential setting. MTD is related to Wang–Landau sampling.

Stochastic-process rare event sampling (SPRES) is a rare-event sampling method in computer simulation, designed specifically for non-equilibrium calculations, including those for which the rare-event rates are time-dependent. To treat systems in which there is time dependence in the dynamics, due either to variation of an external parameter or to evolution of the system itself, the scheme for branching paths must be devised so as to achieve sampling which is distributed evenly in time and which takes account of changing fluxes through different regions of the phase space.

Subset simulation is a method used in reliability engineering to compute small failure probabilities encountered in engineering systems. The basic idea is to express a small failure probability as a product of larger conditional probabilities by introducing intermediate failure events. This conceptually converts the original rare-event problem into a series of frequent-event problems that are easier to solve. In the actual implementation, samples conditional on intermediate failure events are adaptively generated to gradually populate from the frequent to rare event region. These 'conditional samples' provide information for estimating the complementary cumulative distribution function (CCDF) of the quantity of interest, covering the high as well as the low probability regions. They can also be used for investigating the cause and consequence of failure events. The generation of conditional samples is not trivial but can be performed efficiently using Markov chain Monte Carlo (MCMC).
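
The product-of-conditional-probabilities idea can be sketched in one dimension. The example below is a simplified illustration under stated assumptions: a standard normal input, a fixed conditional probability `p0` per level, and a basic Metropolis step restricted to the current intermediate failure region. The function name and all parameter values are hypothetical.

```python
import math
import random

def subset_simulation(g, threshold, n=2000, p0=0.1, step=1.0,
                      seed=0, max_levels=20):
    """Estimate P(g(X) > threshold) for X ~ N(0, 1) by subset simulation."""
    rng = random.Random(seed)
    n_seeds = round(p0 * n)       # samples kept as seeds at each level
    chain_len = n // n_seeds      # chain length grown from each seed
    samples = [rng.gauss(0.0, 1.0) for _ in range(n)]
    prob = 1.0
    for _level in range(max_levels):
        samples.sort(key=g, reverse=True)
        # Intermediate threshold: the top n_seeds samples satisfy g >= level.
        level = g(samples[n_seeds - 1])
        if level >= threshold:
            break                 # the final failure region is reached
        prob *= n_seeds / n       # conditional probability of this level
        new_samples = []
        for x in samples[:n_seeds]:
            for _step in range(chain_len):
                cand = x + rng.uniform(-step, step)
                # Metropolis ratio for the standard normal density.
                accept = math.exp(min(0.0, 0.5 * (x * x - cand * cand)))
                # Reject any candidate that leaves the intermediate region.
                if g(cand) >= level and rng.random() < accept:
                    x = cand
                new_samples.append(x)
        samples = new_samples
    hits = sum(1 for x in samples if g(x) > threshold)
    return prob * hits / n

estimate = subset_simulation(lambda x: x, threshold=4.0)
exact = 0.5 * math.erfc(4.0 / math.sqrt(2.0))  # closed form for comparison
print(estimate, exact)
```

Each level only has to resolve a probability of about `p0`, so a target near 3e-5 is reached with a few thousand samples per level instead of the millions a direct Monte Carlo estimate would need.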

Rosalind Jane Allen is a soft matter physicist and Professor of Theoretical Microbial Ecology at the Friedrich-Schiller University of Jena, Germany, and (part-time) Professor of Biological Physics at the University of Edinburgh, Scotland. She is a member of the centre for synthetic biology and systems biology, where her research investigates the organisation of microbe populations.

Dirk Pieter Kroese is a Dutch-Australian mathematician and statistician, and Professor at the University of Queensland. He is known for several contributions to applied probability, kernel density estimation, Monte Carlo methods and rare event simulation. He is, with Reuven Rubinstein, a pioneer of the Cross-Entropy (CE) method.
