In mathematical physics and mathematics, the Pauli matrices are a set of three 2 × 2 complex matrices which are Hermitian, involutory and unitary. Usually indicated by the Greek letter sigma, they are occasionally denoted by tau when used in connection with isospin symmetries.

Controllability is an important property of a control system and plays a crucial role in many control problems, such as stabilization of unstable systems by feedback, or optimal control.

In linear algebra, the singular value decomposition (SVD) is a factorization of a real or complex matrix. It generalizes the eigendecomposition of a square normal matrix with an orthonormal eigenbasis to any matrix. It is related to the polar decomposition.

Ray transfer matrix analysis is a mathematical form for performing ray tracing calculations in sufficiently simple problems which can be solved considering only paraxial rays. Each optical element is described by a 2×2 ray transfer matrix which operates on a vector describing an incoming light ray to calculate the outgoing ray. Multiplication of the successive matrices thus yields a concise ray transfer matrix describing the entire optical system. The same mathematics is also used in accelerator physics to track particles through the magnet installations of a particle accelerator, see electron optics.

In mathematics, particularly in linear algebra, a skew-symmetricmatrix is a square matrix whose transpose equals its negative. That is, it satisfies the condition

In probability theory and statistics, a covariance matrix is a square matrix giving the covariance between each pair of elements of a given random vector.

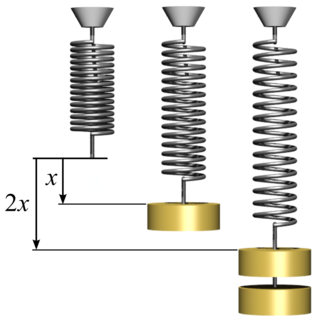

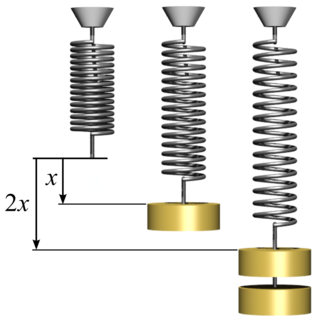

In physics, Hooke's law is an empirical law which states that the force needed to extend or compress a spring by some distance scales linearly with respect to that distance—that is, Fs = kx, where k is a constant factor characteristic of the spring, and x is small compared to the total possible deformation of the spring. The law is named after 17th-century British physicist Robert Hooke. He first stated the law in 1676 as a Latin anagram. He published the solution of his anagram in 1678 as: ut tensio, sic vis. Hooke states in the 1678 work that he was aware of the law since 1660.

In quantum field theory, the Dirac spinor is the spinor that describes all known fundamental particles that are fermions, with the possible exception of neutrinos. It appears in the plane-wave solution to the Dirac equation, and is a certain combination of two Weyl spinors, specifically, a bispinor that transforms "spinorially" under the action of the Lorentz group.

In control systems, sliding mode control (SMC) is a nonlinear control method that alters the dynamics of a nonlinear system by applying a discontinuous control signal that forces the system to "slide" along a cross-section of the system's normal behavior. The state-feedback control law is not a continuous function of time. Instead, it can switch from one continuous structure to another based on the current position in the state space. Hence, sliding mode control is a variable structure control method. The multiple control structures are designed so that trajectories always move toward an adjacent region with a different control structure, and so the ultimate trajectory will not exist entirely within one control structure. Instead, it will slide along the boundaries of the control structures. The motion of the system as it slides along these boundaries is called a sliding mode and the geometrical locus consisting of the boundaries is called the sliding (hyper)surface. In the context of modern control theory, any variable structure system, like a system under SMC, may be viewed as a special case of a hybrid dynamical system as the system both flows through a continuous state space but also moves through different discrete control modes.

In linear algebra and functional analysis, a projection is a linear transformation from a vector space to itself such that . That is, whenever is applied twice to any vector, it gives the same result as if it were applied once. It leaves its image unchanged. This definition of "projection" formalizes and generalizes the idea of graphical projection. One can also consider the effect of a projection on a geometrical object by examining the effect of the projection on points in the object.

In control engineering and system identification, a state-space representation is a mathematical model of a physical system specified as a set of input, output and variables related by first-order differential equations or difference equations. Such variables, called state variables, evolve over time in a way that depends on the values they have at any given instant and on the externally imposed values of input variables. Output variables’ values depend on the values of the state variables and may also depend on the values of the input variables.

In mathematics, the matrix exponential is a matrix function on square matrices analogous to the ordinary exponential function. It is used to solve systems of linear differential equations. In the theory of Lie groups, the matrix exponential gives the exponential map between a matrix Lie algebra and the corresponding Lie group.

In applied statistics, total least squares is a type of errors-in-variables regression, a least squares data modeling technique in which observational errors on both dependent and independent variables are taken into account. It is a generalization of Deming regression and also of orthogonal regression, and can be applied to both linear and non-linear models.

In Hamiltonian mechanics, the linear canonical transformation (LCT) is a family of integral transforms that generalizes many classical transforms. It has 4 parameters and 1 constraint, so it is a 3-dimensional family, and can be visualized as the action of the special linear group SL2(R) on the time–frequency plane (domain). As this defines the original function up to a sign, this translates into an action of its double cover on the original function space.

Full state feedback (FSF), or pole placement, is a method employed in feedback control system theory to place the closed-loop poles of a plant in pre-determined locations in the s-plane. Placing poles is desirable because the location of the poles corresponds directly to the eigenvalues of the system, which control the characteristics of the response of the system. The system must be considered controllable in order to implement this method.

In statistics, Bayesian multivariate linear regression is a Bayesian approach to multivariate linear regression, i.e. linear regression where the predicted outcome is a vector of correlated random variables rather than a single scalar random variable. A more general treatment of this approach can be found in the article MMSE estimator.

Stokes' theorem, also known as the Kelvin–Stokes theorem after Lord Kelvin and George Stokes, the fundamental theorem for curls or simply the curl theorem, is a theorem in vector calculus on . Given a vector field, the theorem relates the integral of the curl of the vector field over some surface, to the line integral of the vector field around the boundary of the surface. The classical theorem of Stokes can be stated in one sentence: The line integral of a vector field over a loop is equal to its curl through the enclosed surface. It is illustrated in the figure, where the direction of positive circulation of the bounding contour ∂Σ, and the direction n of positive flux through the surface Σ, are related by a right-hand-rule. For the right hand the fingers circulate along ∂Σand the thumb is directed along n.

Kernel methods are a well-established tool to analyze the relationship between input data and the corresponding output of a function. Kernels encapsulate the properties of functions in a computationally efficient way and allow algorithms to easily swap functions of varying complexity.

In statistics, machine learning and algorithms, a tensor sketch is a type of dimensionality reduction that is particularly efficient when applied to vectors that have tensor structure. Such a sketch can be used to speed up explicit kernel methods, bilinear pooling in neural networks and is a cornerstone in many numerical linear algebra algorithms.

In mathematics, the Khatri–Rao product of matrices is defined as