Related Research Articles

A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, supercomputers have existed that can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.

In computational intelligence (CI), an evolutionary algorithm (EA) is a subset of evolutionary computation, a generic population-based metaheuristic optimization algorithm. An EA uses mechanisms inspired by biological evolution, such as reproduction, mutation, recombination, and selection. Candidate solutions to the optimization problem play the role of individuals in a population, and the fitness function determines the quality of the solutions. Evolution of the population then takes place after the repeated application of the above operators.
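The loop described above (selection, recombination, mutation, repeated over generations) can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the OneMax fitness function (count of 1-bits), binary tournament selection, one-point crossover, single-elite survival, and all parameter values are assumptions chosen for brevity.

```python
import random


def one_max(bits):
    """Fitness function (assumed for illustration): number of 1-bits."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=60, mutation_rate=0.05, seed=0):
    """Run a small evolutionary algorithm and return (best individual, history)."""
    rng = random.Random(seed)
    # Each individual is a candidate solution encoded as a list of bits.
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    history = []  # best fitness after each generation

    def pick(pop):
        # Selection: binary tournament, the fitter of two random individuals wins.
        a, b = rng.sample(pop, 2)
        return a if one_max(a) >= one_max(b) else b

    for _ in range(generations):
        offspring = []
        while len(offspring) < pop_size - 1:
            p1, p2 = pick(population), pick(population)
            # Recombination: one-point crossover.
            cut = rng.randrange(1, n_bits)
            child = p1[:cut] + p2[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            offspring.append(child)
        # Elitism: carry the current best individual over unchanged.
        elite = max(population, key=one_max)
        population = [elite[:]] + offspring
        history.append(max(one_max(ind) for ind in population))

    return max(population, key=one_max), history


if __name__ == "__main__":
    best, history = evolve()
    print("best fitness:", one_max(best))
```

Because the best individual is always copied into the next generation, the best fitness recorded in `history` never decreases; other survival schemes trade this guarantee for more diversity.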

In information science, formal concept analysis (FCA) is a principled way of deriving a concept hierarchy or formal ontology from a collection of objects and their properties. Each concept in the hierarchy represents the objects sharing some set of properties; and each sub-concept in the hierarchy represents a subset of the objects in the concepts above it. The term was introduced by Rudolf Wille in 1981, and builds on the mathematical theory of lattices and ordered sets that was developed by Garrett Birkhoff and others in the 1930s.
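As a rough sketch of how such a hierarchy is derived, the following code enumerates all formal concepts of a tiny object/property table by brute force. The three-animal context and the helper names `extent`, `intent`, and `concepts` are hypothetical, chosen only for illustration; practical FCA tools use far more efficient algorithms.

```python
from itertools import combinations

# Hypothetical formal context: each object maps to the set of its properties.
context = {
    "duck": {"flies", "swims"},
    "eagle": {"flies", "hunts"},
    "shark": {"swims", "hunts"},
}


def extent(attrs, ctx):
    """All objects that have every property in attrs."""
    return frozenset(obj for obj, props in ctx.items() if attrs <= props)


def intent(objs, ctx):
    """All properties shared by every object in objs."""
    all_attrs = set().union(*ctx.values())
    return frozenset(a for a in all_attrs if all(a in ctx[o] for o in objs))


def concepts(ctx):
    """Enumerate formal concepts as (extent, intent) pairs.

    Brute force over all property subsets: exponential, fine only for toy data.
    """
    all_attrs = sorted(set().union(*ctx.values()))
    found = set()
    for size in range(len(all_attrs) + 1):
        for subset in combinations(all_attrs, size):
            ext = extent(set(subset), ctx)
            found.add((ext, intent(ext, ctx)))
    return found


if __name__ == "__main__":
    # Print concepts from the largest extent (top) to the smallest (bottom).
    for ext, itt in sorted(concepts(context), key=lambda c: -len(c[0])):
        print(sorted(ext), sorted(itt))
```

Ordering the resulting pairs by inclusion of their extents gives the concept hierarchy: for example, the concept with extent `{duck}` sits below the concept with extent `{duck, eagle}`.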

OpenMP is an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran, on many platforms, instruction-set architectures and operating systems, including Solaris, AIX, FreeBSD, HP-UX, Linux, macOS, and Windows. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior.

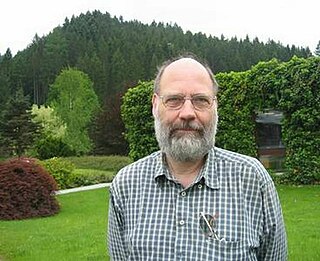

Jack Joseph Dongarra is an American computer scientist and mathematician. He is the American University Distinguished Professor of Computer Science in the Electrical Engineering and Computer Science Department at the University of Tennessee. He is a Distinguished Research Staff member in the Computer Science and Mathematics Division at Oak Ridge National Laboratory, holds a Turing Fellowship in the School of Mathematics at the University of Manchester, and is an adjunct professor and teacher in the Computer Science Department at Rice University. He served as a faculty fellow at the Texas A&M University Institute for Advanced Study (2014–2018). Dongarra is the founding director of the Innovative Computing Laboratory at the University of Tennessee. He received the Turing Award in 2021.

In software engineering, profiling is a form of dynamic program analysis that measures, for example, the space (memory) or time complexity of a program, the usage of particular instructions, or the frequency and duration of function calls. Most commonly, profiling information serves to aid program optimization, and more specifically, performance engineering.
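To make the idea concrete, here is a small sketch that measures the frequency and duration of function calls using Python's standard `cProfile` and `pstats` modules. The functions `slow_sum`, `workload`, and `profile_workload` are hypothetical stand-ins for a real program, and the sketch is unrelated to the HPC profiling tools cited in the references.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Deliberately naive loop so that it shows up in the profile."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def workload():
    """Hypothetical program under test: calls slow_sum 50 times."""
    return [slow_sum(20_000) for _ in range(50)]


def profile_workload():
    """Run workload() under the profiler and return the report as text."""
    profiler = cProfile.Profile()
    profiler.runcall(workload)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time so the most expensive call chains come first.
    stats.sort_stats("cumulative").print_stats(5)
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_workload())
```

The report lists, per function, the number of calls and the time spent, which is the kind of information typically used to decide where optimization effort should go.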

In computer programming, genetic representation is a way of presenting solutions/individuals in evolutionary computation methods. The term encompasses both the concrete data structures and data types used to realize the genetic material of the candidate solutions in the form of a genome, and the relationships between search space and problem space. In the simplest case, the search space corresponds to the problem space. The choice of problem representation is tied to the choice of genetic operators, both of which have a decisive effect on the efficiency of the optimization. Genetic representation can encode the appearance, behavior, and physical qualities of individuals. Differences in genetic representation are one of the major criteria that distinguish the known classes of evolutionary computation.

Aircrack-ng is a network software suite consisting of a detector, packet sniffer, WEP and WPA/WPA2-PSK cracker and analysis tool for 802.11 wireless LANs. It works with any wireless network interface controller whose driver supports raw monitoring mode and can sniff 802.11a, 802.11b and 802.11g traffic. Packages are released for Linux and Windows.

Chapel, the Cascade High Productivity Language, is a parallel programming language that was developed by Cray, and later by Hewlett Packard Enterprise which acquired Cray. It was being developed as part of the Cray Cascade project, a participant in DARPA's High Productivity Computing Systems (HPCS) program, which had the goal of increasing supercomputer productivity by 2010. It is being developed as an open source project, under version 2 of the Apache license.

Özalp Babaoğlu is a Turkish computer scientist. He is currently professor of computer science at the University of Bologna, Italy. He received a Ph.D. in 1981 from the University of California at Berkeley. He is the recipient of the 1982 Sakrison Memorial Award, the 1989 UNIX International Recognition Award and the 1993 USENIX Association Lifetime Achievement Award for his contributions to the UNIX system community and to Open Industry Standards. Before moving to Bologna in 1988, Babaoğlu was an associate professor in the Department of Computer Science at Cornell University. He has participated in several European research projects in distributed computing and complex systems. Babaoğlu is an ACM Fellow and has served as a resident fellow of the Institute of Advanced Studies at the University of Bologna and on the editorial boards for ACM Transactions on Computer Systems, ACM Transactions on Autonomous and Adaptive Systems and Springer-Verlag Distributed Computing.

Lis is a scalable parallel software library for solving discretized linear equations and eigenvalue problems that mainly arise in the numerical solution of partial differential equations by using iterative methods. Although it is designed for parallel computers, the library can be used without being conscious of parallel processing.

HPX, short for High Performance ParalleX, is a runtime system for high-performance computing. It is currently under active development by the STE||AR group at Louisiana State University. Focused on scientific computing, it provides an alternative execution model to conventional approaches such as MPI. HPX aims to overcome the challenges MPI faces with increasingly large supercomputers by using asynchronous communication between nodes and lightweight control objects instead of global barriers, allowing application developers to exploit fine-grained parallelism.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software.

Wolfgang Hackbusch is a German mathematician, known for his pioneering research in multigrid methods and later hierarchical matrices, a concept generalizing the fast multipole method. He was a professor at the University of Kiel and is currently one of the directors of the Max Planck Institute for Mathematics in the Sciences in Leipzig.

The Slurm Workload Manager, formerly known as Simple Linux Utility for Resource Management (SLURM), or simply Slurm, is a free and open-source job scheduler for Linux and Unix-like kernels, used by many of the world's supercomputers and computer clusters.

Tachyon is a parallel/multiprocessor ray tracing software. It is a parallel ray tracing library for use on distributed memory parallel computers, shared memory computers, and clusters of workstations. Tachyon implements rendering features such as ambient occlusion lighting, depth-of-field focal blur, shadows, reflections, and others. It was originally developed for the Intel iPSC/860 by John Stone for his M.S. thesis at the University of Missouri-Rolla. Tachyon subsequently became a more functional and complete ray tracing engine, and it is now incorporated into a number of other open source software packages such as VMD and SageMath. Tachyon is released under a permissive license.

Approaches to supercomputer architecture have taken dramatic turns since the earliest systems were introduced in the 1960s. Early supercomputer architectures pioneered by Seymour Cray relied on compact innovative designs and local parallelism to achieve superior computational peak performance. However, in time the demand for increased computational power ushered in the age of massively parallel systems.

The International Conference on Service Oriented Computing, or ICSOC for short, is an annual conference providing an outstanding forum for academics, industry researchers, developers, and practitioners to report and share groundbreaking work in service-oriented computing. ICSOC has an 'A' rating from the Excellence in Research for Australia (ERA). Calls for Papers are regularly published on WikiCFP and on the conference website. The conference is also listed in Elsevier's Global Events List.

OpenLB is an object-oriented implementation of the lattice Boltzmann methods (LBM). It is the first implementation of a generic platform for LBM programming, which is shared with the open source community (GPLv2). The code is written in C++ and is used by application programmers as well as developers, with the ability to implement custom models. OpenLB supports complex data structures that allow simulations in complex geometries and parallel execution using MPI, OpenMP and CUDA on high-performance computers. The source code uses the concepts of interfaces and templates, so that efficient, direct and intuitive implementations of the LBM become possible. The efficiency and scalability have been checked and proved by code reviews. A user manual and source code documentation generated with Doxygen are available on the project page.

Hartmut Ehrig was a German computer scientist and professor of theoretical computer science and formal specification. He was a pioneer in algebraic specification of abstract data types, and in graph grammars.

References

- Geimer, Markus; et al. (25 April 2010). "The Scalasca performance toolset architecture". Concurrency and Computation: Practice and Experience. 22 (6): 702–719. CiteSeerX 10.1.1.183.3213. doi:10.1002/cpe.1556. S2CID 14248376. Retrieved 29 June 2016.

- "About". www.scalasca.org. Retrieved 2020-11-14.

- Knüpfer, Andreas; Rössel, Christian; Mey, Dieter an; Biersdorff, Scott; Diethelm, Kai; Eschweiler, Dominic; Geimer, Markus; Gerndt, Michael; Lorenz, Daniel (2012). "Score-P: A Joint Performance Measurement Run-Time Infrastructure for Periscope, Scalasca, TAU, and Vampir" (PDF). In Brunst, Holger; Müller, Matthias S.; Nagel, Wolfgang E.; Resch, Michael M. (eds.). Tools for High Performance Computing 2011. Berlin, Heidelberg: Springer. pp. 79–91. doi:10.1007/978-3-642-31476-6_7. ISBN 978-3-642-31476-6. S2CID 18004916.

- Wolf, Felix; Wylie, Brian J. N.; Ábrahám, Erika; Becker, Daniel; Frings, Wolfgang; Fürlinger, Karl; Geimer, Markus; Hermanns, Marc-André; Mohr, Bernd (2008). "Usage of the SCALASCA toolset for scalable performance analysis of large-scale parallel applications". In Resch, Michael; Keller, Rainer; Himmler, Valentin; Krammer, Bettina; Schulz, Alexander (eds.). Tools for High Performance Computing. Berlin, Heidelberg: Springer. pp. 157–167. doi:10.1007/978-3-540-68564-7_10. ISBN 978-3-540-68564-7.

- "Scalable performance analysis of large-scale parallel applications" (PDF). Retrieved 2020-11-14.

- "Performance Analysis with Scalasca" (PDF). Retrieved 2020-11-14.