Related Research Articles

Econometrics is an application of statistical methods to economic data in order to give empirical content to economic relationships. More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference". An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships". Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used today.

Simultaneous equations models are a type of statistical model in which the dependent variables are functions of other dependent variables, rather than just independent variables. This means some of the explanatory variables are jointly determined with the dependent variable, which in economics usually is the consequence of some underlying equilibrium mechanism. Take the typical supply and demand model: whilst typically one would determine the quantity supplied and demanded to be a function of the price set by the market, it is also possible for the reverse to be true, where producers observe the quantity that consumers demand and then set the price.

Herman Ole Andreas Wold was a Norwegian-born econometrician and statistician who had a long career in Sweden. Wold was known for his work in mathematical economics, in time series analysis, and in econometric statistics.

Trygve Magnus Haavelmo, born in Skedsmo, Norway, was an economist whose research interests centered on econometrics. He received the Nobel Memorial Prize in Economic Sciences in 1989.

Econometric models are statistical models used in econometrics. An econometric model specifies the statistical relationship that is believed to hold between the various economic quantities pertaining to a particular economic phenomenon. An econometric model can be derived from a deterministic economic model by allowing for uncertainty, or from an economic model which itself is stochastic. However, it is also possible to use econometric models that are not tied to any specific economic theory.

In statistics, and particularly in econometrics, the reduced form of a system of equations is the result of solving the system for the endogenous variables. This gives the latter as functions of the exogenous variables, if any. In econometrics, the equations of a structural form model are estimated in their theoretically given form, while an alternative approach to estimation is to first solve the theoretical equations for the endogenous variables to obtain reduced form equations, and then to estimate the reduced form equations.
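
As a sketch of what solving for the endogenous variables looks like, consider a hypothetical linear supply and demand system. The symbols are illustrative assumptions, not taken from the text above: quantity $q$ and price $p$ are endogenous, income $y$ is exogenous, and $u$, $v$ are error terms.

```latex
% Structural form (assumed for illustration)
\begin{aligned}
\text{demand:}\quad q &= \alpha_0 + \alpha_1 p + \alpha_2 y + u, \\
\text{supply:}\quad q &= \beta_0 + \beta_1 p + v.
\end{aligned}

% Reduced form: equate the two equations and solve, assuming \beta_1 \neq \alpha_1
\begin{aligned}
p &= \frac{\alpha_0 - \beta_0}{\beta_1 - \alpha_1}
   + \frac{\alpha_2}{\beta_1 - \alpha_1}\, y
   + \frac{u - v}{\beta_1 - \alpha_1}, \\
q &= \frac{\alpha_0 \beta_1 - \alpha_1 \beta_0}{\beta_1 - \alpha_1}
   + \frac{\alpha_2 \beta_1}{\beta_1 - \alpha_1}\, y
   + \frac{\beta_1 u - \alpha_1 v}{\beta_1 - \alpha_1}.
\end{aligned}
```

Each endogenous variable now depends only on the exogenous variable and the errors. In this particular system, the ratio of the two reduced-form coefficients on $y$ equals the supply slope $\beta_1$.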

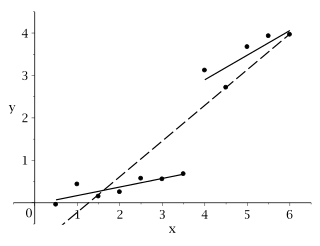

In statistics, econometrics, epidemiology and related disciplines, the method of instrumental variables (IV) is used to estimate causal relationships when controlled experiments are not feasible or when a treatment is not successfully delivered to every unit in a randomized experiment. Intuitively, IVs are used when an explanatory variable of interest is correlated with the error term, in which case ordinary least squares and ANOVA give biased results. A valid instrument induces changes in the explanatory variable but has no independent effect on the dependent variable, allowing a researcher to uncover the causal effect of the explanatory variable on the dependent variable.
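
The following is a minimal sketch of the idea on simulated data, assuming one endogenous regressor and one instrument, and using two-stage least squares as the IV estimator. The function names, coefficients, and data-generating process are all hypothetical choices made for illustration.

```python
import numpy as np

def ols(X, y):
    """Least-squares coefficients for y = X b + e."""
    return np.linalg.lstsq(X, y, rcond=None)[0]

def two_stage_least_squares(y, x, z):
    """2SLS with one endogenous regressor x and one instrument z.

    Both stages include an intercept. Returns [intercept, slope].
    """
    const = np.ones(len(y))
    Z = np.column_stack([const, z])
    x_hat = Z @ ols(Z, x)                     # first stage: project x on the instrument
    X_hat = np.column_stack([const, x_hat])
    return ols(X_hat, y)                      # second stage: regress y on fitted x

# Simulated data (assumed for illustration): u is an unobserved confounder
# that drives both x and y, so x is correlated with the error term.
rng = np.random.default_rng(0)
n = 20_000
z = rng.normal(size=n)                        # instrument: affects y only through x
u = rng.normal(size=n)
x = 0.8 * z + u + rng.normal(size=n)
y = 1.0 + 2.0 * x + 3.0 * u + rng.normal(size=n)   # true slope on x is 2.0

beta_ols = ols(np.column_stack([np.ones(n), x]), y)
beta_iv = two_stage_least_squares(y, x, z)
print("OLS slope:", beta_ols[1])
print("IV slope: ", beta_iv[1])
```

In this setup the OLS slope is pulled away from 2.0 by the confounder, while the IV slope should land close to it. The second-stage standard errors computed naively would be wrong, which is one reason applied work normally relies on a dedicated IV routine.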

In econometrics, endogeneity broadly refers to situations in which an explanatory variable is correlated with the error term. The distinction between endogenous and exogenous variables originated in simultaneous equations models, where one separates variables whose values are determined by the model from variables which are predetermined; ignoring simultaneity in the estimation leads to biased estimates as it violates the exogeneity assumption of the Gauss–Markov theorem. The problem of endogeneity is often ignored by researchers conducting non-experimental research and doing so precludes making policy recommendations. Instrumental variable techniques are commonly used to mitigate this problem.

Structural equation modeling (SEM) is a diverse set of methods used by scientists doing both observational and experimental research. SEM is used mostly in the social and behavioral sciences but it is also used in epidemiology, business, and other fields. A definition of SEM is difficult without reference to technical language, but a good starting place is the name itself.

Takeshi Amemiya is an economist specializing in econometrics and the economy of ancient Greece.

In economics and econometrics, the parameter identification problem arises when the value of one or more parameters in an economic model cannot be determined from observable variables. It is closely related to non-identifiability in statistics and econometrics, which occurs when a statistical model has more than one set of parameters that generate the same distribution of observations, meaning that multiple parameterizations are observationally equivalent.

The Cowles Foundation for Research in Economics is an economic research institute at Yale University. It was created as the Cowles Commission for Research in Economics at Colorado Springs in 1932 by businessman and economist Alfred Cowles. In 1939, the Cowles Commission moved to the University of Chicago under Theodore O. Yntema. Jacob Marschak directed it from 1943 until 1948, when Tjalling C. Koopmans assumed leadership. Increasing opposition to the Cowles Commission from the department of economics of the University of Chicago during the 1950s impelled Koopmans to persuade the Cowles family to move the commission to Yale University in 1955 where it became the Cowles Foundation.

Sir David Forbes Hendry, FBA CStat is a British econometrician and professor of economics at the University of Oxford, where he was head of the economics department from 2001 to 2007. He is also a professorial fellow at Nuffield College, Oxford.

In econometrics and statistics, a structural break is an unexpected change over time in the parameters of regression models, which can lead to huge forecasting errors and unreliability of the model in general. This issue was popularised by David Hendry, who argued that lack of stability of coefficients frequently caused forecast failure, and therefore we must routinely test for structural stability. Structural stability (i.e., the time-invariance of regression coefficients) is a central issue in all applications of linear regression models.
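
One common way to test for structural stability at a known candidate date is the Chow test, which the summary above does not name. It is used here only as an illustrative example. The sketch below computes the Chow F statistic on simulated data; the function names and the data-generating process are assumptions.

```python
import numpy as np

def rss(X, y):
    """Residual sum of squares from a least-squares fit of y on X."""
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    resid = y - X @ beta
    return float(resid @ resid)

def chow_f_statistic(X, y, break_index):
    """Chow F statistic for a break at a known position.

    Compares one pooled regression with separate regressions before and
    after break_index. Under the null of stable coefficients (and the
    classical assumptions) it follows an F(k, n - 2k) distribution.
    """
    n, k = X.shape
    rss_pooled = rss(X, y)
    rss_split = rss(X[:break_index], y[:break_index]) + rss(X[break_index:], y[break_index:])
    return ((rss_pooled - rss_split) / k) / (rss_split / (n - 2 * k))

# Simulated data (assumed): the slope jumps from 1.0 to 3.0 halfway through.
rng = np.random.default_rng(0)
n = 200
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
noise = rng.normal(size=n)
slope = np.where(np.arange(n) < n // 2, 1.0, 3.0)
y_break = 0.5 + slope * x + noise
y_stable = 0.5 + 1.0 * x + noise

f_break = chow_f_statistic(X, y_break, n // 2)
f_stable = chow_f_statistic(X, y_stable, n // 2)
print("F with break:   ", f_break)
print("F without break:", f_stable)
```

The statistic should be far larger for the series with the slope change than for the stable one. The test requires the break date to be specified in advance; procedures for unknown break dates are outside this sketch.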

The Heckman correction is a statistical technique to correct bias from non-randomly selected samples or otherwise incidentally truncated dependent variables, a pervasive issue in quantitative social sciences when using observational data. Conceptually, this is achieved by explicitly modelling the individual sampling probability of each observation together with the conditional expectation of the dependent variable. The resulting likelihood function is mathematically similar to the tobit model for censored dependent variables, a connection first drawn by James Heckman in 1974. Heckman also developed a two-step control function approach to estimate this model, which avoids the computational burden of having to estimate both equations jointly, albeit at the cost of inefficiency. Heckman received the Nobel Memorial Prize in Economic Sciences in 2000 for his work in this field.
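
The two-step approach can be sketched as follows: fit a probit for selection, compute the inverse Mills ratio for the selected observations, and add it as a regressor in the outcome equation. This is a simplified illustration on simulated data, not a full implementation. The helper names, the Fisher-scoring probit, and the data-generating process are all assumptions, and the corrected standard errors are omitted.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def norm_pdf(t):
    return np.exp(-0.5 * t**2) / math.sqrt(2.0 * math.pi)

def norm_cdf(t):
    return 0.5 * (1.0 + _erf(t / math.sqrt(2.0)))

def fit_probit(Z, d, iterations=25):
    """Probit coefficients by Fisher scoring (hypothetical helper)."""
    gamma = np.zeros(Z.shape[1])
    for _ in range(iterations):
        index = Z @ gamma
        cdf = np.clip(norm_cdf(index), 1e-10, 1.0 - 1e-10)
        pdf = norm_pdf(index)
        denom = cdf * (1.0 - cdf)
        score = Z.T @ (pdf * (d - cdf) / denom)
        info = (Z * (pdf**2 / denom)[:, None]).T @ Z
        gamma = gamma + np.linalg.solve(info, score)
    return gamma

def heckman_two_step(y, X, Z, selected):
    """Step 1: probit of selection on Z. Step 2: OLS of y on X plus the
    inverse Mills ratio, using selected rows only.
    Returns the coefficients on X followed by the one on the Mills ratio."""
    gamma = fit_probit(Z, selected.astype(float))
    index = Z[selected] @ gamma
    mills = norm_pdf(index) / norm_cdf(index)
    W = np.column_stack([X[selected], mills])
    return np.linalg.lstsq(W, y[selected], rcond=None)[0]

# Simulated data (assumed): w shifts selection but not the outcome, and the
# selection and outcome errors are correlated (correlation 0.8).
rng = np.random.default_rng(1)
n = 20_000
x, w, e_s, eta = (rng.normal(size=n) for _ in range(4))
e_y = 0.8 * e_s + 0.6 * eta
selected = (x + w + e_s) > 0              # outcome is observed only for these rows
y = 1.0 + 2.0 * x + e_y                   # true slope on x is 2.0

X = np.column_stack([np.ones(n), x])
Z = np.column_stack([np.ones(n), x, w])
naive = np.linalg.lstsq(X[selected], y[selected], rcond=None)[0]
heckman = heckman_two_step(y, X, Z, selected)
print("naive slope on selected sample:", naive[1])
print("two-step slope:                ", heckman[1])
```

In this simulation the naive regression on the selected sample understates the slope, while the two-step estimate should be close to 2.0. The coefficient on the Mills ratio estimates the covariance between the two error terms, which is 0.8 by construction here.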

The methodology of econometrics is the study of the range of differing approaches to undertaking econometric analysis.

John Denis Sargan, FBA was a British econometrician who specialized in the analysis of economic time-series.

Following the development of Keynesian economics, applied economists began developing forecasting models based on economic data, including national income and product accounting data. In contrast with typical textbook models, these large-scale macroeconometric models used large amounts of data and based forecasts on past correlations instead of theoretical relations. These models estimated the relations between different macroeconomic variables using regression analysis on time series data. They grew to include hundreds or thousands of equations describing the evolution of hundreds or thousands of prices and quantities over time, making computers essential for their solution. While the choice of which variables to include in each equation was partly guided by economic theory, variable inclusion was mostly determined on purely empirical grounds. A large-scale macroeconometric model consists of a system of dynamic equations describing the economy, with parameters estimated from quarterly or annual time-series data.

Causal inference is the process of determining the independent, actual effect of a particular phenomenon that is a component of a larger system. The main difference between causal inference and inference of association is that causal inference analyzes the response of an effect variable when a cause of the effect variable is changed. The science of why things occur is called etiology, and can be described using the language of scientific causal notation. Causal inference is said to provide the evidence of causality theorized by causal reasoning.

Control functions are statistical methods to correct for endogeneity problems by modelling the endogeneity in the error term. The approach thereby differs in important ways from other models that try to account for the same econometric problem. Instrumental variables, for example, attempt to model the endogenous variable X as an often invertible model with respect to a relevant and exogenous instrument Z. Panel analysis uses special data properties to difference out unobserved heterogeneity that is assumed to be fixed over time.
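
A minimal sketch of a linear control function, assuming a single endogenous regressor and a single instrument: the first-stage residual is added to the outcome regression as an extra regressor, so the endogenous part of the error is modelled directly. The names and the simulated data are hypothetical.

```python
import numpy as np

def ols(X, y):
    """Least-squares coefficients for y = X b + e."""
    return np.linalg.lstsq(X, y, rcond=None)[0]

def control_function_estimate(y, x, z):
    """Linear control function estimator.

    Step 1: regress x on the instrument z and keep the residual v_hat.
    Step 2: regress y on x and v_hat.
    Returns [intercept, slope on x, coefficient on v_hat].
    """
    const = np.ones(len(y))
    Z = np.column_stack([const, z])
    v_hat = x - Z @ ols(Z, x)                 # the part of x not explained by z
    W = np.column_stack([const, x, v_hat])
    return ols(W, y)

# Simulated data (assumed): u makes x endogenous; z is a valid instrument.
rng = np.random.default_rng(0)
n = 20_000
z = rng.normal(size=n)
u = rng.normal(size=n)
x = 0.8 * z + u + rng.normal(size=n)
y = 1.0 + 2.0 * x + 3.0 * u + rng.normal(size=n)   # true slope on x is 2.0

beta_cf = control_function_estimate(y, x, z)
print("slope on x:         ", beta_cf[1])
print("coefficient on v_hat:", beta_cf[2])
```

In this linear case the slope on `x` coincides numerically with the two-stage least squares estimate. A coefficient on `v_hat` that is clearly different from zero is evidence that `x` is endogenous.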
