Related Research Articles

Scalable Vector Graphics (SVG) is an XML-based vector image format for defining two-dimensional graphics, having support for interactivity and animation. The SVG specification is an open standard developed by the World Wide Web Consortium since 1999.

Closed captioning (CC) and subtitling are both processes of displaying text on a television, video screen, or other visual display to provide additional or interpretive information. Both are typically used as a transcription of the audio portion of a program as it occurs, sometimes including descriptions of non-speech elements. Other uses have included providing a textual translation of a presentation's primary audio language into an alternative language; such translations are usually burned into the video and cannot be turned off.

Digital Video Broadcasting (DVB) is a set of international open standards for digital television. DVB standards are maintained by the DVB Project, an international industry consortium, and are published by a Joint Technical Committee (JTC) of the European Telecommunications Standards Institute (ETSI), European Committee for Electrotechnical Standardization (CENELEC) and European Broadcasting Union (EBU).

Material Exchange Format (MXF) is a container format for professional digital video and audio media defined by a set of SMPTE standards. A typical example of its use is for delivering advertisements to TV stations and tapeless archiving of broadcast TV programs. It is also used as part of the Digital Cinema Package for delivering movies to commercial theaters.

MHEG-5, or ISO/IEC 13522-5, is part of a set of international standards relating to the presentation of multimedia information, standardised by the Multimedia and Hypermedia Experts Group (MHEG). It is most commonly used as a language to describe interactive television services.

CTA-708 is the standard for closed captioning for ATSC digital television (DTV) viewing in the United States and Canada. It was developed by the Consumer Electronics sector of the Electronic Industries Alliance, which later became the Consumer Technology Association.

1080p is a set of HDTV high-definition video modes characterized by 1,920 pixels displayed across the screen horizontally and 1,080 pixels down the screen vertically; the p stands for progressive scan, i.e. non-interlaced. The term usually assumes a widescreen aspect ratio of 16:9, implying a resolution of 2.1 megapixels. It is often marketed as Full HD or FHD, to contrast 1080p with 720p resolution screens. Although 1080p is sometimes referred to as 2K resolution, other sources differentiate between 1080p and (true) 2K resolution.
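The pixel count and aspect ratio quoted above follow directly from the frame dimensions. A minimal sketch of the arithmetic (the variable names are illustrative only):

```python
from fractions import Fraction

# Frame dimensions of a 1080p picture, as stated in the text.
width, height = 1920, 1080

# Total pixels per frame and the same figure in megapixels.
pixels = width * height
megapixels = pixels / 1_000_000

# Reduce width:height to lowest terms to get the aspect ratio.
aspect = Fraction(width, height)

print(pixels)                 # 2073600
print(round(megapixels, 1))   # 2.1
print(f"{aspect.numerator}:{aspect.denominator}")  # 16:9
```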

MPEG-4 Part 17, or MPEG-4 Timed Text (MP4TT), or MPEG-4 Streaming text format is the text-based subtitle format for MPEG-4, published as ISO/IEC 14496-17 in 2006. It was developed in response to the need for a generic method for coding of text as one of the multimedia components within audiovisual presentations.

These tables compare features of multimedia container formats, most often used for storing or streaming digital video or digital audio content. To see which multimedia players support which container format, look at comparison of media players.

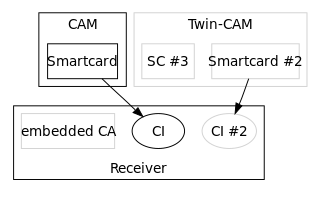

In Digital Video Broadcasting, the Common Interface is a technology which allows decryption of pay TV channels. Pay TV stations want to choose which encryption method to use. The Common Interface lets TV manufacturers support many different pay TV stations by allowing exchangeable conditional-access modules (CAMs) for various encryption schemes to be plugged in.

SubRip is a free software program for Microsoft Windows which extracts subtitles and their timings from various video formats to a text file. It is released under the GNU GPL. Its subtitle format uses the file extension .srt and is widely supported. An .srt file is human-readable: the subtitles are stored sequentially along with their timing information. Most subtitles distributed on the Internet are in this format.
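The sequential layout of an .srt file can be illustrated with a small parser. This is a minimal sketch, not SubRip's own code: it assumes the commonly seen cue layout (a numeric index line, a timing line of the form `HH:MM:SS,mmm --> HH:MM:SS,mmm`, one or more text lines, then a blank line). The names `Cue`, `parse_srt`, and `SAMPLE` are hypothetical and exist only for this example.

```python
import re
from dataclasses import dataclass
from typing import List

# Timing line in the assumed layout: HH:MM:SS,mmm --> HH:MM:SS,mmm
TIMING = re.compile(
    r"(\d+):(\d{2}):(\d{2}),(\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2}),(\d{3})"
)


@dataclass
class Cue:
    index: int      # sequence number of the subtitle
    start_ms: int   # start time in milliseconds
    end_ms: int     # end time in milliseconds
    text: str       # subtitle text, possibly spanning several lines


def _to_ms(h: str, m: str, s: str, ms: str) -> int:
    """Convert hour/minute/second/millisecond fields to milliseconds."""
    return ((int(h) * 60 + int(m)) * 60 + int(s)) * 1000 + int(ms)


def parse_srt(content: str) -> List[Cue]:
    """Parse .srt text into cues, skipping blocks that do not fit the layout."""
    cues = []
    # Cues are separated by blank lines; normalise Windows line endings first.
    blocks = re.split(r"\n\s*\n", content.replace("\r\n", "\n").strip())
    for block in blocks:
        lines = block.split("\n")
        if len(lines) < 2 or not lines[0].strip().isdigit():
            continue
        match = TIMING.match(lines[1].strip())
        if match is None:
            continue
        fields = match.groups()
        cues.append(
            Cue(
                index=int(lines[0]),
                start_ms=_to_ms(*fields[:4]),
                end_ms=_to_ms(*fields[4:]),
                text="\n".join(lines[2:]),
            )
        )
    return cues


# Hypothetical sample data for illustration.
SAMPLE = """1
00:00:01,000 --> 00:00:04,000
Hello, world.

2
00:00:05,500 --> 00:00:07,250
Second cue,
on two lines.
"""

cues = parse_srt(SAMPLE)
for cue in cues:
    print(cue.index, cue.start_ms, cue.end_ms, repr(cue.text))
```

Storing times as integer milliseconds keeps comparisons and offsets exact; a real tool would likely also need to handle character encodings and malformed cues more carefully.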

In television technology, Active Format Description (AFD) is a standard set of codes, sent in the MPEG video stream or in the baseband SDI video signal, that carries information about the picture's aspect ratio and other active picture characteristics. It has been used by television broadcasters to enable both 4:3 and 16:9 television sets to optimally present pictures transmitted in either format. It has also been used by broadcasters to dynamically control how down-conversion equipment formats widescreen 16:9 pictures for 4:3 displays.

Timed text is the presentation of text media in synchrony with other media, such as audio and video.

GOM Player is a media player for Microsoft Windows, developed by GOM & Company. With more than 100 million downloads, it is also known as the most used player in South Korea. Its main features include the ability to play some broken media files and find missing codecs using a codec finder service.

Subtitles are texts representing the contents of the audio in a film, television show, opera or other audiovisual media. Subtitles might provide a transcription or translation of spoken dialogue. Although naming conventions can vary, captions are subtitles that include written descriptions of other elements of the audio, like music or sound effects. Captions are thus especially helpful to people who are deaf or hard-of-hearing. Subtitles may also add information that is not present in the audio. Localizing subtitles provide cultural context to viewers. For example, a subtitle could be used to explain to an audience unfamiliar with sake that it is a type of Japanese wine. Lastly, subtitles are sometimes used for humor, as in Annie Hall, where subtitles show the characters' inner thoughts, which contradict what they are saying in the audio.

Hybrid Broadcast Broadband TV (HbbTV) is both an industry standard and promotional initiative for hybrid digital TV to harmonise the broadcast, Internet Protocol Television (IPTV), and broadband delivery of entertainment to the end consumer through connected TVs and set-top boxes. The HbbTV Association, comprising digital broadcasting and Internet industry companies, has established a standard for the delivery of broadcast TV and broadband TV to the home, through a single user interface, creating an open platform as an alternative to proprietary technologies. Products and services using the HbbTV standard can operate over different broadcasting technologies, such as satellite, cable, or terrestrial networks.

IMSC may refer to:

The Entertainment Identifier Registry, or EIDR, is a global unique identifier system for a broad array of audiovisual objects, including motion pictures, television, and radio programs. The identification system resolves an identifier to a metadata record that is associated with top-level titles, edits, DVDs, encodings, clips, and mashups. EIDR also provides identifiers for video service providers, such as broadcast and cable networks.

WebVTT is a World Wide Web Consortium (W3C) standard for displaying timed text in connection with the HTML5 <track> element.

Interoperable Master Format (IMF) is a container format for the standardized digital delivery and storage of finished audio-visual masters, including movies, episodic content and advertisements.
