A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary processor in a given computer. Its electronic circuitry executes instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs).

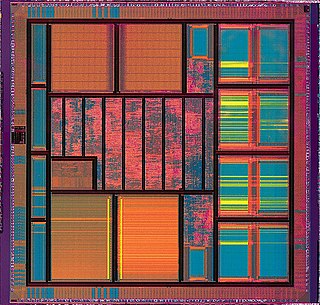

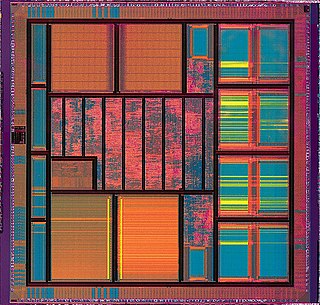

An integrated circuit (IC), also known as a microchip or simply chip, is a set of electronic circuits, consisting of various electronic components and their interconnections. These components are etched onto a small, flat piece ("chip") of semiconductor material, usually silicon. Integrated circuits are used in a wide range of electronic devices, including computers, smartphones, and televisions, to perform various functions such as processing and storing information. They have greatly impacted the field of electronics by enabling device miniaturization and enhanced functionality.

Very-large-scale integration (VLSI) is the process of creating an integrated circuit (IC) by combining millions or billions of MOS transistors onto a single chip. VLSI began in the 1970s when MOS integrated circuit chips were developed and then widely adopted, enabling complex semiconductor and telecommunications technologies. The microprocessor and memory chips are VLSI devices.

Digital electronics is a field of electronics involving the study of digital signals and the engineering of devices that use or produce them. This is in contrast to analog electronics, which works primarily with analog signals. Despite the name, digital electronics designs include important analog design considerations.

In microelectronics, a dual in-line package (DIP) is an electronic component package with a rectangular housing and two parallel rows of electrical connecting pins. The package may be through-hole mounted to a printed circuit board (PCB) or inserted in a socket. The dual in-line format was invented by Don Forbes, Rex Rice and Bryant Rogers at Fairchild R&D in 1964, when the restricted number of leads available on circular transistor-style packages became a limitation in the use of integrated circuits. Increasingly complex circuits required more signal and power supply leads; eventually microprocessors and similar complex devices required more leads than could be put on a DIP, leading to the development of higher-density chip carriers. Furthermore, square and rectangular packages made it easier to route printed-circuit traces beneath the packages.
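The two-row pin layout can be illustrated with a short sketch. It relies on the common numbering convention, which the text above does not state: viewed from above with the notch at the top, pin 1 is at the top of the left row, numbers run down the left row, then back up the right row. The function name `dip_pin_location` and the `"left"`/`"right"` labels are hypothetical, chosen only for this illustration.

```python
def dip_pin_location(pin, pin_count):
    """Return (row, index) for a pin on a DIP, viewed from above, notch up.

    Assumes the usual counterclockwise numbering convention; `index`
    counts positions from the notch end, starting at 0.
    """
    if pin_count < 2 or pin_count % 2 != 0:
        raise ValueError("a DIP has an even number of pins, at least 2")
    if not 1 <= pin <= pin_count:
        raise ValueError("pin number out of range")

    pins_per_row = pin_count // 2
    if pin <= pins_per_row:
        # Left row: numbered from the notch end downward.
        return ("left", pin - 1)
    # Right row: numbering continues upward, back toward the notch.
    return ("right", pin_count - pin)


# On a 14-pin package, pins 7 and 8 sit opposite each other at the far end.
far_end = [dip_pin_location(7, 14), dip_pin_location(8, 14)]
```

Under this convention the highest-numbered pin always sits directly opposite pin 1, at the notch end of the package.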

A breadboard, solderless breadboard, or protoboard is a construction base used to build semi-permanent prototypes of electronic circuits. Unlike a perfboard or stripboard, breadboards do not require soldering or destruction of tracks and are hence reusable. For this reason, breadboards are also popular with students and in technological education.

The history of computing hardware from 1960 onward is marked by the conversion from vacuum tubes to solid-state devices such as transistors and then integrated circuit (IC) chips. Around 1953 to 1959, discrete transistors started being considered sufficiently reliable and economical that they made further vacuum tube computers uncompetitive. Metal–oxide–semiconductor (MOS) large-scale integration (LSI) technology subsequently led to the development of semiconductor memory in the mid-to-late 1960s and then the microprocessor in the early 1970s. This led to primary computer memory moving away from magnetic-core memory devices to solid-state static and dynamic semiconductor memory, which greatly reduced the cost, size, and power consumption of computers. These advances led to the miniaturized personal computer (PC) in the 1970s, starting with home computers and desktop computers, followed by laptops and then mobile computers over the next several decades.

Stripboard is the generic name for a widely used type of electronics prototyping material for circuit boards, characterized by a pre-formed 0.1-inch (2.54 mm) regular (rectangular) grid of holes, with wide parallel strips of copper cladding running in one direction all the way along one side of an insulating bonded paper board. It is also commonly known by the name of the original product, Veroboard, which is a trademark in the UK of the British company Vero Technologies Ltd and the Canadian company Pixel Print Ltd. It was originated and developed in the early 1960s by the Electronics Department of Vero Precision Engineering Ltd (VPE). It was introduced as a general-purpose material for constructing electronic circuits, differing from purpose-designed printed circuit boards (PCBs) in that a variety of electronic circuits may be constructed using a standard wiring board.
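The grid-and-strip layout described above can be modeled in a few lines. The following is a minimal sketch, not a description of any real tool: the `Stripboard` class, its method names, and the way a track cut is represented (removing the copper around one hole) are all assumptions made for illustration. Only the 2.54 mm hole spacing and the idea of strips running in one direction come from the text.

```python
from dataclasses import dataclass, field

PITCH_MM = 2.54  # 0.1 inch hole spacing, as stated in the text


@dataclass
class Stripboard:
    """Toy model: each row is one copper strip running along the columns."""
    rows: int
    cols: int
    # Hypothetical representation: a cut at (row, col) removes the copper
    # around that hole and splits the strip into two segments.
    cuts: set = field(default_factory=set)

    def cut_track(self, row, col):
        """Break the strip in `row` at the hole in column `col`."""
        self.cuts.add((row, col))

    def hole_position_mm(self, row, col):
        """Offset of a hole from hole (0, 0) as (x, y) in millimetres."""
        return (col * PITCH_MM, row * PITCH_MM)

    def connected(self, a, b):
        """True if holes a and b are joined by uncut copper."""
        (row_a, col_a), (row_b, col_b) = a, b
        if row_a != row_b:
            return False  # the board itself never joins different strips
        first, last = min(col_a, col_b), max(col_a, col_b)
        # A cut at or between the two holes isolates them.
        return not any((row_a, c) in self.cuts for c in range(first, last + 1))


board = Stripboard(rows=10, cols=20)
before_cut = board.connected((2, 0), (2, 5))
board.cut_track(2, 3)
after_cut = board.connected((2, 0), (2, 5))
```

In this model two holes in the same strip are connected until a cut is made between them, which mirrors how a single standard board can be adapted to many different circuits.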

Integrated circuit packaging is the final stage of semiconductor device fabrication, in which the die is encapsulated in a supporting case that prevents physical damage and corrosion. The case, known as a "package", supports the electrical contacts which connect the device to a circuit board.

The CDC 8600 was the last of Seymour Cray's supercomputer designs while he worked for Control Data Corporation. As the natural successor to the CDC 6600 and CDC 7600, the 8600 was intended to be about 10 times as fast as the 7600, already the fastest computer on the market. The design was essentially four 7600s, packed into a very small chassis so they could run at higher clock speeds.

The Cray-3 was a vector supercomputer, Seymour Cray's designated successor to the Cray-2. The system was one of the first major applications of gallium arsenide (GaAs) semiconductors in computing, using hundreds of custom-built ICs packed into a 1 cubic foot (0.028 m³) CPU. The design goal was performance around 16 GFLOPS, about 12 times that of the Cray-2.

The Cray-2 is a supercomputer with four vector processors made by Cray Research starting in 1985. At 1.9 GFLOPS peak performance, it was the fastest machine in the world when it was released, replacing the Cray X-MP in that spot. It was, in turn, replaced in that spot by the Cray Y-MP in 1988.

In electronics, through-hole technology is a manufacturing scheme in which leads on the components are inserted through holes drilled in printed circuit boards (PCBs) and soldered to pads on the opposite side, either by manual assembly or by the use of automated insertion mount machines.

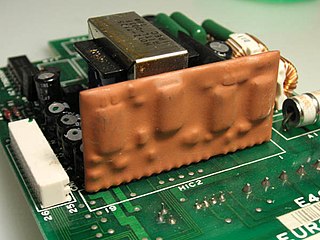

DIY audio is do-it-yourself audio. Rather than buying a piece of possibly expensive audio equipment, such as a high-end audio amplifier or speaker, the person practicing DIY audio will make it themselves. Alternatively, a DIYer may take an existing manufactured item of vintage era and update or modify it. The benefits of doing so include the satisfaction of creating something enjoyable, the possibility that the equipment made or updated is of higher quality than commercially available products, and the pleasure of creating a custom-made device for which no exact equivalent is marketed. Other motivations for DIY audio can include getting audio components at a lower cost, the entertainment of using the item, and being able to ensure quality of workmanship.

A hybrid integrated circuit (HIC), hybrid microcircuit, hybrid circuit or simply hybrid is a miniaturized electronic circuit constructed of individual devices, such as semiconductor devices and passive components, bonded to a substrate or printed circuit board (PCB). A PCB having components on a printed wiring board (PWB) is not considered a true hybrid circuit according to the definition of MIL-PRF-38534.

A field-replaceable unit (FRU) is a printed circuit board, part, or assembly that can be quickly and easily removed from a computer or other piece of electronic equipment, and replaced by the user or a technician without having to send the entire product or system to a repair facility. FRUs allow a technician lacking in-depth product knowledge to isolate faults and replace faulty components. The granularity of FRUs in a system impacts total cost of ownership and support, including the costs of stocking spare parts, where spares are deployed to meet repair time goals, how diagnostic tools are designed and implemented, levels of training for field personnel, whether end-users can do their own FRU replacement, etc.

In integrated circuits, depletion-load NMOS is a form of digital logic family that uses only a single power supply voltage, unlike earlier NMOS logic families that needed more than one power supply voltage. Although manufacturing these integrated circuits required additional processing steps, improved switching speed and the elimination of the extra power supply made this logic family the preferred choice for many microprocessors and other logic elements.

Integrated circuit design, semiconductor design, chip design or IC design, is a sub-field of electronics engineering, encompassing the particular logic and circuit design techniques required to design integrated circuits, or ICs. ICs consist of miniaturized electronic components built into an electrical network on a monolithic semiconductor substrate by photolithography.

A three-dimensional integrated circuit (3D IC) is a MOS integrated circuit (IC) manufactured by stacking as many as 16 or more ICs and interconnecting them vertically using, for instance, through-silicon vias (TSVs) or Cu-Cu connections, so that they behave as a single device and achieve performance improvements at reduced power and with a smaller footprint than conventional two-dimensional processes. The 3D IC is one of several 3D integration schemes that exploit the z-direction to achieve electrical performance benefits in microelectronics and nanoelectronics.

The first planar monolithic integrated circuit (IC) chip was demonstrated in 1960. The idea of integrating electronic circuits into a single device was born when the German physicist and engineer Werner Jacobi developed and patented the first known integrated transistor amplifier in 1949 and the British radio engineer Geoffrey Dummer proposed to integrate a variety of standard electronic components in a monolithic semiconductor crystal in 1952. A year later, Harwick Johnson filed a patent for a prototype IC. Between 1953 and 1957, Sidney Darlington and Yasuo Tarui proposed similar chip designs where several transistors could share a common active area, but there was no electrical isolation to separate them from each other.