In mathematics, any vector space $V$ has a corresponding dual vector space consisting of all linear forms on $V$, together with the vector space structure of pointwise addition and scalar multiplication by constants.
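
The pointwise operations can be written out explicitly. The symbols here are assumptions chosen for illustration: $V$ is the vector space, $F$ its field of scalars, $V^*$ the dual space, $\varphi, \psi \in V^*$ are linear forms, $x \in V$, and $a \in F$.

$$
(\varphi + \psi)(x) = \varphi(x) + \psi(x), \qquad (a\varphi)(x) = a\,\varphi(x).
$$

Both right-hand sides are again linear in $x$, which is why the set of linear forms is closed under these operations.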

In mathematics, a geometric algebra is an extension of elementary algebra to work with geometrical objects such as vectors. Geometric algebra is built out of two fundamental operations, addition and the geometric product. Multiplication of vectors results in higher-dimensional objects called multivectors. Compared to other formalisms for manipulating geometric objects, geometric algebra is noteworthy for supporting vector division and addition of objects of different dimensions.

In mathematics, an inner product space is a real vector space or a complex vector space with an operation called an inner product. The inner product of two vectors in the space is a scalar, often denoted with angle brackets such as in $\langle a, b \rangle$. Inner products allow formal definitions of intuitive geometric notions, such as lengths, angles, and orthogonality of vectors. Inner product spaces generalize Euclidean vector spaces, in which the inner product is the dot product or scalar product of Cartesian coordinates. Inner product spaces of infinite dimension are widely used in functional analysis. Inner product spaces over the field of complex numbers are sometimes referred to as unitary spaces. The first usage of the concept of a vector space with an inner product is due to Giuseppe Peano, in 1898.
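
As a rough illustration of how an inner product yields lengths, angles, and orthogonality, the sketch below uses the dot product on real coordinate vectors. The function names `dot`, `norm`, and `angle` are hypothetical helpers introduced here, not part of any library.

```python
import math

def dot(u, v):
    """Dot product: the standard inner product on real coordinate vectors."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Length of u induced by the inner product: sqrt(<u, u>)."""
    return math.sqrt(dot(u, u))

def angle(u, v):
    """Angle in radians between two nonzero vectors."""
    cos_theta = dot(u, v) / (norm(u) * norm(v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))

u = [3.0, 4.0]
v = [-4.0, 3.0]

length_u = norm(u)                 # 5.0
is_orthogonal = dot(u, v) == 0.0   # True: the inner product vanishes
theta = angle(u, v)                # pi / 2
```

Two vectors are orthogonal exactly when their inner product is zero, which is the case for `u` and `v` here.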

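Linear algebra is the branch of mathematics concerning linear equations such as $a_1 x_1 + \cdots + a_n x_n = b$, linear maps, and their representations in vector spaces and through matrices.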

In mathematics and physics, a vector space is a set whose elements, often called vectors, may be added together and multiplied ("scaled") by numbers called scalars. Scalars are often real numbers, but can be complex numbers or, more generally, elements of any field. The operations of vector addition and scalar multiplication must satisfy certain requirements, called vector axioms. Real vector spaces and complex vector spaces are kinds of vector spaces based on different kinds of scalars: real numbers and complex numbers, respectively.

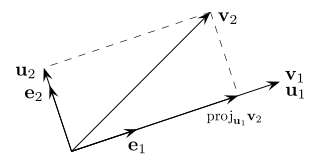

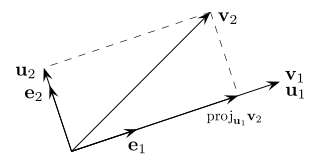

In mathematics, particularly linear algebra and numerical analysis, the Gram–Schmidt process or Gram–Schmidt algorithm is a method for turning a set of two or more linearly independent vectors into a set of mutually perpendicular vectors that span the same subspace.
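
A minimal sketch of the process in plain Python follows. It uses the "modified" variant, which subtracts each projection from the running remainder rather than from the original vector, and it also normalizes the results. The function name `gram_schmidt` and the tolerance `tol` are assumptions made for illustration.

```python
import math

def dot(u, v):
    """Dot product of two real coordinate vectors."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list spanning the same subspace as `vectors`.

    Vectors that are (numerically) dependent on earlier ones are skipped.
    """
    basis = []
    for v in vectors:
        w = list(v)
        # Remove the component of w along each vector already in the basis.
        for q in basis:
            coeff = dot(w, q)
            w = [wi - coeff * qi for wi, qi in zip(w, q)]
        length = math.sqrt(dot(w, w))
        if length > tol:
            basis.append([wi / length for wi in w])
    return basis

q1, q2 = gram_schmidt([[3.0, 1.0], [2.0, 2.0]])
```

Here `q1` and `q2` have unit length and a dot product of zero up to rounding error. A dependent input such as `[[1.0, 0.0], [2.0, 0.0]]` yields a single basis vector.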

In geometry, a normal is an object that is perpendicular to a given object. For example, the normal line to a plane curve at a given point is the line perpendicular to the tangent line to the curve at the point.

In linear algebra, two vectors in an inner product space are orthonormal if they are orthogonal unit vectors. A unit vector is a vector of length 1, also described as normalized. Orthogonal means that the vectors are perpendicular to each other. A set of vectors forms an orthonormal set if all vectors in the set are mutually orthogonal and all have unit length. An orthonormal set that forms a basis is called an orthonormal basis.

In mathematics, particularly linear algebra, an orthonormal basis for an inner product space $V$ with finite dimension is a basis for $V$ whose vectors are orthonormal, that is, they are all unit vectors and orthogonal to each other. For example, the standard basis for a Euclidean space $\mathbb{R}^n$ is an orthonormal basis, where the relevant inner product is the dot product of vectors. The image of the standard basis under a rotation or reflection is also orthonormal, and every orthonormal basis for $\mathbb{R}^n$ arises in this fashion.
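
The claim about rotations can be checked numerically in the plane. The sketch below assumes the dot product as the inner product. The helper names `is_orthonormal` and `rotate`, and the angle 0.7, are arbitrary choices for illustration.

```python
import math

def dot(u, v):
    """Dot product of two real coordinate vectors."""
    return sum(a * b for a, b in zip(u, v))

def is_orthonormal(vectors, tol=1e-12):
    """True if <v_i, v_j> is 1 when i == j and 0 otherwise, within tol."""
    for i, u in enumerate(vectors):
        for j, v in enumerate(vectors):
            expected = 1.0 if i == j else 0.0
            if abs(dot(u, v) - expected) > tol:
                return False
    return True

def rotate(v, theta):
    """Rotate a 2D vector counterclockwise by theta radians."""
    x, y = v
    c, s = math.cos(theta), math.sin(theta)
    return [c * x - s * y, s * x + c * y]

standard_basis = [[1.0, 0.0], [0.0, 1.0]]
rotated_basis = [rotate(e, 0.7) for e in standard_basis]
```

Both `standard_basis` and `rotated_basis` pass `is_orthonormal`, whereas a basis such as `[[1.0, 0.0], [1.0, 1.0]]` does not.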

In mathematics, a linear form is a linear map from a vector space to its field of scalars.

In mathematics, the Hodge star operator or Hodge star is a linear map defined on the exterior algebra of a finite-dimensional oriented vector space endowed with a nondegenerate symmetric bilinear form. Applying the operator to an element of the algebra produces the Hodge dual of the element. This map was introduced by W. V. D. Hodge.

A 3D projection is a design technique used to display a three-dimensional (3D) object on a two-dimensional (2D) surface. These projections rely on visual perspective and aspect analysis to render a complex object viewable on a simpler plane.

In linear algebra and functional analysis, a projection is a linear transformation $P$ from a vector space to itself such that $P \circ P = P$. That is, whenever $P$ is applied twice to any vector, it gives the same result as if it were applied once. It leaves its image unchanged. This definition of "projection" formalizes and generalizes the idea of graphical projection. One can also consider the effect of a projection on a geometrical object by examining the effect of the projection on points in the object.
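
The idempotence condition can be illustrated with one particular kind of projection, the orthogonal projection onto a line, whose matrix is $d d^{\mathsf{T}} / (d^{\mathsf{T}} d)$ for a nonzero direction vector $d$. This is only one instance of the general definition, and the helper names below are assumptions introduced for the sketch.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def apply(m, v):
    """Apply matrix m to vector v."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def projection_onto_line(d):
    """Matrix of the orthogonal projection onto span{d}: d d^T / (d^T d)."""
    dd = sum(x * x for x in d)
    return [[di * dj / dd for dj in d] for di in d]

P = projection_onto_line([1.0, 1.0])   # [[0.5, 0.5], [0.5, 0.5]]

once = apply(P, [3.0, 1.0])    # [2.0, 2.0]
twice = apply(P, once)         # [2.0, 2.0], unchanged by a second application
```

For this choice of `d`, `matmul(P, P)` reproduces `P` exactly, and a vector already on the line, such as `once`, is left fixed.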

In the mathematical fields of linear algebra and functional analysis, the orthogonal complement of a subspace $W$ of a vector space $V$ equipped with a bilinear form $B$ is the set $W^\perp$ of all vectors in $V$ that are orthogonal to every vector in $W$. Informally, it is called the perp, short for perpendicular complement. It is a subspace of $V$.
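
As a concrete sketch, take three-dimensional real space with the dot product as the bilinear form (an assumption; the definition above allows any bilinear form). By linearity, a vector lies in the orthogonal complement of a subspace exactly when it is orthogonal to every vector in a spanning set. For a plane in three dimensions, the cross product of two spanning vectors spans the complement. The helper names are hypothetical.

```python
def dot(u, v):
    """Dot product of two real coordinate vectors."""
    return sum(a * b for a, b in zip(u, v))

def in_orthogonal_complement(x, spanning_set, tol=1e-12):
    """True if x is orthogonal to every vector spanning the subspace."""
    return all(abs(dot(x, w)) <= tol for w in spanning_set)

def cross(u, v):
    """Cross product of two vectors in three dimensions."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]

# A plane spanned by two linearly independent vectors.
W = [[1.0, 2.0, 0.0], [0.0, 1.0, 1.0]]

n = cross(W[0], W[1])   # [2.0, -1.0, 1.0], spans the complement of the plane
```

Here `in_orthogonal_complement(n, W)` holds, while a vector such as `[1.0, 0.0, 0.0]` is not in the complement because its dot product with the first spanning vector is nonzero.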

The name paravector is used for the combination of a scalar and a vector in any Clifford algebra, known as geometric algebra among physicists.

In linear algebra, a frame of an inner product space is a generalization of a basis of a vector space to sets that may be linearly dependent. In the terminology of signal processing, a frame provides a redundant, stable way of representing a signal. Frames are used in error detection and correction and the design and analysis of filter banks and more generally in applied mathematics, computer science, and engineering.

In the mathematical discipline of functional analysis, the concept of a compact operator on Hilbert space is an extension of the concept of a matrix acting on a finite-dimensional vector space; in Hilbert space, compact operators are precisely the closure of finite-rank operators in the topology induced by the operator norm. As such, results from matrix theory can sometimes be extended to compact operators using similar arguments. By contrast, the study of general operators on infinite-dimensional spaces often requires a genuinely different approach.

In mathematics, Hilbert spaces allow the methods of linear algebra and calculus to be generalized from (finite-dimensional) Euclidean vector spaces to spaces that may be infinite-dimensional. Hilbert spaces arise naturally and frequently in mathematics and physics, typically as function spaces. Formally, a Hilbert space is a vector space equipped with an inner product that induces a distance function for which the space is a complete metric space.

The concept of angles between lines, between two planes or between a line and a plane can be generalized to arbitrary dimensions. This generalization was first discussed by Camille Jordan. For any pair of flats in a Euclidean space of arbitrary dimension one can define a set of mutual angles which are invariant under isometric transformation of the Euclidean space. If the flats do not intersect, their shortest distance is one more invariant. These angles are called canonical or principal. The concept of angles can be generalized to pairs of flats in a finite-dimensional inner product space over the complex numbers.

This is a glossary of terminology in the mathematical field of functional analysis.