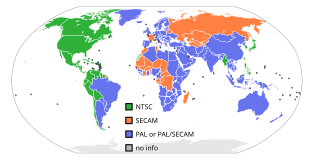

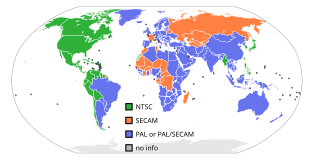

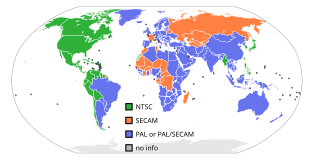

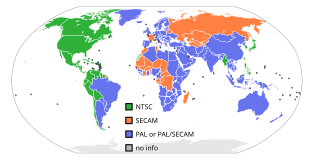

NTSC is the first American standard for analog television, published in 1941. In 1961, it was assigned the designation System M. It is also known as EIA standard 170.

Phase Alternating Line (PAL) is a colour encoding system for analogue television. It was one of three major analogue colour television standards, the others being NTSC and SECAM. In most countries it was broadcast at 625 lines, 50 fields per second, and associated with CCIR analogue broadcast television systems B, D, G, H, I or K. The articles on analog broadcast television systems further describe frame rates, image resolution, and audio modulation.

SECAM, also written SÉCAM, is an analog color television system that was used in France, Russia and some other countries or territories of Europe and Africa. It was one of three major analog color television standards, the others being PAL and NTSC. Like a PAL picture, a SECAM picture is made up of 625 interlaced lines and is displayed at a rate of 25 frames per second. However, because of the way SECAM processes color information, it is not compatible with the German PAL video format standard. This page primarily discusses the SECAM color encoding system. The articles on broadcast television systems and analog television further describe frame rates, image resolution, and audio modulation. SECAM video is composite video because the luminance and chrominance are transmitted together as one signal.

Interlaced video is a technique for doubling the perceived frame rate of a video display without consuming extra bandwidth. The interlaced signal contains two fields of a video frame captured consecutively. This enhances motion perception to the viewer, and reduces flicker by taking advantage of the phi phenomenon.
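As a rough illustration of the field structure, the sketch below splits a frame into its even and odd scan lines and weaves them back together. It is a simplification: in real interlaced video the two fields are captured at different instants, and the function names here are illustrative rather than taken from any standard.

```python
def split_fields(frame):
    """Split a frame (a list of scan lines) into two fields."""
    top = frame[0::2]     # lines 0, 2, 4, ...
    bottom = frame[1::2]  # lines 1, 3, 5, ...
    return top, bottom


def weave(top, bottom):
    """Interleave two fields back into one full-height frame."""
    frame = []
    for i in range(len(top) + len(bottom)):
        source = top if i % 2 == 0 else bottom
        frame.append(source[i // 2])
    return frame


frame = ["line0", "line1", "line2", "line3", "line4", "line5"]
top, bottom = split_fields(frame)
rebuilt = weave(top, bottom)
```

Each field carries half the lines of the frame, which is why two fields can be sent in the bandwidth of one full frame.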

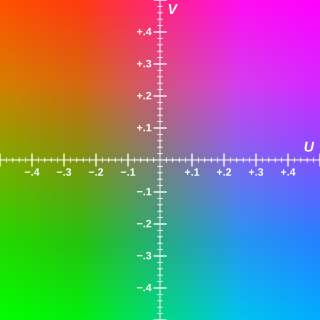

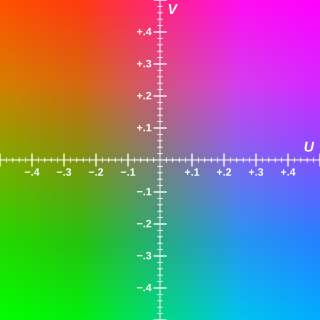

Y′UV, also written YUV, is the color model found in the PAL analogue color TV standard. A color is described as a Y′ component (luma) and two chroma components U and V. The prime symbol (') denotes that the luma is calculated from gamma-corrected RGB input and that it is different from true luminance. Today, the term YUV is commonly used in the computer industry to describe colorspaces that are encoded using YCbCr.
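A minimal sketch of the conversion from gamma-corrected R′G′B′ to Y′UV follows. It assumes the commonly cited analog constants (luma weights 0.299, 0.587, 0.114 and scale factors 0.492 and 0.877 for U and V), which are not given in the text above; the function name is illustrative.

```python
def rgb_to_yuv(r, g, b):
    """Convert gamma-corrected R'G'B' values in [0, 1] to Y'UV."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luma: weighted sum of R'G'B'
    u = 0.492 * (b - y)                    # scaled blue-difference
    v = 0.877 * (r - y)                    # scaled red-difference
    return y, u, v


gray = rgb_to_yuv(0.5, 0.5, 0.5)  # chroma components are (near) zero
blue = rgb_to_yuv(0.0, 0.0, 1.0)  # U is positive, V is negative
```

Any neutral gray produces U and V close to zero, which is what lets a black-and-white receiver use Y′ alone.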

Chroma subsampling is the practice of encoding images with lower resolution for chroma information than for luma information, taking advantage of the human visual system's lower acuity for color differences than for luminance.
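One way to picture this is to halve a chroma plane in both directions while leaving the luma plane untouched, as in 4:2:0 sampling. The sketch below averages each 2×2 block; this box filter is an assumption chosen for simplicity, since real encoders differ in filtering and sample siting.

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (even width and height assumed)."""
    out = []
    for y in range(0, len(plane), 2):
        row = []
        for x in range(0, len(plane[y]), 2):
            total = (plane[y][x] + plane[y][x + 1]
                     + plane[y + 1][x] + plane[y + 1][x + 1])
            row.append(total / 4)
        out.append(row)
    return out


chroma = [
    [1, 3, 10, 10],
    [5, 7, 10, 10],
    [0, 0, 2, 2],
    [0, 0, 4, 4],
]
small = subsample_420(chroma)  # 4x4 plane becomes 2x2
```

The subsampled plane holds a quarter of the original chroma samples, while luma keeps its full resolution.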

ITU-R Recommendation BT.601, more commonly known by the abbreviations Rec. 601 or BT.601, is a standard originally issued in 1982 by the CCIR for encoding interlaced analog video signals in digital video form. It includes methods of encoding 525-line 60 Hz and 625-line 50 Hz signals, both with an active region covering 720 luminance samples and 360 chrominance samples per line. The color encoding system is known as YCbCr 4:2:2.
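The sample counts above translate directly into storage per active line. The sketch below reads "360 chrominance samples" as 360 for each of Cb and Cr, which is the usual 4:2:2 interpretation; the 8-bit sample depth and the helper names are assumptions for illustration.

```python
LUMA_SAMPLES = 720    # Y' samples per active line
CHROMA_SAMPLES = 360  # samples per active line for each of Cb and Cr


def active_line_samples(luma=LUMA_SAMPLES, chroma=CHROMA_SAMPLES):
    """Total samples in one active line: Y' plus Cb plus Cr."""
    return luma + 2 * chroma


def average_bits_per_pixel(bits_per_sample=8):
    """Average bits spent per luma pixel position in 4:2:2."""
    return active_line_samples() * bits_per_sample / LUMA_SAMPLES


samples = active_line_samples()          # 1440
bits_per_pixel = average_bits_per_pixel()  # 16.0
```

Full-resolution 4:4:4 sampling at the same depth would need 24 bits per pixel, so 4:2:2 saves a third.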

D-1 or 4:2:2 Component Digital is an SMPTE digital recording video standard, introduced in 1986 through efforts by SMPTE engineering committees. It started as a Sony and Bosch – BTS product and was the first major professional digital video format. SMPTE standardized the format within ITU-R 601, also known as Rec. 601, which was derived from SMPTE 125M and EBU 3246-E standards.

YIQ is the color space used by the analog NTSC color TV system. I stands for in-phase, while Q stands for quadrature, referring to the components used in quadrature amplitude modulation. Other TV systems used different color spaces, such as YUV for PAL or YDbDr for SECAM. Later digital standards use the YCbCr color space. These color spaces are all broadly related, and work on the principle of adding a color component, named chrominance, to a black-and-white image, named luma.
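Python's standard library includes a YIQ conversion in the `colorsys` module, which makes it easy to experiment with the idea. Note that `colorsys` uses rounded coefficients, so its values are an approximation of the NTSC matrix rather than a broadcast-accurate implementation.

```python
import colorsys

# A neutral gray carries no chrominance: I and Q come out (near) zero.
gray_yiq = colorsys.rgb_to_yiq(0.5, 0.5, 0.5)

# A saturated color has non-zero I and Q on top of its luma value.
orange_yiq = colorsys.rgb_to_yiq(1.0, 0.5, 0.0)

# Converting back recovers the original R, G, B values.
round_trip = colorsys.yiq_to_rgb(*colorsys.rgb_to_yiq(0.2, 0.4, 0.6))
```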

Serial digital interface (SDI) is a family of digital video interfaces first standardized by SMPTE in 1989. For example, ITU-R BT.656 and SMPTE 259M define digital video interfaces used for broadcast-grade video. A related standard, known as high-definition serial digital interface (HD-SDI), is standardized in SMPTE 292M; this provides a nominal data rate of 1.485 Gbit/s.
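The 1.485 Gbit/s figure can be reproduced from the total raster size. The sketch below assumes a 1080-line format with 2200 total samples per line, 1125 total lines, 30 frames per second, and two multiplexed 10-bit streams (luma and chroma). These raster numbers, and the 625-line standard-definition example, are not stated in the text above.

```python
def serial_bit_rate(samples_per_line, lines_per_frame, frames_per_second,
                    bits_per_sample=10, streams=2):
    """Serial bit rate in bit/s for a raster carried as parallel sample streams."""
    samples_per_second = samples_per_line * lines_per_frame * frames_per_second
    return samples_per_second * bits_per_sample * streams


# High definition: 2200 x 1125 total raster at 30 frames per second.
hd_rate = serial_bit_rate(2200, 1125, 30)  # 1_485_000_000 bit/s

# Standard definition, 625-line: 864 x 625 total raster at 25 frames per second.
sd_rate = serial_bit_rate(864, 625, 25)    # 270_000_000 bit/s
```

The total raster includes blanking intervals, which is why it is larger than the visible picture.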

YCbCr, Y′CbCr, or Y Pb/Cb Pr/Cr, also written as YCBCR or Y′CBCR, is a family of color spaces used as a part of the color image pipeline in video and digital photography systems. Y′ is the luma component and CB and CR are the blue-difference and red-difference chroma components. Y′ (with prime) is distinguished from Y, which is luminance; the prime indicates that light intensity is nonlinearly encoded based on gamma-corrected RGB primaries.
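As a concrete instance, the sketch below maps gamma-corrected R′G′B′ values to 8-bit Y′CbCr code values. It assumes BT.601 luma weights and the studio-range quantization (Y′ in 16 to 235, chroma in 16 to 240 centered on 128); other members of the family use different weights and ranges, and the function name is illustrative.

```python
KR, KB = 0.299, 0.114  # BT.601 red and blue luma weights
KG = 1.0 - KR - KB     # green weight


def rgb_to_ycbcr601(r, g, b):
    """Convert gamma-corrected R'G'B' in [0, 1] to 8-bit studio-range Y'CbCr."""
    y = KR * r + KG * g + KB * b
    pb = 0.5 * (b - y) / (1.0 - KB)  # blue-difference, in [-0.5, 0.5]
    pr = 0.5 * (r - y) / (1.0 - KR)  # red-difference, in [-0.5, 0.5]
    return round(16 + 219 * y), round(128 + 224 * pb), round(128 + 224 * pr)


black = rgb_to_ycbcr601(0.0, 0.0, 0.0)  # (16, 128, 128)
red = rgb_to_ycbcr601(1.0, 0.0, 0.0)    # (81, 90, 240)
```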

HD-MAC was a broadcast television standard proposed by the European Commission in 1986 as part of the Eureka 95 project. It belongs to the Multiplexed Analogue Components (MAC) family of standards. It was an early attempt by the EEC to provide high-definition television (HDTV) in Europe. It is a complex mix of analogue signal, multiplexed with digital sound, and assistance data for decoding (DATV). The video signal was encoded with a modified D2-MAC encoder.

Optical resolution describes the ability of an imaging system to resolve detail in the object that is being imaged. An imaging system may have many individual components, including one or more lenses, and/or recording and display components. Each of these contributes to the optical resolution of the system; the environment in which the imaging is done is often a further important factor.

Multiplexed Analogue Components (MAC) was an analog television standard where luminance and chrominance components were transmitted separately. This was an evolution from older color TV systems where there was interference between chrominance and luminance.

Analog high-definition television has referred to a variety of analog video broadcast television systems with various display resolutions throughout history.

In video, luma represents the brightness in an image. Luma is typically paired with chrominance. Luma represents the achromatic image, while the chroma components represent the color information. Converting R′G′B′ sources into luma and chroma allows for chroma subsampling: because human vision has finer spatial sensitivity to luminance differences than chromatic differences, video systems can store and transmit chromatic information at lower resolution, optimizing perceived detail at a particular bandwidth.
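Luma is a weighted sum of the gamma-corrected R′, G′ and B′ values, and the weights depend on the standard in use. The sketch below compares the BT.601 and BT.709 weights; the coefficients come from those recommendations rather than from the text above, and the helper name is illustrative.

```python
LUMA_WEIGHTS = {
    "BT.601": (0.299, 0.587, 0.114),
    "BT.709": (0.2126, 0.7152, 0.0722),
}


def luma(r, g, b, standard="BT.709"):
    """Weighted sum of gamma-corrected R'G'B' values in [0, 1]."""
    kr, kg, kb = LUMA_WEIGHTS[standard]
    return kr * r + kg * g + kb * b


# The same pure green yields a different luma under each standard.
green_601 = luma(0.0, 1.0, 0.0, "BT.601")
green_709 = luma(0.0, 1.0, 0.0, "BT.709")
```

Because the weights differ, decoding a signal with the wrong standard's matrix shifts colors slightly, most visibly in saturated greens and reds.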

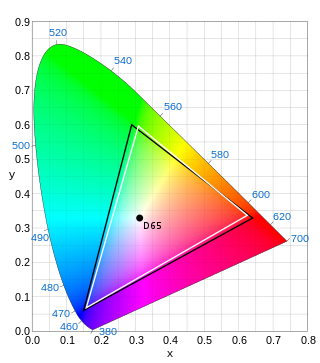

Rec. 709, also known as Rec.709, BT.709, and ITU 709, is a standard developed by ITU-R for image encoding and signal characteristics of high-definition television.

High-definition television (HDTV) describes a television or video system which provides a substantially higher image resolution than the previous generation of technologies. The term has been used since at least 1933; in more recent times, it refers to the generation following standard-definition television (SDTV). It is the current de facto standard video format used in most broadcasts: terrestrial broadcast television, cable television, satellite television.

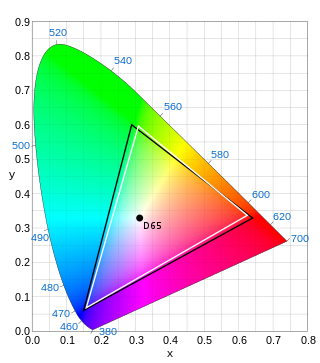

ITU-R Recommendation BT.2020, more commonly known by the abbreviations Rec. 2020 or BT.2020, defines various aspects of ultra-high-definition television (UHDTV) with standard dynamic range (SDR) and wide color gamut (WCG), including picture resolutions, frame rates with progressive scan, bit depths, color primaries, RGB and luma-chroma color representations, chroma subsamplings, and an opto-electronic transfer function. The first version of Rec. 2020 was posted on the International Telecommunication Union (ITU) website on August 23, 2012, and two further editions have been published since then.

Clear-Vision is a Japanese EDTV television system, introduced in the 1990s, that improves audio and video quality while remaining compatible with the existing broadcast standard. Developed to improve analog NTSC, it adds features like progressive scan, ghost cancellation and widescreen image format. A similar system named PALPlus was developed in Europe with the goal of improving analog PAL broadcasts.