In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

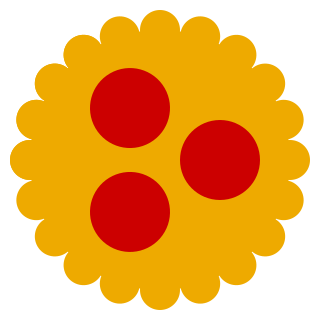

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. The different versions of the photo of the cat on this page show how higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques.

Image compression is a type of data compression applied to digital images, to reduce their cost for storage or transmission. Algorithms may take advantage of visual perception and the statistical properties of image data to provide superior results compared with generic data compression methods which are used for other digital data.

A video codec is software or hardware that compresses and decompresses digital video. In the context of video compression, codec is a portmanteau of encoder and decoder, while a device that only compresses is typically called an encoder, and one that only decompresses is a decoder.

A compression artifact is a noticeable distortion of media caused by the application of lossy compression. Lossy data compression involves discarding some of the media's data so that it becomes small enough to be stored within the desired disk space or transmitted (streamed) within the available bandwidth. If the compressor cannot store enough data in the compressed version, the result is a loss of quality, or introduction of artifacts. The compression algorithm may not be intelligent enough to discriminate between distortions of little subjective importance and those objectionable to the user.

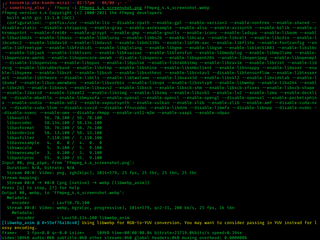

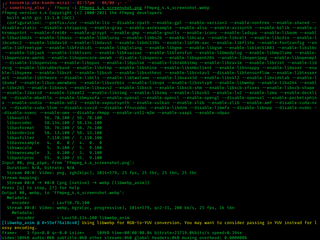

FFmpeg is a free and open-source software project consisting of a suite of libraries and programs for handling video, audio, and other multimedia files and streams. At its core is the command-line ffmpeg tool itself, designed for processing of video and audio files. It is widely used for format transcoding, basic editing, video scaling, video post-production effects and standards compliance.

Generation loss is the loss of quality between subsequent copies or transcodes of data. Anything that reduces the quality of the representation when copying, and would cause further reduction in quality on making a copy of the copy, can be considered a form of generation loss. File size increases are a common result of generation loss, as the introduction of artifacts may actually increase the entropy of the data through each generation.

Video quality is a characteristic of a video passed through a video transmission or processing system that describes perceived video degradation. Video processing systems may introduce some amount of distortion or artifacts in the video signal that negatively impact the user's perception of the system. For many stakeholders in video production and distribution, ensuring video quality is an important task.

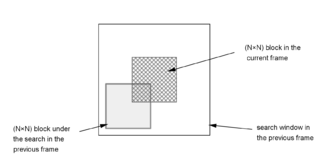

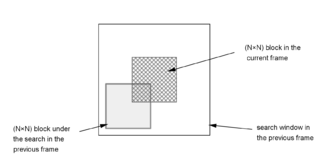

A Block Matching Algorithm is a way of locating matching macroblocks in a sequence of digital video frames for the purposes of motion estimation. The underlying supposition behind motion estimation is that the patterns corresponding to objects and background in a frame of video sequence move within the frame to form corresponding objects on the subsequent frame. This can be used to discover temporal redundancy in the video sequence, increasing the effectiveness of inter-frame video compression by defining the contents of a macroblock by reference to the contents of a known macroblock which is minimally different.

The structural similarityindex measure (SSIM) is a method for predicting the perceived quality of digital television and cinematic pictures, as well as other kinds of digital images and videos. It is also used for measuring the similarity between two images. The SSIM index is a full reference metric; in other words, the measurement or prediction of image quality is based on an initial uncompressed or distortion-free image as reference.

Α video codec is software or a device that provides encoding and decoding for digital video, and which may or may not include the use of video compression and/or decompression. Most codecs are typically implementations of video coding formats.

PGF is a wavelet-based bitmapped image format that employs lossless and lossy data compression. PGF was created to improve upon and replace the JPEG format. It was developed at the same time as JPEG 2000 but with a focus on speed over compression ratio.

Image quality can refer to the level of accuracy with which different imaging systems capture, process, store, compress, transmit and display the signals that form an image. Another definition refers to image quality as "the weighted combination of all of the visually significant attributes of an image". The difference between the two definitions is that one focuses on the characteristics of signal processing in different imaging systems and the latter on the perceptual assessments that make an image pleasant for human viewers.

A human visual system model is used by image processing, video processing and computer vision experts to deal with biological and psychological processes that are not yet fully understood. Such a model is used to simplify the behaviors of what is a very complex system. As our knowledge of the true visual system improves, the model is updated.

Uncompressed video is digital video that either has never been compressed or was generated by decompressing previously compressed digital video. It is commonly used by video cameras, video monitors, video recording devices, and in video processors that perform functions such as image resizing, image rotation, deinterlacing, and text and graphics overlay. It is conveyed over various types of baseband digital video interfaces, such as HDMI, DVI, DisplayPort and SDI. Standards also exist for the carriage of uncompressed video over computer networks.

High Efficiency Video Coding (HEVC), also known as H.265 and MPEG-H Part 2, is a video compression standard designed as part of the MPEG-H project as a successor to the widely used Advanced Video Coding. In comparison to AVC, HEVC offers from 25% to 50% better data compression at the same level of video quality, or substantially improved video quality at the same bit rate. It supports resolutions up to 8192×4320, including 8K UHD, and unlike the primarily 8-bit AVC, HEVC's higher fidelity Main 10 profile has been incorporated into nearly all supporting hardware.

Diagnostically acceptable irreversible compression (DAIC) is the amount of lossy compression which can be used on a medical image to produce a result that does not prevent the reader from using the image to make a medical diagnosis.

Guetzli is a freely licensed JPEG encoder that Jyrki Alakuijala, Robert Obryk, and Zoltán Szabadka have developed in Google's Zürich research branch. The encoder seeks to produce significantly smaller files than prior encoders at equivalent quality, albeit at very low speed. It is named after the Swiss German diminutive expression for biscuits, in line with the names of other compression technology from Google.

Video Multimethod Assessment Fusion (VMAF) is an objective full-reference video quality metric developed by Netflix in cooperation with the University of Southern California, The IPI/LS2N lab Nantes Université, and the Laboratory for Image and Video Engineering (LIVE) at The University of Texas at Austin. It predicts subjective video quality based on a reference and distorted video sequence. The metric can be used to evaluate the quality of different video codecs, encoders, encoding settings, or transmission variants.