MPEG-2 is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard.

Digital audio is a representation of sound recorded in, or converted into, digital form. In digital audio, the sound wave of the audio signal is typically encoded as numerical samples in a continuous sequence. For example, in CD audio, samples are taken 44,100 times per second, each with 16-bit sample depth. Digital audio is also the name for the entire technology of sound recording and reproduction using audio signals that have been encoded in digital form. Following significant advances in digital audio technology during the 1970s and 1980s, it gradually replaced analog audio technology in many areas of audio engineering, record production and telecommunications in the 1990s and 2000s.
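The CD figures above imply a fixed uncompressed data rate, which the following minimal sketch works out. The function name `pcm_bit_rate` is hypothetical, and the two-channel (stereo) count is an assumption added here, since the paragraph gives only the sample rate and bit depth.

```python
def pcm_bit_rate(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Return the uncompressed PCM data rate in bits per second."""
    return sample_rate_hz * bit_depth * channels


# CD audio: 44,100 samples per second at 16 bits per sample.
# Two channels (stereo) is an assumption not stated in the text above.
cd_rate = pcm_bit_rate(44_100, 16, 2)
print(cd_rate)            # 1411200 (bits per second)
print(cd_rate // 8 * 60)  # 10584000 (bytes per minute of audio)
```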

S/PDIF is a type of digital audio interface used in consumer audio equipment to output audio over relatively short distances. The signal is transmitted over either a coaxial cable or a fiber-optic cable with TOSLINK connectors. S/PDIF interconnects components in home theaters and other digital high-fidelity systems.

SMPTE timecode is a set of cooperating standards to label individual frames of video or film with a timecode. The system is defined by the Society of Motion Picture and Television Engineers in the SMPTE 12M specification. SMPTE revised the standard in 2008, turning it into a two-part document: SMPTE 12M-1 and SMPTE 12M-2, including new explanations and clarifications.
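As a rough illustration of frame labelling, the sketch below converts a running frame count into an hours:minutes:seconds:frames label. It assumes non-drop-frame counting at an integer frame rate. Drop-frame timecode, used with 29.97 frame/s video, is deliberately not handled, and the function name `frames_to_timecode` is hypothetical.

```python
def frames_to_timecode(frame_count: int, fps: int = 25) -> str:
    """Format a frame count as HH:MM:SS:FF (non-drop-frame only)."""
    if frame_count < 0 or fps <= 0:
        raise ValueError("frame_count must be >= 0 and fps must be > 0")
    frames = frame_count % fps
    total_seconds = frame_count // fps
    seconds = total_seconds % 60
    minutes = (total_seconds // 60) % 60
    hours = (total_seconds // 3600) % 24  # the hours field wraps after 23
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


print(frames_to_timecode(1_234, fps=25))   # 00:00:49:09
print(frames_to_timecode(90_000, fps=25))  # 01:00:00:00
```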

AC'97 is an audio codec standard developed by Intel Architecture Labs and various codec manufacturers in 1997. The standard was used in motherboards, modems, and sound cards.

Serial digital interface (SDI) is a family of digital video interfaces first standardized by SMPTE in 1989. For example, ITU-R BT.656 and SMPTE 259M define digital video interfaces used for broadcast-grade video. A related standard, known as high-definition serial digital interface (HD-SDI), is standardized in SMPTE 292M; this provides a nominal data rate of 1.485 Gbit/s.
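The nominal 1.485 Gbit/s figure can be reproduced from the raster of a 1080-line, 30 frame/s signal. The raster numbers in this sketch (2200 total samples per line and 1125 total lines, both including blanking, with 10-bit samples in two multiplexed streams) are assumptions supplied for illustration and are not taken from the text above.

```python
# Assumed raster for a 1080-line, 30 frame/s signal (blanking included).
SAMPLES_PER_LINE = 2200
LINES_PER_FRAME = 1125
FRAMES_PER_SECOND = 30
BITS_PER_SAMPLE = 10
STREAMS = 2  # one luma stream plus one multiplexed chroma stream

# Sample clock shared by both streams.
sample_rate_hz = SAMPLES_PER_LINE * LINES_PER_FRAME * FRAMES_PER_SECOND
# Serial bit rate after both 10-bit streams are interleaved.
serial_bit_rate = sample_rate_hz * BITS_PER_SAMPLE * STREAMS

print(sample_rate_hz)   # 74250000 (74.25 MHz)
print(serial_bit_rate)  # 1485000000 (1.485 Gbit/s)
```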

The Digital Audio Stationary Head or DASH standard is a reel-to-reel, digital audio tape format introduced by Sony in early 1982 for high-quality multitrack studio recording and mastering, as an alternative to analog recording methods. DASH is capable of recording two channels of audio on a quarter-inch tape, and 24 or 48 tracks on 1⁄2-inch-wide (13 mm) tape on open reels of up to 14 inches. The data is recorded on the tape linearly, with a stationary recording head, as opposed to the DAT format, where data is recorded helically with a rotating head, in the same manner as a VCR. The audio data is encoded as linear PCM and boasts strong cyclic redundancy check (CRC) error correction, allowing the tape to be physically edited with a razor blade as analog tape would, e.g. by cutting and splicing, and played back with no loss of signal. In a two-track DASH recorder, the digital data is recorded onto the tape across nine data tracks: eight for the digital audio data and one for the CRC data; there is also provision for two linear analog cue tracks and one additional linear analog track dedicated to recording time code.

I²S is an electrical serial bus interface standard used for connecting digital audio devices. It is used to communicate PCM audio data between integrated circuits in an electronic device. The I²S bus separates clock and serial data signals, resulting in simpler receivers than those required for asynchronous communications systems that need to recover the clock from the data stream. I²S is alternatively spelled I2S or IIS. Despite the similar name, I²S is unrelated to the bidirectional I²C (IIC) bus.
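Because the clock travels on its own line, a receiver only has to sample the data line once per bit-clock cycle. The sketch below is a simplified model of one stereo frame, not a hardware-accurate implementation. It assumes the common convention that a word-select line is 0 for the left channel and 1 for the right, that words are sent most significant bit first, and that word select changes one bit clock before the word it announces. The function names are hypothetical.

```python
from typing import List, Tuple


def i2s_encode_frame(left: int, right: int, bits: int = 16) -> List[Tuple[int, int]]:
    """Return one (word_select, serial_data) pair per bit-clock cycle."""
    mask = (1 << bits) - 1
    words = (left & mask, right & mask)  # keep the low `bits` bits of each word
    # Serial data: the left word, then the right word, MSB first.
    sd = [(w >> (bits - 1 - i)) & 1 for w in words for i in range(bits)]
    n = 2 * bits
    # Word select leads the data by one bit clock (assumed convention).
    ws = [0 if (i + 1) % n < bits else 1 for i in range(n)]
    return list(zip(ws, sd))


def bits_to_word(bit_list: List[int]) -> int:
    """Reassemble an MSB-first list of bits into an unsigned integer."""
    word = 0
    for bit in bit_list:
        word = (word << 1) | bit
    return word


def i2s_decode_frame(frame: List[Tuple[int, int]], bits: int = 16) -> Tuple[int, int]:
    """Recover (left, right) unsigned words from a frame that starts at the left MSB."""
    sd = [bit for _, bit in frame]
    return bits_to_word(sd[:bits]), bits_to_word(sd[bits:2 * bits])


frame = i2s_encode_frame(0b1010, 0b0011, bits=4)
print(frame)
print(i2s_decode_frame(frame, bits=4))  # (10, 3)
```

The decoder never has to recover timing from the data itself: it reads one bit per entry, which stands in for one bit-clock edge.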

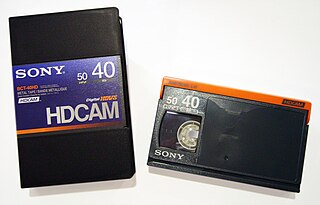

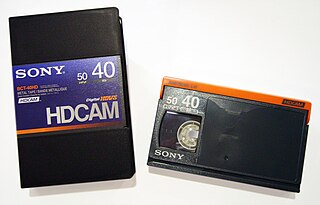

HDCAM is a high-definition digital videocassette format, a version of Digital Betacam introduced in 1997. It records 8-bit discrete cosine transform (DCT) compressed video with 3:1:1 sampling at a 1080i-compatible, down-sampled resolution of 1440×1080; later models added 24p and 23.976 progressive segmented frame (PsF) modes. The HDCAM codec uses rectangular pixels, so the recorded 1440×1080 content is upsampled to 1920×1080 on playback. The recorded video bit rate is 144 Mbit/s. Audio is recorded as four channels of AES3 20-bit, 48 kHz digital audio. Like Betacam, HDCAM tapes were produced in small and large cassette sizes; the small cassette uses the same form factor as the original Betamax. The main competitor to HDCAM was the DVCPRO HD format offered by Panasonic, which uses a similar compression scheme and bit rates ranging from 40 Mbit/s to 100 Mbit/s depending on frame rate.
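The rectangular-pixel arithmetic can be checked directly. This small sketch derives the pixel aspect ratio implied by stretching a 1440-sample line to 1920 samples; the variable names are illustrative only.

```python
from fractions import Fraction

RECORDED_WIDTH = 1440
PLAYBACK_WIDTH = 1920
HEIGHT = 1080

# Each recorded pixel must be this much wider than it is tall.
pixel_aspect_ratio = Fraction(PLAYBACK_WIDTH, RECORDED_WIDTH)
# Displayed picture shape once the recorded pixels are stretched.
display_aspect_ratio = Fraction(RECORDED_WIDTH, HEIGHT) * pixel_aspect_ratio

print(pixel_aspect_ratio)    # 4/3
print(display_aspect_ratio)  # 16/9
```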

AES47 is a standard which describes a method for transporting AES3 professional digital audio streams over Asynchronous Transfer Mode (ATM) networks.

Dolby Digital Plus, also known as Enhanced AC-3, is a digital audio compression scheme developed by Dolby Labs for the transport and storage of multi-channel digital audio. It is a successor to Dolby Digital (AC-3), and has a number of improvements over that codec, including support for a wider range of data rates, an increased channel count, and multi-program support, as well as additional tools (algorithms) for representing compressed data and counteracting artifacts. Whereas Dolby Digital (AC-3) supports up to five full-bandwidth audio channels at a maximum bitrate of 640 kbit/s, E-AC-3 supports up to 15 full-bandwidth audio channels at a maximum bitrate of 6.144 Mbit/s.

SMPTE 292 is a digital video transmission line standard published by the Society of Motion Picture and Television Engineers (SMPTE). This technical standard is usually referred to as HD-SDI; it is part of a family of standards that define a Serial Digital Interface based on a coaxial cable, intended to be used for transport of uncompressed digital video and audio in a television studio environment.

Ancillary data is data that has been added to given data and uses the same form of transport. Common examples are cover art images for media files or streams, or digital data added to radio or television broadcasts.

AES52 is a standard first published by the Audio Engineering Society in March 2006 that specifies the insertion of unique identifiers into the AES3 digital audio transport structure.

Multichannel Audio Digital Interface (MADI), standardized as AES10 by the Audio Engineering Society (AES), defines the data format and electrical characteristics of an interface that carries multiple channels of digital audio. The AES first documented the MADI standard in AES10-1991 and updated it in AES10-2003 and AES10-2008. The MADI standard includes a bit-level description and has features in common with the two-channel AES3 interface.

McASP is an acronym for Multichannel Audio Serial Port, a communication peripheral found in Texas Instruments' family of digital signal processors (DSPs) and microcontroller units (MCUs).

The McASP functions as a general-purpose audio serial port optimized for the needs of multichannel audio applications. Depending on the implementation, the McASP may be useful for time-division multiplexed (TDM) streams, Inter-Integrated Sound (I2S) protocols, and intercomponent digital audio interface transmission (DIT). Some implementations, however, support only the I2S protocol.

The McASP consists of transmit and receive sections that may operate synchronized, or completely independently with separate master clocks, bit clocks, and frame syncs, and using different transmit modes with different bit-stream formats. The McASP module also includes up to 16 serializers that can be individually enabled to either transmit or receive. In addition, all of the McASP pins can be configured as general-purpose input/output (GPIO) pins.

The ADAT Lightpipe, officially the ADAT Optical Interface, is a standard for the transfer of digital audio between equipment. It was originally developed by Alesis but has since become widely accepted, with many third party hardware manufacturers including Lightpipe interfaces on their equipment. The protocol has become so popular that the term ADAT is now often used to refer to the transfer standard rather than to the Alesis Digital Audio Tape itself.

The Serial Low-power Inter-chip Media Bus (SLIMbus) is a standard interface between baseband or application processors and peripheral components in mobile terminals. It was developed within the MIPI Alliance, founded by ARM, Nokia, STMicroelectronics and Texas Instruments. The interface supports many digital audio components simultaneously, and carries multiple digital audio data streams at differing sample rates and bit widths.

AES67 is a technical standard for audio over IP and audio over Ethernet (AoE) interoperability. The standard was developed by the Audio Engineering Society and first published in September 2013. It is a layer 3 protocol suite based on existing standards and is designed to allow interoperability between various IP-based audio networking systems such as RAVENNA, Livewire, Q-LAN and Dante.

AES50 is an Audio over Ethernet protocol for multichannel digital audio. It is defined by the AES50-2011 standard for High-resolution multi-channel audio interconnection (HRMAI).