A microphone array is any number of microphones operating in tandem. Microphone arrays have many applications.

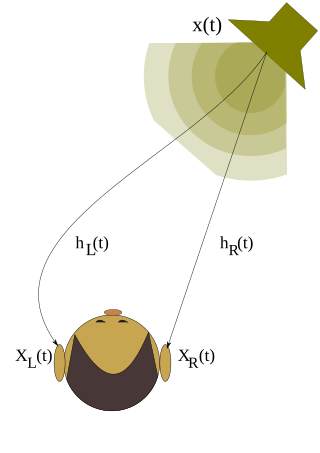

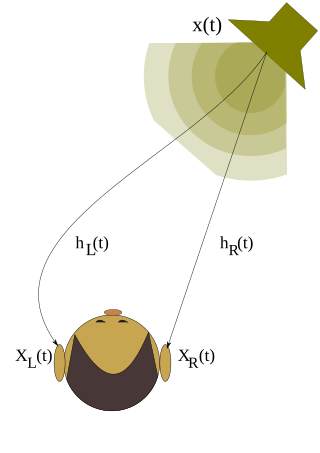

Binaural recording is a method of recording sound that uses two microphones, arranged with the intent to create a 3D stereo sound sensation for the listener of actually being in the room with the performers or instruments. This effect is often created using a technique known as dummy head recording, wherein a mannequin head is fitted with a microphone in each ear. Binaural recording is intended for replay using headphones and will not translate properly over stereo speakers. The idea of three-dimensional or "internal" sound has also been applied in other technologies, such as stethoscopes that create "in-head" acoustics and IMAX films that create a three-dimensional acoustic experience.

A head-related transfer function (HRTF) is a response that characterizes how an ear receives a sound from a point in space. As sound strikes the listener, the size and shape of the head, ears, ear canal, density of the head, size and shape of nasal and oral cavities, all transform the sound and affect how it is perceived, boosting some frequencies and attenuating others. Generally speaking, the HRTF boosts frequencies from 2–5 kHz with a primary resonance of +17 dB at 2,700 Hz. But the response curve is more complex than a single bump, affects a broad frequency spectrum, and varies significantly from person to person.

Ambisonics is a full-sphere surround sound format: in addition to the horizontal plane, it covers sound sources above and below the listener.
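As a rough illustration of how a full-sphere format can carry height as well as horizontal direction, the sketch below encodes a mono sample into four first-order components. It assumes the traditional B-format convention (W scaled by 1/√2, azimuth measured counter-clockwise from the front, elevation measured upward); the function name `encode_first_order` is hypothetical, and other normalizations and channel orderings exist.

```python
import math


def encode_first_order(sample, azimuth_rad, elevation_rad):
    """Encode one mono sample into first-order components (W, X, Y, Z).

    Assumes the traditional B-format weighting, where the omnidirectional
    W channel is attenuated by 1/sqrt(2).
    """
    w = sample / math.sqrt(2.0)                                   # omnidirectional
    x = sample * math.cos(azimuth_rad) * math.cos(elevation_rad)  # front-back
    y = sample * math.sin(azimuth_rad) * math.cos(elevation_rad)  # left-right
    z = sample * math.sin(elevation_rad)                          # up-down
    return w, x, y, z


# A source directly overhead appears only in W and Z.
w, x, y, z = encode_first_order(1.0, 0.0, math.pi / 2)
```

The Z component is what distinguishes this from a purely horizontal representation: a source above or below the listener changes Z while the horizontal components shrink.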

Simultaneous localization and mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. While this initially appears to be a chicken-or-egg problem, several algorithms are known to solve it, at least approximately, in tractable time for certain environments. Popular approximate solution methods include the particle filter, extended Kalman filter, covariance intersection, and GraphSLAM. SLAM algorithms are based on concepts in computational geometry and computer vision, and are used in robot navigation, robotic mapping, and odometry for virtual reality or augmented reality.

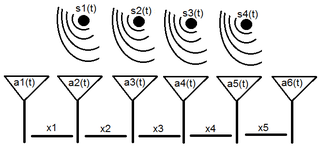

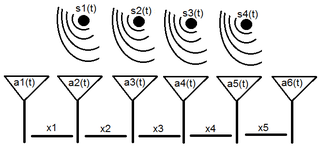

A sensor array is a group of sensors, usually deployed in a certain geometric pattern, used for collecting and processing electromagnetic or acoustic signals. The advantage of using a sensor array over a single sensor is that an array adds new dimensions to the observation, helping to estimate more parameters and improve the estimation performance. For example, an array of radio antenna elements used for beamforming can increase antenna gain in the direction of the signal while decreasing the gain in other directions, i.e., increasing the signal-to-noise ratio (SNR) by amplifying the signal coherently. Another application of sensor arrays is estimating the direction of arrival of impinging electromagnetic waves. The related processing method is called array signal processing. A third example is chemical sensor arrays, which use multiple chemical sensors for fingerprint detection in complex mixtures or sensing environments. Applications of array signal processing include radar/sonar, wireless communications, seismology, machine condition monitoring, astronomical observations, fault diagnosis, etc.
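The SNR benefit of coherent combining can be checked numerically. The following is a minimal sketch under simplifying assumptions that are not from the text: every sensor sees the same, already time-aligned signal, and the noise is independent, unit-variance Gaussian noise on each sensor. Under those assumptions, averaging N sensors should improve SNR by about 10·log10(N) dB.

```python
import numpy as np


def snr_db(signal, noisy):
    """SNR in dB, given the clean signal and a noisy observation of it."""
    noise = noisy - signal
    return 10.0 * np.log10(np.mean(signal**2) / np.mean(noise**2))


rng = np.random.default_rng(0)
n_sensors, n_samples = 16, 20000
t = np.arange(n_samples)
signal = np.sin(2 * np.pi * 0.01 * t)

# Each row is one sensor: the same signal plus independent noise.
received = signal + rng.normal(0.0, 1.0, size=(n_sensors, n_samples))

single_db = snr_db(signal, received[0])               # one sensor alone
combined_db = snr_db(signal, received.mean(axis=0))   # coherent average
gain_db = combined_db - single_db
expected_db = 10.0 * np.log10(n_sensors)
```

The signal adds in amplitude across sensors while the independent noise adds only in power, which is where the gain comes from. With correlated noise or misaligned signals the gain would be smaller.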

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance.

Array processing is a wide area of research in the field of signal processing that extends from the simplest form of one-dimensional line arrays to two- and three-dimensional array geometries. An array structure can be defined as a set of sensors that are spatially separated, e.g. radio antenna and seismic arrays. The sensors used for a specific problem may vary widely, for example microphones, accelerometers, and telescopes. However, many similarities exist, the most fundamental of which may be an assumption of wave propagation. Wave propagation means there is a systematic relationship between the signals received on spatially separated sensors. By creating a physical model of the wave propagation, or in machine learning applications a training data set, the relationships between the signals received on spatially separated sensors can be leveraged for many applications.
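One of the simplest physical models of this kind is a far-field plane wave crossing a uniform line array, where each sensor receives the same waveform with a delay proportional to its position. The sketch below assumes that model, a sound speed of 343 m/s, and an angle measured from broadside; the function name `plane_wave_delays` is hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def plane_wave_delays(n_sensors, spacing_m, angle_rad, c=SPEED_OF_SOUND):
    """Arrival delay (seconds) at each sensor of a uniform line array.

    Assumes a far-field plane wave; angle_rad is measured from broadside,
    so 0 means the wave reaches all sensors at the same time.
    """
    positions = np.arange(n_sensors) * spacing_m
    return positions * np.sin(angle_rad) / c


broadside = plane_wave_delays(4, 0.0343, 0.0)
endfire = plane_wave_delays(4, 0.0343, np.pi / 2)
```

Knowing these delays for a hypothesized direction is what lets later processing stages align and combine the sensor signals.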

Machine olfaction is the automated simulation of the sense of smell. An emerging application in modern engineering, it involves the use of robots or other automated systems to analyze air-borne chemicals. Such an apparatus is often called an electronic nose or e-nose. The development of machine olfaction is complicated by the fact that e-nose devices to date have responded to a limited number of chemicals, whereas odors are produced by unique sets of odorant compounds. The technology, though still in the early stages of development, promises many applications, such as: quality control in food processing, detection and diagnosis in medicine, detection of drugs, explosives and other dangerous or illegal substances, disaster response, and environmental monitoring.

Acoustic location is a method of determining the position of an object or sound source by using sound waves. Location can take place in gases, liquids, and in solids.
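A common building block for acoustic location is estimating the time difference of arrival between two sensors. The sketch below does this with a plain cross-correlation on synthetic data; the function name `estimate_lag`, the 16 kHz sample rate, and the 343 m/s sound speed are assumptions chosen for illustration, and practical systems often use more robust weighted correlations.

```python
import numpy as np


def estimate_lag(reference, delayed):
    """Lag (in samples) by which `delayed` trails `reference`.

    Uses the peak of the full cross-correlation of two equal-length signals.
    """
    corr = np.correlate(delayed, reference, mode="full")
    return int(np.argmax(corr)) - (len(reference) - 1)


rng = np.random.default_rng(2)
fs = 16000                                   # assumed sample rate, Hz
reference = rng.normal(size=500)             # signal at the first sensor
true_lag = 7
# The second sensor hears the same signal 7 samples later.
delayed = np.concatenate([np.zeros(true_lag), reference[:-true_lag]])

lag = estimate_lag(reference, delayed)
path_difference_m = lag / fs * 343.0         # extra distance to second sensor
```

A single time difference constrains the source to a hyperbola (or a bearing, in the far field); locating a source in position generally needs several sensor pairs.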

In land warfare, artillery sound ranging is a method of determining the coordinates of a hostile battery using data derived from the sound of its guns firing, a form of target acquisition.

Geophysical survey is the systematic collection of geophysical data for spatial studies. Detection and analysis of geophysical signals form the core of geophysical signal processing. The magnetic and gravitational fields emanating from the Earth's interior hold essential information concerning seismic activities and the internal structure. Hence, detection and analysis of the electric and magnetic fields are crucial. As electromagnetic and gravitational waves are multi-dimensional signals, all the 1-D transformation techniques can be extended for the analysis of these signals as well. Hence this article also discusses multi-dimensional signal processing techniques.

Time-domain thermoreflectance is a method by which the thermal properties of a material can be measured, most importantly thermal conductivity. This method can be applied most notably to thin film materials, which have properties that vary greatly when compared to the same materials in bulk. The idea behind this technique is that once a material is heated up, the change in the reflectance of the surface can be utilized to derive the thermal properties. The reflectivity is measured with respect to time, and the data received can be matched to a model with coefficients that correspond to thermal properties.

An acoustic camera is an imaging device used to locate sound sources and to characterize them. It consists of a group of microphones, also called a microphone array, from which signals are simultaneously collected and processed to form a representation of the location of the sound sources.

Perceptual-based 3D sound localization is the application of knowledge of the human auditory system to develop 3D sound localization technology.

The spectral correlation density (SCD), sometimes also called the cyclic spectral density or spectral correlation function, is a function that describes the cross-spectral density of all pairs of frequency-shifted versions of a time-series. The spectral correlation density applies only to cyclostationary processes because stationary processes do not exhibit spectral correlation. Spectral correlation has been used both in signal detection and signal classification. The spectral correlation density is closely related to each of the bilinear time-frequency distributions, but is not considered one of Cohen's class of distributions.
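For reference, one standard continuous-time formulation is sketched below, assuming a signal $x(t)$, cycle frequency $\alpha$, and lag $\tau$ (symbols introduced here, not taken from the text). The cyclic autocorrelation is defined first, and the spectral correlation density is its Fourier transform over the lag:

$$
R_x^{\alpha}(\tau) = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} x\!\left(t + \tfrac{\tau}{2}\right) x^{*}\!\left(t - \tfrac{\tau}{2}\right) e^{-j 2 \pi \alpha t} \, dt
$$

$$
S_x^{\alpha}(f) = \int_{-\infty}^{\infty} R_x^{\alpha}(\tau) \, e^{-j 2 \pi f \tau} \, d\tau
$$

At $\alpha = 0$ these reduce to the ordinary autocorrelation and power spectral density. For a stationary process, $R_x^{\alpha}(\tau)$ vanishes for every $\alpha \neq 0$, which is the sense in which such processes show no spectral correlation.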

Beamforming is a signal processing technique used to spatially select propagating waves. To implement beamforming on digital hardware, the received signals need to be discretized. This introduces quantization error, which perturbs the array pattern. For this reason, the sample rate must generally be much greater than the Nyquist rate.
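One way to see why a higher sample rate helps is to assume that the perturbation comes from rounding steering delays to whole sample periods, which is an interpretation made here rather than something the text states. Under that assumption the worst-case timing error is half a sample period, so it shrinks as the rate rises. The helper name and the example delays are hypothetical.

```python
import numpy as np


def max_delay_rounding_error(delays_s, fs):
    """Largest timing error when delays are rounded to whole samples at fs."""
    rounded = np.round(delays_s * fs) / fs
    return float(np.max(np.abs(rounded - delays_s)))


# Hypothetical steering delays for a 4-element array, in seconds.
delays = np.array([0.0, 7.3e-5, 1.46e-4, 2.19e-4])

error_16k = max_delay_rounding_error(delays, 16_000)
error_192k = max_delay_rounding_error(delays, 192_000)
```

The error can never exceed `0.5 / fs`, so oversampling tightens the bound. Fractional-delay filtering is a common alternative when raising the sample rate is impractical.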

Steered-response power (SRP) is a family of acoustic source localization algorithms that can be interpreted as a beamforming-based approach that searches for the candidate position or direction that maximizes the output of a steered delay-and-sum beamformer.
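The search described above can be sketched on synthetic data. This is a minimal illustration, not a reference implementation: it assumes a far-field source, a 4-microphone line array with 5 cm spacing, a 343 m/s sound speed, and a coarse 5-degree grid of candidate directions. All names are hypothetical, and no weighting such as PHAT is applied.

```python
import numpy as np

C = 343.0  # assumed speed of sound, m/s


def steered_power(frames, mic_positions, angle_rad, fs):
    """Output power of a delay-and-sum beamformer steered to angle_rad."""
    n = frames.shape[1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    delays = mic_positions * np.sin(angle_rad) / C   # far-field delay model
    spectra = np.fft.rfft(frames, axis=1)
    # Advance each channel by its modelled delay so the channels line up.
    aligned = spectra * np.exp(2j * np.pi * freqs * delays[:, None])
    summed = aligned.mean(axis=0)
    return float(np.sum(np.abs(summed) ** 2))


def srp_estimate(frames, mic_positions, candidates, fs):
    """Return the candidate angle with the highest steered power."""
    powers = [steered_power(frames, mic_positions, a, fs) for a in candidates]
    return candidates[int(np.argmax(powers))]


# Synthetic scene: white-noise source arriving from 30 degrees.
rng = np.random.default_rng(1)
fs, n = 16000, 1024
mic_positions = np.arange(4) * 0.05
true_angle = np.deg2rad(30.0)
source = rng.normal(size=n)
freqs = np.fft.rfftfreq(n, d=1.0 / fs)
true_delays = mic_positions * np.sin(true_angle) / C
# Delay the source per microphone by applying a phase ramp in frequency.
delayed_spectra = np.fft.rfft(source) * np.exp(
    -2j * np.pi * freqs * true_delays[:, None]
)
frames = np.fft.irfft(delayed_spectra, n=n, axis=1)

candidates = np.deg2rad(np.arange(-90, 91, 5))
estimate_deg = np.rad2deg(srp_estimate(frames, mic_positions, candidates, fs))
```

The steered power peaks where the modelled delays cancel the true ones, because only then do all frequency bins add coherently. A finer candidate grid gives finer resolution at proportionally higher cost.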

3D sound reconstruction is the application of reconstruction techniques to 3D sound localization technology. These methods of reconstructing three-dimensional sound are used to recreate sounds to match natural environments and provide spatial cues of the sound source. They are also used to create 3D visualizations of a sound field that include physical aspects of sound waves such as direction, pressure, and intensity. This technology is used in entertainment to reproduce a live performance through computer speakers. It is also used in military applications to determine the location of sound sources. Reconstructing sound fields is also applicable to medical imaging to measure points in ultrasound.

3D sound is most commonly defined as the sounds of everyday human experience. Sound arrives at the ears from every direction and distance, which contribute to the three-dimensional aural image of what humans hear. Scientists and engineers who work with 3D sound work to accurately synthesize the complexity of real-world sounds.