
Perceptual-based 3D sound localization is the application of knowledge of the human auditory system to develop 3D sound localization technology.

Human listeners combine information from two ears to localize and separate sound sources originating in different locations, a process called binaural hearing. The powerful signal processing methods found in the neural systems and brains of humans and other animals are flexible, environmentally adaptable, [1] and take place rapidly and seemingly without effort. [2] Emulating the mechanisms of binaural hearing can improve recognition accuracy and signal separation in DSP algorithms, especially in noisy environments. [3] Furthermore, by understanding and exploiting biological mechanisms of sound localization, virtual sound scenes may be rendered with more perceptually relevant methods, allowing listeners to accurately perceive the locations of auditory events. [4] One way to achieve perceptual-based sound localization is through sparse approximations of anthropometric features. Perceptual-based sound localization may be used to enhance and supplement robotic navigation and environment recognition capability. [1] It is also used to create virtual auditory spaces, which are widely implemented in hearing aids.

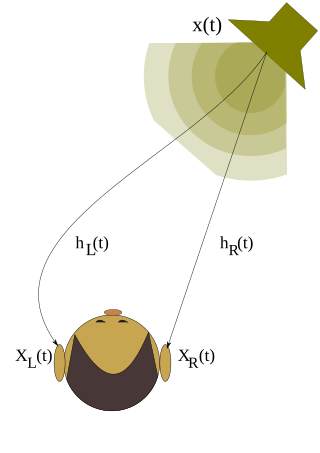

While the relationship between human perception of sound and various attributes of the sound field is not yet well understood, [2] DSP algorithms for sound localization are able to employ several mechanisms found in neural systems, including the interaural time difference (ITD, the difference in arrival time of a sound between two locations), the interaural intensity difference (IID, the difference in intensity of a sound between two locations), artificial pinnae, the precedence effect, and head-related transfer functions (HRTF). When localizing 3D sound in the spatial domain, one should take into account that the incoming sound signal may be reflected, diffracted and scattered by the listener's upper body, including the shoulders, head and pinnae. Localization also depends on the direction of the sound source. [5]

Brüel & Kjær's Head and Torso Simulator (HATS) is a mannequin prototype with built-in ear and mouth simulators that provides a realistic reproduction of the acoustic properties of an average adult human head and torso. It is designed for electro-acoustic tests of devices such as headsets, audio conference devices, microphones, headphones and hearing aids. Various existing approaches are based on this structural model. [6]

It is essential to be able to analyze the distance and intensity of various sources in the spatial domain. Each such sound source can be tracked using probabilistic temporal integration, based on data obtained through a microphone array and a particle filtering tracker. In this approach, the probability density function (PDF) describing the location of each source is represented as a set of particles to which different weights (probabilities) are assigned. The choice of particle filtering over Kalman filtering is further justified by the non-Gaussian probabilities arising from false detections and multiple sources. [7]
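As a rough illustration of the weighted-particle idea, the following sketch tracks a single source azimuth in one dimension. It is not the tracker from the cited work: the random-walk motion model, the Gaussian-plus-uniform likelihood (the uniform part stands in for false detections), and all names and parameter values (`particle_filter_step`, `track_azimuth`, `motion_std`, `obs_std`, `p_false`) are assumptions chosen for the example.

```python
import numpy as np

def particle_filter_step(particles, weights, observation, rng,
                         motion_std=2.0, obs_std=5.0, p_false=0.1):
    """One predict/update/resample cycle for a 1-D azimuth estimate (degrees)."""
    # Predict: assumed random-walk motion model.
    particles = particles + rng.normal(0.0, motion_std, size=particles.shape)
    # Update: mixture likelihood. The uniform term (over an assumed 180-degree
    # range) models false detections, which makes the posterior non-Gaussian.
    gauss = np.exp(-0.5 * ((observation - particles) / obs_std) ** 2)
    gauss /= obs_std * np.sqrt(2.0 * np.pi)
    likelihood = (1.0 - p_false) * gauss + p_false / 180.0
    weights = weights * likelihood
    weights = weights / weights.sum()
    # Resample when the effective number of particles drops too low.
    if 1.0 / np.sum(weights ** 2) < 0.5 * len(particles):
        idx = rng.choice(len(particles), size=len(particles), p=weights)
        particles = particles[idx]
        weights = np.full(len(particles), 1.0 / len(particles))
    return particles, weights

def track_azimuth(observations, n_particles=500, seed=0):
    """Return the weighted-mean azimuth estimate after each observation."""
    rng = np.random.default_rng(seed)
    particles = rng.uniform(-90.0, 90.0, n_particles)
    weights = np.full(n_particles, 1.0 / n_particles)
    estimates = []
    for z in observations:
        particles, weights = particle_filter_step(particles, weights, z, rng)
        estimates.append(float(np.sum(weights * particles)))
    return estimates

# Noisy detections around 40 degrees, with one false detection at -70 degrees.
observations = [38.0, 42.0] * 10 + [-70.0] + [38.0, 42.0] * 5
estimates = track_azimuth(observations)
```

Because the likelihood never drops below the uniform floor, the isolated detection at -70 degrees barely moves the estimate, which is the kind of behaviour a purely Gaussian (Kalman) update would not give.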

According to the duplex theory, ITDs contribute more to the localisation of low-frequency sounds (below 1 kHz), [4] while ILDs are used in the localisation of high-frequency sounds. These approaches can be applied to selective reconstructions of spatialized signals, where spectrotemporal components believed to be dominated by the desired sound source are identified and isolated through the short-time Fourier transform (STFT). Modern systems typically compute the STFT of the incoming signal from two or more microphones, and estimate the ITD of each spectrotemporal component by comparing the phases of the STFTs. An advantage of this approach is that it may be generalized to more than two microphones, which can improve accuracy in three dimensions and remove the front-back localization ambiguity that occurs with only two ears or microphones. [1] Another advantage is that the ITD is relatively strong and easy to obtain without biomimetic instruments such as dummy heads and artificial pinnae, though these may still be used to enhance amplitude disparities. [1] The HRTF phase response is mostly linear, and listeners are insensitive to the details of the interaural phase spectrum as long as the interaural time delay (ITD) of the combined low-frequency part of the waveform is maintained.
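The phase-comparison step can be sketched as follows. This is a minimal example under stated assumptions, not a reference implementation: it uses a hand-rolled STFT with a Hann window, restricts the fit to bins below 1 kHz so that the phase does not wrap for the delays considered, and pools all frames into one ITD instead of estimating one per spectrotemporal component. The function name `estimate_itd` and its parameters are hypothetical.

```python
import numpy as np

def estimate_itd(left, right, fs, frame=512, hop=256, f_max=1000.0):
    """Estimate the ITD in seconds from STFT phase differences.

    Positive values mean the right channel lags the left one.
    """
    window = np.hanning(frame)
    freqs = np.fft.rfftfreq(frame, d=1.0 / fs)
    cross = np.zeros(len(freqs), dtype=complex)
    # Accumulate the cross-spectrum over all STFT frames.
    for start in range(0, len(left) - frame + 1, hop):
        L = np.fft.rfft(window * left[start:start + frame])
        R = np.fft.rfft(window * right[start:start + frame])
        cross += L * np.conj(R)
    # Use only low-frequency bins, where phase = 2*pi*f*ITD stays below pi.
    band = (freqs > 0) & (freqs <= f_max)
    omega = 2.0 * np.pi * freqs[band]
    phase = np.angle(cross[band])
    weight = np.abs(cross[band])
    # Magnitude-weighted least-squares fit of phase = omega * ITD.
    return float(np.sum(weight * omega * phase) / np.sum(weight * omega ** 2))

# Synthetic test signal: white noise, right channel delayed by 5 samples.
fs = 16000
delay_samples = 5
rng = np.random.default_rng(0)
left = rng.standard_normal(fs)
right = np.concatenate([np.zeros(delay_samples), left[:-delay_samples]])
itd = estimate_itd(left, right, fs)
```

A per-component variant would keep `phase / omega` for each frame and bin instead of summing over frames; with more than two microphones the same fit can be repeated for each microphone pair.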

Interaural level differences (ILDs) represent the difference in sound pressure level reaching the two ears. They provide salient cues for localizing high-frequency sounds in space, and populations of neurons that are sensitive to ILD are found at almost every synaptic level from brain stem to cortex. These cells are predominantly excited by stimulation of one ear and predominantly inhibited by stimulation of the other ear, such that the magnitude of their response is determined in large part by the intensities at the two ears. This gives rise to the concept of resonant damping. [8] ILD is most useful for high-frequency sounds because low-frequency sounds are not attenuated much by the head. ILD (also known as interaural intensity difference, IID) arises when the sound source is not centred: the listener's head partially shadows the ear opposite the source, diminishing the intensity of the sound at that ear (particularly at higher frequencies). The pinnae filter the sound in a directionally dependent way, which is particularly useful in determining whether a sound comes from above, below, in front, or behind.

Interaural time and level differences (ITD, ILD) play a role in azimuth perception but cannot explain vertical localization. According to the duplex theory, ITDs contribute more to the localisation of low-frequency sounds (below 1 kHz), while ILDs are used in the localisation of high-frequency sounds. [8] The ILD arises because a sound coming from a source located to one side of the head has a higher intensity, or is louder, at the ear nearest the sound source. One can therefore create the illusion of a sound source emanating from one side of the head merely by adjusting the relative level of the sounds that are fed to two separated speakers or headphones. This is the basis of the commonly used pan control.
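A pan control of this kind can be sketched in a few lines. The constant-power (sine/cosine) pan law used here is one common choice and is an assumption of the example, as are the names `pan` and `ild_db`; the text above only requires that the relative level of the two channels be adjusted.

```python
import numpy as np

def pan(mono, position):
    """Split a mono signal into (left, right) using a constant-power pan law.

    position ranges from -1.0 (full left) through 0.0 (centre) to 1.0 (full right).
    """
    theta = (position + 1.0) * np.pi / 4.0  # maps [-1, 1] to [0, pi/2]
    return np.cos(theta) * mono, np.sin(theta) * mono

def ild_db(left, right):
    """Level difference in dB between channels (positive when right is louder)."""
    rms_left = np.sqrt(np.mean(left ** 2))
    rms_right = np.sqrt(np.mean(right ** 2))
    return 20.0 * np.log10(rms_right / rms_left)

# A 440 Hz tone panned to the centre and halfway to the right.
t = np.arange(4800) / 48000.0
tone = np.sin(2.0 * np.pi * 440.0 * t)
centre_left, centre_right = pan(tone, 0.0)
half_left, half_right = pan(tone, 0.5)
centre_ild = ild_db(centre_left, centre_right)
half_right_ild = ild_db(half_left, half_right)
```

With this law the summed power of the two channels stays constant as the position changes, so the source appears to move without getting louder or quieter.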

Interaural Phase Difference (IPD) refers to the difference in the phase of a wave that reaches each ear, and is dependent on the frequency of the sound wave and the interaural time differences (ITD). [8]
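For a pure tone of frequency $f$, this dependence can be written explicitly. The relation below is the standard link between a time delay and a phase shift, given here as an illustration rather than taken from the cited source; the phase is only defined up to a multiple of $2\pi$:

$$
\mathrm{IPD}(f) = 2\pi f \cdot \mathrm{ITD} \pmod{2\pi}
$$

For example, a 500 Hz tone with an ITD of 0.5 ms gives $\mathrm{IPD} = 2\pi \cdot 500 \cdot 0.0005 = \pi/2$, a quarter of a cycle. At higher frequencies the product $2\pi f \cdot \mathrm{ITD}$ can exceed $\pi$, at which point the IPD alone no longer identifies the ITD unambiguously.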

Once the brain has analyzed IPD, ITD, and ILD, the location of the sound source can be determined with relative accuracy.

The precedence effect is the observation that sound localization can be dominated by the components of a complex sound that are the first to arrive. By allowing the direct field components (those that arrive directly from the sound source) to dominate while suppressing the influence of delayed reflected components from other directions, the precedence effect may improve the accuracy of perceived sound location in a reverberant environment. Processing of the precedence effect involves enhancing the leading edge of sound envelopes of the signal after dividing it into frequency bands via bandpass filtering. This approach can be done at the monaural level as well as the binaural level, and improves accuracy in reverberant environments in both cases. However, the benefits of using the precedence effect can break down in an anechoic environment.

The body of a human listener obstructs incoming sound waves, causing linear filtering of the sound signal due to interference from the head, ears, and body. Humans use dynamic cues to reinforce localization. These arise from active, sometimes unconscious, motions of the listener, which change the relative position of the source. It is reported that front/back confusions which are common in static listening tests disappear when listeners are allowed to slightly turn their heads to help them in localization. However, if the sound scene is presented through headphones without compensation for head motion, the scene does not change with the user's motion, and dynamic cues are absent. [9]

Head-related transfer functions contain all the descriptors of localization cues such as ITD and IID as well as monaural cues. Every HRTF uniquely represents the transfer of sound from a specific position in 3D space to the ears of a listener. The decoding process performed by the auditory system can be imitated using an artificial setup consisting of two microphones, two artificial ears and an HRTF database. [10] To determine the position of an audio source in 3D space, the ear input signals are convolved with the inverses of all possible HRTF pairs, where the correct inverse maximizes the cross-correlation between the convolved right and left signals. In the case of multiple simultaneous sound sources, the transmission of sound from the sources to the ears can be considered a multiple-input multiple-output (MIMO) system. Here, the HRTFs with which the source signals were filtered en route to the microphones can be found using methods such as convolutive blind source separation, which has the advantage of efficient implementation in real-time systems. Overall, these approaches using HRTFs can be well optimized to localize multiple moving sound sources. [10] The average human has the remarkable ability to locate a sound source with better than 5° accuracy in both azimuth and elevation, in challenging environments. [citation needed]
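The inverse-filter search for a single source can be sketched as follows. Everything concrete here is an assumption made for illustration: `toy_hrir_pair` replaces a measured HRTF database with a pure delay and gain per ear, the inversion is a regularized division in the frequency domain, and circular convolution is used so that the toy inverse is exact. Real HRTFs are far richer and would also encode elevation.

```python
import numpy as np

def toy_hrir_pair(azimuth_deg, n_taps=32):
    """Hypothetical stand-in for a measured HRIR pair: one delayed, scaled tap per ear."""
    s = np.sin(np.radians(azimuth_deg))
    left, right = np.zeros(n_taps), np.zeros(n_taps)
    # Positive azimuth = source on the right: left ear hears it later and quieter.
    left[8 + int(round(6 * s))] = 1.0 - 0.4 * s
    right[8 - int(round(6 * s))] = 1.0 + 0.4 * s
    return left, right

def circular_filter(x, h):
    """Circularly convolve signal x with impulse response h via the FFT."""
    n = len(x)
    return np.fft.irfft(np.fft.rfft(x) * np.fft.rfft(h, n), n)

def localize(left_sig, right_sig, database, eps=1e-6):
    """Return the database key whose inverse HRTF pair best aligns the two ear signals."""
    n = len(left_sig)
    L, R = np.fft.rfft(left_sig), np.fft.rfft(right_sig)
    best_key, best_score = None, -np.inf
    for key, (h_left, h_right) in database.items():
        HL, HR = np.fft.rfft(h_left, n), np.fft.rfft(h_right, n)
        # Regularized inverse filtering of each ear signal.
        est_left = np.fft.irfft(L * np.conj(HL) / (np.abs(HL) ** 2 + eps), n)
        est_right = np.fft.irfft(R * np.conj(HR) / (np.abs(HR) ** 2 + eps), n)
        # Normalized zero-lag cross-correlation of the two source estimates.
        score = np.dot(est_left, est_right) / (
            np.linalg.norm(est_left) * np.linalg.norm(est_right) + 1e-12)
        if score > best_score:
            best_key, best_score = key, score
    return best_key

# Simulate a white-noise source at 30 degrees and recover its direction.
rng = np.random.default_rng(0)
source = rng.standard_normal(1024)
database = {az: toy_hrir_pair(az) for az in (-60, -30, 0, 30, 60)}
true_left, true_right = database[30]
left_sig = circular_filter(source, true_left)
right_sig = circular_filter(source, true_right)
estimated_azimuth = localize(left_sig, right_sig, database)
```

Only the correct candidate undoes both ear filters at once, so both inverse-filtered signals collapse to the same source estimate and their correlation approaches 1; for the other candidates a residual relative delay remains and the correlation of the noise source stays near zero.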

Binaural recording is a method of recording sound that uses two microphones, arranged with the intent to create a 3-D stereo sound sensation for the listener of actually being in the room with the performers or instruments. This effect is often created using a technique known as dummy head recording, wherein a mannequin head is fitted with a microphone in each ear. Binaural recording is intended for replay using headphones and will not translate properly over stereo speakers. This idea of a three-dimensional or "internal" form of sound has also translated into useful advancement of technology in many things such as stethoscopes creating "in-head" acoustics and IMAX movies being able to create a three-dimensional acoustic experience.

A head-related transfer function (HRTF), also known as a head shadow, is a response that characterizes how an ear receives a sound from a point in space. As sound strikes the listener, the size and shape of the head, ears, ear canal, density of the head, size and shape of nasal and oral cavities, all transform the sound and affect how it is perceived, boosting some frequencies and attenuating others. Generally speaking, the HRTF boosts frequencies from 2–5 kHz with a primary resonance of +17 dB at 2,700 Hz. But the response curve is more complex than a single bump, affects a broad frequency spectrum, and varies significantly from person to person.

Ambisonics is a full-sphere surround sound format: in addition to the horizontal plane, it covers sound sources above and below the listener.

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance.

Virtual acoustic space (VAS), also known as virtual auditory space, is a technique in which sounds presented over headphones appear to originate from any desired direction in space. The illusion of a virtual sound source outside the listener's head is created.

The superior olivary complex (SOC) or superior olive is a collection of brainstem nuclei that functions in multiple aspects of hearing and is an important component of the ascending and descending auditory pathways of the auditory system. The SOC is intimately related to the trapezoid body: most of the cell groups of the SOC are dorsal to this axon bundle while a number of cell groups are embedded in the trapezoid body. Overall, the SOC displays a significant interspecies variation, being largest in bats and rodents and smaller in primates.

The interaural time difference, in humans or other animals, is the difference in arrival time of a sound between the two ears. It is important in the localization of sounds, as it provides a cue to the direction or angle of the sound source from the head. If a signal arrives at the head from one side, the signal has further to travel to reach the far ear than the near ear. This path-length difference results in a time difference between the sound's arrivals at the ears, which is detected and aids the process of identifying the direction of the sound source.

Eric Knudsen is a professor of neurobiology at Stanford University. He is best known for his discovery, along with Masakazu Konishi, of a brain map of sound location in two dimensions in the barn owl, Tyto alba. His work has contributed to the understanding of information processing in the auditory system of the barn owl, the plasticity of the auditory space map in developing and adult barn owls, the influence of auditory and visual experience on the space map, and more recently, mechanisms of attention and learning. He is a recipient of the Lashley Award, the Gruber Prize in Neuroscience, and the Newcomb Cleveland Prize, and is a member of the National Academy of Sciences.

Binaural fusion or binaural integration is a cognitive process that involves the combination of different auditory information presented binaurally, or to each ear. In humans, this process is essential in understanding speech as one ear may pick up more information about the speech stimuli than the other.

Computational auditory scene analysis (CASA) is the study of auditory scene analysis by computational means. In essence, CASA systems are "machine listening" systems that aim to separate mixtures of sound sources in the same way that human listeners do. CASA differs from the field of blind signal separation in that it is based on the mechanisms of the human auditory system, and thus uses no more than two microphone recordings of an acoustic environment. It is related to the cocktail party problem.

Dichotic pitch is a pitch heard due to binaural processing, when the brain combines two noises presented simultaneously to the ears. In other words, it cannot be heard when the sound stimulus is presented monaurally, but when the stimulus is presented binaurally a sensation of pitch can be heard. The binaural stimulus is presented to both ears through headphones simultaneously, and is the same in several respects except for a narrow frequency band that is manipulated. The most common variation is the Huggins pitch, which presents white noise signals that differ only in the interaural phase relation over a narrow range of frequencies. For humans, this phenomenon is restricted to fundamental frequencies lower than 330 Hz and extremely low sound pressure levels. Experts investigate the effects of dichotic pitch on the brain. For instance, some studies have suggested that it evokes activation at the lateral end of Heschl's gyrus.

Ambiophonics is a method in the public domain that employs digital signal processing (DSP) and two loudspeakers directly in front of the listener in order to improve reproduction of stereophonic and 5.1 surround sound for music, movies, and games in home theaters, gaming PCs, workstations, or studio monitoring applications. First implemented using mechanical means in 1986, today a number of hardware and VST plug-in makers offer Ambiophonic DSP. Ambiophonics eliminates crosstalk inherent in the conventional stereo triangle speaker placement, and thereby generates a speaker-binaural soundfield that emulates headphone-binaural sound, and creates for the listener improved perception of reality of recorded auditory scenes. A second speaker pair can be added in back in order to enable 360° surround sound reproduction. Additional surround speakers may be used for hall ambience, including height, if desired.

Spatial hearing loss refers to a form of deafness that is an inability to use spatial cues about where a sound originates from in space. Poor sound localization in turn affects the ability to understand speech in the presence of background noise.

Amblyaudia is a term coined by Dr. Deborah Moncrieff to characterize a specific pattern of performance from dichotic listening tests. Dichotic listening tests are widely used to assess individuals for binaural integration, a type of auditory processing skill. During the tests, individuals are asked to identify different words presented simultaneously to the two ears. Normal listeners can identify the words fairly well and show a small difference between the two ears with one ear slightly dominant over the other. For the majority of listeners, this small difference is referred to as a "right-ear advantage" because their right ear performs slightly better than their left ear. But some normal individuals produce a "left-ear advantage" during dichotic tests and others perform at equal levels in the two ears. Amblyaudia is diagnosed when the scores from the two ears are significantly different, with the individual's dominant ear score much higher than the score in the non-dominant ear. Researchers interested in understanding the neurophysiological underpinnings of amblyaudia consider it to be a brain-based hearing disorder that may be inherited or that may result from auditory deprivation during critical periods of brain development. Individuals with amblyaudia have normal hearing sensitivity but have difficulty hearing in noisy environments like restaurants or classrooms. Even in quiet environments, individuals with amblyaudia may fail to understand what they are hearing, especially if the information is new or complicated. Amblyaudia can be conceptualized as the auditory analog of the better known central visual disorder amblyopia. The term “lazy ear” has been used to describe amblyaudia, although it is currently not known whether it stems from deficits in the auditory periphery, from other parts of the auditory system in the brain, or both.
A characteristic of amblyaudia is suppression of activity in the non-dominant auditory pathway by activity in the dominant pathway, which may be genetically determined and which could also be exacerbated by conditions throughout early development.

Stimulus filtering occurs when an animal's nervous system fails to respond to stimuli that would otherwise cause a reaction to occur. The nervous system has developed the capability to perceive and distinguish between minute differences in stimuli, which allows the animal to only react to significant impetus. This enables the animal to conserve energy as it is not responding to unimportant signals.

3D sound localization refers to an acoustic technology that is used to locate the source of a sound in a three-dimensional space. The source location is usually determined by the direction of the incoming sound waves and the distance between the source and sensors. It involves the structure arrangement design of the sensors and signal processing techniques.

Most owls are nocturnal or crepuscular birds of prey. Because they hunt at night, they must rely on non-visual senses. Experiments by Roger Payne have shown that owls are sensitive to the sounds made by their prey, not the heat or the smell. In fact, the sound cues are both necessary and sufficient for localization of mice from a distant location where they are perched. For this to work, the owls must be able to accurately localize both the azimuth and the elevation of the sound source.

3D sound reconstruction is the application of reconstruction techniques to 3D sound localization technology. These methods of reconstructing three-dimensional sound are used to recreate sounds to match natural environments and provide spatial cues of the sound source. They also see applications in creating 3D visualizations on a sound field to include physical aspects of sound waves including direction, pressure, and intensity. This technology is used in entertainment to reproduce a live performance through computer speakers. The technology is also used in military applications to determine location of sound sources. Reconstructing sound fields is also applicable to medical imaging to measure points in ultrasound.

3D sound is most commonly defined as the daily human experience of sounds. Sounds arrive at the ears from every direction and from varying distances, which contributes to the three-dimensional aural image humans hear. Scientists and engineers who work with 3D sound aim to accurately synthesize the complexity of real-world sounds.

Binaural unmasking is a phenomenon of auditory perception discovered by Ira Hirsh. In binaural unmasking, the brain combines information from the two ears in order to improve signal detection and identification in noise. The phenomenon is most commonly observed when there is a difference between the interaural phase of the signal and the interaural phase of the noise. When such a difference is present, there is an improvement in masking threshold compared to a reference situation in which the interaural phases are the same, or in which the stimulus has been presented monaurally. Those two cases usually give very similar thresholds. The size of the improvement is known as the "binaural masking level difference" (BMLD), or simply as the "masking level difference".
