Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. Such machines may be called AIs.

Friendly artificial intelligence is hypothetical artificial general intelligence (AGI) that would have a positive (benign) effect on humanity or at least align with human interests, such as fostering the improvement of the human species. It is a part of the ethics of artificial intelligence and is closely related to machine ethics. While machine ethics is concerned with how an artificially intelligent agent should behave, friendly artificial intelligence research focuses on how to bring about this behavior in practice and ensure that it is adequately constrained.

Stuart Jonathan Russell is a British computer scientist known for his contributions to artificial intelligence (AI). He is a professor of computer science at the University of California, Berkeley, and from 2008 to 2011 was an adjunct professor of neurological surgery at the University of California, San Francisco. He holds the Smith-Zadeh Chair in Engineering at the University of California, Berkeley. He founded and leads the Center for Human-Compatible Artificial Intelligence (CHAI) at UC Berkeley. With Peter Norvig, Russell is the co-author of the authoritative textbook of the field of AI, Artificial Intelligence: A Modern Approach, used in more than 1,500 universities in 135 countries.

Kate Crawford is a researcher, writer, composer, producer and academic who studies the social and political implications of artificial intelligence. She is based in New York and is a principal researcher at Microsoft Research, the co-founder and former director of research at the AI Now Institute at NYU, a visiting professor at the MIT Center for Civic Media, a senior fellow at the Information Law Institute at NYU, and an associate professor in the Journalism and Media Research Centre at the University of New South Wales. She is also a member of the WEF's Global Agenda Council on Data-Driven Development.

The ethics of artificial intelligence covers a broad range of topics within the field that are considered to have particular ethical stakes. This includes algorithmic biases, fairness, automated decision-making, accountability, privacy, and regulation. It also covers various emerging or potential future challenges such as machine ethics, lethal autonomous weapon systems, arms race dynamics, AI safety and alignment, technological unemployment, AI-enabled misinformation, how to treat certain AI systems if they have a moral status, artificial superintelligence and existential risks.

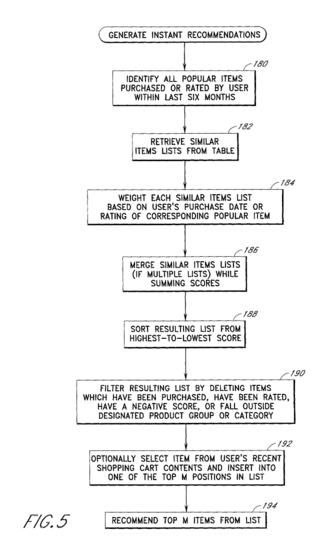

Artificial intelligence marketing (AIM) is a form of marketing that uses artificial intelligence concepts and models such as machine learning, natural language processing (NLP), and computer vision to achieve marketing goals. The main difference between AIM and traditional forms of marketing resides in the reasoning, which is performed by a computer algorithm rather than a human.

Machine ethics is a part of the ethics of artificial intelligence concerned with adding or ensuring moral behaviors in man-made machines that use artificial intelligence, otherwise known as artificially intelligent agents. Machine ethics differs from other ethical fields related to engineering and technology. It should not be confused with computer ethics, which focuses on human use of computers. It should also be distinguished from the philosophy of technology, which concerns itself with technology's grander social effects.

Existential risk from artificial intelligence refers to the idea that substantial progress in artificial general intelligence (AGI) could lead to human extinction or an irreversible global catastrophe.

Kate Devlin, born Adela Katharine Devlin, is a Northern Irish computer scientist specialising in artificial intelligence and human–computer interaction (HCI). She is best known for her work on human sexuality and robotics. In 2016 she co-chaired the annual Love and Sex With Robots convention, held in London, and founded the UK's first sex tech hackathon, held at Goldsmiths, University of London. She is Senior Lecturer in Social and Cultural Artificial Intelligence in the Department of Digital Humanities, King's College London, and is the author of Turned On: Science, Sex and Robots in addition to several academic papers.

Algorithmic bias describes systematic and repeatable errors in a computer system that create "unfair" outcomes, such as "privileging" one category over another in ways different from the intended function of the algorithm.
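One common way to make "privileging one category over another" concrete is to compare how often each group receives a favorable outcome. The sketch below is only an illustration under stated assumptions: the group labels, the toy decisions, and the helper names `selection_rates` and `parity_gap` are all hypothetical, and the gap in selection rates is just one of several possible measures, not a definition of algorithmic bias.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable outcomes for each group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True when the system produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: group "A" is approved 3 times out of 4,
# group "B" only 1 time out of 4.
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(decisions)
gap = parity_gap(decisions)
print(rates, gap)
```

A gap of zero would mean both groups receive favorable outcomes at the same rate. A large gap is a signal worth investigating rather than proof of unfairness, since the intended function of the algorithm and the context of the decision also matter.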

Joanna Joy Bryson is a professor at the Hertie School in Berlin. She works on artificial intelligence, ethics and collaborative cognition. She has been a British citizen since 2007.

Meredith Whittaker is the president of the Signal Foundation and serves on its board of directors. She was formerly the Minderoo Research Professor at New York University (NYU), and the co-founder and faculty director of the AI Now Institute. She also served as a senior advisor on AI to Chair Lina Khan at the Federal Trade Commission. Whittaker was employed at Google for 13 years, where she founded Google's Open Research group and co-founded the M-Lab. In 2018, she was a core organizer of the Google Walkouts and resigned from the company in July 2019.

The AI Now Institute is an American research institute that studies the social implications of artificial intelligence and conducts policy research addressing the concentration of power in the tech industry. AI Now has partnered with organizations such as the Distributed AI Research Institute (DAIR), Data & Society, Ada Lovelace Institute, New York University Tandon School of Engineering, New York University Center for Data Science, Partnership on AI, and the ACLU. AI Now has produced annual reports that examine the social implications of artificial intelligence. In 2021–2022, AI Now’s leadership served as senior advisors on AI to Chair Lina Khan at the Federal Trade Commission. Its executive director is Amba Kak.

Rumman Chowdhury is a Bangladeshi American data scientist, a business founder, and former responsible artificial intelligence lead at Accenture. She was born in Rockland County, New York. She is recognized for her contributions to the field of data science.

Timnit Gebru is an Eritrean Ethiopian-born computer scientist who works in the fields of artificial intelligence (AI), algorithmic bias and data mining. She is a co-founder of Black in AI, an advocacy group that has pushed for more Black roles in AI development and research. She is the founder of the Distributed Artificial Intelligence Research Institute (DAIR).

ACM Conference on Fairness, Accountability, and Transparency is a peer-reviewed academic conference series about ethics and computing systems. Sponsored by the Association for Computing Machinery, this conference focuses on issues such as algorithmic transparency, fairness in machine learning, bias, and ethics from a multi-disciplinary perspective. The conference community includes computer scientists, statisticians, social scientists, scholars of law, and others.

Regulation of artificial intelligence is the development of public sector policies and laws for promoting and regulating artificial intelligence (AI). It is part of the broader regulation of algorithms. The regulatory and policy landscape for AI is an emerging issue in jurisdictions worldwide, including for international organizations without direct enforcement power like the IEEE or the OECD.

Rashida Richardson is a visiting scholar at Rutgers Law School and the Rutgers Institute for Information Policy and the Law and an attorney advisor to the Federal Trade Commission. She is also an assistant professor of law and political science at the Northeastern University School of Law and the Northeastern University Department of Political Science in the College of Social Sciences and Humanities.

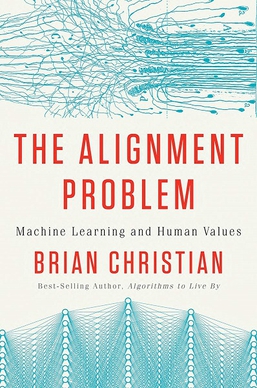

The Alignment Problem: Machine Learning and Human Values is a 2020 non-fiction book by the American writer Brian Christian. It is based on numerous interviews with experts trying to build artificial intelligence systems, particularly machine learning systems, that are aligned with human values.

The AAAI/ACM Conference on AI, Ethics, and Society (AIES) is a peer-reviewed academic conference series focused on societal and ethical aspects of artificial intelligence. The conference is jointly organized by the Association for Computing Machinery, namely the Special Interest Group on Artificial Intelligence (SIGAI), and the Association for the Advancement of Artificial Intelligence, and "is designed to shift the dynamics of the conversation on AI and ethics to concrete actions that scientists, businesses and society alike can take to ensure this promising technology is ushered into the world responsibly." The conference community includes lawyers, practitioners, and academics in computer science, philosophy, public policy, economics, human-computer interaction, and more.