The control unit (CU) is a component of a computer's central processing unit (CPU) that directs the operation of the processor. A CU typically uses a binary decoder to convert coded instructions into timing and control signals that direct the operation of the other units.

In computer programming, machine code is computer code consisting of machine language instructions, which are used to control a computer's central processing unit (CPU). For conventional binary computers, machine code is the binary representation of a computer program which is actually read and interpreted by the computer. A program in machine code consists of a sequence of machine instructions.

The PDP-8 is a family of 12-bit minicomputers that was produced by Digital Equipment Corporation (DEC). It was the first commercially successful minicomputer, with over 50,000 units being sold over the model's lifetime. Its basic design follows the pioneering LINC but has a smaller instruction set, which is an expanded version of the PDP-5 instruction set. Similar machines from DEC are the PDP-12 which is a modernized version of the PDP-8 and LINC concepts, and the PDP-14 industrial controller system.

An optimizing compiler is a compiler designed to generate code that is optimized in aspects such as minimizing program execution time, memory usage, storage size, and power consumption. Optimization is generally implemented as a sequence of optimizing transformations—algorithms that transform code to produce semantically equivalent code optimized for some aspect.

In computer science, control flow is the order in which individual statements, instructions or function calls of an imperative program are executed or evaluated. The emphasis on explicit control flow distinguishes an imperative programming language from a declarative programming language.

In computer science, threaded code is a programming technique where the code has a form that essentially consists entirely of calls to subroutines. It is often used in compilers, which may generate code in that form or be implemented in that form themselves. The code may be processed by an interpreter or it may simply be a sequence of machine code call instructions.

The program counter (PC), commonly called the instruction pointer (IP) in Intel x86 and Itanium microprocessors, and sometimes called the instruction address register (IAR), the instruction counter, or just part of the instruction sequencer, is a processor register that indicates where a computer is in its program sequence.

The Motorola 68000 series is a family of 32-bit complex instruction set computer (CISC) microprocessors. During the 1980s and early 1990s, they were popular in personal computers and workstations and were the primary competitors of Intel's x86 microprocessors. They were best known as the processors used in the early Apple Macintosh, the Sharp X68000, the Commodore Amiga, the Sinclair QL, the Atari ST and Falcon, the Atari Jaguar, the Sega Genesis and Sega CD, the Philips CD-i, the Capcom System I (Arcade), the AT&T UNIX PC, the Tandy Model 16/16B/6000, the Sun Microsystems Sun-1, Sun-2 and Sun-3, the NeXT Computer, NeXTcube, NeXTstation, and NeXTcube Turbo, early Silicon Graphics IRIS workstations, the Aesthedes, computers from MASSCOMP, the Texas Instruments TI-89/TI-92 calculators, the Palm Pilot, the Control Data Corporation CDCNET Device Interface, the VTech Precomputer Unlimited and the Space Shuttle. Although no modern desktop computers are based on processors in the 680x0 series, derivative processors are still widely used in embedded systems.

In computer architecture and engineering, a sequencer or microsequencer generates the addresses used to step through the microprogram of a control store. It is used as a part of the control unit of a CPU or as a stand-alone generator for address ranges.

In computer architecture, predication is a feature that provides an alternative to conditional transfer of control, as implemented by conditional branch machine instructions. Predication works by having conditional (predicated) non-branch instructions associated with a predicate, a Boolean value used by the instruction to control whether the instruction is allowed to modify the architectural state or not. If the predicate specified in the instruction is true, the instruction modifies the architectural state; otherwise, the architectural state is unchanged. For example, a predicated move instruction will only modify the destination if the predicate is true. Thus, instead of using a conditional branch to select an instruction or a sequence of instructions to execute based on the predicate that controls whether the branch occurs, the instructions to be executed are associated with that predicate, so that they will be executed, or not executed, based on whether that predicate is true or false.

In computer science, self-modifying code is code that alters its own instructions while it is executing – usually to reduce the instruction path length and improve performance or simply to reduce otherwise repetitively similar code, thus simplifying maintenance. The term is usually only applied to code where the self-modification is intentional, not in situations where code accidentally modifies itself due to an error such as a buffer overflow.

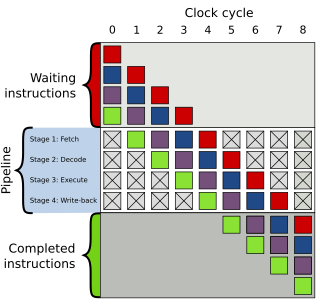

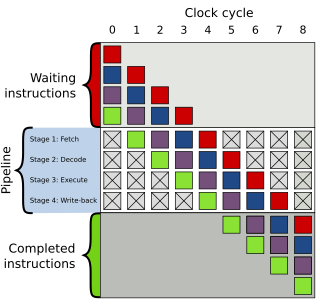

In computer engineering, instruction pipelining is a technique for implementing instruction-level parallelism within a single processor. Pipelining attempts to keep every part of the processor busy with some instruction by dividing incoming instructions into a series of sequential steps performed by different processor units with different parts of instructions processed in parallel.

In the history of computer hardware, some early reduced instruction set computer central processing units used a very similar architectural solution, now called a classic RISC pipeline. Those CPUs were: MIPS, SPARC, Motorola 88000, and later the notional CPU DLX invented for education.

In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch will go before this is known definitively. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors play a critical role in achieving high performance in many modern pipelined microprocessor architectures.

Addressing modes are an aspect of the instruction set architecture in most central processing unit (CPU) designs. The various addressing modes that are defined in a given instruction set architecture define how the machine language instructions in that architecture identify the operand(s) of each instruction. An addressing mode specifies how to calculate the effective memory address of an operand by using information held in registers and/or constants contained within a machine instruction or elsewhere.

In electronics, computer science and computer engineering, microarchitecture, also called computer organization and sometimes abbreviated as μarch or uarch, is the way a given instruction set architecture (ISA) is implemented in a particular processor. A given ISA may be implemented with different microarchitectures; implementations may vary due to different goals of a given design or due to shifts in technology.

In computer architecture, a transport triggered architecture (TTA) is a kind of processor design in which programs directly control the internal transport buses of a processor. Computation happens as a side effect of data transports: writing data into a triggering port of a functional unit triggers the functional unit to start a computation. This is similar to what happens in a systolic array. Due to its modular structure, TTA is an ideal processor template for application-specific instruction set processors (ASIP) with customized datapath but without the inflexibility and design cost of fixed function hardware accelerators.

Control tables are tables that control the control flow or play a major part in program control. There are no rigid rules about the structure or content of a control table—its qualifying attribute is its ability to direct control flow in some way through "execution" by a processor or interpreter. The design of such tables is sometimes referred to as table-driven design. In some cases, control tables can be specific implementations of finite-state-machine-based automata-based programming. If there are several hierarchical levels of control table they may behave in a manner equivalent to UML state machines

The Hack Computer is a theoretical computer design created by Noam Nisan and Shimon Schocken and described in their book, The Elements of Computing Systems: Building a Modern Computer from First Principles. In using the term “modern”, the authors refer to a digital, binary machine that is patterned according to the von Neumann architecture model.

The COP400 or COP II is a 4-bit microcontroller family introduced in 1977 by National Semiconductor as a follow-on product to their original PMOS COP microcontroller. COP400 family members are complete microcomputers containing internal timing, logic, ROM, RAM, and I/O necessary to implement dedicated controllers. Some COP400 devices were second-sourced by Western Digital as the WD4200 family. In the Soviet Union several COP400 microcontrollers were manufactured as the 1820 series.