Bra–ket notation, also called Dirac notation, is a notation for linear algebra and linear operators on complex vector spaces together with their dual spaces, in both the finite-dimensional and infinite-dimensional cases. It is specifically designed to ease the types of calculations that frequently come up in quantum mechanics, where its use is widespread.

In mathematics, any vector space V has a corresponding dual vector space consisting of all linear forms on V, together with the vector space structure of pointwise addition and scalar multiplication by constants.

In mathematical physics and mathematics, the Pauli matrices are a set of three 2 × 2 complex matrices which are Hermitian, involutory and unitary. Usually indicated by the Greek letter sigma, they are occasionally denoted by tau when used in connection with isospin symmetries.
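The three properties named above can be checked directly. The following is a minimal sketch in plain Python, assuming the standard convention for the three matrices; the helper names (`matmul`, `dagger`, and the three predicates) are illustrative and not taken from any library.

```python
# Pauli matrices in the standard convention, as nested lists.
SIGMA_X = [[0, 1], [1, 0]]
SIGMA_Y = [[0, -1j], [1j, 0]]
SIGMA_Z = [[1, 0], [0, -1]]
IDENTITY = [[1, 0], [0, 1]]


def matmul(a, b):
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]


def is_hermitian(a):
    return dagger(a) == a


def is_involutory(a):
    return matmul(a, a) == IDENTITY


def is_unitary(a):
    return matmul(dagger(a), a) == IDENTITY


for sigma in (SIGMA_X, SIGMA_Y, SIGMA_Z):
    assert is_hermitian(sigma) and is_involutory(sigma) and is_unitary(sigma)
```

Exact equality is safe here only because every entry is 0, ±1 or ±i; for general matrices a tolerance-based comparison would be needed.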

In mathematics, the orthogonal group in dimension n, denoted O(n), is the group of distance-preserving transformations of a Euclidean space of dimension n that preserve a fixed point, where the group operation is given by composing transformations. The orthogonal group is sometimes called the general orthogonal group, by analogy with the general linear group. Equivalently, it is the group of n × n orthogonal matrices, where the group operation is given by matrix multiplication. The orthogonal group is an algebraic group and a Lie group. It is compact.
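The matrix description can be illustrated numerically. The sketch below, in plain Python, tests the defining condition QᵀQ = I for a 2 × 2 rotation and a reflection and confirms that their product is again orthogonal. The function names, the angle and the tolerance are arbitrary choices made for this example.

```python
import math


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def is_orthogonal(q, tol=1e-12):
    """Return True if q^T q equals the identity within tol."""
    n = len(q)
    product = matmul(transpose(q), q)
    return all(abs(product[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))


def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


theta = 0.4  # arbitrary angle in radians
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta), math.cos(theta)]]
reflection = [[1.0, 0.0], [0.0, -1.0]]  # mirror across the x-axis

# Closure under the group operation: the product is again orthogonal.
composite = matmul(rotation, reflection)
```

The rotation has determinant +1 and the reflection has determinant −1, the only two values an orthogonal matrix can take.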

In mechanics and geometry, the 3D rotation group, often denoted SO(3), is the group of all rotations about the origin of three-dimensional Euclidean space under the operation of composition.

In the mathematical field of differential geometry, a metric tensor is an additional structure on a manifold M that allows defining distances and angles, just as the inner product on a Euclidean space allows defining distances and angles there. More precisely, a metric tensor at a point p of M is a bilinear form defined on the tangent space at p, and a metric tensor on M consists of a metric tensor at each point p of M that varies smoothly with p.

In linear algebra, the adjugate or classical adjoint of a square matrix A is the transpose of its cofactor matrix and is denoted by adj(A). It is also occasionally known as adjunct matrix, or "adjoint", though the latter term today normally refers to a different concept, the adjoint operator which for a matrix is the conjugate transpose.
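As a small illustration of the definition, the sketch below computes the adjugate by cofactor expansion and checks the identity A · adj(A) = det(A) · I on an integer matrix. The helper names are hypothetical, and the recursive determinant is meant for small matrices only.

```python
def minor(m, i, j):
    """Matrix m with row i and column j removed."""
    return [[m[r][c] for c in range(len(m)) if c != j]
            for r in range(len(m)) if r != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 0:
        return 1  # determinant of the empty matrix
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j))
               for j in range(len(m)))


def adjugate(m):
    """Transpose of the cofactor matrix: entry (i, j) is cofactor (j, i)."""
    n = len(m)
    return [[(-1) ** (i + j) * det(minor(m, j, i)) for j in range(n)]
            for i in range(n)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


A = [[1, 2, 3],
     [0, 1, 4],
     [5, 6, 0]]
d = det(A)
expected = [[d if i == j else 0 for j in range(3)] for i in range(3)]
assert matmul(A, adjugate(A)) == expected
```

Because the entries are integers, the check is exact; when det(A) is nonzero, dividing adj(A) by det(A) gives the inverse.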

In physics, especially in multilinear algebra and tensor analysis, covariance and contravariance describe how the quantitative description of certain geometric or physical entities changes with a change of basis. In modern mathematical notation, the roles of the two terms are sometimes swapped.

In physics and mathematics, the Lorentz group is the group of all Lorentz transformations of Minkowski spacetime, the classical and quantum setting for all (non-gravitational) physical phenomena. The Lorentz group is named for the Dutch physicist Hendrik Lorentz.
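A concrete instance may help. A Lorentz transformation Λ preserves the Minkowski metric η, which in matrix form reads ΛᵀηΛ = η. The sketch below checks this for a boost in one space dimension. It assumes units with c = 1, coordinates (t, x) and the signature (−, +); these conventions and the function names are choices made for this example.

```python
import math

# Minkowski metric in coordinates (t, x); signature (-, +) is assumed here.
ETA = [[-1.0, 0.0], [0.0, 1.0]]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def transpose(m):
    return [list(row) for row in zip(*m)]


def boost(beta):
    """Boost along x with velocity beta (in units of c, |beta| < 1)."""
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    return [[gamma, -gamma * beta],
            [-gamma * beta, gamma]]


def preserves_metric(lam, tol=1e-12):
    """Return True if lam^T * ETA * lam equals ETA within tol."""
    result = matmul(transpose(lam), matmul(ETA, lam))
    return all(abs(result[i][j] - ETA[i][j]) <= tol
               for i in range(2) for j in range(2))


# Composing two boosts gives another metric-preserving transformation.
combined = matmul(boost(0.6), boost(0.5))
assert preserves_metric(boost(0.6)) and preserves_metric(combined)
```

The composite is itself a boost, with velocity given by the relativistic addition formula (β₁ + β₂) / (1 + β₁β₂).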

In mathematics, an invariant subspace of a linear mapping T : V → V, i.e. from some vector space V to itself, is a subspace W of V that is preserved by T, meaning that T(W) is contained in W. More generally, an invariant subspace for a collection of linear mappings is a subspace preserved by each mapping individually.

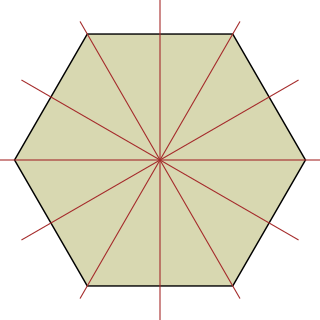

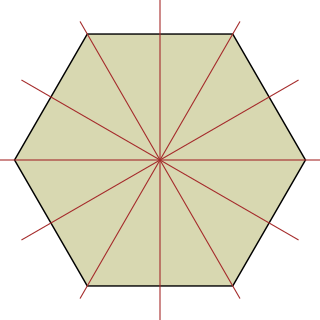

In geometry, Euler's rotation theorem states that, in three-dimensional space, any displacement of a rigid body such that a point on the rigid body remains fixed, is equivalent to a single rotation about some axis that runs through the fixed point. It also means that the composition of two rotations is also a rotation. Therefore the set of rotations has a group structure, known as a rotation group.
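The statement can be checked numerically. The sketch below composes a rotation about the z-axis with one about the x-axis, then recovers a fixed axis of the result from the antisymmetric part of the matrix. That axis formula is an assumption of this example: it works only when the combined rotation angle is neither 0 nor π. The angles and function names are arbitrary.

```python
import math


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def matvec(m, v):
    return [sum(x * y for x, y in zip(row, v)) for row in m]


def rot_x(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def rotation_axis(r):
    """Unnormalized axis read off from r - r^T (angle must not be 0 or pi)."""
    return [r[2][1] - r[1][2], r[0][2] - r[2][0], r[1][0] - r[0][1]]


combined = matmul(rot_x(0.7), rot_z(0.3))  # rotate about z, then about x
axis = rotation_axis(combined)
moved = matvec(combined, axis)  # the axis should be left unchanged
```

Here `moved` agrees with `axis` up to rounding, and `det3(combined)` is 1 up to rounding, so the composite is a single rotation about that axis.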

In mathematics, an ordered basis of a vector space of finite dimension n allows representing uniquely any element of the vector space by a coordinate vector, which is a sequence of n scalars called coordinates. If two different bases are considered, the coordinate vector that represents a vector v on one basis is, in general, different from the coordinate vector that represents v on the other basis. A change of basis consists of converting every assertion expressed in terms of coordinates relative to one basis into an assertion expressed in terms of coordinates relative to the other basis.
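For a two-dimensional illustration, the sketch below computes the coordinates of a vector relative to a new ordered basis by solving a 2 × 2 linear system with Cramer's rule. The function name and the sample basis are hypothetical, and integer inputs are assumed so that `fractions.Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction


def coordinates_in_basis(basis, vector):
    """Coordinates (c1, c2) such that c1*b1 + c2*b2 == vector.

    basis is a pair (b1, b2) of 2D integer vectors given in the old
    coordinates; the system is solved with Cramer's rule.
    """
    (p, q), (r, s) = basis          # b1 = (p, q), b2 = (r, s)
    determinant = p * s - r * q     # determinant of the matrix [b1 b2]
    if determinant == 0:
        raise ValueError("the given vectors do not form a basis")
    x, y = vector
    return (Fraction(x * s - r * y, determinant),
            Fraction(p * y - q * x, determinant))


new_basis = ((1, 1), (1, -1))
v = (3, 1)  # coordinates relative to the standard basis
c1, c2 = coordinates_in_basis(new_basis, v)

# Reassembling the vector from its new coordinates recovers v.
rebuilt = tuple(c1 * b1 + c2 * b2 for b1, b2 in zip(*new_basis))
assert rebuilt == v
```

The same vector thus has coordinate vector (3, 1) on the standard basis and (2, 1) on the new one.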

In mathematics, a Lie algebra is semisimple if it is a direct sum of simple Lie algebras.

In mathematics, the discrete Laplace operator is an analog of the continuous Laplace operator, defined so that it has meaning on a graph or a discrete grid. For the case of a finite-dimensional graph, the discrete Laplace operator is more commonly called the Laplacian matrix.
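As an illustration, the sketch below builds the Laplacian matrix of a small graph using the common convention L = D − A (degree matrix minus adjacency matrix). It assumes a simple undirected graph without self-loops and vertices labelled 0 to n − 1. The function name is hypothetical, and some treatments of the discrete Laplace operator use the opposite sign.

```python
def laplacian_matrix(num_vertices, edges):
    """Graph Laplacian L = D - A of a simple undirected graph."""
    lap = [[0] * num_vertices for _ in range(num_vertices)]
    for u, v in edges:
        lap[u][u] += 1   # each edge raises the degree of both endpoints
        lap[v][v] += 1
        lap[u][v] -= 1   # off-diagonal entries are minus the adjacency
        lap[v][u] -= 1
    return lap


# Path graph on three vertices: 0 - 1 - 2
path = laplacian_matrix(3, [(0, 1), (1, 2)])
assert path == [[1, -1, 0],
                [-1, 2, -1],
                [0, -1, 1]]
```

Every row sums to zero, which reflects the fact that constant functions on the vertices are annihilated by the operator.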

In mathematics and theoretical physics, a supermatrix is a Z2-graded analog of an ordinary matrix. Specifically, a supermatrix is a 2×2 block matrix with entries in a superalgebra. The most important examples are those with entries in a commutative superalgebra or an ordinary field.

In mathematics, quasi-bialgebras are a generalization of bialgebras: they were first defined by the Ukrainian mathematician Vladimir Drinfeld in 1990. A quasi-bialgebra differs from a bialgebra by having coassociativity replaced by an invertible element which controls the non-coassociativity. One of their key properties is that the corresponding category of modules forms a tensor category.

In geometry, various formalisms exist to express a rotation in three dimensions as a mathematical transformation. In physics, this concept is applied to classical mechanics where rotational kinematics is the science of quantitative description of a purely rotational motion. The orientation of an object at a given instant is described with the same tools, as it is defined as an imaginary rotation from a reference placement in space, rather than an actually observed rotation from a previous placement in space.

In mathematics, the classical groups are defined as the special linear groups over the reals R, the complex numbers C and the quaternions H together with special automorphism groups of symmetric or skew-symmetric bilinear forms and Hermitian or skew-Hermitian sesquilinear forms defined on real, complex and quaternionic finite-dimensional vector spaces. Of these, the complex classical Lie groups are four infinite families of Lie groups that together with the exceptional groups exhaust the classification of simple Lie groups. The compact classical groups are compact real forms of the complex classical groups. The finite analogues of the classical groups are the classical groups of Lie type. The term "classical group" was coined by Hermann Weyl, it being the title of his 1939 monograph The Classical Groups.

Representation theory is a branch of mathematics that studies abstract algebraic structures by representing their elements as linear transformations of vector spaces, and studies modules over these abstract algebraic structures. In essence, a representation makes an abstract algebraic object more concrete by describing its elements as matrices and its algebraic operations as matrix operations. The theory of matrices and linear operators is well understood, so representing more abstract objects in terms of familiar linear algebra objects helps glean properties and sometimes simplifies calculations in more abstract theories.

There are many ways to derive the Lorentz transformations utilizing a variety of physical principles, ranging from Maxwell's equations to Einstein's postulates of special relativity, and mathematical tools, spanning from elementary algebra and hyperbolic functions, to linear algebra and group theory.