In electromagnetics, an antenna's power gain or simply gain is a key performance number which combines the antenna's directivity and electrical efficiency. In a transmitting antenna, the gain describes how well the antenna converts input power into radio waves headed in a specified direction. In a receiving antenna, the gain describes how well the antenna converts radio waves arriving from a specified direction into electrical power. When no direction is specified, gain is understood to refer to the peak value of the gain, the gain in the direction of the antenna's main lobe. A plot of the gain as a function of direction is called the gain pattern or radiation pattern.

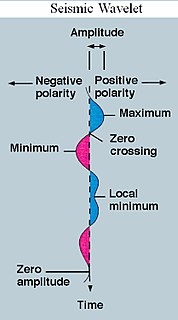

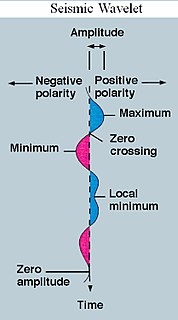

A wavelet is a wave-like oscillation with an amplitude that begins at zero, increases or decreases, and then returns to zero one or more times. Wavelets are termed a "brief oscillation". A taxonomy of wavelets has been established, based on the number and direction of its pulses. Wavelets are imbued with specific properties that make them useful for signal processing.

Quantum decoherence is the loss of quantum coherence. In quantum mechanics, particles such as electrons are described by a wave function, a mathematical representation of the quantum state of a system; a probabilistic interpretation of the wave function is used to explain various quantum effects. As long as there exists a definite phase relation between different states, the system is said to be coherent. A definite phase relationship is necessary to perform quantum computing on quantum information encoded in quantum states. Coherence is preserved under the laws of quantum physics.

In mathematics, a hypergraph is a generalization of a graph in which an edge can join any number of vertices. In contrast, in an ordinary graph, an edge connects exactly two vertices.

In physics, an operator is a function over a space of physical states onto another space of physical states. The simplest example of the utility of operators is the study of symmetry. Because of this, they are very useful tools in classical mechanics. Operators are even more important in quantum mechanics, where they form an intrinsic part of the formulation of the theory.

A multiresolution analysis (MRA) or multiscale approximation (MSA) is the design method of most of the practically relevant discrete wavelet transforms (DWT) and the justification for the algorithm of the fast wavelet transform (FWT). It was introduced in this context in 1988/89 by Stephane Mallat and Yves Meyer and has predecessors in the microlocal analysis in the theory of differential equations and the pyramid methods of image processing as introduced in 1981/83 by Peter J. Burt, Edward H. Adelson and James L. Crowley.

The Ritz method is a direct method to find an approximate solution for boundary value problems. The method is named after Walther Ritz, although also commonly called the Rayleigh–Ritz method and the Ritz-Galerkin method.

A dendrite in metallurgy is a characteristic tree-like structure of crystals growing as molten metal solidifies, the shape produced by faster growth along energetically favourable crystallographic directions. This dendritic growth has large consequences in regard to material properties.

In mathematics, a genus of a multiplicative sequence is a ring homomorphism from the ring of smooth compact manifolds up to the equivalence of bounding a smooth manifold with boundary to another ring, usually the rational numbers, having the property that they are constructed from a sequence of polynomials in characteristic classes that arise as coefficients in formal power series with good multiplicative properties.

The time-evolving block decimation (TEBD) algorithm is a numerical scheme used to simulate one-dimensional quantum many-body systems, characterized by at most nearest-neighbour interactions. It is dubbed Time-evolving Block Decimation because it dynamically identifies the relevant low-dimensional Hilbert subspaces of an exponentially larger original Hilbert space. The algorithm, based on the Matrix Product States formalism, is highly efficient when the amount of entanglement in the system is limited, a requirement fulfilled by a large class of quantum many-body systems in one dimension.

Overcompleteness is a concept from linear algebra that is widely used in mathematics, computer science, engineering, and statistics. It was introduced by R. J. Duffin and A. C. Schaeffer in 1952.

In abstract algebra, a cellular algebra is a finite-dimensional associative algebra A with a distinguished cellular basis which is particularly well-adapted to studying the representation theory of A.

Manifold alignment is a class of machine learning algorithms that produce projections between sets of data, given that the original data sets lie on a common manifold. The concept was first introduced as such by Ham, Lee, and Saul in 2003, adding a manifold constraint to the general problem of correlating sets of high-dimensional vectors.

In applied mathematics, proto-value functions (PVFs) are automatically learned basis functions that are useful in approximating task-specific value functions, providing a compact representation of the powers of transition matrices. They provide a novel framework for solving the credit assignment problem. The framework introduces a novel approach to solving Markov decision processes (MDP) and reinforcement learning problems, using multiscale spectral and manifold learning methods. Proto-value functions are generated by spectral analysis of a graph, using the graph Laplacian.

Diffusion maps is a dimensionality reduction or feature extraction algorithm introduced by Coifman and Lafon which computes a family of embeddings of a data set into Euclidean space whose coordinates can be computed from the eigenvectors and eigenvalues of a diffusion operator on the data. The Euclidean distance between points in the embedded space is equal to the "diffusion distance" between probability distributions centered at those points. Different from linear dimensionality reduction methods such as principal component analysis (PCA), diffusion maps is part of the family of nonlinear dimensionality reduction methods which focus on discovering the underlying manifold that the data has been sampled from. By integrating local similarities at different scales, diffusion maps give a global description of the data-set. Compared with other methods, the diffusion map algorithm is robust to noise perturbation and computationally inexpensive.

Automatic basis function construction is the mathematical method of looking for a set of task-independent basis functions that map the state space to a lower-dimensional embedding, while still representing the value function accurately. Automatic basis construction is independent of prior knowledge of the domain, which allows it to perform well where expert-constructed basis functions are difficult or impossible to create.

In statistical learning theory, a representer theorem is any of several related results stating that a minimizer of a regularized empirical risk functional defined over a reproducing kernel Hilbert space can be represented as a finite linear combination of kernel products evaluated on the input points in the training set data.

Asymptotic safety is a concept in quantum field theory which aims at finding a consistent and predictive quantum theory of the gravitational field. Its key ingredient is a nontrivial fixed point of the theory's renormalization group flow which controls the behavior of the coupling constants in the ultraviolet (UV) regime and renders physical quantities safe from divergences. Although originally proposed by Steven Weinberg to find a theory of quantum gravity, the idea of a nontrivial fixed point providing a possible UV completion can be applied also to other field theories, in particular to perturbatively nonrenormalizable ones. In this respect, it is similar to quantum triviality.

In mathematics, a J-structure is an algebraic structure over a field related to a Jordan algebra. The concept was introduced by Springer (1973) to develop a theory of Jordan algebras using linear algebraic groups and axioms taking the Jordan inversion as basic operation and Hua's identity as a basic relation. There is a classification of simple structures deriving from the classification of semisimple algebraic groups. Over fields of characteristic not equal to 2, the theory of J-structures is essentially the same as that of Jordan algebras.

Large deformation diffeomorphic metric mapping (LDDMM) is a specific suite of algorithms used for diffeomorphic mapping and manipulating dense imagery based on diffeomorphic metric mapping within the academic discipline of computational anatomy, to be distinguished from its precursor based on diffeomorphic mapping. The distinction between the two is that diffeomorphic metric maps satisfy the property that the length associated to their flow away from the identity induces a metric on the group of diffeomorphisms, which in turn induces a metric on the orbit of shapes and forms within the field of Computational Anatomy. The study of shapes and forms with the metric of diffeomorphic metric mapping is called diffeomorphometry.