Earned value management (EVM), earned value project management, or earned value performance management (EVPM) is a project management technique for measuring project performance and progress in an objective manner.

Project management is the process of leading the work of a team to achieve all project goals within the given constraints. This information is usually described in project documentation, created at the beginning of the development process. The primary constraints are scope, time, and budget. The secondary challenge is to optimize the allocation of necessary inputs and apply them to meet pre-defined objectives.

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution.

Critical chain project management (CCPM) is a method of planning and managing projects that emphasizes the resources required to execute project tasks. It was developed by Eliyahu M. Goldratt. It differs from more traditional methods that derive from critical path and PERT algorithms, which emphasize task order and rigid scheduling. A critical chain project network strives to keep resources levelled, and requires that they be flexible in start times.

The critical path method (CPM), or critical path analysis (CPA), is an algorithm for scheduling a set of project activities. It is commonly used in conjunction with the program evaluation and review technique (PERT). A critical path is determined by identifying the longest stretch of dependent activities and measuring the time required to complete them from start to finish.

Project management software (PMS) has the capacity to help plan, organize, and manage resource tools and develop resource estimates. Depending on the sophistication of the software, it can manage estimation and planning, scheduling, cost control and budget management, resource allocation, collaboration software, communication, decision-making, quality management, time management and documentation or administration systems. Numerous PC and browser-based project management software and contract management software products and services are available.

The program evaluation and review technique (PERT) is a statistical tool used in project management, which was designed to analyze and represent the tasks involved in completing a given project.

Brooks' law is an observation about software project management according to which "adding manpower to a late software project makes it later". It was coined by Fred Brooks in his 1975 book The Mythical Man-Month. According to Brooks, under certain conditions, an incremental person when added to a project makes it take more, not less time.

In project management, a schedule is a listing of a project's milestones, activities, and deliverables. Usually dependencies and resources are defined for each task, then start and finish dates are estimated from the resource allocation, budget, task duration, and scheduled events. A schedule is commonly used in the project planning and project portfolio management parts of project management. Elements on a schedule may be closely related to the work breakdown structure (WBS) terminal elements, the Statement of work, or a Contract Data Requirements List.

The Personal Software Process (PSP) is a structured software development process that is designed to help software engineers better understand and improve their performance by bringing discipline to the way they develop software and tracking their predicted and actual development of the code. It clearly shows developers how to manage the quality of their products, how to make a sound plan, and how to make commitments. It also offers them the data to justify their plans. They can evaluate their work and suggest improvement direction by analyzing and reviewing development time, defects, and size data. The PSP was created by Watts Humphrey to apply the underlying principles of the Software Engineering Institute's (SEI) Capability Maturity Model (CMM) to the software development practices of a single developer. It claims to give software engineers the process skills necessary to work on a team software process (TSP) team.

In Agile principles, timeboxing allocates a fixed and maximum unit of time to an activity, called a timebox, within which planned activity takes place. It is used by Agile principles-based project management approaches and for personal time management.

Cost estimation in software engineering is typically concerned with the financial spend on the effort to develop and test the software, this can also include requirements review, maintenance, training, managing and buying extra equipment, servers and software. Many methods have been developed for estimating software costs for a given project.

Big Design Up Front (BDUF) is a software development approach in which the program's design is to be completed and perfected before that program's implementation is started. It is often associated with the waterfall model of software development.

The Putnam model is an empirical software effort estimation model. The original paper by Lawrence H. Putnam published in 1978 is seen as pioneering work in the field of software process modelling. As a group, empirical models work by collecting software project data and fitting a curve to the data. Future effort estimates are made by providing size and calculating the associated effort using the equation which fit the original data.

Event chain methodology is a network analysis technique that is focused on identifying and managing events and relationship between them that affect project schedules. It is an uncertainty modeling schedule technique. Event chain methodology is an extension of quantitative project risk analysis with Monte Carlo simulations. It is the next advance beyond critical path method and critical chain project management. Event chain methodology tries to mitigate the effect of motivational and cognitive biases in estimating and scheduling. It improves accuracy of risk assessment and helps to generate more realistic risk adjusted project schedules.

In project management, the Cone of Uncertainty describes the evolution of the amount of best case uncertainty during a project. At the beginning of a project, comparatively little is known about the product or work results, and so estimates are subject to large uncertainty. As more research and development is done, more information is learned about the project, and the uncertainty then tends to decrease, reaching 0% when all residual risk has been terminated or transferred. This usually happens by the end of the project i.e. by transferring the responsibilities to a separate maintenance group.

A glossary of terms relating to project management and consulting.

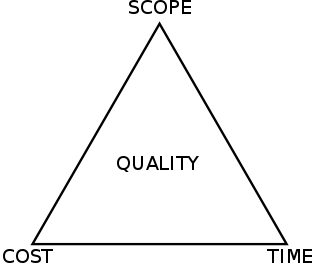

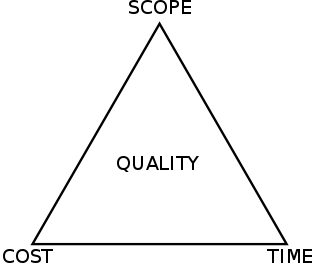

The project management triangle is a model of the constraints of project management. While its origins are unclear, it has been used since at least the 1950s. It contends that:

- The quality of work is constrained by the project's budget, deadlines and scope (features).

- The project manager can trade between constraints.

- Changes in one constraint necessitate changes in others to compensate or quality will suffer.

The following outline is provided as an overview of and topical guide to project management:

Electronic systems’ power consumption has been a real challenge for Hardware and Software designers as well as users especially in portable devices like cell phones and laptop computers. Power consumption also has been an issue for many industries that use computer systems heavily such as Internet service providers using servers or companies with many employees using computers and other computational devices. Many different approaches have been discovered by researchers to estimate power consumption efficiently. This survey paper focuses on the different methods where power consumption can be estimated or measured in real-time.