Application in machine learning

In the context of machine learning, the exploration-exploitation tradeoff is fundamental in reinforcement learning (RL), a type of machine learning that involves training agents to make decisions based on feedback from the environment. Crucially, this feedback may be incomplete or delayed. [4] The agent must decide whether to exploit the current best-known policy or explore new policies to improve its performance.

Multi-armed bandit methods

The multi-armed bandit (MAB) problem is a classic example of the tradeoff, and many methods have been developed for it, such as epsilon-greedy, Thompson sampling, and the upper confidence bound (UCB). See the page on MAB for details.

In RL situations more complex than the MAB problem, the agent can treat each choice as a MAB whose payoff is the expected future reward. For example, an agent following the epsilon-greedy method would usually "pull the best lever" by picking the action with the best predicted expected reward (exploit). However, with probability epsilon it would instead pick a random action (explore). Monte Carlo Tree Search, for example, uses a variant of the UCB method. [5]
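As a minimal sketch of the epsilon-greedy rule described above, the following Python code runs it on a small bandit. The names `epsilon_greedy` and `run_bandit`, the Gaussian reward noise, and the incremental-mean value estimate are illustrative assumptions, not taken from the cited sources.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index given a list of estimated action values."""
    if rng.random() < epsilon:
        # Explore: pick a uniformly random action.
        return rng.randrange(len(q_values))
    # Exploit: pick the action with the highest estimated value.
    return max(range(len(q_values)), key=lambda a: q_values[a])

def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Simulate a Gaussian bandit (assumed unit noise) under epsilon-greedy."""
    rng = random.Random(seed)
    counts = [0] * len(true_means)
    q = [0.0] * len(true_means)
    for _ in range(steps):
        a = epsilon_greedy(q, epsilon, rng)
        reward = rng.gauss(true_means[a], 1.0)
        counts[a] += 1
        # Incremental sample-mean update of the value estimate.
        q[a] += (reward - q[a]) / counts[a]
    return q, counts

q, counts = run_bandit([0.0, 0.5, 2.0])
```

With `epsilon=0` the rule is purely greedy, and with `epsilon=1` it is purely random; intermediate values trade off the two.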

Exploration problems

There are some problems that make exploration difficult. [5]

- Sparse reward. If rewards occur only once in a long while, then the agent might not persist in exploring. Furthermore, if the space of actions is large, then a sparse reward gives the agent little guidance toward a good direction for deeper exploration. A standard example is Montezuma's Revenge. [6]

- Deceptive reward. If some early actions give immediate small reward, but other actions give later large reward, then the agent might be lured away from exploring the other actions.

- Noisy TV problem. If certain observations are irreducibly noisy (such as a television showing random images), then the agent might be trapped exploring those observations (watching the television). [7]

Exploration reward

This section is based on [8].

The exploration reward (also called exploration bonus) methods convert the exploration-exploitation dilemma into a balance of exploitations. That is, instead of trying to get the agent to balance exploration and exploitation, exploration is simply treated as another form of exploitation, and the agent simply attempts to maximize the sum of rewards from exploration and exploitation. The exploration reward can be treated as a form of intrinsic reward. [9]

We write these as $r_t^i, r_t^e$, meaning the intrinsic and extrinsic rewards at time step $t$.

However, exploration reward is different from exploitation in two regards:

- The reward of exploitation is not freely chosen but is given by the environment, whereas the reward of exploration may be chosen freely. Indeed, there are many different ways to design the intrinsic reward $r_t^i$, described below.

- The reward of exploitation is usually stationary (i.e. the same action in the same state gives the same reward), but the reward of exploration is non-stationary (i.e. the same action in the same state should give less and less reward). [7]

Count-based exploration uses $N_n(s)$, the number of visits to a state $s$ during the time-steps $1:n$, to calculate the exploration reward. This is only possible in a small and discrete state space. Density-based exploration extends count-based exploration by using a density model $\rho_n(s)$. The idea is that, if a state has been visited, then nearby states are also partly visited. [10]
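A count-based bonus can be sketched in a few lines of Python. The text above does not fix a formula, so the bonus used here, `beta / sqrt(N(s))` where `N(s)` is the visit count of state `s`, is an assumption chosen as one common form; the class name `CountBonus` and the parameter `beta` are likewise hypothetical.

```python
import math
from collections import Counter

class CountBonus:
    """Tabular visit counter that returns a bonus shrinking with each visit."""

    def __init__(self, beta=1.0):
        self.beta = beta          # scale of the bonus (assumed hyperparameter)
        self.counts = Counter()   # visit count per hashable state

    def reward(self, state):
        # Record the visit, then return beta / sqrt(number of visits so far).
        self.counts[state] += 1
        return self.beta / math.sqrt(self.counts[state])

bonus = CountBonus()
rewards = [bonus.reward((0, 0)) for _ in range(4)]
```

Because the same state yields a smaller bonus on every visit, this also illustrates why the exploration reward is non-stationary.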

In maximum entropy exploration, the entropy of the agent's policy is included as a term in the intrinsic reward. That is, $r_t^i = -\sum_a \pi(a \mid s_t) \ln \pi(a \mid s_t) + \cdots$. [11]
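The entropy term can be computed directly from the action probabilities that the policy assigns in the current state. The sketch below assumes a discrete action space and uses the Shannon entropy with natural logarithm; the function name `policy_entropy` is illustrative.

```python
import math

def policy_entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    # Terms with zero probability contribute nothing to the sum.
    return -sum(p * math.log(p) for p in probs if p > 0.0)

uniform = policy_entropy([0.25, 0.25, 0.25, 0.25])
peaked = policy_entropy([0.97, 0.01, 0.01, 0.01])
```

A uniform policy has the highest entropy and a deterministic policy has zero entropy, so adding this term rewards the agent for keeping its action choices spread out.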

Prediction-based

This section is based on [8].

The forward dynamics model is a function for predicting the next state based on the current state and the current action: $f : (s_t, a_t) \mapsto s_{t+1}$. The forward dynamics model is trained as the agent plays. The model becomes better at predicting the state transition for state-action pairs that have been tried many times.

A forward dynamics model can define an exploration reward by $r_t^i = \| f(s_t, a_t) - s_{t+1} \|_2^2$. That is, the reward is the squared error of the prediction compared to reality. This rewards the agent for performing state-action pairs that have not been tried many times. It is, however, susceptible to the noisy TV problem.
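The following toy sketch shows how the squared prediction error shrinks as the same transition is seen repeatedly. It assumes scalar states and actions and a linear model trained by plain gradient descent; the class `LinearForwardModel` and the learning rate are illustrative choices, not the setup of any cited method.

```python
class LinearForwardModel:
    """Toy forward model for scalar states and actions: s_next ~ w_s*s + w_a*a."""

    def __init__(self, lr=0.1):
        self.w_s = 0.0
        self.w_a = 0.0
        self.lr = lr

    def predict(self, s, a):
        return self.w_s * s + self.w_a * a

    def intrinsic_reward(self, s, a, s_next):
        # Squared error between the predicted and the observed next state.
        return (self.predict(s, a) - s_next) ** 2

    def update(self, s, a, s_next):
        # One gradient-descent step on the squared prediction error.
        err = self.predict(s, a) - s_next
        self.w_s -= self.lr * 2 * err * s
        self.w_a -= self.lr * 2 * err * a

model = LinearForwardModel()
rewards = []
for _ in range(5):
    # The same transition (s=1, a=1, s_next=2) is visited repeatedly.
    rewards.append(model.intrinsic_reward(1.0, 1.0, 2.0))
    model.update(1.0, 1.0, 2.0)
```

The recorded rewards decrease at every step, so a transition that has been experienced often stops being attractive to the agent.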

The dynamics model can be run in latent space. That is, $f : (\phi(s_t), a_t) \mapsto \phi(s_{t+1})$ for some featurizer $\phi$. The featurizer can be the identity function (i.e. $\phi(x) = x$), randomly generated, the encoder half of a variational autoencoder, etc. A good featurizer improves forward dynamics exploration. [12]

The Intrinsic Curiosity Module (ICM) method simultaneously trains a forward dynamics model and a featurizer. The featurizer is trained by an inverse dynamics model, which is a function for predicting the current action based on the features of the current and the next state: $g : (\phi(s_t), \phi(s_{t+1})) \mapsto a_t$. By optimizing the inverse dynamics, both the inverse dynamics model and the featurizer are improved. Then, the improved featurizer improves the forward dynamics model, which improves the exploration of the agent. [13]

The Random Network Distillation (RND) method attempts to solve the noisy TV problem by teacher-student distillation. Instead of a forward dynamics model, it has two models $f, f'$. The teacher model $f'$ is fixed, and the student model $f$ is trained to minimize $\| f(s) - f'(s) \|_2^2$ on states $s$. As a state is visited more and more, the student network becomes better at predicting the teacher. Meanwhile, the prediction error is also an exploration reward for the agent, and so the agent learns to perform actions that result in higher prediction error. Thus, the student network attempts to minimize the prediction error while the agent attempts to maximize it, resulting in exploration.

The states are normalized by subtracting a running average and dividing by a running standard deviation, which is necessary since the teacher model is frozen. The rewards are normalized by dividing by a running estimate of their standard deviation. [7] [14]
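The teacher-student idea can be sketched with very small models. In the code below the teacher is a fixed random `tanh` layer and the student is a linear map trained by gradient descent; both architectures, the helper names, and the omission of the state and reward normalization are simplifying assumptions made only for illustration.

```python
import math
import random

def make_teacher(in_dim, out_dim, seed=0):
    """Return a fixed, randomly initialised network that is never trained."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0, 1) for _ in range(in_dim)] for _ in range(out_dim)]
    def teacher(s):
        return [math.tanh(sum(w * x for w, x in zip(row, s))) for row in weights]
    return teacher

class Student:
    """Linear student trained to imitate the teacher on visited states."""

    def __init__(self, in_dim, out_dim, lr=0.05):
        self.w = [[0.0] * in_dim for _ in range(out_dim)]
        self.lr = lr

    def predict(self, s):
        return [sum(w * x for w, x in zip(row, s)) for row in self.w]

    def update(self, s, target):
        # One gradient step on the squared error to the teacher's output.
        pred = self.predict(s)
        for row, p, t in zip(self.w, pred, target):
            for j, x in enumerate(s):
                row[j] -= self.lr * 2 * (p - t) * x

def rnd_reward(student, teacher, s):
    """Exploration reward: squared error between student and teacher outputs."""
    return sum((p - t) ** 2 for p, t in zip(student.predict(s), teacher(s)))

teacher = make_teacher(in_dim=2, out_dim=3)
student = Student(in_dim=2, out_dim=3)
visited = [1.0, 0.5]
before = rnd_reward(student, teacher, visited)
for _ in range(100):
    student.update(visited, teacher(visited))
after = rnd_reward(student, teacher, visited)
```

After repeated updates on the visited state, its reward is far lower than at the start, while states the student has not been trained on keep a comparatively high reward.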

Exploration by disagreement trains an ensemble of forward dynamics models, each on a random subset of all $(s_t, a_t, s_{t+1})$ tuples. The exploration reward is the variance of the models' predictions. [15]
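Given the next-state predictions of each ensemble member for the same state-action pair, the disagreement reward reduces to a variance computation. The sketch below assumes the per-dimension population variance is averaged over state dimensions; that aggregation and the function name `disagreement_reward` are assumptions for illustration.

```python
def disagreement_reward(predictions):
    """Variance across ensemble members, averaged over state dimensions.

    predictions: list of predicted next-state vectors, one per ensemble member.
    """
    n = len(predictions)
    dim = len(predictions[0])
    total = 0.0
    for j in range(dim):
        mean_j = sum(p[j] for p in predictions) / n
        # Population variance of dimension j across the ensemble.
        total += sum((p[j] - mean_j) ** 2 for p in predictions) / n
    return total / dim

agree = disagreement_reward([[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]])
disagree = disagreement_reward([[0.0], [2.0]])
```

When all members agree the reward is zero, which happens both for well-learned transitions and, ideally, for purely random ones where every member predicts the same average outcome.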

Noise

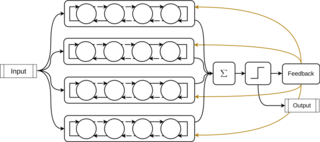

For neural-network-based agents, the NoisyNet method replaces some of the agent's neural network modules with noisy versions. That is, some network parameters are random variables drawn from a probability distribution. The parameters of the distribution are themselves learnable. [16] For example, in a linear layer $y = Wx + b$, both $W$ and $b$ are sampled from Gaussian distributions $\mathcal{N}(\mu_W, \Sigma_W)$ and $\mathcal{N}(\mu_b, \Sigma_b)$ at every step, and the parameters $\mu_W, \Sigma_W, \mu_b, \Sigma_b$ are learned via the reparameterization trick. [17]
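The forward pass of such a noisy linear layer can be sketched as follows. The code assumes independent (diagonal) Gaussian noise on each weight and bias, written in reparameterized form as mean plus standard deviation times a standard normal sample; the class name `NoisyLinear`, the initialization scheme, and `sigma_init` are illustrative assumptions, and the gradient-based training of the means and standard deviations is not shown.

```python
import random

class NoisyLinear:
    """Linear layer y = W x + b whose weights and biases are resampled per call."""

    def __init__(self, in_dim, out_dim, sigma_init=0.5, seed=0):
        self.rng = random.Random(seed)
        scale = in_dim ** -0.5
        # Means and standard deviations would be the learnable parameters.
        self.mu_w = [[self.rng.uniform(-scale, scale) for _ in range(in_dim)]
                     for _ in range(out_dim)]
        self.sigma_w = [[sigma_init * scale] * in_dim for _ in range(out_dim)]
        self.mu_b = [0.0] * out_dim
        self.sigma_b = [sigma_init * scale] * out_dim

    def forward(self, x, noisy=True):
        out = []
        for mu_row, sig_row, mb, sb in zip(self.mu_w, self.sigma_w,
                                           self.mu_b, self.sigma_b):
            y = 0.0
            for m, s, xi in zip(mu_row, sig_row, x):
                # Reparameterization: weight = mean + std * standard normal noise.
                eps = self.rng.gauss(0, 1) if noisy else 0.0
                y += (m + s * eps) * xi
            eps_b = self.rng.gauss(0, 1) if noisy else 0.0
            out.append(y + mb + sb * eps_b)
        return out

layer = NoisyLinear(in_dim=3, out_dim=2)
x = [1.0, -0.5, 2.0]
mean_output = layer.forward(x, noisy=False)
noisy_output = layer.forward(x)
```

Because the noise enters as a fixed sample multiplied by a learnable standard deviation, the layer stays differentiable with respect to its means and standard deviations, and the agent can learn how much randomness to keep.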
