Related Research Articles

In machine learning, a neural network is a model inspired by the structure and function of biological neural networks in animal brains.

In computational intelligence (CI), an evolutionary algorithm (EA) is a subset of evolutionary computation, a generic population-based metaheuristic optimization algorithm. An EA uses mechanisms inspired by biological evolution, such as reproduction, mutation, recombination, and selection. Candidate solutions to the optimization problem play the role of individuals in a population, and the fitness function determines the quality of the solutions. Evolution of the population then takes place after the repeated application of the above operators.

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalize to unseen data, and thus perform tasks without explicit instructions. Recently, artificial neural networks have been able to surpass many previous approaches in performance.

In computer science, evolutionary computation is a family of algorithms for global optimization inspired by biological evolution, and the subfield of artificial intelligence and soft computing studying these algorithms. In technical terms, they are a family of population-based trial and error problem solvers with a metaheuristic or stochastic optimization character.

Bio-inspired computing, short for biologically inspired computing, is a field of study which seeks to solve computer science problems using models of biology. It relates to connectionism, social behavior, and emergence. Within computer science, bio-inspired computing relates to artificial intelligence and machine learning. Bio-inspired computing is a major subset of natural computation.

Neuroevolution, or neuro-evolution, is a form of artificial intelligence that uses evolutionary algorithms to generate artificial neural networks (ANN), parameters, and rules. It is most commonly applied in artificial life, general game playing and evolutionary robotics. The main benefit is that neuroevolution can be applied more widely than supervised learning algorithms, which require a syllabus of correct input-output pairs. In contrast, neuroevolution requires only a measure of a network's performance at a task. For example, the outcome of a game can be easily measured without providing labeled examples of desired strategies. Neuroevolution is commonly used as part of the reinforcement learning paradigm, and it can be contrasted with conventional deep learning techniques that use backpropagation with a fixed topology.

Intelligent control is a class of control techniques that use various artificial intelligence computing approaches like neural networks, Bayesian probability, fuzzy logic, machine learning, reinforcement learning, evolutionary computation and genetic algorithms.

The expression computational intelligence (CI) usually refers to the ability of a computer to learn a specific task from data or experimental observation. Even though it is commonly considered a synonym of soft computing, there is still no commonly accepted definition of computational intelligence.

A recurrent neural network (RNN) is one of the two broad types of artificial neural network, characterized by direction of the flow of information between its layers. In contrast to the uni-directional feedforward neural network, it is a bi-directional artificial neural network, meaning that it allows the output from some nodes to affect subsequent input to the same nodes. Their ability to use internal state (memory) to process arbitrary sequences of inputs makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition. The term "recurrent neural network" is used to refer to the class of networks with an infinite impulse response, whereas "convolutional neural network" refers to the class of finite impulse response. Both classes of networks exhibit temporal dynamic behavior. A finite impulse recurrent network is a directed acyclic graph that can be unrolled and replaced with a strictly feedforward neural network, while an infinite impulse recurrent network is a directed cyclic graph that can not be unrolled.

Quantum neural networks are computational neural network models which are based on the principles of quantum mechanics. The first ideas on quantum neural computation were published independently in 1995 by Subhash Kak and Ron Chrisley, engaging with the theory of quantum mind, which posits that quantum effects play a role in cognitive function. However, typical research in quantum neural networks involves combining classical artificial neural network models with the advantages of quantum information in order to develop more efficient algorithms. One important motivation for these investigations is the difficulty to train classical neural networks, especially in big data applications. The hope is that features of quantum computing such as quantum parallelism or the effects of interference and entanglement can be used as resources. Since the technological implementation of a quantum computer is still in a premature stage, such quantum neural network models are mostly theoretical proposals that await their full implementation in physical experiments.

The following outline is provided as an overview of and topical guide to artificial intelligence:

Computational neurogenetic modeling (CNGM) is concerned with the study and development of dynamic neuronal models for modeling brain functions with respect to genes and dynamic interactions between genes. These include neural network models and their integration with gene network models. This area brings together knowledge from various scientific disciplines, such as computer and information science, neuroscience and cognitive science, genetics and molecular biology, as well as engineering.

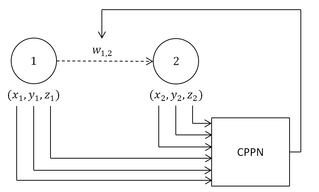

Hypercube-based NEAT, or HyperNEAT, is a generative encoding that evolves artificial neural networks (ANNs) with the principles of the widely used NeuroEvolution of Augmented Topologies (NEAT) algorithm developed by Kenneth Stanley. It is a novel technique for evolving large-scale neural networks using the geometric regularities of the task domain. It uses Compositional Pattern Producing Networks (CPPNs), which are used to generate the images for Picbreeder.orgArchived 2011-07-25 at the Wayback Machine and shapes for EndlessForms.comArchived 2018-11-14 at the Wayback Machine. HyperNEAT has recently been extended to also evolve plastic ANNs and to evolve the location of every neuron in the network.

The promoter based genetic algorithm (PBGA) is a genetic algorithm for neuroevolution developed by F. Bellas and R.J. Duro in the Integrated Group for Engineering Research (GII) at the University of Coruña, in Spain. It evolves variable size feedforward artificial neural networks (ANN) that are encoded into sequences of genes for constructing a basic ANN unit. Each of these blocks is preceded by a gene promoter acting as an on/off switch that determines if that particular unit will be expressed or not.

Natural computing, also called natural computation, is a terminology introduced to encompass three classes of methods: 1) those that take inspiration from nature for the development of novel problem-solving techniques; 2) those that are based on the use of computers to synthesize natural phenomena; and 3) those that employ natural materials to compute. The main fields of research that compose these three branches are artificial neural networks, evolutionary algorithms, swarm intelligence, artificial immune systems, fractal geometry, artificial life, DNA computing, and quantum computing, among others.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

In artificial intelligence, a differentiable neural computer (DNC) is a memory augmented neural network architecture (MANN), which is typically recurrent in its implementation. The model was published in 2016 by Alex Graves et al. of DeepMind.

The following outline is provided as an overview of and topical guide to machine learning:

In machine learning, hyperparameter optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process.

Soft computing is an umbrella term used to describe types of algorithms that produce approximate solutions to unsolvable high-level problems in computer science. Typically, traditional hard-computing algorithms heavily rely on concrete data and mathematical models to produce solutions to problems. Soft computing was coined in the late 20th century. During this period, revolutionary research in three fields greatly impacted soft computing. Fuzzy logic is a computational paradigm that entertains the uncertainties in data by using levels of truth rather than rigid 0s and 1s in binary. Next, neural networks which are computational models influenced by human brain functions. Finally, evolutionary computation is a term to describe groups of algorithm that mimic natural processes such as evolution and natural selection.

References

- ↑ Rogers, David (1989). Touretzky, David S. (ed.). Advances in neural information processing systems: Weather prediction using a genetic memory. Los Altos, Calif: M. Kaufmann Publishers. pp. 455–464. ISBN 978-1-55860-100-0.

- ↑ Rocha LM, Hordijk W (2005). "Material representations: From the genetic code to the evolution of cellular automata". Artificial Life. 11 (1–2): 189–214. CiteSeerX 10.1.1.115.6605 . doi:10.1162/1064546053278964. PMID 15811227. S2CID 5742197.