In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference.
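As a minimal sketch of the idea, assume the data are modelled as independent draws from a normal distribution (an assumption made here purely for illustration). In that case the likelihood is maximized in closed form by the sample mean and the variance computed with divisor n. The function names and the synthetic data below are hypothetical.

```python
import math
import random


def gaussian_log_likelihood(samples, mu, var):
    """Log-likelihood of i.i.d. normal data for a given mean and variance."""
    n = len(samples)
    sq = sum((x - mu) ** 2 for x in samples)
    return -0.5 * n * math.log(2 * math.pi * var) - sq / (2 * var)


def gaussian_mle(samples):
    """Closed-form maximum likelihood estimates for a normal model."""
    n = len(samples)
    mu_hat = sum(samples) / n
    # The MLE of the variance divides by n (not n - 1), so it is biased.
    var_hat = sum((x - mu_hat) ** 2 for x in samples) / n
    return mu_hat, var_hat


# Synthetic data: true mean 2.0, true standard deviation 1.5 (illustrative only).
random.seed(0)
data = [random.gauss(2.0, 1.5) for _ in range(1000)]
mu_hat, var_hat = gaussian_mle(data)
```

Evaluating `gaussian_log_likelihood` at `(mu_hat, var_hat)` and at nearby parameter values shows that the estimate is the point of highest likelihood for this sample.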

In analytical mechanics, generalized coordinates are a set of parameters used to represent the state of a system in a configuration space. These parameters must uniquely define the configuration of the system relative to a reference state. The generalized velocities are the time derivatives of the generalized coordinates of the system. The adjective "generalized" distinguishes these parameters from the traditional use of the term "coordinate" to refer to Cartesian coordinates.

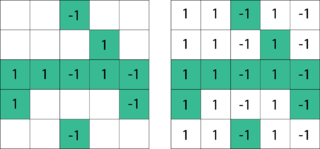

In linear algebra, a tridiagonal matrix is a band matrix that has nonzero elements only on the main diagonal, the subdiagonal (lower diagonal), and the supradiagonal (upper diagonal). For example, the following matrix is tridiagonal:

$$\begin{pmatrix} 1 & 4 & 0 & 0 \\ 3 & 4 & 1 & 0 \\ 0 & 2 & 3 & 4 \\ 0 & 0 & 1 & 3 \end{pmatrix}$$

The Gauss–Newton algorithm is used to solve non-linear least squares problems, which is equivalent to minimizing a sum of squared function values. It is an extension of Newton's method for finding a minimum of a non-linear function. Since a sum of squares must be nonnegative, the algorithm can be viewed as using Newton's method to iteratively approximate zeroes of the components of the sum, and thus minimizing the sum. In this sense, the algorithm is also an effective method for solving overdetermined systems of equations. It has the advantage that second derivatives, which can be challenging to compute, are not required.
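The iteration can be sketched as follows, assuming NumPy is available. Each step linearizes the residuals using only the Jacobian (first derivatives) and solves the resulting linear least squares problem. The model `a * exp(b * x)`, the noise-free data, and the starting point are illustrative assumptions, and the sketch has no step-size control or convergence test.

```python
import numpy as np


def gauss_newton(residual, jacobian, beta0, n_iter=20):
    """Plain Gauss-Newton iteration (no damping, fixed iteration count)."""
    beta = np.asarray(beta0, dtype=float)
    for _ in range(n_iter):
        r = residual(beta)
        J = jacobian(beta)
        # Solve the linearized problem J @ step ~= -r in the least squares sense.
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)
        beta = beta + step
    return beta


# Illustrative data generated from y = 2 * exp(-x) without noise.
x = np.linspace(0.0, 1.0, 20)
y = 2.0 * np.exp(-1.0 * x)


def residual(beta):
    a, b = beta
    return a * np.exp(b * x) - y


def jacobian(beta):
    a, b = beta
    e = np.exp(b * x)
    # Columns are the partial derivatives of the residuals w.r.t. a and b.
    return np.column_stack([e, a * x * e])


beta_hat = gauss_newton(residual, jacobian, beta0=[1.0, 0.0])
```

Because the data here are noise-free, the residuals vanish at the solution, which is the setting in which Gauss–Newton behaves most like Newton's method.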

In statistics, a minimum-variance unbiased estimator (MVUE) or uniformly minimum-variance unbiased estimator (UMVUE) is an unbiased estimator that has lower variance than any other unbiased estimator for all possible values of the parameter.

In mathematics, differential algebra is, broadly speaking, the study of differential equations and differential operators as algebraic objects, with the aim of deriving properties of these equations and operators without computing their solutions. This parallels the use of polynomial algebras in the study of algebraic varieties, which are the solution sets of systems of polynomial equations. Weyl algebras and Lie algebras may be considered as belonging to differential algebra.

Covariance matrix adaptation evolution strategy (CMA-ES) is a particular kind of strategy for numerical optimization. Evolution strategies (ES) are stochastic, derivative-free methods for numerical optimization of non-linear or non-convex continuous optimization problems. They belong to the class of evolutionary algorithms and evolutionary computation. An evolutionary algorithm is broadly based on the principle of biological evolution, namely the repeated interplay of variation and selection: in each generation (iteration), new individuals are generated by variation of the current parental individuals, usually in a stochastic way. Then, some individuals are selected to become the parents in the next generation based on their fitness or objective function value $f(x)$. In this way, individuals with better and better $f$-values are generated over the generation sequence.
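The variation-and-selection loop can be sketched with a deliberately simplified evolution strategy. This is not CMA-ES: it uses a fixed isotropic mutation step and adapts neither the covariance matrix nor the step size. The objective `sphere`, the parameter values, and the function names are illustrative assumptions.

```python
import random


def sphere(x):
    """Illustrative objective: sum of squares, minimized at the origin."""
    return sum(v * v for v in x)


def simple_es(f, x0, sigma=0.3, mu=5, lam=20, generations=100, seed=0):
    """(mu, lambda) evolution strategy with a fixed mutation step sigma."""
    rng = random.Random(seed)
    parents = [list(x0) for _ in range(mu)]
    best = list(x0)
    for _ in range(generations):
        # Variation: each offspring is a Gaussian perturbation of a random parent.
        offspring = []
        for _ in range(lam):
            p = rng.choice(parents)
            offspring.append([v + sigma * rng.gauss(0.0, 1.0) for v in p])
        # Selection: the mu offspring with the lowest objective value become parents.
        offspring.sort(key=f)
        parents = offspring[:mu]
        if f(parents[0]) < f(best):
            best = parents[0]
    return best


x0 = [3.0, 3.0, 3.0]
best = simple_es(sphere, x0)
```

CMA-ES replaces the fixed `sigma` and the isotropic Gaussian with a step size and a full covariance matrix that are updated from the selected individuals in each generation.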

Stochastic approximation methods are a family of iterative methods typically used for root-finding problems or for optimization problems. The recursive update rules of stochastic approximation methods can be used, among other things, for solving linear systems when the collected data is corrupted by noise, or for approximating extreme values of functions which cannot be computed directly, but only estimated via noisy observations.
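A minimal sketch of one such recursive update rule is the Robbins–Monro iteration for root-finding, shown here with step sizes a/n. The target function, the noise model, and all names below are assumptions chosen for illustration: the function 2θ − 3 can only be observed with additive Gaussian noise.

```python
import random


def robbins_monro(noisy_g, theta0, n_steps=5000, a=1.0, seed=0):
    """Robbins-Monro iteration: theta <- theta - (a / n) * noisy observation."""
    rng = random.Random(seed)
    theta = theta0
    for n in range(1, n_steps + 1):
        step = a / n  # decreasing step sizes average out the noise
        theta = theta - step * noisy_g(theta, rng)
    return theta


def noisy_g(theta, rng):
    """Noisy observation of g(theta) = 2 * theta - 3, whose root is 1.5."""
    return 2.0 * theta - 3.0 + rng.gauss(0.0, 1.0)


root_estimate = robbins_monro(noisy_g, theta0=0.0)
```

The iteration never evaluates the underlying function exactly; it only uses the noisy observations, yet the estimate settles near the root as the step sizes shrink.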

Non-linear least squares is the form of least squares analysis used to fit a set of m observations with a model that is non-linear in n unknown parameters (m ≥ n). It is used in some forms of nonlinear regression. The basis of the method is to approximate the model by a linear one and to refine the parameters by successive iterations. There are many similarities to linear least squares, but also some significant differences. In economic theory, the non-linear least squares method is applied in (i) probit regression, (ii) threshold regression, (iii) smooth regression, (iv) logistic link regression, and (v) Box–Cox transformed regressors.

The Routh array is a tabular method permitting one to establish the stability of a system using only the coefficients of the characteristic polynomial. Central to the field of control systems design, the Routh–Hurwitz theorem and Routh array emerge by using the Euclidean algorithm and Sturm's theorem in evaluating Cauchy indices.
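The tabulation can be sketched as follows for the regular case. Under the Routh–Hurwitz criterion, the number of sign changes in the first column equals the number of roots with positive real part. This sketch assumes that no zero appears in the first column while rows are still being generated; the special cases that arise otherwise are not handled. Function names are hypothetical.

```python
def routh_array(coeffs):
    """Build the Routh array from polynomial coefficients (highest degree first)."""
    n = len(coeffs)
    width = (n + 1) // 2
    # The first two rows take alternating coefficients, padded with zeros.
    row0 = [float(c) for c in coeffs[0::2]]
    row1 = [float(c) for c in coeffs[1::2]]
    row0 += [0.0] * (width - len(row0))
    row1 += [0.0] * (width - len(row1))
    rows = [row0, row1]
    for _ in range(n - 2):
        prev2, prev1 = rows[-2], rows[-1]
        if prev1[0] == 0.0:
            raise ValueError("zero in first column: special case not handled")
        new = [
            (prev1[0] * prev2[j + 1] - prev2[0] * prev1[j + 1]) / prev1[0]
            for j in range(width - 1)
        ]
        new.append(0.0)
        rows.append(new)
    return rows


def count_rhp_roots(coeffs):
    """Count sign changes in the first column of the Routh array."""
    first_col = [row[0] for row in routh_array(coeffs)]
    return sum(1 for a, b in zip(first_col, first_col[1:]) if a * b < 0)


# (s + 1)(s + 2)(s + 3) = s^3 + 6 s^2 + 11 s + 6 has all roots in the left half-plane.
stable = count_rhp_roots([1, 6, 11, 6])
# (s + 2)(s^2 - s + 4) = s^3 + s^2 + 2 s + 8 has two roots in the right half-plane.
unstable = count_rhp_roots([1, 1, 2, 8])
```

Only the coefficients of the characteristic polynomial are used; no roots are computed.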

In statistical decision theory, where we are faced with the problem of estimating a deterministic parameter (vector) $\theta \in \Theta$ from observations $x \in \mathcal{X}$, an estimator $\delta^M$ is called minimax if its maximal risk is minimal among all estimators of $\theta$. In a sense, this means that $\delta^M$ is an estimator which performs best in the worst possible case allowed in the problem.
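The definition can be written compactly. The notation here is assumed for illustration: $\Theta$ is the parameter space, $\delta$ ranges over all estimators, $\delta^M$ is the minimax estimator, and $R(\theta, \delta)$ is the risk of $\delta$ when the true parameter is $\theta$.

$$\sup_{\theta \in \Theta} R(\theta, \delta^M) = \inf_{\delta} \sup_{\theta \in \Theta} R(\theta, \delta)$$

The inner supremum is the worst-case risk of a given estimator, and the outer infimum selects the estimator for which that worst case is smallest.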

In quantum computing, the quantum phase estimation algorithm is a quantum algorithm to estimate the phase corresponding to an eigenvalue of a given unitary operator. Because the eigenvalues of a unitary operator always have unit modulus, they are characterized by their phase, and therefore the algorithm can be equivalently described as retrieving either the phase or the eigenvalue itself. The algorithm was initially introduced by Alexei Kitaev in 1995.

In coding theory, generalized minimum-distance (GMD) decoding provides an efficient algorithm for decoding concatenated codes, which is based on using an errors-and-erasures decoder for the outer code.

For certain applications in linear algebra, it is useful to know properties of the probability distribution of the largest eigenvalue of a finite sum of random matrices. Suppose $\{\mathbf{X}_k\}$ is a finite sequence of random matrices. Analogous to the well-known Chernoff bound for sums of scalars, a bound on the following is sought for a given parameter t:

$$\Pr\left\{ \lambda_{\max}\left( \sum_k \mathbf{X}_k \right) \geq t \right\}$$

Matrix completion is the task of filling in the missing entries of a partially observed matrix, which is equivalent to performing data imputation in statistics. A wide range of datasets are naturally organized in matrix form. One example is the movie-ratings matrix that appears in the Netflix problem: given a ratings matrix in which each entry $(i,j)$ represents the rating of movie $j$ by customer $i$ if customer $i$ has watched movie $j$, and is otherwise missing, we would like to predict the remaining entries in order to make good recommendations to customers on what to watch next. Another example is the document-term matrix: the frequencies of words used in a collection of documents can be represented as a matrix, where each entry corresponds to the number of times the associated term appears in the indicated document.

In computer science, optimal computing budget allocation (OCBA) is a simulation optimization method designed to maximize the probability of correct selection (PCS) while minimizing computational cost. First introduced by Dr. Chun-Hung Chen in the mid-1990s, OCBA determines how many simulation replications (or how much computational time) each design alternative needs in order to identify the best option while using as few resources as possible. It works by allocating more effort to alternatives that are harder to evaluate, such as those with higher uncertainty or with performance close to that of the best option.

The quantum counting algorithm is a quantum algorithm for efficiently counting the number of solutions to a given search problem. The algorithm is based on the quantum phase estimation algorithm and on Grover's search algorithm.

Pure inductive logic (PIL) is the area of mathematical logic concerned with the philosophical and mathematical foundations of probabilistic inductive reasoning. It combines classical predicate logic and probability theory. Probability values are assigned to sentences of a first-order relational language to represent degrees of belief that should be held by a rational agent. Conditional probability values represent degrees of belief based on the assumption of some received evidence.

In quantum computing, the variational quantum eigensolver (VQE) is a quantum algorithm for quantum chemistry, quantum simulations and optimization problems. It is a hybrid algorithm that uses both classical computers and quantum computers to find the ground state of a given physical system. Given a guess or ansatz, the quantum processor calculates the expectation value of the system with respect to an observable, often the Hamiltonian, and a classical optimizer is used to improve the guess. The algorithm is based on the variational method of quantum mechanics.
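The hybrid loop can be sketched with a classical simulation of a single qubit, assuming NumPy. Everything here is an illustrative assumption rather than a description of any particular quantum device or library: the Hamiltonian `H`, the one-parameter rotation ansatz, and the use of plain gradient descent as the classical optimizer. The expectation value is computed exactly, whereas real hardware would estimate it from repeated measurements.

```python
import numpy as np

# Illustrative single-qubit Hamiltonian: Z + 0.5 * X.
H = np.array([[1.0, 0.5],
              [0.5, -1.0]])


def ansatz(theta):
    """Trial state obtained by a Y-rotation of |0> by angle theta."""
    return np.array([np.cos(theta / 2), np.sin(theta / 2)])


def energy(theta):
    """Expectation value <psi|H|psi> (the step a quantum processor would estimate)."""
    psi = ansatz(theta)
    return float(psi @ H @ psi)


def optimize(theta=0.1, lr=0.2, steps=200):
    """Classical optimizer: gradient descent using the parameter-shift rule."""
    for _ in range(steps):
        grad = 0.5 * (energy(theta + np.pi / 2) - energy(theta - np.pi / 2))
        theta -= lr * grad
    return theta


theta_opt = optimize()
vqe_energy = energy(theta_opt)
exact_ground_energy = float(np.linalg.eigvalsh(H)[0])
```

By the variational principle, `energy(theta)` is never below the true ground-state energy, so lowering it improves the guess. In this toy case the ansatz can represent the ground state exactly, which larger systems generally do not guarantee.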

The deep backward stochastic differential equation (deep BSDE) method is a numerical method that combines deep learning with backward stochastic differential equations (BSDEs). This method is particularly useful for solving high-dimensional problems in financial derivatives pricing and risk management. By leveraging the powerful function approximation capabilities of deep neural networks, deep BSDE addresses the computational challenges faced by traditional numerical methods in high-dimensional settings.