In computer science, a queue is a collection of entities that are maintained in a sequence and can be modified by the addition of entities at one end of the sequence and the removal of entities from the other end of the sequence. By convention, the end of the sequence at which elements are added is called the back, tail, or rear of the queue, and the end at which elements are removed is called the head or front of the queue, analogously to the words used when people line up to wait for goods or services.
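The first-in, first-out behaviour described above can be sketched with Python's standard `collections.deque`. The helper name `serve_in_order` is hypothetical and only illustrates adding at the back and removing from the front.

```python
from collections import deque


def serve_in_order(arrivals):
    """Enqueue every arrival at the back, then dequeue from the front."""
    queue = deque()
    for item in arrivals:
        queue.append(item)  # add at the back (tail) of the queue

    served = []
    while queue:
        served.append(queue.popleft())  # remove from the front (head)
    return served


if __name__ == "__main__":
    print(serve_in_order(["first", "second", "third"]))
```

Entities leave in the same order in which they arrived, which is the defining property of a queue.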

Queueing theory is the mathematical study of waiting lines, or queues. A queueing model is constructed so that queue lengths and waiting time can be predicted. Queueing theory is generally considered a branch of operations research because the results are often used when making business decisions about the resources needed to provide a service.

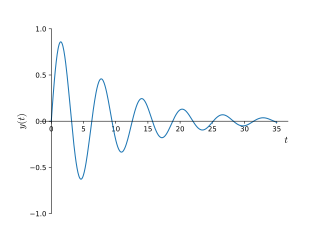

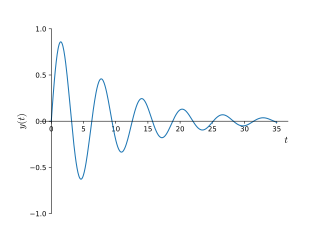

In physics and engineering, the quality factor or Q factor is a dimensionless parameter that describes how underdamped an oscillator or resonator is. It is defined as the ratio of the initial energy stored in the resonator to the energy lost in one radian of the cycle of oscillation. Q factor is alternatively defined as the ratio of a resonator's centre frequency to its bandwidth when subject to an oscillating driving force. These two definitions give numerically similar, but not identical, results. Higher Q indicates a lower rate of energy loss and the oscillations die out more slowly. A pendulum suspended from a high-quality bearing, oscillating in air, has a high Q, while a pendulum immersed in oil has a low one. Resonators with high quality factors have low damping, so that they ring or vibrate longer.
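As a small illustration, the two definitions above can be written as two functions. The function and parameter names are hypothetical, and the sketch assumes that the stored energy and the energy lost per radian use the same units, and likewise the centre frequency and the bandwidth.

```python
def q_from_energy(energy_stored, energy_lost_per_radian):
    """Q as stored energy divided by the energy lost in one radian."""
    if energy_lost_per_radian <= 0:
        raise ValueError("energy lost per radian must be positive")
    return energy_stored / energy_lost_per_radian


def q_from_bandwidth(centre_frequency, bandwidth):
    """Q as centre frequency divided by bandwidth (same frequency units)."""
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    return centre_frequency / bandwidth


if __name__ == "__main__":
    # A resonator centred at 1 kHz with a 10 Hz bandwidth.
    print(q_from_bandwidth(1000.0, 10.0))
```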

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

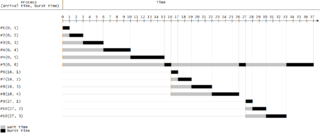

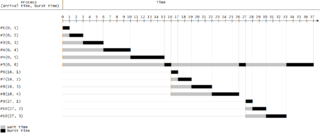

In computer science, rate-monotonic scheduling (RMS) is a priority assignment algorithm used in real-time operating systems (RTOS) with a static-priority scheduling class. The static priorities are assigned according to the cycle duration of the job, so a shorter cycle duration results in a higher job priority.
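The priority rule can be sketched as a sort by cycle duration. The function name, the dictionary input and the convention that rank 0 is the highest priority are assumptions made for illustration only.

```python
def rate_monotonic_priorities(periods):
    """Map each task name to a priority rank, where 0 is the highest.

    `periods` maps a task name to its cycle duration; a shorter cycle
    duration receives a higher priority (a smaller rank).
    """
    ordered = sorted(periods, key=lambda name: periods[name])
    return {name: rank for rank, name in enumerate(ordered)}


if __name__ == "__main__":
    tasks = {"logger": 50, "sensor": 10, "control": 20}
    print(rate_monotonic_priorities(tasks))
```

Because the priorities depend only on the cycle durations, they can be computed once and never change at run time, which is what makes the assignment static.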

In system analysis, among other fields of study, a linear time-invariant (LTI) system is a system that produces an output signal from any input signal subject to the constraints of linearity and time-invariance; these terms are briefly defined below. These properties apply (exactly or approximately) to many important physical systems, in which case the response y(t) of the system to an arbitrary input x(t) can be found directly using convolution: y(t) = (x ∗ h)(t) where h(t) is called the system's impulse response and ∗ represents convolution (not to be confused with multiplication). What's more, there are systematic methods for solving any such system (determining h(t)), whereas systems not meeting both properties are generally more difficult (or impossible) to solve analytically. A good example of an LTI system is any electrical circuit consisting of resistors, capacitors, inductors and linear amplifiers.
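A discrete-time analogue of y(t) = (x ∗ h)(t) may make the operation more concrete. The sketch below assumes finite sequences of samples instead of continuous signals, and the function name `convolve` is chosen only for this example.

```python
def convolve(x, h):
    """Discrete convolution of input samples x with impulse response h."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            # Each input sample launches a scaled, shifted copy of h.
            y[i + j] += xi * hj
    return y


if __name__ == "__main__":
    impulse_response = [1.0, 1.0]  # two-sample running sum
    print(convolve([1.0, 2.0, 3.0], impulse_response))
```

Feeding in a unit impulse `[1.0]` returns the impulse response itself, and the output for a sum of inputs equals the sum of the individual outputs, which is the linearity property.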

Air–fuel ratio (AFR) is the mass ratio of air to a solid, liquid, or gaseous fuel present in a combustion process. The combustion may take place in a controlled manner such as in an internal combustion engine or industrial furnace, or may result in an explosion.

In physics, the Einstein relation is a previously unexpected connection revealed independently by William Sutherland in 1904, Albert Einstein in 1905, and Marian Smoluchowski in 1906 in their works on Brownian motion. The more general form of the equation in the classical case is D = μ k_B T, where D is the diffusion coefficient, μ is the mobility, k_B is the Boltzmann constant, and T is the absolute temperature.

Shortest job next (SJN), also known as shortest job first (SJF) or shortest process next (SPN), is a scheduling policy that selects for execution the waiting process with the smallest execution time. SJN is a non-preemptive algorithm. Shortest remaining time is a preemptive variant of SJN.
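A minimal simulation of the policy is sketched below. It assumes that each job is a tuple of name, arrival time and execution time, that execution times are known in advance, and that ties go to the job that arrived first; the function name is hypothetical.

```python
def shortest_job_next(jobs):
    """Non-preemptive SJN. Returns a list of (name, start, finish)."""
    pending = sorted(jobs, key=lambda job: job[1])  # order by arrival time
    time = 0
    schedule = []
    while pending:
        ready = [job for job in pending if job[1] <= time]
        if not ready:
            time = pending[0][1]  # processor idles until the next arrival
            continue
        job = min(ready, key=lambda j: j[2])  # smallest execution time
        pending.remove(job)
        start = time
        time += job[2]  # runs to completion, no preemption
        schedule.append((job[0], start, time))
    return schedule


if __name__ == "__main__":
    print(shortest_job_next([("A", 0, 8), ("B", 1, 4), ("C", 2, 2)]))
```

In this example job A runs to completion even though shorter jobs arrive while it executes, because the policy is non-preemptive.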

In probability theory, the Kelly criterion is a formula for sizing a bet. The Kelly bet size is found by maximizing the expected value of the logarithm of wealth, which is equivalent to maximizing the expected geometric growth rate. It assumes that the expected returns are known and is optimal for a bettor who values their wealth logarithmically. J. L. Kelly Jr, a researcher at Bell Labs, described the criterion in 1956. Under the stated assumptions, the Kelly criterion leads to higher wealth than any other strategy in the long run.
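For intuition, consider the simplest case, which is an assumption of this sketch and not something stated above: a binary bet that wins with probability p and pays net odds of b to 1, losing the whole stake otherwise. Maximizing the expected logarithm of wealth then gives the fraction f = p − (1 − p)/b. The function names are hypothetical.

```python
import math


def kelly_fraction(p, b):
    """Fraction of wealth to stake on a binary bet (win prob p, net odds b)."""
    return p - (1.0 - p) / b


def expected_log_growth(f, p, b):
    """Expected logarithm of the wealth multiplier when staking fraction f."""
    return p * math.log(1.0 + f * b) + (1.0 - p) * math.log(1.0 - f)


if __name__ == "__main__":
    p, b = 0.6, 1.0
    f = kelly_fraction(p, b)
    print(f, expected_log_growth(f, p, b))
```

Staking either less or more than this fraction lowers the expected log growth, which can be checked numerically with `expected_log_growth`.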

Shortest remaining time, also known as shortest remaining time first (SRTF), is a scheduling method that is a preemptive version of shortest job next scheduling. In this scheduling algorithm, the process with the smallest amount of time remaining until completion is selected to execute. Since the currently executing process is the one with the shortest amount of time remaining by definition, and since that time should only reduce as execution progresses, the process will either run until it completes or get preempted if a new process is added that requires a smaller amount of time.
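The preemption behaviour can be sketched with a unit-time-step simulation. The sketch assumes unique job names, integer arrival times and positive integer execution times; the function name and the tuple layout are hypothetical.

```python
def shortest_remaining_time(jobs):
    """Preemptive SRTF. Returns a dict mapping job name to completion time.

    `jobs` is a list of (name, arrival_time, execution_time) tuples.
    """
    if any(burst <= 0 for _, _, burst in jobs):
        raise ValueError("execution times must be positive")
    remaining = {name: burst for name, _, burst in jobs}
    arrival = {name: arrived for name, arrived, _ in jobs}
    finish = {}
    time = 0
    while len(finish) < len(remaining):
        ready = [n for n in remaining
                 if arrival[n] <= time and remaining[n] > 0]
        if not ready:
            time += 1  # nothing has arrived yet, so the processor idles
            continue
        # Re-evaluating every tick lets a newly arrived shorter job preempt.
        current = min(ready, key=lambda n: remaining[n])
        remaining[current] -= 1
        time += 1
        if remaining[current] == 0:
            finish[current] = time
    return finish


if __name__ == "__main__":
    print(shortest_remaining_time([("A", 0, 8), ("B", 1, 4), ("C", 2, 2)]))
```

Here job A is preempted when B arrives, and B is in turn preempted when C arrives, since each newcomer needs less time than the job that is currently running.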

In queueing theory, a discipline within the mathematical theory of probability, an M/M/1 queue represents the queue length in a system having a single server, where arrivals are determined by a Poisson process and job service times have an exponential distribution. The model name is written in Kendall's notation. The model is the most elementary of queueing models and an attractive object of study as closed-form expressions can be obtained for many metrics of interest in this model. An extension of this model with more than one server is the M/M/c queue.
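Some of the closed-form expressions mentioned above can be evaluated directly. The sketch assumes a stable queue (arrival rate λ strictly below service rate μ) and uses the standard steady-state results: utilization ρ = λ/μ, mean number of jobs in the system ρ/(1 − ρ), and mean time in the system 1/(μ − λ). The function name and dictionary keys are hypothetical.

```python
def mm1_metrics(arrival_rate, service_rate):
    """Steady-state metrics of an M/M/1 queue."""
    if arrival_rate <= 0 or service_rate <= 0:
        raise ValueError("rates must be positive")
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable unless arrival rate < service rate")
    rho = arrival_rate / service_rate
    return {
        "utilization": rho,
        "mean_jobs_in_system": rho / (1.0 - rho),
        "mean_time_in_system": 1.0 / (service_rate - arrival_rate),
    }


if __name__ == "__main__":
    # Two arrivals per second served at four jobs per second.
    print(mm1_metrics(2.0, 4.0))
```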

Biological neuron models, also known as spiking neuron models, are mathematical descriptions of the properties of certain cells in the nervous system. These cells generate sharp electrical potentials across their cell membrane, roughly one millisecond in duration, called action potentials or spikes. Since spikes are transmitted along the axon and synapses from the sending neuron to many other neurons, spiking neurons are considered to be a major information processing unit of the nervous system. Spiking neuron models can be divided into different categories. The most detailed mathematical models, biophysical neuron models, describe the membrane voltage as a function of the input current and the activation of ion channels. Mathematically simpler are integrate-and-fire models, which describe the membrane voltage as a function of the input current and predict the spike times without describing the biophysical processes that shape the time course of an action potential. Even more abstract models only predict output spikes as a function of the stimulation, where the stimulation can occur through sensory input or pharmacologically.

Response factor, usually in chromatography and spectroscopy, is the ratio between a signal produced by an analyte, and the quantity of analyte which produces the signal. Ideally, and for easy computation, this ratio is unity (one). In real-world scenarios, this is often not the case.

Samplesort is a divide and conquer sorting algorithm often used in parallel processing systems. Conventional divide and conquer sorting algorithms partition the array into sub-intervals or buckets. The buckets are then sorted individually and concatenated together. However, if the array is non-uniformly distributed, the performance of these sorting algorithms can be significantly throttled. Samplesort addresses this issue by selecting a sample of size s from the n-element sequence, and determining the range of the buckets by sorting the sample and choosing p−1 < s elements from the result. These elements then divide the array into p approximately equal-sized buckets. Samplesort is described in the 1970 paper, "Samplesort: A Sampling Approach to Minimal Storage Tree Sorting", by W. D. Frazer and A. C. McKellar.
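A sequential sketch of the sampling and bucketing steps is shown below. It is not the algorithm from the 1970 paper: the `oversample` factor, the fixed random seed, the evenly spaced choice of splitters and the use of the built-in `sorted` for each bucket are all assumptions made for illustration. In a parallel setting each bucket would typically be handed to a separate processor.

```python
import bisect
import random


def samplesort(data, p=4, oversample=4, rng=None):
    """Sort a list by sampling splitters and distributing into p buckets."""
    rng = rng or random.Random(0)
    s = p * oversample  # sample size
    if p < 2 or len(data) <= s:
        return sorted(data)  # too small to be worth sampling

    # Sort a random sample and take p - 1 evenly spaced splitters from it.
    sample = sorted(rng.sample(data, s))
    splitters = [sample[i * oversample] for i in range(1, p)]

    # Place each element in the bucket delimited by its two splitters.
    buckets = [[] for _ in range(p)]
    for x in data:
        buckets[bisect.bisect_right(splitters, x)].append(x)

    # Sort the buckets individually and concatenate them.
    result = []
    for bucket in buckets:
        result.extend(sorted(bucket))
    return result


if __name__ == "__main__":
    values = [random.Random(1).randint(0, 999) for _ in range(50)]
    print(samplesort(values)[:10])
```

Because the splitters come from the data itself, the bucket sizes tend to stay balanced even when the values are not uniformly distributed.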

Random walk closeness centrality is a measure of centrality in a network, which describes the average speed with which randomly walking processes reach a node from other nodes of the network. It is similar to the closeness centrality except that the farness is measured by the expected length of a random walk rather than by the shortest path.

Phase stretch transform (PST) is a computational approach to signal and image processing. One of its uses is feature detection and classification. PST is related to the time stretch dispersive Fourier transform. It transforms the image by emulating propagation through a diffractive medium with an engineered 3D dispersive property. The operation relies on the symmetry of the dispersion profile and can be understood in terms of dispersive eigenfunctions or stretch modes. PST performs a function similar to that of phase-contrast microscopy, but on digital images. PST can be applied to digital images and temporal data. It is a physics-based feature engineering algorithm.

An RF chain is a cascade of electronic components and sub-units which may include amplifiers, filters, mixers, attenuators and detectors. It can take many forms, for example, as a wide-band receiver-detector for electronic warfare (EW) applications, as a tunable narrow-band receiver for communications purposes, as a repeater in signal distribution systems, or as an amplifier and up-converter for a transmitter-driver. In this article, the term RF covers the frequency range from "Medium Frequencies" up to "Microwave Frequencies", i.e. from 100 kHz to 20 GHz.

In computer science, multiway number partitioning is the problem of partitioning a multiset of numbers into a fixed number of subsets, such that the sums of the subsets are as similar as possible. It was first presented by Ronald Graham in 1969 in the context of the identical-machines scheduling problem. The problem is parametrized by a positive integer k, and called k-way number partitioning. The input to the problem is a multiset S of numbers, whose sum is k*T.
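One simple heuristic for this problem, shown here only as a sketch and not as an exact method, is to process the numbers from largest to smallest and always add the next number to the subset with the smallest current sum. The function name `greedy_partition` is hypothetical.

```python
import heapq


def greedy_partition(numbers, k):
    """Split numbers into k subsets using a largest-first greedy heuristic."""
    subsets = [[] for _ in range(k)]
    heap = [(0, i) for i in range(k)]  # (current sum, subset index)
    for x in sorted(numbers, reverse=True):
        total, i = heapq.heappop(heap)  # subset with the smallest sum
        subsets[i].append(x)
        heapq.heappush(heap, (total + x, i))
    return subsets


if __name__ == "__main__":
    parts = greedy_partition([8, 7, 6, 5, 4], 2)
    print(parts, [sum(part) for part in parts])
```

For the input in the example the heuristic yields sums of 17 and 13, whereas a perfectly balanced split (8 + 7 and 6 + 5 + 4, both summing to 15) exists, so the greedy result is not always optimal.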

Identical-machines scheduling is an optimization problem in computer science and operations research. We are given n jobs J1, J2, ..., Jn of varying processing times, which need to be scheduled on m identical machines, such that a certain objective function is optimized, for example, the makespan is minimized.
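For very small instances the minimum makespan can be found by trying every assignment of jobs to machines. The brute-force sketch below takes time exponential in the number of jobs and is meant only to make the objective concrete; the function name is hypothetical.

```python
from itertools import product


def minimum_makespan(processing_times, m):
    """Exhaustively find the smallest makespan on m identical machines."""
    best = None
    # Each assignment picks one machine index for every job.
    for assignment in product(range(m), repeat=len(processing_times)):
        loads = [0] * m
        for time, machine in zip(processing_times, assignment):
            loads[machine] += time
        makespan = max(loads)  # completion time of the busiest machine
        if best is None or makespan < best:
            best = makespan
    return best


if __name__ == "__main__":
    print(minimum_makespan([8, 7, 6, 5, 4], 2))
```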