An analog computer or analogue computer is a type of computation machine (computer) that uses the continuous variation aspect of physical phenomena such as electrical, mechanical, or hydraulic quantities to model the problem being solved. In contrast, digital computers represent varying quantities symbolically and by discrete values of both time and amplitude.

Linear filters process time-varying input signals to produce output signals, subject to the constraint of linearity. In most cases these linear filters are also time invariant in which case they can be analyzed exactly using LTI system theory revealing their transfer functions in the frequency domain and their impulse responses in the time domain. Real-time implementations of such linear signal processing filters in the time domain are inevitably causal, an additional constraint on their transfer functions. An analog electronic circuit consisting only of linear components will necessarily fall in this category, as will comparable mechanical systems or digital signal processing systems containing only linear elements. Since linear time-invariant filters can be completely characterized by their response to sinusoids of different frequencies, they are sometimes known as frequency filters.

Numerical analysis is the study of algorithms that use numerical approximation for the problems of mathematical analysis. It is the study of numerical methods that attempt to find approximate solutions of problems rather than the exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences like economics, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics, numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in medicine and biology.

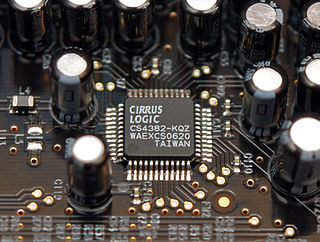

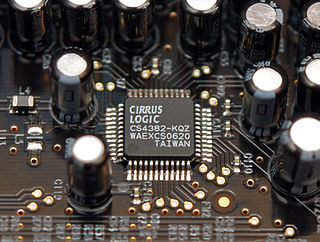

In signal processing, a digital filter is a system that performs mathematical operations on a sampled, discrete-time signal to reduce or enhance certain aspects of that signal. This is in contrast to the other major type of electronic filter, the analog filter, which is typically an electronic circuit operating on continuous-time analog signals.

In electronics and telecommunications, jitter is the deviation from true periodicity of a presumably periodic signal, often in relation to a reference clock signal. In clock recovery applications it is called timing jitter. Jitter is a significant, and usually undesired, factor in the design of almost all communications links.

In electronics, a digital-to-analog converter is a system that converts a digital signal into an analog signal. An analog-to-digital converter (ADC) performs the reverse function.

Signal refers to both the process and the result of transmission of data over some media accomplished by embedding some variation. Signals are important in multiple subject fields including signal processing, information theory and biology.

Neuromorphic computing is an approach to computing that is inspired by the structure and function of the human brain. A neuromorphic computer/chip is any device that uses physical artificial neurons to do computations. In recent times, the term neuromorphic has been used to describe analog, digital, mixed-mode analog/digital VLSI, and software systems that implement models of neural systems. Recent advances have even discovered ways to mimic the human nervous system through liquid solutions of chemical systems.

In numerical analysis, adaptive mesh refinement (AMR) is a method of adapting the accuracy of a solution within certain sensitive or turbulent regions of simulation, dynamically and during the time the solution is being calculated. When solutions are calculated numerically, they are often limited to predetermined quantified grids as in the Cartesian plane which constitute the computational grid, or 'mesh'. Many problems in numerical analysis, however, do not require a uniform precision in the numerical grids used for graph plotting or computational simulation, and would be better suited if specific areas of graphs which needed precision could be refined in quantification only in the regions requiring the added precision. Adaptive mesh refinement provides such a dynamic programming environment for adapting the precision of the numerical computation based on the requirements of a computation problem in specific areas of multi-dimensional graphs which need precision while leaving the other regions of the multi-dimensional graphs at lower levels of precision and resolution.

Mesh generation is the practice of creating a mesh, a subdivision of a continuous geometric space into discrete geometric and topological cells. Often these cells form a simplicial complex. Usually the cells partition the geometric input domain. Mesh cells are used as discrete local approximations of the larger domain. Meshes are created by computer algorithms, often with human guidance through a GUI, depending on the complexity of the domain and the type of mesh desired. A typical goal is to create a mesh that accurately captures the input domain geometry, with high-quality (well-shaped) cells, and without so many cells as to make subsequent calculations intractable. The mesh should also be fine in areas that are important for the subsequent calculations.

VisSim is a visual block diagram program for the simulation of dynamical systems and model-based design of embedded systems, with its own visual language. It is developed by Visual Solutions of Westford, Massachusetts. Visual Solutions was acquired by Altair in August 2014 and its products have been rebranded as Altair Embed as a part of Altair's Model Based Development Suite. With Embed, virtual prototypes of dynamic systems can be developed. Models are built by sliding blocks into the work area and wiring them together with the mouse. Embed automatically converts the control diagrams into C-code ready to be downloaded to the target hardware.

Unconventional computing is computing by any of a wide range of new or unusual methods.

HRS-100, ХРС-100, GVS-100 or ГВС-100, was a third generation hybrid computer developed by Mihajlo Pupin Institute and engineers from USSR in the period from 1968 to 1971. Three systems HRS-100 were deployed in Academy of Sciences of USSR in Moscow and Novosibirsk (Akademgorodok) in 1971 and 1978. More production was contemplated for use in Czechoslovakia and German Democratic Republic (DDR), but that was not realised.

Computational mechanics is the discipline concerned with the use of computational methods to study phenomena governed by the principles of mechanics. Before the emergence of computational science as a "third way" besides theoretical and experimental sciences, computational mechanics was widely considered to be a sub-discipline of applied mechanics. It is now considered to be a sub-discipline within computational science.

Numerical linear algebra, sometimes called applied linear algebra, is the study of how matrix operations can be used to create computer algorithms which efficiently and accurately provide approximate answers to questions in continuous mathematics. It is a subfield of numerical analysis, and a type of linear algebra. Computers use floating-point arithmetic and cannot exactly represent irrational data, so when a computer algorithm is applied to a matrix of data, it can sometimes increase the difference between a number stored in the computer and the true number that it is an approximation of. Numerical linear algebra uses properties of vectors and matrices to develop computer algorithms that minimize the error introduced by the computer, and is also concerned with ensuring that the algorithm is as efficient as possible.

Models of neural computation are attempts to elucidate, in an abstract and mathematical fashion, the core principles that underlie information processing in biological nervous systems, or functional components thereof. This article aims to provide an overview of the most definitive models of neuro-biological computation as well as the tools commonly used to construct and analyze them.

Locally Optimal Block Preconditioned Conjugate Gradient (LOBPCG) is a matrix-free method for finding the largest eigenvalues and the corresponding eigenvectors of a symmetric generalized eigenvalue problem

Validated numerics, or rigorous computation, verified computation, reliable computation, numerical verification is numerics including mathematically strict error evaluation, and it is one field of numerical analysis. For computation, interval arithmetic is used, and all results are represented by intervals. Validated numerics were used by Warwick Tucker in order to solve the 14th of Smale's problems, and today it is recognized as a powerful tool for the study of dynamical systems.