In communications and information processing, code is a system of rules to convert information—such as a letter, word, sound, image, or gesture—into another form, sometimes shortened or secret, for communication through a communication channel or storage in a storage medium. An early example is an invention of language, which enabled a person, through speech, to communicate what they thought, saw, heard, or felt to others. But speech limits the range of communication to the distance a voice can carry and limits the audience to those present when the speech is uttered. The invention of writing, which converted spoken language into visual symbols, extended the range of communication across space and time.

Digital data, in information theory and information systems, is information represented as a string of discrete symbols each of which can take on one of only a finite number of values from some alphabet, such as letters or digits. An example is a text document, which consists of a string of alphanumeric characters. The most common form of digital data in modern information systems is binary data, which is represented by a string of binary digits (bits) each of which can have one of two values, either 0 or 1.

A logic gate is an idealized or physical device implementing a Boolean function, a logical operation performed on one or more binary inputs that produces a single binary output. Depending on the context, the term may refer to an ideal logic gate, one that has for instance zero rise time and unlimited fan-out, or it may refer to a non-ideal physical device.

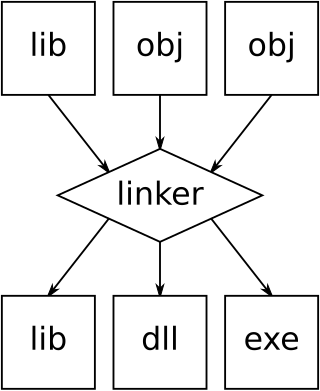

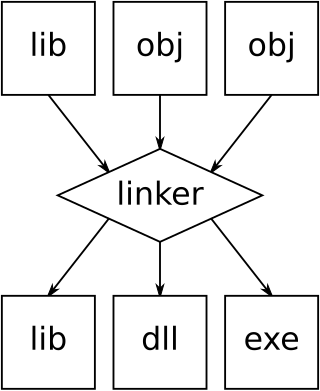

In computing, a linker or link editor is a computer system program that takes one or more object files and combines them into a single executable file, library file, or another "object" file.

A mathematical model is a description of a system using mathematical concepts and language. The process of developing a mathematical model is termed mathematical modeling. Mathematical models are used in the natural sciences and engineering disciplines, as well as in non-physical systems such as the social sciences. The use of mathematical models to solve problems in business or military operations is a large part of the field of operations research. Mathematical models are also used in music, linguistics, and philosophy.

In signal processing, distortion is the alteration of the original shape of a signal. In communications and electronics it means the alteration of the waveform of an information-bearing signal, such as an audio signal representing sound or a video signal representing images, in an electronic device or communication channel.

In computing, a linear-feedback shift register (LFSR) is a shift register whose input bit is a linear function of its previous state.

In computer and machine-based telecommunications terminology, a character is a unit of information that roughly corresponds to a grapheme, grapheme-like unit, or symbol, such as in an alphabet or syllabary in the written form of a natural language.

Lempel–Ziv–Welch (LZW) is a universal lossless data compression algorithm created by Abraham Lempel, Jacob Ziv, and Terry Welch. It was published by Welch in 1984 as an improved implementation of the LZ78 algorithm published by Lempel and Ziv in 1978. The algorithm is simple to implement and has the potential for very high throughput in hardware implementations. It is the algorithm of the widely used Unix file compression utility compress and is used in the GIF image format.

LZ77 and LZ78 are the two lossless data compression algorithms published in papers by Abraham Lempel and Jacob Ziv in 1977 and 1978. They are also known as LZ1 and LZ2 respectively. These two algorithms form the basis for many variations including LZW, LZSS, LZMA and others. Besides their academic influence, these algorithms formed the basis of several ubiquitous compression schemes, including GIF and the DEFLATE algorithm used in PNG and ZIP.

Automata theory is the study of abstract machines and automata, as well as the computational problems that can be solved using them. It is a theory in theoretical computer science. The word automata comes from the Greek word αὐτόματος, which means "self-acting, self-willed, self-moving". An automaton is an abstract self-propelled computing device which follows a predetermined sequence of operations automatically. An automaton with a finite number of states is called a Finite Automaton (FA) or Finite-State Machine (FSM). The figure on the right illustrates a finite-state machine, which is a well-known type of automaton. This automaton consists of states and transitions. As the automaton sees a symbol of input, it makes a transition to another state, according to its transition function, which takes the previous state and current input symbol as its arguments.

A communication channel refers either to a physical transmission medium such as a wire, or to a logical connection over a multiplexed medium such as a radio channel in telecommunications and computer networking. A channel is used for information transfer of, for example, a digital bit stream, from one or several senders to one or several receivers. A channel has a certain capacity for transmitting information, often measured by its bandwidth in Hz or its data rate in bits per second.

In philosophy of mind, functionalism is the thesis that mental states are constituted solely by their functional role, which means, their causal relations with other mental states, sensory inputs and behavioral outputs. Functionalism developed largely as an alternative to the identity theory of mind and behaviorism.

In computer programming, Base64 is a group of binary-to-text encoding schemes that represent binary data in sequences of 24 bits that can be represented by four 6-bit Base64 digits.

In the theory of computation, a Mealy machine is a finite-state machine whose output values are determined both by its current state and the current inputs. This is in contrast to a Moore machine, whose output values are determined solely by its current state. A Mealy machine is a deterministic finite-state transducer: for each state and input, at most one transition is possible.

The Greek alphabet has been used to write the Greek language since the late 9th or early 8th century BCE. It is derived from the earlier Phoenician alphabet, and was the earliest known alphabetic script to have distinct letters for vowels as well as consonants. In Archaic and early Classical times, the Greek alphabet existed in many local variants, but, by the end of the 4th century BCE, the Euclidean alphabet, with 24 letters, ordered from alpha to omega, had become standard and it is this version that is still used for Greek writing today.

Diacritical marks of two dots¨, placed side-by-side over or under a letter, are used in a number of languages for several different purposes. The most familiar to English language speakers are the diaeresis and the umlaut, though there are numerous others. For example, in Albanian, ë represents a schwa. Such dots are also sometimes used for stylistic reasons.

Object-oriented design (OOD) is the process of planning a system of interacting objects for the purpose of solving a software problem. It is one approach to software design.

Embodied cognitive science is an interdisciplinary field of research, the aim of which is to explain the mechanisms underlying intelligent behavior. It comprises three main methodologies: the modeling of psychological and biological systems in a holistic manner that considers the mind and body as a single entity; the formation of a common set of general principles of intelligent behavior; and the experimental use of robotic agents in controlled environments.

This glossary of computer science is a list of definitions of terms and concepts used in computer science, its sub-disciplines, and related fields, including terms relevant to software, data science, and computer programming.