Related Research Articles

Audio signal processing is a subfield of signal processing that is concerned with the electronic manipulation of audio signals. Audio signals are electronic representations of sound waves—longitudinal waves which travel through air, consisting of compressions and rarefactions. The energy contained in audio signals is typically measured in decibels. As audio signals may be represented in either digital or analog format, processing may occur in either domain. Analog processors operate directly on the electrical signal, while digital processors operate mathematically on its digital representation.

Nokia Bell Labs, originally named Bell Telephone Laboratories (1925–1984), then AT&T Bell Laboratories (1984–1996) and Bell Labs Innovations (1996–2007), is an American industrial research and scientific development company owned by multinational company Nokia. With headquarters located in Murray Hill, New Jersey, the company operates several laboratories in the United States and around the world.

Claude Elwood Shannon was an American mathematician, electrical engineer, and cryptographer known as a "father of information theory".

A vocoder is a category of speech coding that analyzes and synthesizes the human voice signal for audio data compression, multiplexing, voice encryption or voice transformation.

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital signal of speech in compressed form, using the information of a linear predictive model.

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech. The reverse process is speech recognition.

Digital music technology encompasses the use of digital instruments, computers, electronic effects units, software, or digital audio equipment by a performer, composer, sound engineer, DJ, or record producer to produce, perform, or record music. The term refers to electronic devices, instruments, computer hardware, and software used in performance, playback, recording, composition, mixing, analysis, and editing of music.

Richard Wesley Hamming was an American mathematician whose work had many implications for computer engineering and telecommunications. His contributions include the Hamming code, the Hamming window, Hamming numbers, sphere-packing, Hamming graph concepts, and the Hamming distance.

Max Vernon Mathews was a pioneer of computer music.

George Robert Stibitz was a Bell Labs researcher internationally recognized as one of the fathers of the modern digital computer. He was known for his work in the 1930s and 1940s on the realization of Boolean logic digital circuits using electromechanical relays as the switching element.

John Larry Kelly Jr. was a scientist who worked at Bell Labs. He is best known for his 1956 work creating the Kelly criterion formula, which grew out of Claude Shannon's earlier work on information theory and a "system he'd developed to analyze information transmitted over networks." The Kelly criterion can be used to estimate what proportion of wealth to risk in a sequence of positive expected value bets to maximize the rate of return, although the sequence of outcomes can be notably volatile. An important caveat is that the outcome of the Kelly criterion's recommended bet size "relies heavily on the accuracy" of the statistical probabilities assigned to a gamble's positive expectations.

A. Michael Noll is an American engineer and professor emeritus at the Annenberg School for Communication and Journalism at the University of Southern California. He served as dean of the Annenberg School from 1992 to 1994. He was an early pioneer in digital computer art, 3D animation, and tactile communication.

James Loton Flanagan was an American electrical engineer. He was Rutgers University's vice president for research until 2004. He was also director of Rutgers' Center for Advanced Information Processing and the Board of Governors Professor of Electrical and Computer Engineering. He is known for co-developing adaptive differential pulse-code modulation (ADPCM) with P. Cummiskey and Nikil Jayant at Bell Labs.

Haskins Laboratories, Inc. is an independent 501(c) non-profit corporation, founded in 1935 and located in New Haven, Connecticut, since 1970. Upon moving to New Haven, Haskins entered into formal affiliation agreements with both Yale University and the University of Connecticut; it remains fully independent, administratively and financially, of both Yale and UConn. Haskins is a multidisciplinary and international community of researchers that conducts basic research on spoken and written language. A guiding perspective of its research is to view speech and language as emerging from biological processes, including those of adaptation, response to stimuli, and conspecific interaction. The Laboratories has a long history of technological and theoretical innovation, from creating systems of rules for speech synthesis and developing an early working prototype of a reading machine for the blind to developing the landmark concept of phonemic awareness as the critical preparation for learning to read an alphabetic writing system.

Articulatory synthesis refers to computational techniques for synthesizing speech based on models of the human vocal tract and the articulation processes occurring there. The shape of the vocal tract can be controlled in a number of ways, which usually involve modifying the position of the speech articulators, such as the tongue, jaw, and lips. Speech is created by digitally simulating the flow of air through the representation of the vocal tract.

Franklin Seaney Cooper was an American physicist and inventor who was a pioneer in speech research.

Lorinda Landgraf Cherry was an American computer scientist and programmer. Much of her career was spent at Bell Labs, where she was for many years a member of the original Unix Lab. Cherry developed several mathematical tools and utilities for text formatting and analysis, and influenced the creation of others.

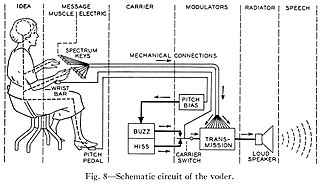

The Bell Telephone Laboratory's Voder was the first attempt to electronically synthesize human speech by breaking it down into its acoustic components. It was invented by Homer Dudley in 1937–1938 and built on his earlier work on the vocoder. The quality of the speech was limited; however, it demonstrated the synthesis of the human voice, which became one component of the vocoder used in voice communications for security and to save bandwidth.

Irving Solomon "Irv" Teibel was an American field recordist, graphic designer, and photographer. His company, Syntonic Research, Inc., is best known for its influential Environments psychoacoustic recording series (1969–1979) and The Altered Nixon Speech (1973). Teibel was also an accomplished photographer who worked as an editor for Ziff Davis and photographed for Popular Photography and Car and Driver.

Stephen E. Levinson is a professor of Electrical and Computer Engineering at the University of Illinois at Urbana-Champaign (UIUC), leader of the Language Acquisition and Robotics Lab at UIUC, and a full-time faculty member of the Beckman Institute for Advanced Science and Technology at UIUC. He works on speech synthesis, acquisition and recognition and the development of anthropomorphic robots.

References

- Lambert, Bruce (21 March 1992). "Louis Gerstman, 61, a Specialist In Speech Disorders and Processes". The New York Times. Retrieved 6 November 2015.

- "'Enjoyale' Backgrounds Expanding Odd Catalog" (PDF). Billboard. 22 March 1975. Retrieved 6 November 2015.

- Schroeder, Manfred R. (17 April 2013). Computer Speech: Recognition, Compression, Synthesis. Springer Science & Business Media. p. XVI. ISBN 9783662063842. Retrieved 6 November 2015.

- "Music From Mathematics". Discogs. Retrieved 6 November 2015.

- "Synthesized Speech". Discogs. Retrieved 6 November 2015.