In computing, floating-point arithmetic (FP) is arithmetic that represents subsets of real numbers using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. Numbers of this form are called floating-point numbers. For example, 12.345 is a floating-point number in base ten with five digits of precision:

Double-precision floating-point format is a floating-point number format, usually occupying 64 bits in computer memory; it represents a wide range of numeric values by using a floating radix point.

In machine learning, support vector machines are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories by Vladimir Vapnik with colleagues SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik and Chervonenkis (1974).

Floating point operations per second is a measure of computer performance in computing, useful in fields of scientific computations that require floating-point calculations.

Archimedes' principle states that the upward buoyant force that is exerted on a body immersed in a fluid, whether fully or partially, is equal to the weight of the fluid that the body displaces. Archimedes' principle is a law of physics fundamental to fluid mechanics. It was formulated by Archimedes of Syracuse.

In information theory, the cross-entropy between two probability distributions and , over the same underlying set of events, measures the average number of bits needed to identify an event drawn from the set when the coding scheme used for the set is optimized for an estimated probability distribution , rather than the true distribution .

Machine epsilon or machine precision is an upper bound on the relative approximation error due to rounding in floating point number systems. This value characterizes computer arithmetic in the field of numerical analysis, and by extension in the subject of computational science. The quantity is also called macheps and it has the symbols Greek epsilon .

In computing, minifloats are floating-point values represented with very few bits. This reduced precision makes them ill-suited for general-purpose numerical calculations, but they are useful for special purposes such as:

In computing, CUDA is a proprietary parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for accelerated general-purpose processing, an approach called general-purpose computing on GPUs (GPGPU). CUDA API and its runtime: The CUDA API is an extension of the C programming language that adds the ability to specify thread-level parallelism in C and also to specify GPU device specific operations. CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels. In addition to drivers and runtime kernels, the CUDA platform includes compilers, libraries and developer tools to help programmers accelerate their applications.

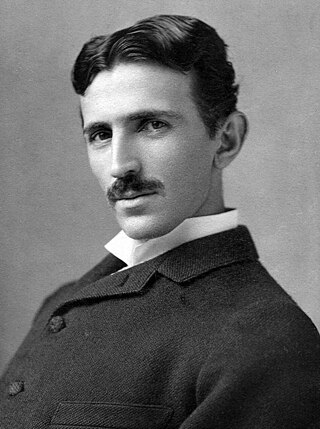

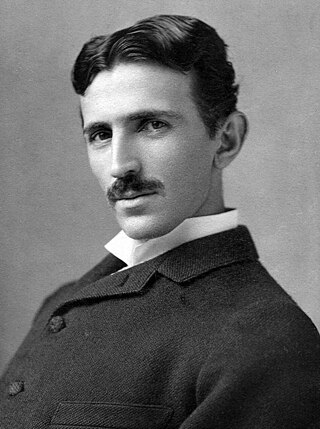

Tesla is the codename for a GPU microarchitecture developed by Nvidia, and released in 2006, as the successor to Curie microarchitecture. It was named after the pioneering electrical engineer Nikola Tesla. As Nvidia's first microarchitecture to implement unified shaders, it was used with GeForce 8 series, GeForce 9 series, GeForce 100 series, GeForce 200 series, and GeForce 300 series of GPUs, collectively manufactured in 90 nm, 80 nm, 65 nm, 55 nm, and 40 nm. It was also in the GeForce 405 and in the Quadro FX, Quadro x000, Quadro NVS series, and Nvidia Tesla computing modules.

In computing, half precision is a binary floating-point computer number format that occupies 16 bits in computer memory. It is intended for storage of floating-point values in applications where higher precision is not essential, in particular image processing and neural networks.

Fermi is the codename for a graphics processing unit (GPU) microarchitecture developed by Nvidia, first released to retail in April 2010, as the successor to the Tesla microarchitecture. It was the primary microarchitecture used in the GeForce 400 series and 500 series. All desktop Fermi GPUs were manufactured in 40nm, mobile Fermi GPUs in 40nm and 28nm. Fermi is the oldest microarchitecture from Nvidia that receives support for Microsoft's rendering API Direct3D 12 feature_level 11.

Pascal is the codename for a GPU microarchitecture developed by Nvidia, as the successor to the Maxwell architecture. The architecture was first introduced in April 2016 with the release of the Tesla P100 (GP100) on April 5, 2016, and is primarily used in the GeForce 10 series, starting with the GeForce GTX 1080 and GTX 1070, which were released on May 27, 2016, and June 10, 2016, respectively. Pascal was manufactured using TSMC's 16 nm FinFET process, and later Samsung's 14 nm FinFET process.

Volta is the codename, but not the trademark, for a GPU microarchitecture developed by Nvidia, succeeding Pascal. It was first announced on a roadmap in March 2013, although the first product was not announced until May 2017. The architecture is named after 18th–19th century Italian chemist and physicist Alessandro Volta. It was Nvidia's first chip to feature Tensor Cores, specially designed cores that have superior deep learning performance over regular CUDA cores. The architecture is produced with TSMC's 12 nm FinFET process. The Ampere microarchitecture is the successor to Volta.

Block floating point (BFP) is a method used to provide an arithmetic approaching floating point while using a fixed-point processor. BFP assigns a group of significands to a single exponent, rather than single significand being assigned its own exponent. BFP can be advantageous to limit space use in hardware to perform the same functions as floating-point algorithms, by reusing the exponent; some operations over multiple values between blocks can also be done with a reduced amount of computation.

The bfloat16 floating-point format is a computer number format occupying 16 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point. This format is a shortened (16-bit) version of the 32-bit IEEE 754 single-precision floating-point format (binary32) with the intent of accelerating machine learning and near-sensor computing. It preserves the approximate dynamic range of 32-bit floating-point numbers by retaining 8 exponent bits, but supports only an 8-bit precision rather than the 24-bit significand of the binary32 format. More so than single-precision 32-bit floating-point numbers, bfloat16 numbers are unsuitable for integer calculations, but this is not their intended use. Bfloat16 is used to reduce the storage requirements and increase the calculation speed of machine learning algorithms.

Ampere is the codename for a graphics processing unit (GPU) microarchitecture developed by Nvidia as the successor to both the Volta and Turing architectures. It was officially announced on May 14, 2020 and is named after French mathematician and physicist André-Marie Ampère.

In the study of artificial neural networks (ANNs), the neural tangent kernel (NTK) is a kernel that describes the evolution of deep artificial neural networks during their training by gradient descent. It allows ANNs to be studied using theoretical tools from kernel methods.

Tesla Dojo is a supercomputer designed and built by Tesla for computer vision video processing and recognition. It is used for training Tesla's machine learning models to improve its Full Self-Driving (FSD) advanced driver-assistance system. According to Tesla, it went into production in July 2023.

Model compression is a machine learning technique for reducing the size of trained models. Large models can achieve high accuracy, but often at the cost of significant resource requirements. Compression techniques aim to compress models without significant performance reduction. Smaller models require less storage space, and consume less memory and compute during inference.