In functional analysis, a branch of mathematics, nest algebras are a class of operator algebras that generalise the upper-triangular matrix algebras to a Hilbert space context. They were introduced by Ringrose (1965) and have many interesting properties: they are non-selfadjoint, closed in the weak operator topology, and reflexive.

Nest algebras are among the simplest examples of commutative subspace lattice algebras. Indeed, they are formally defined as the algebra of bounded operators leaving invariant each subspace contained in a subspace nest, that is, a set of subspaces which is totally ordered by inclusion and is also a complete lattice. Since the orthogonal projections corresponding to the subspaces in a nest commute, nests are commutative subspace lattices.
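In symbols, the definition can be sketched as follows. The notation is an assumption made here for illustration, since the text does not fix one: $H$ is the underlying Hilbert space, $B(H)$ is the algebra of bounded operators on $H$, $N$ is a subspace nest, and $\operatorname{Alg}(N)$ denotes its nest algebra.

$$\operatorname{Alg}(N) = \{\, T \in B(H) : TS \subseteq S \text{ for every } S \in N \,\}$$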

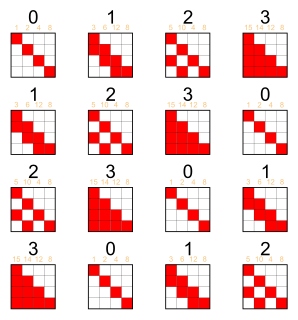

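By way of an example, let us apply this definition to recover the finite-dimensional upper-triangular matrices. Let us work in the $n$-dimensional complex vector space $\mathbb{C}^n$, and let $e_1, e_2, \ldots, e_n$ be the standard basis. For $j = 0, 1, 2, \ldots, n$, let $S_j$ be the $j$-dimensional subspace of $\mathbb{C}^n$ spanned by the first $j$ basis vectors $e_1, \ldots, e_j$. Let

$$N = \{ (0) = S_0, S_1, S_2, \ldots, S_{n-1}, S_n = \mathbb{C}^n \};$$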

then $N$ is a subspace nest, and the corresponding nest algebra, consisting of the $n \times n$ complex matrices $M$ that leave each subspace in $N$ invariant (that is, that satisfy $MS \subseteq S$ for each $S$ in $N$), is precisely the set of upper-triangular matrices.
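The finite-dimensional claim can be checked numerically in a small case. The following is a minimal sketch in plain Python, not taken from the source: the function names `leaves_invariant`, `in_nest_algebra` and `is_upper_triangular` are hypothetical, matrices are represented as lists of rows, and the exhaustive check covers only $3 \times 3$ matrices with entries in $\{0, 1\}$, which illustrates the statement rather than proving it.

```python
from itertools import product


def leaves_invariant(M, j):
    """True if M maps S_j = span(e_1, ..., e_j) into itself.

    M is a square matrix given as a list of rows. M e_k is column k of M,
    and it lies in S_j exactly when its entries in rows j+1, ..., n vanish.
    """
    n = len(M)
    return all(M[row][col] == 0 for col in range(j) for row in range(j, n))


def in_nest_algebra(M, dims):
    """True if M leaves S_j invariant for every dimension j in dims."""
    return all(leaves_invariant(M, j) for j in dims)


def is_upper_triangular(M):
    """True if every entry strictly below the diagonal is zero."""
    return all(M[r][c] == 0 for r in range(len(M)) for c in range(r))


n = 3
full_nest = range(n + 1)  # dimensions 0, 1, ..., n of S_0, ..., S_n

U = [[1, 2, 3], [0, 4, 5], [0, 0, 6]]  # upper triangular
L = [[1, 0, 0], [7, 1, 0], [0, 0, 1]]  # one nonzero entry below the diagonal

print(in_nest_algebra(U, full_nest))
print(in_nest_algebra(L, full_nest))

# Exhaustive comparison over all 3 x 3 matrices with entries in {0, 1}.
agree = all(
    in_nest_algebra(M, full_nest) == is_upper_triangular(M)
    for entries in product([0, 1], repeat=n * n)
    for M in [[list(entries[i * n:(i + 1) * n]) for i in range(n)]]
)
print(agree)
```

The check works column by column because $S_j$ is spanned by basis vectors: $M$ leaves $S_j$ invariant exactly when each of the first $j$ columns of $M$ lies in $S_j$.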

If we omit one or more of the subspaces $S_j$ from $N$, then the corresponding nest algebra consists of block upper-triangular matrices.
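To see the block structure concretely, one can compute which matrix entries are allowed to be nonzero for a given sub-nest. This is a hedged sketch in plain Python: `allowed_pattern` and `show` are hypothetical helper names, and a sub-nest is described by the list `dims` of the dimensions $j$ of the subspaces $S_j$ that are kept.

```python
def allowed_pattern(n, dims):
    """Return an n x n grid of booleans; True marks an entry that may be nonzero.

    With 0-based row r and column c, invariance of S_j forces entry (r, c)
    to vanish whenever c < j <= r, so an entry is free only if no j in dims
    separates its column index from its row index in this way.
    """
    return [
        [not any(c < j <= r for j in dims) for c in range(n)]
        for r in range(n)
    ]


def show(pattern):
    """Render the pattern with '*' for free entries and '0' for forced zeros."""
    return "\n".join(
        " ".join("*" if free else "0" for free in row) for row in pattern
    )


print(show(allowed_pattern(4, [0, 1, 2, 3, 4])))  # full nest: upper triangular
print()
print(show(allowed_pattern(4, [0, 2, 4])))        # S_1 and S_3 omitted
```

With $S_1$ and $S_3$ omitted in dimension 4, only the lower-left $2 \times 2$ block is forced to zero, so the algebra consists of block upper-triangular matrices with two $2 \times 2$ diagonal blocks.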