A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there are supercomputers which can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS).
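The unit arithmetic above (10^17 FLOPS equals 100 petaFLOPS) can be checked with a minimal sketch. The helper name `format_flops` and the prefix table are illustrative assumptions, not part of any standard library or benchmark tool.

```python
# Illustrative helper (hypothetical name): express a raw FLOPS figure
# using the largest SI prefix that does not exceed it.
PREFIXES = [
    ("exa", 10**18),
    ("peta", 10**15),
    ("tera", 10**12),
    ("giga", 10**9),
    ("mega", 10**6),
    ("kilo", 10**3),
]


def format_flops(flops):
    """Return a string such as '100 petaFLOPS' for a raw FLOPS value."""
    for name, factor in PREFIXES:
        if flops >= factor:
            return f"{flops / factor:g} {name}FLOPS"
    return f"{flops:g} FLOPS"


print(format_flops(10**17))  # 100 petaFLOPS
```

Since one petaFLOPS is 10^15 FLOPS, dividing 10^17 by 10^15 gives the factor of 100 quoted in the text.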

A Beowulf cluster is a computer cluster of what are normally identical, commodity-grade computers networked into a small local area network with libraries and programs installed which allow processing to be shared among them. The result is a high-performance parallel computing cluster from inexpensive personal computer hardware.

Quadrics was a supercomputer company formed in 1996 as a joint venture between Alenia Spazio and the technical team from Meiko Scientific. It produced hardware and software for clustering commodity computer systems into massively parallel systems. Its high point came in June 2003, when six of the ten fastest supercomputers in the world were based on Quadrics' interconnect. The company officially closed on June 29, 2009.

NetApp, Inc. is an American hybrid cloud data services and data management company headquartered in Sunnyvale, California. It has ranked in the Fortune 500 since 2012. Founded in 1992 with an IPO in 1995, NetApp offers cloud data services for management of applications and data both online and physically.

Lustre is a type of parallel distributed file system, generally used for large-scale cluster computing. The name Lustre is a portmanteau word derived from Linux and cluster. Lustre file system software is available under the GNU General Public License and provides high performance file systems for computer clusters ranging in size from small workgroup clusters to large-scale, multi-site systems. Since June 2005, Lustre has consistently been used by at least half of the top ten, and more than 60 of the top 100 fastest supercomputers in the world, including the world's No. 1 ranked TOP500 supercomputer in June 2020, Fugaku, as well as previous top supercomputers such as Titan and Sequoia.

Inspur, whose full name is Inspur Group, is an information technology conglomerate in mainland China focusing on cloud computing, big data, key application hosts, servers, storage, artificial intelligence and ERP. On April 18, 2006, Inspur changed its English name from Langchao to Inspur. It is listed on the SSE, SZSE, and SEHK. It owns Inspur Information, Inspur Software, Inspur International, Huaguang Optoelectronics, and VIT, C.A.

SUSE is a German-based multinational open-source software company that develops and sells Linux products to business customers. Founded in 1992, it was the first company to market Linux for enterprise. It is the developer of SUSE Linux Enterprise and the primary sponsor of the community-supported openSUSE Project, which develops the openSUSE Linux distribution. While the openSUSE "Tumbleweed" variation is an upstream distribution for both the "Leap" variation and SUSE Linux Enterprise distribution, its branded "Leap" variation is part of a direct upgrade path to the enterprise version, which effectively makes openSUSE Leap a non-commercial version of its enterprise product.

The Texas Advanced Computing Center (TACC) at the University of Texas at Austin, United States, is an advanced computing research center that provides comprehensive advanced computing resources and support services to researchers in Texas and across the USA. The mission of TACC is to enable discoveries that advance science and society through the application of advanced computing technologies. Specializing in high performance computing, scientific visualization, data analysis & storage systems, software, research & development and portal interfaces, TACC deploys and operates advanced computational infrastructure to enable computational research activities of faculty, staff, and students of UT Austin. TACC also provides consulting, technical documentation, and training to support researchers who use these resources. TACC staff members conduct research and development in applications and algorithms, computing systems design/architecture, and programming tools and environments.

The National Energy Research Scientific Computing Center (NERSC) is a high performance computing (supercomputer) user facility operated by Lawrence Berkeley National Laboratory for the United States Department of Energy Office of Science. As the mission computing center for the Office of Science, NERSC houses high performance computing and data systems used by 7,000 scientists at national laboratories and universities around the country. NERSC's newest and largest supercomputer is Cori, which was ranked 5th on the TOP500 list of world's fastest supercomputers in November 2016. NERSC is located on the main Berkeley Lab campus in Berkeley, California.

The IBM Intelligent Cluster was a cluster solution for x86-based high-performance computing composed primarily of IBM components, integrated with network switches from various vendors and optional high-performance InfiniBand interconnects.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software.

Windows HPC Server 2008, released by Microsoft on 22 September 2008, is the successor product to Windows Compute Cluster Server 2003. Like WCCS, Windows HPC Server 2008 is designed for high-end applications that require high performance computing clusters. This version of the server software is claimed to efficiently scale to thousands of cores. It includes features unique to HPC workloads: a new high-speed NetworkDirect RDMA, highly efficient and scalable cluster management tools, a service-oriented architecture (SOA) job scheduler, an MPI library based on open-source MPICH2, and cluster interoperability through standards such as the High Performance Computing Basic Profile (HPCBP) specification produced by the Open Grid Forum (OGF).

AMAX Engineering Corporation is a privately held technology company based in Fremont, California. Founded in 1979, AMAX specializes in application-tailored cloud computing, data center, deep learning, open-architecture platforms, HPC and OEM solutions.

BeeGFS is a parallel file system, developed and optimized for high-performance computing. BeeGFS includes a distributed metadata architecture for scalability and flexibility reasons. Its most important aspect is data throughput.

Exalogic is a computer appliance made by Oracle Corporation, commercially available since 2010. It is a cluster of x86-64 servers with Oracle Linux or Solaris preinstalled.

Appro was a developer of supercomputing systems for High Performance Computing (HPC) markets, focused on medium- to large-scale deployments. Appro was based in Milpitas, California, with a computing center in Houston, Texas, and a manufacturing and support subsidiary in South Korea and Japan.

PSSC Labs is a California-based company that provides supercomputing solutions in the United States and internationally. Its products include "high-performance" servers, clusters, workstations, and RAID storage systems for scientific research, government and military, entertainment content creators, developers, and private clouds. The company has implemented clustering software from NASA Goddard's Beowulf project in its supercomputers designed for bioinformatics, medical imaging, computational chemistry and other scientific applications.

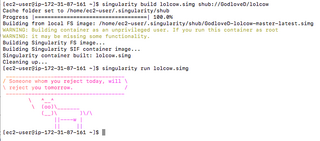

Singularity is a free, cross-platform and open-source computer program that performs operating-system-level virtualization, also known as containerization.

Inspur Server Series is a series of server computers introduced in 1993 by Inspur, an information technology company, and later expanded to international markets. The servers were likely among the first originally manufactured by a Chinese company. The series is currently developed by Inspur Information and its San Francisco-based subsidiary, Inspur Systems, both spin-off companies of Inspur. The product line includes GPU servers, rack-mounted servers, Open Computing servers and multi-node servers.