In software quality assurance, performance testing is in general a testing practice performed to determine how a system performs in terms of responsiveness and stability under a particular workload. It can also serve to investigate, measure, validate or verify other quality attributes of the system, such as scalability, reliability and resource usage.

In computing, an optimizing compiler is a compiler that tries to minimize or maximize some attributes of an executable computer program. Common requirements are to minimize a program's execution time, memory footprint, storage size, and power consumption.

Symmetric multiprocessing or shared-memory multiprocessing (SMP) involves a multiprocessor computer hardware and software architecture where two or more identical processors are connected to a single, shared main memory, have full access to all input and output devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes. Most multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors.

In computing, load balancing is the process of distributing a set of tasks over a set of resources, with the aim of making their overall processing more efficient. Load balancing can optimize the response time and avoid unevenly overloading some compute nodes while other compute nodes are left idle.

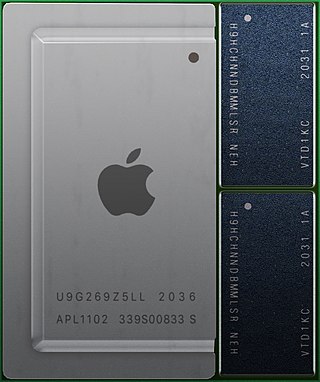

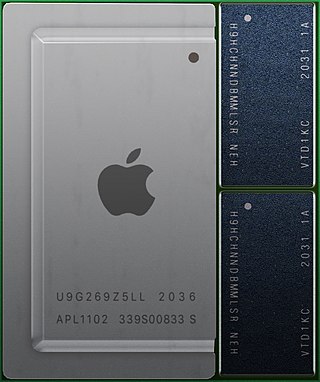

A system on a chip or system-on-chip is an integrated circuit that integrates most or all components of a computer or other electronic system. These components almost always include on-chip central processing unit (CPU), memory interfaces, input/output devices and interfaces, and secondary storage interfaces, often alongside other components such as radio modems and a graphics processing unit (GPU) – all on a single substrate or microchip. SoCs may contain digital and also analog, mixed-signal and often radio frequency signal processing functions.

In computer science, algorithmic efficiency is a property of an algorithm which relates to the amount of computational resources used by the algorithm. An algorithm must be analyzed to determine its resource usage, and the efficiency of an algorithm can be measured based on the usage of different resources. Algorithmic efficiency can be thought of as analogous to engineering productivity for a repeating or continuous process.

Scalability is the property of a system to handle a growing amount of work. One definition for software systems specifies that this may be done by adding resources to the system.

In computing, inline expansion, or inlining, is a manual or compiler optimization that replaces a function call site with the body of the called function. Inline expansion is similar to macro expansion, but occurs during compilation, without changing the source code, while macro expansion occurs prior to compilation, and results in different text that is then processed by the compiler.

In computer science, self-modifying code is code that alters its own instructions while it is executing – usually to reduce the instruction path length and improve performance or simply to reduce otherwise repetitively similar code, thus simplifying maintenance. The term is usually only applied to code where the self-modification is intentional, not in situations where code accidentally modifies itself due to an error such as a buffer overflow.

In computer science, program optimization, code optimization, or software optimization is the process of modifying a software system to make some aspect of it work more efficiently or use fewer resources. In general, a computer program may be optimized so that it executes more rapidly, or to make it capable of operating with less memory storage or other resources, or draw less power.

A content delivery network, or content distribution network (CDN), is a geographically distributed network of proxy servers and their data centers. The goal is to provide high availability and performance by distributing the service spatially relative to end users. CDNs came into existence in the late 1990s as a means for alleviating the performance bottlenecks of the Internet as the Internet was starting to become a mission-critical medium for people and enterprises. Since then, CDNs have grown to serve a large portion of the Internet content today, including web objects, downloadable objects, applications, live streaming media, on-demand streaming media, and social media sites.

In software engineering, profiling is a form of dynamic program analysis that measures, for example, the space (memory) or time complexity of a program, the usage of particular instructions, or the frequency and duration of function calls. Most commonly, profiling information serves to aid program optimization, and more specifically, performance engineering.

Capacity management's goal is to ensure that information technology resources are sufficient to meet upcoming business requirements cost-effectively. One common interpretation of capacity management is described in the ITIL framework. ITIL version 3 views capacity management as comprising three sub-processes: business capacity management, service capacity management, and component capacity management.

Performance engineering encompasses the techniques applied during a systems development life cycle to ensure the non-functional requirements for performance will be met. It may be alternatively referred to as systems performance engineering within systems engineering, and software performance engineering or application performance engineering within software engineering.

In computing, computer performance is the amount of useful work accomplished by a computer system. Outside of specific contexts, computer performance is estimated in terms of accuracy, efficiency and speed of executing computer program instructions. When it comes to high computer performance, one or more of the following factors might be involved:

Performance Monitor is a system monitoring program introduced in Windows NT 3.1. It monitors various activities on a computer such as CPU or memory usage. This type of application may be used to determine the cause of problems on a local or remote computer by measuring the performance of hardware, software services, and applications. The program can define thresholds for alerts and automatic actions, generate reports, and view past performance data.

Resin is a web server and Java application server developed by Caucho Technology. There are two versions available: Resin (GPL), which is free for production use, and Resin Pro, designed for enterprise and production environments with a licensing fee. Resin supports the Java EE standard and features a mod_php/PHP-like engine known as Quercus.

In computer science, empirical algorithmics is the practice of using empirical methods to study the behavior of algorithms. The practice combines algorithm development and experimentation: algorithms are not just designed, but also implemented and tested in a variety of situations. In this process, an initial design of an algorithm is analyzed so that the algorithm may be developed in a stepwise manner.

Web performance refers to the speed in which web pages are downloaded and displayed on the user's web browser. Web performance optimization (WPO), or website optimization is the field of knowledge about increasing web performance.

The Roofline model is an intuitive visual performance model used to provide performance estimates of a given compute kernel or application running on multi-core, many-core, or accelerator processor architectures, by showing inherent hardware limitations, and potential benefit and priority of optimizations. By combining locality, bandwidth, and different parallelization paradigms into a single performance figure, the model can be an effective alternative to assess the quality of attained performance instead of using simple percent-of-peak estimates, as it provides insights on both the implementation and inherent performance limitations.