pexec is a command-line utility for Linux and other Unix-like operating systems which allows the user to execute shell commands in parallel. The specified code can be executed either locally or on remote hosts, in which case ssh is used to build a secure tunnel between them. Similar to shell loops, a variable is changed as the loop starting the tasks iterates, so that many values can get passed to the specified command or script. pexec is a free software utility, and part of the GNU Project. [1] It is available [2] under the terms of GPLv3, and is part of the current Debian stable release. [3]

The most common usage is to replace the shell loop, for example:

forxinalphabravocharliedelta;dodo_something$xdoneto the form of:

pexec-ralphabravocharliedelta-ex-o--c\'do_something $x'where the set with the 4 elements of "alpha" "bravo" "charlie" and "delta" define the possible values for the (environmental) variable $x. The program pexec features also

Such optional features can be requested using command-line arguments. By default, pexec tries to detect the number of CPUs and uses all of them.

AWK is a domain-specific language designed for text processing and typically used as a data extraction and reporting tool. Like sed and grep, it is a filter, and is a standard feature of most Unix-like operating systems.

Bash is a Unix shell and command language written by Brian Fox for the GNU Project as a free software replacement for the Bourne shell. First released in 1989, it has been used as the default login shell for most Linux distributions. Bash was one of the first programs Linus Torvalds ported to Linux, alongside GCC. A version is also available for Windows 10 and Windows 11 via the Windows Subsystem for Linux. It is also the default user shell in Solaris 11. Bash was also the default shell in versions of Apple macOS from 10.3 to 10.15, which changed the default shell to zsh, although Bash remains available as an alternative shell.

sed is a Unix utility that parses and transforms text, using a simple, compact programming language. It was developed from 1973 to 1974 by Lee E. McMahon of Bell Labs, and is available today for most operating systems. sed was based on the scripting features of the interactive editor ed and the earlier qed. It was one of the earliest tools to support regular expressions, and remains in use for text processing, most notably with the substitution command. Popular alternative tools for plaintext string manipulation and "stream editing" include AWK and Perl.

The C shell is a Unix shell created by Bill Joy while he was a graduate student at University of California, Berkeley in the late 1970s. It has been widely distributed, beginning with the 2BSD release of the Berkeley Software Distribution (BSD) which Joy first distributed in 1978. Other early contributors to the ideas or the code were Michael Ubell, Eric Allman, Mike O'Brien and Jim Kulp.

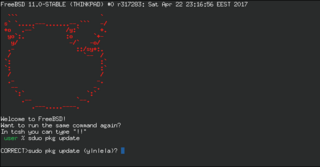

tcsh is a Unix shell based on and backward compatible with the C shell (csh).

In software development, Make is a build automation tool that builds executable programs and libraries from source code by reading files called makefiles which specify how to derive the target program. Though integrated development environments and language-specific compiler features can also be used to manage a build process, Make remains widely used, especially in Unix and Unix-like operating systems.

In computer programming, standard streams are interconnected input and output communication channels between a computer program and its environment when it begins execution. The three input/output (I/O) connections are called standard input (stdin), standard output (stdout) and standard error (stderr). Originally I/O happened via a physically connected system console, but standard streams abstract this. When a command is executed via an interactive shell, the streams are typically connected to the text terminal on which the shell is running, but can be changed with redirection or a pipeline. More generally, a child process inherits the standard streams of its parent process.

lsh is a free software implementation of the Secure Shell (SSH) protocol version 2, by the GNU Project including both server and client programs. Featuring Secure Remote Password protocol (SRP) as specified in secsh-srp besides, public-key authentication. Kerberos is somewhat supported as well. Currently however for password verification only, not as a single sign-on (SSO) method.

xargs is a command on Unix and most Unix-like operating systems used to build and execute commands from standard input. It converts input from standard input into arguments to a command.

dd is a command-line utility for Unix, Plan 9, Inferno, and Unix-like operating systems and beyond, the primary purpose of which is to convert and copy files. On Unix, device drivers for hardware and special device files appear in the file system just like normal files; dd can also read and/or write from/to these files, provided that function is implemented in their respective driver. As a result, dd can be used for tasks such as backing up the boot sector of a hard drive, and obtaining a fixed amount of random data. The dd program can also perform conversions on the data as it is copied, including byte order swapping and conversion to and from the ASCII and EBCDIC text encodings.

bc, for basic calculator, is "an arbitrary-precision calculator language" with syntax similar to the C programming language. bc is typically used as either a mathematical scripting language or as an interactive mathematical shell.

In computing, cut is a command line utility on Unix and Unix-like operating systems which is used to extract sections from each line of input — usually from a file. It is currently part of the GNU coreutils package and the BSD Base System.

In Unix-like computer operating systems, a pipeline is a mechanism for inter-process communication using message passing. A pipeline is a set of processes chained together by their standard streams, so that the output text of each process (stdout) is passed directly as input (stdin) to the next one. The second process is started as the first process is still executing, and they are executed concurrently. The concept of pipelines was championed by Douglas McIlroy at Unix's ancestral home of Bell Labs, during the development of Unix, shaping its toolbox philosophy. It is named by analogy to a physical pipeline. A key feature of these pipelines is their "hiding of internals". This in turn allows for more clarity and simplicity in the system.

A command shell is a command-line interface to interact with and manipulate a computer's operating system.

A read–eval–print loop (REPL), also termed an interactive toplevel or language shell, is a simple interactive computer programming environment that takes single user inputs, executes them, and returns the result to the user; a program written in a REPL environment is executed piecewise. The term usually refers to programming interfaces similar to the classic Lisp machine interactive environment. Common examples include command-line shells and similar environments for programming languages, and the technique is very characteristic of scripting languages.

In computing, tee is a command in command-line interpreters (shells) using standard streams which reads standard input and writes it to both standard output and one or more files, effectively duplicating its input. It is primarily used in conjunction with pipes and filters. The command is named after the T-splitter used in plumbing.

test is a command-line utility found in Unix, Plan 9, and Unix-like operating systems that evaluates conditional expressions. test was turned into a shell builtin command in 1981 with UNIX System III and at the same time made available under the alternate name [.

In computing, sort is a standard command line program of Unix and Unix-like operating systems, that prints the lines of its input or concatenation of all files listed in its argument list in sorted order. Sorting is done based on one or more sort keys extracted from each line of input. By default, the entire input is taken as sort key. Blank space is the default field separator. The command supports a number of command-line options that can vary by implementation. For instance the "-r" flag will reverse the sort order.

Files transferred over Shell protocol (FISH) is a network protocol that uses Secure Shell (SSH) or Remote Shell (RSH) to transfer files between computers and manage remote files.

GNU parallel is a command-line utility for Linux and other Unix-like operating systems which allows the user to execute shell scripts or commands in parallel. GNU parallel is free software, written by Ole Tange in Perl. It is available under the terms of GPLv3.